This repo contains experiments related to the representation of combinatorial optimization problems as (heterogeneous) graphs. We include all the code related to the implementations of 4 problem-specific representations, as well as our generic model and our XCSP3 parser.

The problem-specific models are the following:

- NeuroSAT : a GNN trained to predict whether a given SAT problem is solvable or not- and an attempt to model SAT problems with a generic graph representation.

- TSP-GNN : a GNN trained to predict the decision variant of a TSP.

- GC-GNN : a GNN trained to predict the decision variant of a Graph coloring problem.

- KS-GNN : a GNN trained to predict the decision variant of a Knapsack problem.

The generic graph representation is intended to be used to model any combinatorial optimization as a graph.

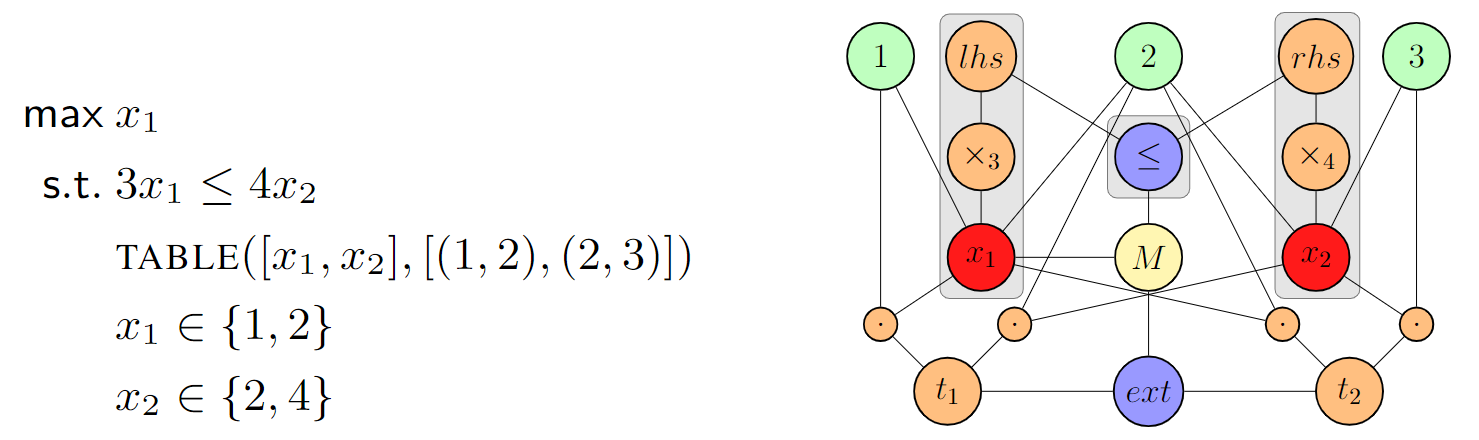

Formally, a combinatorial problem

Our goal is to build a function

A value-vertex is introduced for each constant appearing in

A variable-vertex is introduced for each variable appearing in

A constraint-vertex is introduced for each constraint appearing in

Tightly related to constraints, operator-vertices are used to unveil the combinatorial structure of constraints. Operators represent modifications or restrictions on variables involved in constraints (e.g., arithmetic operators, logical operators, views, etc.). An operator-vertex is added each time a variable

There is only one model-vertex per graph. This vertex is connected to all constraint-vertices and all variable-vertices involved in the objective function. Its semantics is to gather additional information about the combinatorial problem (e.g., the direction of the optimization) by means of its feature vector (

There are

The repo is structured as follows:

src

├── __init__.py

├── generic_xcsp

│ ├── __init__.py

│ ├── alldifferent.py

│ ├── constraints

│ ├── constraints.py

│ ├── dataset.py

│ ├── element.py

│ ├── generic_model.py

│ ├── graph_builder.py

│ ├── instance.py

│ ├── intension_utils.py

│ ├── sum_constraint.py

│ ├── training_config.py

│ └── variable_parsing.py

├── models

│ ├── __init__.py

│ ├── common

│ │ ├── __init__.py

│ │ ├── config.py

│ │ ├── lstm_conv.py

│ │ ├── pytorch_lr_scheduler.py

│ │ ├── pytorch_models.py

│ │ ├── pytorch_utilities.py

│ │ └── training_utils.py

│ ├── decision_tsp

│ │ ├── __init__.py

│ │ ├── base_model.py

│ │ ├── config.py

│ │ ├── dataset.py

│ │ ├── generic_model.py

│ │ ├── instance_generator.py

│ │ ├── instance_parser.py

│ │ ├── plotting_utils.py

│ │ ├── setup.md

│ │ ├── xml_element_generator.py

│ │ └── xml_extension_generator.py

│ ├── graph_coloring

│ │ ├── __init__.py

│ │ ├── base_model.py

│ │ ├── config.py

│ │ ├── dataset.py

│ │ ├── gc_parser.py

│ │ ├── graph_coloring_instances

│ │ ├── instance_generator.py

│ │ ├── rename_graphs.py

│ │ └── xml_generator.py

│ ├── knapsack

│ │ ├── instance_generator.py

│ │ └── knapsack_model.py

│ └── sat

│ ├── __init__.py

│ ├── config.py

│ ├── dataset.py

│ ├── neurosat_model.py

│ ├── plotting_utils.py

│ ├── sat_parser.py

│ ├── scripts

│ │ ├── PyMiniSolvers

│ │ ├── gen_sr_dimacs.py

│ │ ├── generate_data.sh

│ │ └── setup.sh

├── build_marty_et_al_datasets.py

├── requirements.txt

├── test_generic_model.py

├── test_graph_coloring.py

├── test_knapsack_specific_model.py

├── test_sat.py

├── test_tsp.py

├── train_generic_model.py

├── train_graph_coloring.py

├── train_knapsack_specific_model.py

├── train_sat.py

├── train_tsp.py

└── utils

└── lzma_utils.py

The src/models subdirectory contains problem-specific models, instance generators and related utilities, while the generic stuff in in the src/generic_xcsp subdirectory. The src/utils subdirectory contains utilities for compressing and decompressing lzma files.

As usual, start by creating a virtual environment and installing the requirements. We will describe the setup to generate data for all different problems. Please note that, for the problem-specific models, as well as the generic models, you will need 2 directories to store your data: raw and processed. The raw directory will contain the raw data, while the processed directory will contain the data after it has been processed and converted to PyTorch-Geometric objects.

The SAT model and instance generator are based on the NeuroSAT. To complete the setup, cd into src/models/sat and run setup.sh. To generate instances, cd into src/models/sat and run ./generate_data.sh.

The TSP instance generator setup is a little tedious. It uses pyconcorde - a pytorch wrapper for the concorde TSP solver. The setup is described in the following section.

Concorde requires a linear programming solver. In this setup, we use QSopt. Simply download it (all three files) from (this link)[https://www.math.uwaterloo.ca/~bico/qsopt/downloads/downloads.htm]. I am using ubuntu, so I download all 3 files located at the bottom of the page and I place them in a directory named qsopt_solver.

sudo apt update

sudo apt install build-essential libgmp-dev libgsl-dev

wget http://www.math.uwaterloo.ca/tsp/concorde/downloads/codes/src/co031219.tgz

tar -xvf co031219.tgz

cd concorde

./configure --prefix=/usr/local --with-qsopt=[full_path_to_qsopt_solver_directory]

make

export PATH="/usr/local/concorde/TSP:$PATH

Adjust the last line according to where you downloaded the concorde solver. Following this step by step will allow you to use concorde. This can be tested by running the following command:

concorde -s 99 -k 100

Running this command should yield the following:

concorde -s 99 -k 100

Host: [host_name] Current process id: xxxx

Using random seed 99

Random 100 point set

XSet initial upperbound to 780 (from tour)

LP Value 1: 738.500000 (0.00 seconds)

LP Value 2: 765.000000 (0.02 seconds)

LP Value 3: 774.660000 (0.05 seconds)

LP Value 4: 778.000000 (0.09 seconds)

LP Value 5: 778.465517 (0.13 seconds)

LP Value 6: 778.705882 (0.16 seconds)

LP Value 7: 779.538462 (0.20 seconds)

LP Value 8: 779.937500 (0.24 seconds)

LP Value 9: 780.000000 (0.26 seconds)

New lower bound: 780.000000

Final lower bound 780.000000, upper bound 780.000000

Exact lower bound: 780.000000

DIFF: 0.000000

Final LP has 180 rows, 336 columns, 2921 nonzeros

Optimal Solution: 780.00

Number of bbnodes: 1

Total Running Time: 0.45 (seconds)

pyconcorde is a python wrapper for the concorde TSP solver. It can be installed by running the following commands:

git clone https://github.com/jvkersch/pyconcorde

cd pyconcorde

pip install -e .

Once the setup is complete, cd into src/models/decision_tsp and run python3 instance_generator.py.

The data generation for the graph coloring problem is straightforward. Simply cd into src/models/graph_coloring and run python3 instance_generator.py.

The data generation for the knapsack problem is straightforward. Simply cd into src/models/knapsack and run python3 instance_generator.py.

The generic raw data is created from the original raw data. For each problem, a data generator is provided. It is slightly different for all problems.

Both generic and specific SAT instances are created directly from DIMACS files. The generic data is created by setting generic_representation to True in the training script.

To generate TSP-Element instances, cd into src/models/decision_tsp and run python3 xml_element_generator.py. This will generate the raw data.

To generate TSP-Element instances, cd into src/models/decision_tsp and run python3 xml_extension_generator.py. This will generate the raw data.

To generate graph coloring instances, cd into src/models/graph_coloring and run python3 xml_generator.py. This will generate the raw data.

Knapsack are generated directly as XML files.

Training scripts are provided for all models. They are located in the src directory. The training scripts are named as follows:

train_sat.pyTrains either a SAT-specific or a generic modeltrain_tsp.pyTrains a TSP-specific modeltrain_graph_coloring.pyTrains a graph coloring-specific modeltrain_knapsack_specific_model.pyTrains a knapsack-specific modeltrain_generic_model.pyTrains a generic model