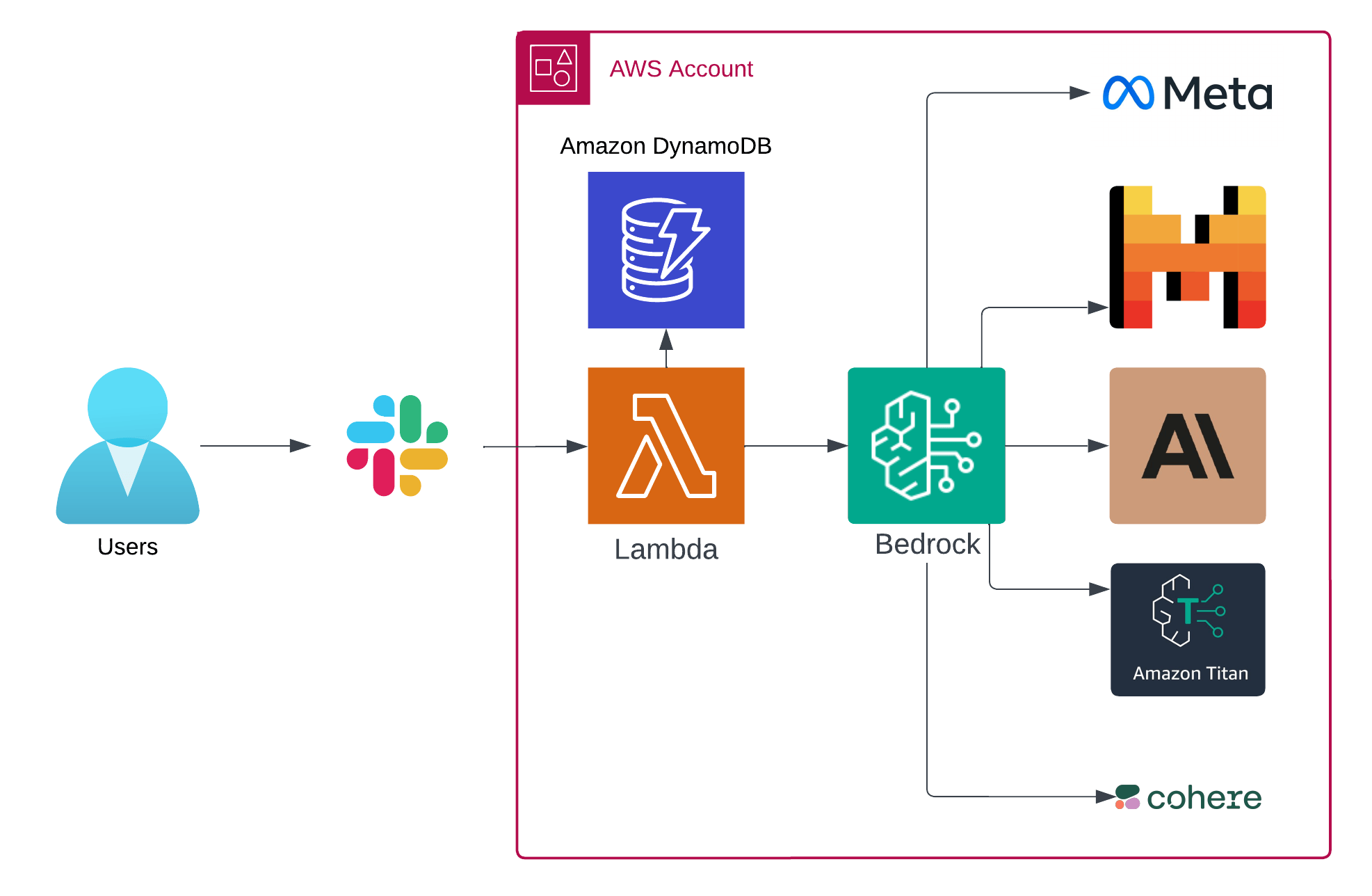

Converse with your favorite LLMs without ever leaving Slack! Slackrock is a conversational AI assistant powered by Amazon Bedrock & your favorite cutting-edge frontier models. The project is focused on cost efficiency & simplicity, while supporting a wide variety of AI models with differing strengths & weaknesses to fit the widest array of use cases.

slackrock_demo.mp4

💬 Using Amazon Bedrock Converse API enables multi-turn conversations, allowing models to retain context of the discussion over a long period of time. You can come back to a thread days later and pick up where you left off. Currently supports interaction via @app mentions in public & private channels or sending direct messages to the bot.

🎛️ Customization: Quickly evaluate and experiment with supported models including Amazon Titan, Anthropic Claude, Mistral AI, Cohere Command, and Meta Llama. Using Slack Bolt for Python makes it easy to extend the Lambda code to create your own event handlers or Slack Slash Commands.

- Users can easily switch their preferred Bedrock model directly from the Slack app's home tab.

🧮 Cost Efficiency: Only pay for what you use with Amazon Bedrock's pay-as-you-go pricing.

- Costs automatically decrease during periods of low usage, e.g. holidays and weekends.

- Serverless compute requires minimal operational overhead and uses efficient Graviton2 processors.

- Works with a free Slack workspace; Pro/Enterprise plans are not required.

😊 Simplicity: Get your own bot up and running quickly with just a few sam commands.

- AWS account with an IAM User or Role with necessary permissions to create and manage all resources required for the solution. See: How do I create and activate a new AWS account?

- A Slack workspace where you have permission to create and install apps. A pro Slack plan is not required.

- aws-sam-cli

- aws-cli

- Python 3.12

Let's get started!

- Request access to desired Amazon Bedrock models. The default model used in this solution is `anthropic.claude-3-5-sonnet-20240620-v1:0`. Feel free to request as many or as few as you like; the process is automated, and models become available immediately.

- Create a Slack app (https://api.slack.com/apps) from manifest using the `slack-app-manifest.yaml` file provided in this project.

  create_slack_app.mp4

- Once the app is created, install the app in your Slack workspace.

- Gather Slack secrets

  - Navigate to 'Basic Information' and note the `Signing Secret`

  - Navigate to 'OAuth & Permissions' and note the `Bot User OAuth Token`

- Store the secrets in AWS Secrets Manager using the `awscli`:

      aws secretsmanager create-secret \
        --name "slackrock" \
        --secret-string '{
          "SLACK_BOT_TOKEN": "xoxb-XXXXXXX-XXXXXXXXXXXXX-XXXXXXXXXXXXXXXXX",
          "SLACK_SIGNING_SECRET": "XXXXXXXXXXXXXXXXXXXXXXX"
        }'

- Next, build & deploy the app to your AWS account. Ensure AWS access credentials are loaded into your current environment. (Pro-tip: https://www.granted.dev/)

  - Run `sam build`

    Note: any changes you make to `template.yaml` or the Lambda code will require re-running `sam build`.

  - Run `sam deploy --guided`

    ❗ Answer `y` to this prompt, see FAQ for further details: `Slackrock Function Url has no authentication. Is this okay? [y/N]`

- After the deployment is completed, note the CloudFormation stack output `SlackrockUrl` and head back to your Slack app developer page.

- Navigate to `Event Subscriptions` and Enable Events

- Enter your `SlackrockUrl` from the previous step into the Request URL field and confirm the endpoint is verified.

- Add the following bot event subscriptions and click the green save changes button at the bottom.

  - `app_home_opened` - User clicked into your App Home

  - `message.channels` - A message was posted to a channel

  - `message.groups` - A message was posted to a private channel

  - `message.im` - A message was posted in a direct message channel
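The secret created in the steps above is a JSON string with two keys, so reading it back at runtime could look roughly like the sketch below. This is an illustration and not necessarily how the Slackrock Lambda code does it: the helper name `load_slack_secrets` is hypothetical, and the client is passed in so the function can be exercised without AWS credentials.

```python
import json


def load_slack_secrets(secrets_client, secret_name="slackrock"):
    """Fetch Slack credentials stored as a JSON string in Secrets Manager.

    `secrets_client` is expected to behave like boto3.client("secretsmanager").
    The helper name and return shape are assumptions for illustration.
    """
    response = secrets_client.get_secret_value(SecretId=secret_name)
    secrets = json.loads(response["SecretString"])

    # Fail early with a clear message if either expected key is missing.
    required = ("SLACK_BOT_TOKEN", "SLACK_SIGNING_SECRET")
    missing = [key for key in required if key not in secrets]
    if missing:
        raise KeyError(f"Secret '{secret_name}' is missing keys: {', '.join(missing)}")

    return secrets["SLACK_BOT_TOKEN"], secrets["SLACK_SIGNING_SECRET"]


# Usage (requires boto3 and AWS credentials):
# import boto3
# token, signing_secret = load_slack_secrets(boto3.client("secretsmanager"))
```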

It's time to test your bot! Review CloudWatch Logs if you get any errors or the bot is unresponsive.

- Invite the app to a channel

- Mention `@Slackrock` in a message, maybe ask about the history of Bundnerkase cheese 🧀, or anything else you'd like to know!

- Navigate to the app's home tab and try out different models.

See open issues, any help is much appreciated!

To iterate on the code locally, use `sam sync`. The command below starts a process that watches your local application for changes and automatically syncs them to the AWS Cloud.

    sam sync --watch --stack-name Slackrock

Users can now select their preferred model directly from the Slack app's home tab. To set a system-wide default, update the `BEDROCK_MODEL_ID` environment variable in `template.yaml` to one of the supported models and run `sam build && sam deploy`.

Alternatively, you can also update the Lambda function environment variables directly via the AWS console or CLI.
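As a rough sketch of how these settings might combine at runtime, the function below prefers a per-user choice, then the `BEDROCK_MODEL_ID` environment variable, then the default model named in this README. The function name `resolve_model_id` and the `user_preference` argument are hypothetical; how Slackrock actually stores the home tab selection is not shown here.

```python
import os

# Default model ID taken from this README.
DEFAULT_MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def resolve_model_id(user_preference=None, environ=None):
    """Pick a model ID: user preference, then BEDROCK_MODEL_ID, then the default.

    `user_preference` stands in for whatever the home tab selection yields
    (an assumption); `environ` defaults to the process environment.
    """
    environ = os.environ if environ is None else environ
    if user_preference:
        return user_preference
    # An unset or empty variable falls through to the default.
    return environ.get("BEDROCK_MODEL_ID") or DEFAULT_MODEL_ID
```

Passing `environ` explicitly keeps the lookup easy to test without touching the real Lambda configuration.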

The complete list of currently available foundation model IDs is available here. However, keep in mind that not all available Bedrock models are supported by the Converse API.

The Bedrock Converse API supports Amazon Bedrock models and model features listed here.

Currently this model is only available in US West (Oregon, us-west-2). Change the region parameter in samconfig.toml and try it out!

Absolutely! This is a very useful and often fun feature to experiment with. Modify the system prompt in the Converse API call to influence how Bedrock models respond. You can fine-tune your system prompt to give your bot a more unique personality, adhere to specific constraints, meet business requirements, or add additional context for specific use cases. See: Getting started with the Amazon Bedrock Converse API.

The default Slackrock system prompt is simple: it asks for response messages formatted with Slack-flavored Markdown, although the results are not perfect. Anthropic has great documentation on system prompts.

This example system prompt will keep your bot in character as a pirate 🏴‍☠️

    response = client.converse(
        modelId=modelId,
        messages=messages,
        system=[
            {"text": "Respond in character as a pirate."}
        ],
    )

Example response:

Another approach is to set the stage and ask the model to respond in a certain way in your first message, see the demo video for an example of this.
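To make the system prompt easier to swap while experimenting, the request arguments can be assembled in a small helper. The sketch below is an illustration under assumptions: `build_converse_request` and `extract_reply_text` are hypothetical names, not part of Slackrock or boto3. Only the `modelId`, `messages`, and `system` arguments and the `output.message.content` response shape come from the Converse API.

```python
def build_converse_request(model_id, messages, system_prompt=None):
    """Assemble keyword arguments for a Bedrock Converse call.

    The `system` field is only included when a prompt is given, so the
    model falls back to its default behaviour otherwise.
    """
    request = {"modelId": model_id, "messages": messages}
    if system_prompt:
        request["system"] = [{"text": system_prompt}]
    return request


def extract_reply_text(response):
    """Join the text blocks of the assistant message in a Converse response."""
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)


# Usage (requires boto3, AWS credentials, and Bedrock model access):
# import boto3
# client = boto3.client("bedrock-runtime")
# messages = [{"role": "user", "content": [{"text": "Where be the treasure?"}]}]
# request = build_converse_request(
#     "anthropic.claude-3-5-sonnet-20240620-v1:0",
#     messages,
#     system_prompt="Respond in character as a pirate.",
# )
# print(extract_reply_text(client.converse(**request)))
```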

Yes! Use ngrok and modify the SAM template to use API Gateway. Currently the `sam local start-api` command does not support Lambda Function URLs.

- Install ngrok and create a free static domain.

- Run `ngrok http --domain=free-custom-domain.ngrok-free.app 3000`. This will be your Slack App event URL.

- Add the `Events` property to `AWS::Serverless::Function`:

      Resources:
        BedrockSlackApp:
          Type: AWS::Serverless::Function
          Properties:
            Events:
              ApiEvent:
                Type: Api
                Properties:
                  Path: /slack/events
                  Method: post

- Run `sam local start-api --env-vars env.json --warm-containers eager`

By default, provisioned concurrency is not enabled in the SAM template to keep costs as low as possible. But you may want to enable it depending on your performance requirements.

Using Lambda provisioned concurrency for a Slack app endpoint function is beneficial for three main reasons in this case:

- Provisioned concurrency reduces cold start latencies by keeping a specified number of function instances warm and ready to respond, eliminating delays caused by initializing the execution environment.

- Slack requires a response within 3 seconds; otherwise it resends the event, which can cause unnecessary duplicate Bedrock model invocations.

- Fast response times are essential for providing the best user experience in real-time chat applications like Slack apps. Provisioned concurrency minimizes latency and delivers a smoother user experience.

By allocating provisioned concurrency, you ensure that your Slack app endpoint function is always ready to handle incoming requests promptly, meeting Slack's strict latency requirements and providing a reliable and responsive user experience.
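A complementary way to limit duplicate invocations, separate from provisioned concurrency and not necessarily what Slackrock does, is to recognise Slack's retries. Slack marks redelivered events with an `X-Slack-Retry-Num` header. The sketch below acknowledges such requests without calling the model again; `is_slack_retry`, `handle_event`, and the `process` callback are hypothetical names. The trade-off is that an event whose first delivery genuinely failed would be dropped.

```python
def is_slack_retry(headers):
    """Return True when the request headers mark a Slack retry delivery."""
    # Header names are matched case-insensitively.
    normalized = {key.lower(): value for key, value in (headers or {}).items()}
    return "x-slack-retry-num" in normalized


def handle_event(event, process):
    """Acknowledge retries immediately; hand first deliveries to `process`.

    `event` is assumed to be a Lambda event dict with a "headers" mapping;
    `process` is a hypothetical callback that does the real work.
    """
    if is_slack_retry(event.get("headers")):
        # Respond 200 so Slack stops retrying, without invoking the model.
        return {"statusCode": 200, "body": ""}
    return process(event)
```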

AWS Lambda function URLs are publicly accessible by default, which may seem concerning from a security perspective. However, Slack apps require publicly accessible endpoints to receive events and commands from Slack's servers.

The slack_bolt Python library used in this project uses the signing_secret and token to secure your Lambda function URL endpoint by verifying the authenticity and integrity of the incoming requests from Slack.

Here's how the slack_bolt library utilizes these secrets:

- `SLACK_SIGNING_SECRET`:

  - When you create a Slack app, Slack generates a unique `SLACK_SIGNING_SECRET` for your app. This secret is used to verify the signature of the requests sent by Slack to your app.

  - When Slack sends a request to your Lambda function URL, it includes a special `X-Slack-Signature` header in the request. This header contains a hash signature that is computed using your app's `SLACK_SIGNING_SECRET` and the request payload.

  - The `slack_bolt` library automatically verifies the `X-Slack-Signature` header by recomputing the signature using the `SLACK_SIGNING_SECRET` and comparing it with the signature sent by Slack. If the signatures match, it confirms that the request is genuinely coming from Slack and hasn't been tampered with.

  - If the signature verification fails, the `slack_bolt` library will reject the request, preventing unauthorized access to your Lambda function.

- `SLACK_BOT_TOKEN`:

  - The `SLACK_BOT_TOKEN` is an access token that is used to authenticate your app when making API calls to Slack.

  - When you initialize the `App` instance in your Lambda function with the `token` parameter set to your `SLACK_BOT_TOKEN`, the `slack_bolt` library uses this token to authenticate requests made to the Slack API on behalf of your app.

  - The `SLACK_BOT_TOKEN` ensures that your app has the necessary permissions to perform actions and retrieve information from Slack.
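For intuition, the signature check described above can be sketched with the standard library alone. This follows Slack's published signing scheme (HMAC-SHA256 over `v0:<timestamp>:<body>`, compared against the `X-Slack-Signature` header, with the timestamp taken from `X-Slack-Request-Timestamp`). It is an illustration only, not the `slack_bolt` implementation, and in this project the library performs the check for you. The function name and the five-minute window parameter are assumptions.

```python
import hashlib
import hmac
import time


def verify_slack_signature(signing_secret, timestamp, body, signature,
                           now=None, max_age=300):
    """Return True if `signature` matches the request and is recent enough.

    `timestamp` is the X-Slack-Request-Timestamp header value, `body` is
    the raw request body as a string, and `signature` is the
    X-Slack-Signature header value.
    """
    now = time.time() if now is None else now
    try:
        request_time = int(timestamp)
    except (TypeError, ValueError):
        return False
    # Reject stale requests to limit replay attacks.
    if abs(now - request_time) > max_age:
        return False

    base_string = f"v0:{timestamp}:{body}".encode("utf-8")
    digest = hmac.new(signing_secret.encode("utf-8"), base_string,
                      hashlib.sha256).hexdigest()
    expected = f"v0={digest}"
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode("utf-8"),
                               (signature or "").encode("utf-8"))
```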