Question Answering Bot powered by OpenAI GPT models.

```bash
$ go get -u github.com/coseyo/gptbot
```

```go
package main

import (
	"context"
	"fmt"
	"os"

	"github.com/coseyo/gptbot"
)

func main() {
	ctx := context.Background()
	apiKey := os.Getenv("OPENAI_API_KEY")
	encoder := gptbot.NewOpenAIEncoder(apiKey, "")
	store := gptbot.NewLocalVectorStore()

	// Feed documents into the vector store.
	feeder := gptbot.NewFeeder(&gptbot.FeederConfig{
		Encoder: encoder,
		Updater: store,
	})
	err := feeder.Feed(ctx, &gptbot.Document{
		ID:   "1",
		Text: "Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model released in 2020 that uses deep learning to produce human-like text. Given an initial text as prompt, it will produce text that continues the prompt.",
	})
	if err != nil {
		fmt.Printf("err: %v\n", err)
		return
	}

	// Chat with the bot to get answers.
	bot := gptbot.NewBot(&gptbot.BotConfig{
		APIKey:  apiKey,
		Encoder: encoder,
		Querier: store,
	})

	question := "When was GPT-3 released?"
	answer, err := bot.Chat(ctx, question)
	if err != nil {
		fmt.Printf("err: %v\n", err)
		return
	}
	fmt.Printf("Q: %s\n", question)
	fmt.Printf("A: %s\n", answer)

	// Output:
	//
	// Q: When was GPT-3 released?
	// A: GPT-3 was released in 2020.
}
```

NOTE:

- The above example uses a local vector store. If you have a larger dataset, please consider using a vector search engine (e.g. Milvus).

- With the help of GPTBot Server, you can even upload documents as files and then start chatting via HTTP!

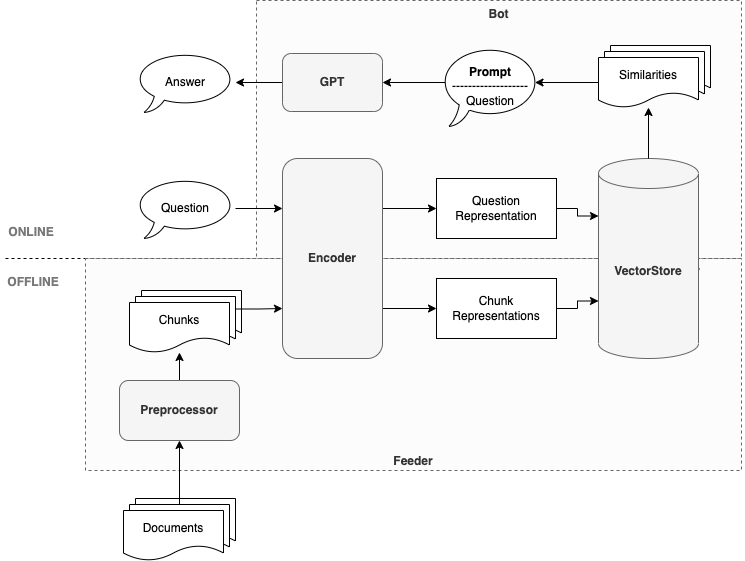

GPTBot is an implementation of the method demonstrated in Question Answering using Embeddings.
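To make the embeddings-based method more concrete, here is a minimal, self-contained sketch of its retrieval step: rank stored chunks by cosine similarity to the question's embedding, then put the best matches into a prompt. This is not GPTBot's actual code. The `chunk` type, the `cosine`, `topK`, and `buildPrompt` helpers, the prompt wording, and the toy three-dimensional vectors are all assumptions made for illustration; real embeddings come from the encoder and have far more dimensions.

```go
package main

import (
	"fmt"
	"math"
	"sort"
	"strings"
)

// chunk is a hypothetical stand-in for a stored document chunk and its embedding.
type chunk struct {
	Text   string
	Vector []float64
}

// cosine returns the cosine similarity of a and b.
// It returns 0 when the lengths differ or either vector has zero norm.
func cosine(a, b []float64) float64 {
	if len(a) != len(b) || len(a) == 0 {
		return 0
	}
	var dot, na, nb float64
	for i := range a {
		dot += a[i] * b[i]
		na += a[i] * a[i]
		nb += b[i] * b[i]
	}
	if na == 0 || nb == 0 {
		return 0
	}
	return dot / (math.Sqrt(na) * math.Sqrt(nb))
}

// topK returns up to k chunks most similar to query, best first.
// The input slice is copied, so the caller's ordering is left untouched.
func topK(query []float64, chunks []chunk, k int) []chunk {
	ranked := append([]chunk(nil), chunks...)
	sort.SliceStable(ranked, func(i, j int) bool {
		return cosine(query, ranked[i].Vector) > cosine(query, ranked[j].Vector)
	})
	if k < 0 {
		k = 0
	}
	if k > len(ranked) {
		k = len(ranked)
	}
	return ranked[:k]
}

// buildPrompt places the retrieved chunks ahead of the question.
// The wording is an assumption, not the prompt GPTBot sends.
func buildPrompt(question string, context []chunk) string {
	var b strings.Builder
	b.WriteString("Answer the question using the context below.\n\nContext:\n")
	for _, c := range context {
		b.WriteString("- " + c.Text + "\n")
	}
	b.WriteString("\nQuestion: " + question + "\n")
	return b.String()
}

func main() {
	chunks := []chunk{
		{Text: "GPT-3 was released in 2020.", Vector: []float64{0.9, 0.1, 0.0}},
		{Text: "Milvus is a vector database.", Vector: []float64{0.0, 0.2, 0.9}},
	}
	query := []float64{1.0, 0.0, 0.1} // toy embedding of the question

	best := topK(query, chunks, 1)
	fmt.Print(buildPrompt("When was GPT-3 released?", best))
}
```

A linear scan like this is only reasonable for small collections; for larger datasets, a vector search engine would take over the ranking step.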

| Concepts | Description | Built-in Support |
|---|---|---|
| Preprocessor | Preprocesses the documents by splitting them into chunks. | ✅ [customizable] Preprocessor |
| Encoder | Creates an embedding vector for each chunk. | ✅ [customizable] OpenAIEncoder |
| VectorStore | Stores and queries document chunk embeddings. | ✅ [customizable] LocalVectorStore, Milvus |
| Feeder | Feeds the documents into the vector store. | / |
| Bot | Question answering bot to chat with. | / |
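As a rough illustration of the Preprocessor row, the sketch below splits a document into chunks by word count. It is not GPTBot's built-in Preprocessor: the function name `splitIntoChunks` and the `maxWords` parameter are hypothetical, and a real preprocessor may split on tokens, sentences, or paragraphs instead.

```go
package main

import (
	"fmt"
	"strings"
)

// splitIntoChunks splits text on whitespace and groups the words into
// chunks of at most maxWords words each. It returns nil if maxWords <= 0.
// (Hypothetical helper; word-count chunking is an assumption for illustration.)
func splitIntoChunks(text string, maxWords int) []string {
	if maxWords <= 0 {
		return nil
	}
	words := strings.Fields(text)
	var chunks []string
	for start := 0; start < len(words); start += maxWords {
		end := start + maxWords
		if end > len(words) {
			end = len(words)
		}
		chunks = append(chunks, strings.Join(words[start:end], " "))
	}
	return chunks
}

func main() {
	text := "GPT-3 is an autoregressive language model released in 2020."
	for i, c := range splitIntoChunks(text, 4) {
		fmt.Printf("chunk %d: %s\n", i, c)
	}
}
```

Each resulting chunk would then be passed to the encoder to obtain its embedding before being written to the vector store.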