Code and data for the paper *Uncovering Limitations of Large Language Models in Information Seeking from Tables* (Findings of ACL 2024).

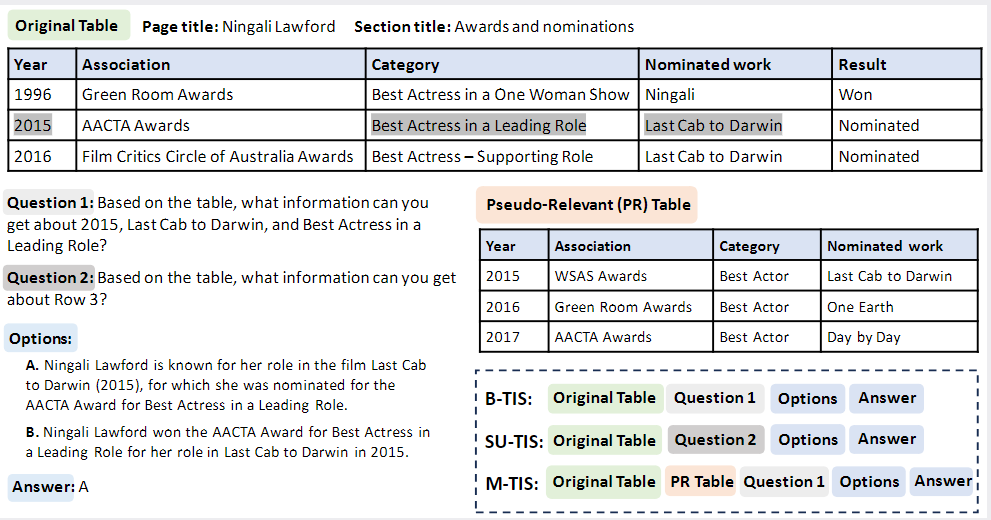

Tables are recognized for their high information density and widespread use, making them essential sources of information. Seeking information from tables (TIS) is a crucial capability for Large Language Models (LLMs) and underpins knowledge-based Q&A systems. However, this area currently lacks thorough and reliable evaluation. This paper introduces TabIS, a more reliable benchmark for Table Information Seeking. To avoid the unreliable evaluation caused by text-similarity-based metrics, TabIS adopts a single-choice question format (two options per question) instead of a text generation format. We establish an effective pipeline for generating options that ensures their difficulty and quality. Experiments on 12 LLMs reveal that while GPT-4-turbo's performance is marginally satisfactory, other proprietary models and open-source models perform inadequately. Further analysis shows that LLMs have a poor understanding of table structures and struggle to balance TIS performance against robustness to pseudo-relevant tables (common in retrieval-augmented systems). These findings uncover the limitations and potential challenges of LLMs in seeking information from tables. We release our data and code to facilitate further research in this field.

Our data is available here: link.

Each test sample contains the following fields:

- `prompt`: The input prompt used to test LLMs. All prompts are single-choice questions with two options (one-shot).

- `response`: The gold answer, either "A" or "B".

- `option_types`: The strategy used to generate the incorrect option: "mod-input", "mod-answer", "exam-judge", or "human-labeled".

- `reasoning`: The reasoning path of the LLM when generating the incorrect option, in particular for the "exam-judge" strategy.

- `components`: Sub-components of the prompt, provided for data reproduction.
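The field descriptions above can be turned into a quick sanity check before running an evaluation. This is a minimal sketch, not code from this repository: it assumes each dataset file is a single JSON array of records and that `option_types` holds one string per record. `load_records`, `check_record`, and `summarize` are hypothetical helper names.

```python
import json
from collections import Counter
from typing import Any

# Field names and allowed values taken from the list above.
# ASSUMPTION: a file is one JSON array of records; option_types is a single string.
REQUIRED_FIELDS = ("prompt", "response", "option_types")
VALID_ANSWERS = {"A", "B"}
VALID_OPTION_TYPES = {"mod-input", "mod-answer", "exam-judge", "human-labeled"}


def check_record(rec: dict[str, Any], idx: int = 0) -> None:
    """Raise ValueError if a record violates the field descriptions above."""
    missing = [k for k in REQUIRED_FIELDS if k not in rec]
    if missing:
        raise ValueError(f"record {idx}: missing fields {missing}")
    if rec["response"] not in VALID_ANSWERS:
        raise ValueError(f"record {idx}: response must be 'A' or 'B'")
    if rec["option_types"] not in VALID_OPTION_TYPES:
        raise ValueError(f"record {idx}: unknown option type {rec['option_types']!r}")


def load_records(path: str) -> list[dict[str, Any]]:
    """Load and validate every record in a JSON file (hypothetical helper)."""
    with open(path, encoding="utf-8") as f:
        records = json.load(f)
    for i, rec in enumerate(records):
        check_record(rec, i)
    return records


def summarize(records: list[dict[str, Any]]) -> Counter:
    """Count how many questions use each wrong-option strategy."""
    return Counter(rec["option_types"] for rec in records)
```

Checking all records up front makes a malformed file fail fast, rather than partway through a long, possibly paid, API run.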

Please complete the following configuration before running the evaluation:

- `config/model_collections.yaml`: configure the Hugging Face model paths for the models to be evaluated.

To access OpenAI APIs, please specify your key:

```bash
export OPENAI_KEY="YOUR_OPENAI_KEY"
```
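A pre-flight check can catch a missing key before a long run starts. This sketch assumes only that the evaluation reads the environment variable named `OPENAI_KEY`, as the export line suggests; `get_openai_key` is a hypothetical helper, not part of the repository.

```python
import os


def get_openai_key(var: str = "OPENAI_KEY") -> str:
    """Return the exported API key, or fail early with a clear message."""
    key = os.environ.get(var, "").strip()
    if not key:
        raise RuntimeError(f'{var} is not set; run: export {var}="YOUR_OPENAI_KEY"')
    return key
```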

Then run the evaluation:

```bash
python eval_benchmark.py --exp_path /path/to/exp \
    --eval_models gpt-3.5-turbo gpt-4-1106-preview \
    --dataset_path /path/to/datasets \
    --max_data_num 5000 \
    --pool_num 10 \
    --gpu_devices 4,5,6,7 \
    --max_memory_per_device 10 \
    --seed 0 \
    --eval_group_key option_types
```
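The flag `--eval_group_key option_types` suggests that results are broken down by the `option_types` field of each sample. The sketch below shows one plausible way to compute such per-group accuracy for a two-option benchmark. The regex-based answer parser and the function names are assumptions for illustration, not the repository's implementation. Outputs with no parseable choice are counted as wrong.

```python
import re
from collections import defaultdict
from typing import Iterable, Optional

# Hypothetical parser: take the first standalone uppercase "A" or "B".
# This is crude (e.g. a sentence starting with "A table ..." would match "A").
_CHOICE = re.compile(r"\b([AB])\b")


def extract_choice(output: str) -> Optional[str]:
    """Return 'A' or 'B' from a model output, or None if neither appears."""
    match = _CHOICE.search(output)
    return match.group(1) if match else None


def grouped_accuracy(
    records: Iterable[dict], outputs: Iterable[str], group_key: str = "option_types"
) -> dict[str, float]:
    """Accuracy (%) per value of group_key, plus an 'all' entry over every record."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for rec, out in zip(records, outputs):
        hit = extract_choice(out) == rec["response"]  # None never equals "A"/"B"
        for group in (rec[group_key], "all"):
            total[group] += 1
            correct[group] += hit  # bool adds as 0 or 1
    return {g: 100.0 * correct[g] / total[g] for g in total}
```

With two options per question, random guessing sits at about 50%, which is the useful baseline when reading the per-group numbers.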

Place the test datasets (xxx.json) under the directory passed as `--dataset_path`.
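To confirm which files sit in that directory before launching a run, a small listing helper can help. This sketch assumes the script picks up every `*.json` file directly under `--dataset_path` (not confirmed here); `find_datasets` and the file name in the usage check are hypothetical.

```python
from pathlib import Path


def find_datasets(dataset_path: str) -> dict[str, Path]:
    """Map dataset name (file stem) to path for each *.json directly under dataset_path."""
    root = Path(dataset_path)
    if not root.is_dir():
        raise NotADirectoryError(f"{root} is not a directory")
    # glob("*.json") is non-recursive: files in subdirectories are ignored.
    return {p.stem: p for p in sorted(root.glob("*.json"))}
```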

2024/06/12: We added evaluation results for the Llama3 models.

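Here we report only the results on the B-TIS subset; please refer to our paper for further experimental results. All numbers are accuracy (%). EJ, MI, MO, and HA denote the four strategies used to generate wrong options (exam-judge, mod-input, mod-answer, and human-labeled, respectively).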

| Model | ToTTo EJ | ToTTo MI | ToTTo MO | ToTTo HA | ToTTo Avg. | HiTab EJ | HiTab MI | HiTab MO | HiTab HA | HiTab Avg. |
|---|---|---|---|---|---|---|---|---|---|---|
| *Proprietary models* | | | | | | | | | | |
| Gemini-pro | 70.2 | 93.3 | 87.9 | 76.9 | 85.6 | 53.1 | 67.6 | 79.1 | 67.4 | 66.6 |
| GPT-3.5-turbo-instruct | 60.7 | 81.8 | 80.6 | 55.7 | 75.1 | 62.3 | 71.9 | 78.4 | 45.7 | 68.3 |
| GPT-3.5-turbo-1106 | 56.9 | 77.8 | 76.6 | 64.8 | 72.1 | 42.5 | 64.6 | 71.3 | 48.6 | 57.5 |
| GPT-3.5-turbo-16k | 58.4 | 84.5 | 82.8 | 59.1 | 76.7 | 48.4 | 67.5 | 75.4 | 43.8 | 61.2 |
| GPT-4-turbo-1106 | 79.8 | 93.5 | 96.4 | 85.2 | 91.2 | 73.5 | 85.2 | 91.8 | 77.1 | 82.4 |
| *Open-source models* | | | | | | | | | | |
| Llama2-7b-chat | 54.3 | 52.4 | 53.1 | 60.2 | 53.6 | 44.3 | 54.8 | 47.8 | 39.1 | 47.8 |
| TableLlama-7b | 53.2 | 54.7 | 53.9 | 58.0 | 54.3 | 43.8 | 53.3 | 48.9 | 41.0 | 47.7 |
| Mistral-7b-instruct-v0.2 | 52.8 | 77.4 | 81.0 | 70.5 | 73.2 | 40.9 | 63.5 | 72.4 | 47.6 | 56.9 |
| Llama2-13b-chat | 52.4 | 66.7 | 66.7 | 60.2 | 63.3 | 45.0 | 52.2 | 64.8 | 53.3 | 53.4 |
| Mixtral-8*7b-instruct | 55.8 | 88.7 | 88.1 | 73.9 | 80.6 | 51.6 | 75.1 | 77.1 | 52.4 | 65.6 |
| Llama2-70b-chat | 52.1 | 70.9 | 79.6 | 65.9 | 70.0 | 46.8 | 60.0 | 68.0 | 50.5 | 56.9 |
| Tulu2-70b-DPO | 64.4 | 91.7 | 93.1 | 78.4 | 85.7 | 55.5 | 72.5 | 81.4 | 61.0 | 68.2 |
| StructLM-7b | 47.6 | 68.8 | 70.1 | 64.8 | 64.6 | 38.4 | 60.3 | 57.7 | 49.5 | 50.8 |
| StructLM-13b | 57.3 | 85.9 | 83.0 | 70.5 | 77.8 | 45.0 | 68.4 | 68.9 | 50.5 | 58.9 |
| StructLM-34b | 61.4 | 87.1 | 86.3 | 71.6 | 80.4 | 45.4 | 61.7 | 70.2 | 52.4 | 57.7 |
| codellama-7b | 47.2 | 61.2 | 61.2 | 56.8 | 58.0 | 33.6 | 59.4 | 56.3 | 41.0 | 47.9 |
| codellama-13b | 49.4 | 58.4 | 57.8 | 61.4 | 56.5 | 42.7 | 56.2 | 52.5 | 40.0 | 49.0 |
| codellama-34b | 54.7 | 81.8 | 81.2 | 64.8 | 74.8 | 44.5 | 61.7 | 72.1 | 44.8 | 57.3 |
| Llama3-8b-instruct | 62.9 | 89.4 | 92.5 | 77.3 | 84.3 | 55.3 | 71.0 | 80.9 | 58.1 | 67.3 |
| Llama3-70b-instruct | 83.9 | 94.0 | 96.4 | 90.9 | 92.6 | 72.4 | 83.2 | 91.5 | 74.3 | 81.1 |
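Note that the Avg. columns are not the plain mean of the four strategy columns: for Gemini-pro on ToTTo, (70.2 + 93.3 + 87.9 + 76.9) / 4 = 82.075, whereas the table reports 85.6. This is consistent with Avg. being accuracy over all questions, which weights each strategy by its number of questions. The per-strategy counts are not listed here, so treat this as an interpretation. The sketch contrasts the two averages; the toy numbers are made up for illustration and are not from the paper.

```python
def unweighted_mean(scores: dict[str, float]) -> float:
    """Plain mean of per-strategy accuracies (each strategy counts equally)."""
    return sum(scores.values()) / len(scores)


def weighted_mean(scores: dict[str, float], counts: dict[str, int]) -> float:
    """Accuracy over all questions: per-strategy accuracy weighted by question count."""
    total = sum(counts[k] for k in scores)
    return sum(scores[k] * counts[k] for k in scores) / total


# Gemini-pro, ToTTo columns from the table above.
gemini_totto = {"EJ": 70.2, "MI": 93.3, "MO": 87.9, "HA": 76.9}
print(round(unweighted_mean(gemini_totto), 3))  # 82.075 (the table's Avg. is 85.6)

# Toy numbers (hypothetical): 1 question of type X, 3 of type Y.
toy_scores = {"X": 50.0, "Y": 100.0}
toy_counts = {"X": 1, "Y": 3}
print(unweighted_mean(toy_scores), weighted_mean(toy_scores, toy_counts))  # 75.0 87.5
```

When strategies with higher accuracy (here MI and MO) contribute more questions, the weighted average exceeds the unweighted one, which matches the direction of the gap in most rows.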

If you find our work helpful, please cite us:

```bibtex
@inproceedings{pang2024uncovering,
  title={Uncovering Limitations of Large Language Models in Information Seeking from Tables},
  author={Chaoxu Pang and Yixuan Cao and Chunhao Yang and Ping Luo},
  booktitle={Findings of Association for Computational Linguistics (ACL)},
  year={2024}
}
```