This repository provides an implementation of the Learning Rate Range Test and Cyclical Learning Rates (CLR), as originally described in the paper *Cyclical Learning Rates for Training Neural Networks* by Leslie N. Smith [1].

What's in the box?

- Implementations of the triangular, triangular2, decay and exp_range policies.

- Implementation of the Learning Rate Range Test described in Section 3.3 of [1].

- Ports of the full and quick CIFAR10 Caffe models to PyTorch.

- Experiments which verify the efficacy of CLR combined with the Learning Rate Range Test in reducing training time, compared to the default Caffe configuration.

The experiments in this repository were conducted on an Ubuntu 18.04 Paperspace instance with an NVIDIA Quadro P4000 GPU, NVIDIA driver 410.48 and CUDA 10.0.130-1.

- `git clone git@github.com:coxy1989/clr.git`

- `cd clr`

- `conda env create -f environment.yml`

- `source activate clr`

- `jupyter notebook`

- Experiments - Reproduce results from the Result section of this README.

- Figures - Render figures from the Result section of this README.

- Schedulers - Render graphs for learning rate policies.

The architecture used for the experiments below was ported from Caffe's CIFAR10 'quick train test' configuration.

| Learning rate | Start iteration | End iteration |
|---|---|---|
| 0.001 | 0 | 60,000 |
| 0.0001 | 60,000 | 65,000 |
| 0.00001 | 65,000 | 70,000 |

Table 1: Fixed policy schedule
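The fixed schedule in Table 1 can be read as a piecewise-constant function of the iteration count. The sketch below is illustrative only: the names `FIXED_SCHEDULE` and `fixed_lr` are hypothetical and not taken from this repository, and it assumes each interval includes its start iteration and excludes its end iteration.

```python
# Rows of Table 1 as (start_iteration, end_iteration, learning_rate).
# Assumption: intervals are half-open, [start, end).
FIXED_SCHEDULE = [
    (0, 60_000, 0.001),
    (60_000, 65_000, 0.0001),
    (65_000, 70_000, 0.00001),
]


def fixed_lr(iteration):
    """Return the learning rate the fixed policy uses at `iteration`."""
    for start, end, lr in FIXED_SCHEDULE:
        if start <= iteration < end:
            return lr
    raise ValueError(f"iteration {iteration} is outside the schedule")


if __name__ == "__main__":
    for it in (0, 59_999, 60_000, 65_000, 69_999):
        print(it, fixed_lr(it))
```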

| Step size (iterations) | Min LR | Max LR | Start iteration | End iteration |
|---|---|---|---|---|
| 2000 | 0.0025 | 0.01 | 0 | 35,000 |

Table 2: CLR policy schedule
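As a rough illustration of what the values in Table 2 mean, here is a minimal sketch of the triangular policy following the formula given in [1]. The function name `triangular_lr` is hypothetical and is not necessarily how this repository implements the policy; the defaults are simply the step size and learning rate bounds from Table 2.

```python
import math


def triangular_lr(iteration, step_size=2000, min_lr=0.0025, max_lr=0.01):
    """Triangular cyclical learning rate.

    The rate climbs linearly from min_lr to max_lr over `step_size`
    iterations, then descends back to min_lr over the next `step_size`
    iterations, so one full cycle lasts 2 * step_size iterations.
    """
    # Index of the current cycle, starting at 1.
    cycle = math.floor(1 + iteration / (2 * step_size))
    # Distance from the peak of the current cycle, scaled to [0, 1].
    x = abs(iteration / step_size - 2 * cycle + 1)
    return min_lr + (max_lr - min_lr) * max(0.0, 1.0 - x)


if __name__ == "__main__":
    # Start of a cycle, halfway up, peak, halfway down, end of the cycle.
    for it in (0, 1000, 2000, 3000, 4000):
        print(it, triangular_lr(it))
```

With a step size of 2000, the rate peaks at iteration 2000 and returns to the minimum at iteration 4000, after which the cycle repeats.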

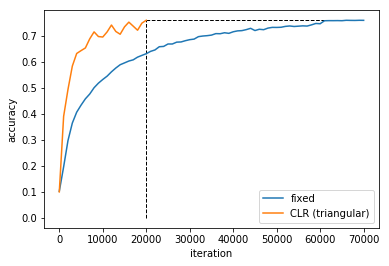

| LR policy | Iterations | Accuracy (%) |
|---|---|---|
| fixed | 70,000 | 76.0 |
| CLR (triangular policy) | 20,000 | 76.0 |

Table 3: Fixed vs CLR training results (average of 5 training runs). The CLR policy reaches the same accuracy in 20,000 iterations that the fixed policy reaches in 70,000 iterations.

Plot 1: Iteration vs Accuracy for the result in Table 3

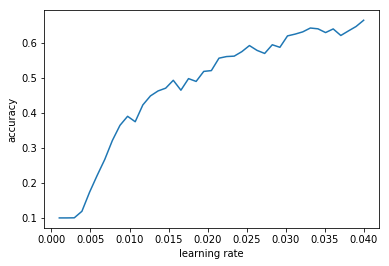

Plot 2: Learning Rate Range Test. Suitable boundaries for the CLR policy are at ~0.0025, where the accuracy starts to increase, and at ~0.01, where the Learning Rate Range Test plot becomes ragged.
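For context, the range test in [1] trains the model while the learning rate increases linearly between a low and a high value, and accuracy is plotted against the learning rate. The sketch below shows only that linear ramp. The name `range_test_lr` and the example bounds (0.0 to 0.02 over 1,000 iterations) are assumptions made for illustration, not the settings used to produce Plot 2.

```python
def range_test_lr(iteration, num_iterations, lr_start, lr_end):
    """Learning rate at `iteration` of a linear ramp from lr_start to lr_end."""
    if not 0 <= iteration <= num_iterations:
        raise ValueError("iteration must lie in [0, num_iterations]")
    fraction = iteration / num_iterations
    return lr_start + (lr_end - lr_start) * fraction


if __name__ == "__main__":
    # Hypothetical sweep: 0.0 -> 0.02 over 1,000 iterations.
    for it in (0, 250, 500, 750, 1000):
        print(it, range_test_lr(it, 1000, 0.0, 0.02))
```

In a real run, each of these rates would be applied for one training iteration and the resulting accuracy recorded, which gives a curve like the one in Plot 2.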

[1] Leslie N. Smith. Cyclical Learning Rates for Training Neural Networks. arXiv:1506.01186, 2015.