Hefeng Wang, Jiale Cao, Rao Muhammad Anwer, Jin Xie, Fahad Shahbaz Khan, Yanwei Pang

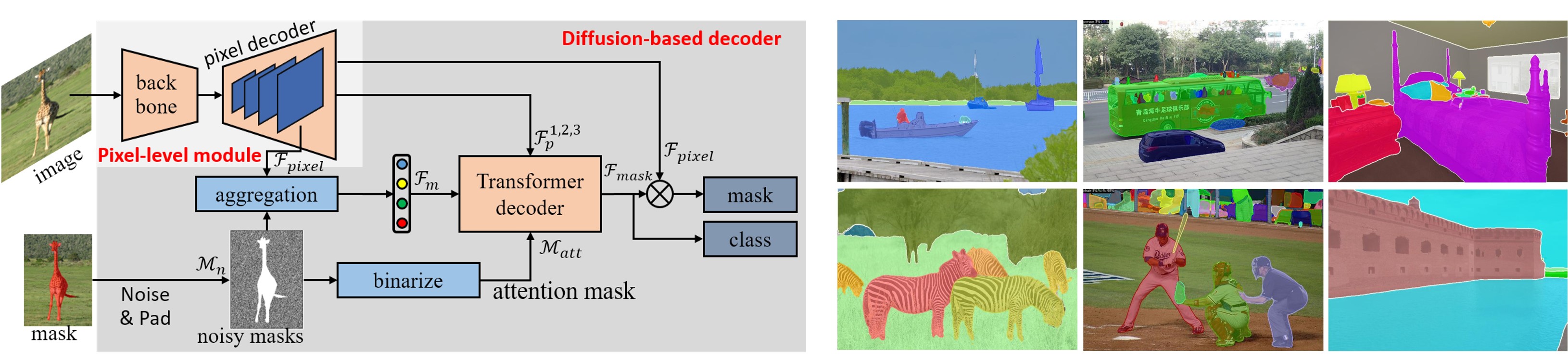

arXiv:2306.03437

This paper introduces DFormer, an approach for universal image segmentation. DFormer views universal image segmentation as a denoising process using a diffusion model. It first adds various levels of Gaussian noise to the ground-truth masks and then learns a model to predict denoised masks from the corrupted masks. Specifically, we take deep pixel-level features along with the noisy masks as inputs to generate mask features and attention masks, and employ a diffusion-based decoder to predict the masks progressively. At inference, DFormer directly predicts the masks and their corresponding categories from a set of randomly generated masks. Extensive experiments demonstrate the merits of our contributions on three image segmentation tasks: panoptic segmentation, instance segmentation, and semantic segmentation.
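The denoising formulation above can be illustrated with a minimal NumPy sketch. It assumes a standard DDPM forward process with a linear beta schedule, binary masks mapped to `[-scale, scale]`, and a decoder that predicts the clean masks, sampled with deterministic DDIM updates. These choices, and the names `make_alpha_bar`, `corrupt_masks`, `ddim_sample` and `denoiser`, are hypothetical illustrations rather than the DFormer code; category prediction and conditioning on image features are omitted.

```python
import numpy as np


def make_alpha_bar(num_timesteps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule (assumed, as in DDPM)."""
    betas = np.linspace(beta_start, beta_end, num_timesteps)
    return np.cumprod(1.0 - betas)


def corrupt_masks(gt_masks, t, alpha_bar, scale=1.0, rng=None):
    """Training: sample x_t ~ q(x_t | x_0) from binary ground-truth masks at timestep t."""
    rng = np.random.default_rng() if rng is None else rng
    x0 = (2.0 * gt_masks - 1.0) * scale  # map {0, 1} to {-scale, +scale}
    noise = rng.standard_normal(x0.shape)
    a = alpha_bar[t]
    xt = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise
    return xt, noise


def ddim_sample(denoiser, shape, alpha_bar, num_steps=4, scale=1.0, rng=None):
    """Inference: start from random masks and refine them with DDIM (eta = 0) updates.

    `denoiser(x_t, t)` is a hypothetical stand-in for the mask decoder and must
    return a prediction of the clean masks x_0.
    """
    rng = np.random.default_rng() if rng is None else rng
    T = len(alpha_bar)
    # Evenly spaced timesteps from T-1 down to -1 (-1 means "fully denoised").
    times = np.round(np.linspace(T - 1, -1, num_steps + 1)).astype(int)
    x = rng.standard_normal(shape)  # randomly generated masks
    for t, t_prev in zip(times[:-1], times[1:]):
        x0_hat = np.clip(denoiser(x, t), -scale, scale)
        a_t = alpha_bar[t]
        a_prev = alpha_bar[t_prev] if t_prev >= 0 else 1.0
        eps_hat = (x - np.sqrt(a_t) * x0_hat) / np.sqrt(1.0 - a_t)  # implied noise
        x = np.sqrt(a_prev) * x0_hat + np.sqrt(1.0 - a_prev) * eps_hat
    return (x > 0).astype(np.uint8)  # threshold back to binary masks


# Example: 3 binary masks of size 8x8.
gt = (np.random.default_rng(0).random((3, 8, 8)) > 0.5).astype(np.uint8)
alpha_bar = make_alpha_bar()
xt, noise = corrupt_masks(gt, t=500, alpha_bar=alpha_bar, rng=np.random.default_rng(1))


def oracle(x, t):
    """A perfect denoiser that always returns the true x_0 (illustration only)."""
    return 2.0 * gt - 1.0


masks = ddim_sample(oracle, gt.shape, alpha_bar, rng=np.random.default_rng(2))
```

With a perfect (oracle) denoiser the sampler recovers the ground-truth masks exactly, because the final DDIM step returns the predicted x_0. Using only a few sampling steps keeps inference cheap, since a real model would run the decoder once per step.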

See installation instructions.

See Preparing Datasets for DFormer.

See Getting Started with DFormer.

We provide baseline results and trained models for download.

Panoptic segmentation:

| Name | Backbone | Epochs | PQ | Download |
|---|---|---|---|---|
| DFormer | R50 | 50 | 51.1 | model |
| DFormer | Swin-T | 50 | 52.5 | model |

Instance segmentation:

| Name | Backbone | Epochs | AP | Download |
|---|---|---|---|---|
| DFormer | R50 | 50 | 42.6 | model |
| DFormer | Swin-T | 50 | 44.4 | model |

Semantic segmentation:

| Name | Backbone | Iterations | mIoU | Download |
|---|---|---|---|---|
| DFormer | R50 | 160k | 46.7 | model |
| DFormer | Swin-T | 160k | 48.3 | model |

If you use DFormer in your research or wish to refer to the baseline results reported above, please use the following BibTeX entry.

```bibtex
@article{wang2023dformer,
  title={DFormer: Diffusion-guided Transformer for Universal Image Segmentation},
  author={Wang, Hefeng and Cao, Jiale and Anwer, Rao Muhammad and Xie, Jin and Khan, Fahad Shahbaz and Pang, Yanwei},
  journal={arXiv preprint arXiv:2306.03437},
  year={2023}
}
```

Many thanks to Mask2Former (Bowen Cheng) and DDIM (Jiaming Song) for their excellent work. Our code and configs follow Mask2Former and DDIM.