This repository contains details and pretrained models for the newly introduced NTU-X dataset, an extended version of the popular NTU RGB+D dataset. For additional details and experimental results on NTU60-X, see the paper NTU60-X: Towards Skeleton-based Recognition of Subtle Human Actions.

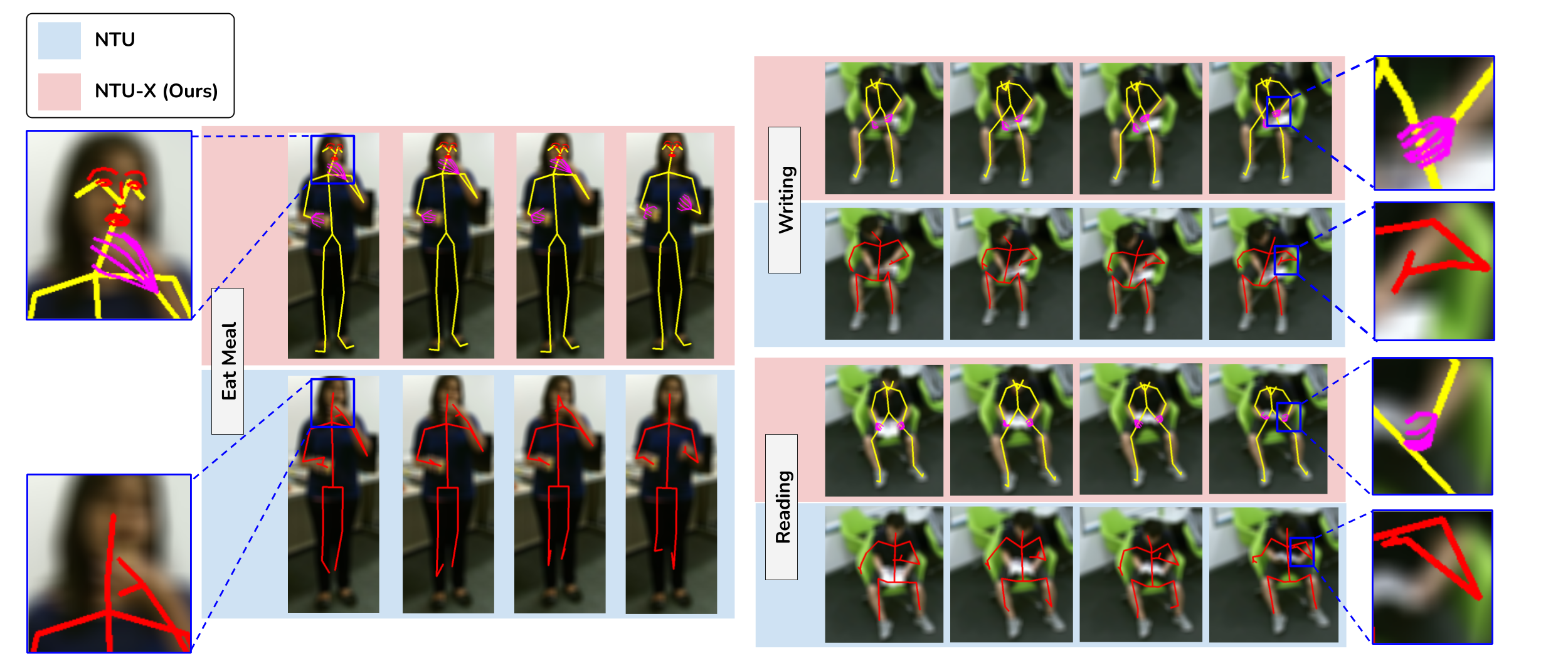

The original NTU RGB+D dataset provides human action skeletons captured with the Kinect sensor. These skeletons have 25 joints. However, current top-performing models appear to be bottlenecked on classes that involve fine-grained finger movements, such as reading, writing, and eating a meal.

The NTU-X dataset therefore introduces a more detailed 118-joint skeleton for the action sequences of NTU RGB+D: in addition to the 25 body joints, it contains 42 finger joints and 51 face joints.
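As an illustration of how the three joint groups add up (25 + 42 + 51 = 118), the following minimal Python sketch splits one 118-joint frame into body, finger, and face parts. The README gives only the group sizes, so the storage order (body, then fingers, then face) and the names `JOINT_GROUPS` and `split_frame` are hypothetical; check the released data format before relying on this layout.

```python
from typing import Dict, List, Tuple

Joint = Tuple[float, float, float]
Frame = List[Joint]

# Group sizes come from the README (25 + 42 + 51 = 118).
# The order body -> fingers -> face is a hypothetical layout, not a documented one.
JOINT_GROUPS = [("body", 25), ("fingers", 42), ("face", 51)]
NUM_JOINTS = sum(count for _, count in JOINT_GROUPS)  # 118


def split_frame(frame: Frame) -> Dict[str, Frame]:
    """Split one 118-joint frame into body, finger and face joint lists."""
    if len(frame) != NUM_JOINTS:
        raise ValueError(f"expected {NUM_JOINTS} joints, got {len(frame)}")
    parts: Dict[str, Frame] = {}
    start = 0
    for name, count in JOINT_GROUPS:
        parts[name] = frame[start:start + count]
        start += count
    return parts


# Usage with a dummy frame whose joints all sit at the origin.
frame = [(0.0, 0.0, 0.0)] * NUM_JOINTS
print({name: len(joints) for name, joints in split_frame(frame).items()})
# {'body': 25, 'fingers': 42, 'face': 51}
```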

| Model | NTU60 | NTU60-X |
|---|---|---|
| MsG3d | 91.50 | 91.76 |
| PA-ResGCN | 90.90 | 91.64 |
| 4s-ShiftGCN | 90.70 | 91.78 |
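The gain each model obtains on NTU60-X over NTU60 is the difference between the two columns. The table does not name the metric, so this small sketch only computes those differences from the values shown; the helper name `gains` is hypothetical.

```python
from typing import Dict, Tuple

# Values copied from the table above: (NTU60, NTU60-X).
results: Dict[str, Tuple[float, float]] = {
    "MsG3d": (91.50, 91.76),
    "PA-ResGCN": (90.90, 91.64),
    "4s-ShiftGCN": (90.70, 91.78),
}


def gains(table: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Difference NTU60-X minus NTU60 for each model, rounded to two decimals."""
    return {model: round(ntu60x - ntu60, 2) for model, (ntu60, ntu60x) in table.items()}


for model, gain in gains(results).items():
    print(f"{model}: {gain:+.2f}")
```

With the values above this gives +0.26 for MsG3d, +0.74 for PA-ResGCN, and +1.08 for 4s-ShiftGCN.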

Figure: comparison of NTU and NTU-X (ours) skeletons for the actions Write, Read, and Eat Meal.

| Dataset | Body | Fingers | Face | # Joints | # Sequences | # Classes |
|---|---|---|---|---|---|---|
| MSR-Action3D | ✔️ | | | 20 | 567 | 20 |
| Northwestern-UCLA | ✔️ | | | 24 | 1475 | 10 |
| NTU RGB+D | ✔️ | | | 25 | 56880 | 60 |
| NTU-X (Ours) | ✔️ | ✔️ | ✔️ | 118 | 56148 | 60 |

Coming Soon...

A few experiments were performed to benchmark this new dataset using the top-performing models on the original NTU RGB+D dataset. Details about these models can be found in Models.

NTU-X contains the same 60 action classes as the NTU RGB+D dataset. The action labels are listed below:

| | | |
|---|---|---|
| A1 drink water. | A2 eat meal/snack. | A3 brushing teeth. |
| A4 brushing hair. | A5 drop. | A6 pickup. |
| A7 throw. | A8 sitting down. | A9 standing up (from sitting position). |
| A10 clapping. | A11 reading. | A12 writing. |
| A13 tear up paper. | A14 wear jacket. | A15 take off jacket. |
| A16 wear a shoe. | A17 take off a shoe. | A18 wear on glasses. |
| A19 take off glasses. | A20 put on a hat/cap. | A21 take off a hat/cap. |
| A22 cheer up. | A23 hand waving. | A24 kicking something. |
| A25 reach into pocket. | A26 hopping (one foot jumping). | A27 jump up. |
| A28 make a phone call/answer phone. | A29 playing with phone/tablet. | A30 typing on a keyboard. |
| A31 pointing to something with finger. | A32 taking a selfie. | A33 check time (from watch). |
| A34 rub two hands together. | A35 nod head/bow. | A36 shake head. |
| A37 wipe face. | A38 salute. | A39 put the palms together. |
| A40 cross hands in front (say stop). | A41 sneeze/cough. | A42 staggering. |
| A43 falling. | A44 touch head (headache). | A45 touch chest (stomachache/heart pain). |
| A46 touch back (backache). | A47 touch neck (neckache). | A48 nausea or vomiting condition. |
| A49 use a fan (with hand or paper)/feeling warm. | A50 punching/slapping other person. | A51 kicking other person. |
| A52 pushing other person. | A53 pat on back of other person. | A54 point finger at the other person. |
| A55 hugging other person. | A56 giving something to other person. | A57 touch other person's pocket. |
| A58 handshaking. | A59 walking towards each other. | A60 walking apart from each other. |
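Because NTU-X shares its classes with NTU RGB+D, a data loader needs to recover the action ID of each sample. The sketch below assumes that NTU-X samples keep the original NTU RGB+D naming scheme `SsssCcccPpppRrrrAaaa` (setup, camera, performer, replication, action); the README does not state this, so treat it as an assumption. It also flags the two-person classes A50 to A60 from the table above. `parse_sample_name` and `is_two_person` are hypothetical helpers.

```python
import re
from typing import NamedTuple

# NTU RGB+D sample names look like S001C002P003R002A058.
# Assumption: NTU-X files keep this naming.
SAMPLE_RE = re.compile(r"S(\d{3})C(\d{3})P(\d{3})R(\d{3})A(\d{3})")

# Classes A50-A60 in the label table all involve a second person.
TWO_PERSON_ACTIONS = set(range(50, 61))


class SampleInfo(NamedTuple):
    setup: int
    camera: int
    performer: int
    replication: int
    action: int


def parse_sample_name(name: str) -> SampleInfo:
    """Extract setup, camera, performer, replication and action IDs from a name."""
    match = SAMPLE_RE.search(name)
    if match is None:
        raise ValueError(f"not an NTU-style sample name: {name!r}")
    return SampleInfo(*(int(group) for group in match.groups()))


def is_two_person(action_id: int) -> bool:
    """True for the mutual (two-person) action classes A50-A60."""
    return action_id in TWO_PERSON_ACTIONS


info = parse_sample_name("S001C002P003R002A058")
print(info.action, is_two_person(info.action))  # 58 True
```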

1. How is the NTU-X dataset created?

It was created by estimating 3D SMPL-X poses from the RGB frames of the NTU60 RGB videos. We use both SMPL-X and ExPose to perform these estimations.

2. How is the pose extractor (SMPL-X or ExPose) chosen for each class?

We use a semi-automatic approach to estimate the 3D pose for the videos of each class. Keeping the intra-view and intra-subject variance of the NTU dataset in mind, we sample random videos covering every view of each NTU class and estimate both the SMPL-X and ExPose outputs. Each estimated skeleton is then backprojected onto its corresponding RGB frame, and the accuracy of this alignment is used to choose between SMPL-X and ExPose.
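The selection step can be approximated automatically by projecting each estimated 3D skeleton into the image and measuring how far it lands from reference 2D keypoints. This is only a sketch under stated assumptions: the README does not say how alignment accuracy is measured (the process is described as semi-automatic), so the pinhole camera model, the availability of reference 2D keypoints, mean pixel error as the score, and all function names below are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point3D = Tuple[float, float, float]
Point2D = Tuple[float, float]


def project(joint: Point3D, fx: float, fy: float, cx: float, cy: float) -> Point2D:
    """Pinhole projection of a camera-space 3D joint onto the image plane."""
    x, y, z = joint
    return (fx * x / z + cx, fy * y / z + cy)


def mean_reprojection_error(joints: Sequence[Point3D], reference: Sequence[Point2D],
                            intrinsics: Tuple[float, float, float, float]) -> float:
    """Average pixel distance between projected joints and reference 2D keypoints."""
    fx, fy, cx, cy = intrinsics
    errors = [math.dist(project(j, fx, fy, cx, cy), r) for j, r in zip(joints, reference)]
    return sum(errors) / len(errors)


def choose_extractor(errors_per_extractor: Dict[str, List[float]]) -> str:
    """Pick the extractor with the lowest mean error over the sampled videos."""
    return min(errors_per_extractor,
               key=lambda name: sum(errors_per_extractor[name]) / len(errors_per_extractor[name]))


# Usage with dummy intrinsics, joints and keypoints (illustrative values only).
intrinsics = (1000.0, 1000.0, 960.0, 540.0)
joints = [(0.0, 0.0, 2.0), (0.5, 0.25, 2.0)]
keypoints = [(960.0, 540.0), (1213.0, 669.0)]
print(mean_reprojection_error(joints, keypoints, intrinsics))  # 2.5
print(choose_extractor({"SMPL-X": [2.5, 3.0], "ExPose": [4.0, 3.0]}))  # SMPL-X
```

The error lists passed to `choose_extractor` are dummy numbers; in practice each entry would be the score of one sampled video of the class.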

3. Which classes use ExPose and which use SMPL-X as the pose extractor?

Empirically, we observe that ExPose and SMPL-X perform equally well for single-person actions, but SMPL-X, though slower, provides better pose estimates for multi-person action sequences. The classes assigned to each pose extractor are listed below:

ExPose:

- A1. drink water.
- A2. eat meal/snack.
- A3. brushing teeth.
- A4. brushing hair.
- A8. sitting down.
- A9. standing up (from sitting position).
- A12. writing.
- A14. wear jacket.
- A15. take off jacket.
- A16. wear a shoe.
- A17. take off a shoe.
- A18. wear on glasses.
- A19. take off glasses.
- A20. put on a hat/cap.
- A21. take off a hat/cap.
- A24. kicking something.
- A25. reach into pocket.
- A26. hopping (one foot jumping).
- A29. playing with phone/tablet.
- A30. typing on a keyboard.
- A33. check time (from watch).
- A34. rub two hands together.
- A36. shake head.
- A37. wipe face.
- A38. salute.
- A41. sneeze/cough.
- A42. staggering.
- A43. falling.
- A44. touch head (headache).
- A46. touch back (backache).
- A47. touch neck (neckache).
- A48. nausea or vomiting condition.
- A49. use a fan (with hand or paper)/feeling warm.

SMPL-X:

- A5. drop.
- A6. pickup.
- A7. throw.
- A10. clapping.
- A11. reading.
- A13. tear up paper.
- A22. cheer up.
- A23. hand waving.
- A27. jump up.
- A28. make a phone call/answer phone.
- A31. pointing to something with finger.
- A32. taking a selfie.
- A35. nod head/bow.
- A39. put the palms together.
- A40. cross hands in front (say stop).
- A45. touch chest (stomachache/heart pain).
- A50. punching/slapping other person.
- A51. kicking other person.
- A52. pushing other person.
- A53. pat on back of other person.
- A54. point finger at the other person.
- A55. hugging other person.
- A56. giving something to other person.
- A57. touch other person's pocket.
- A58. handshaking.
- A59. walking towards each other.
- A60. walking apart from each other.
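For use in code, the grouping can be turned into a lookup table. The list above breaks into two runs, one ending at A49 and one restarting at A5; the second run contains every two-person class (A50-A60), so this sketch assigns it to SMPL-X, following the statement that SMPL-X gives better estimates for multi-person sequences. That assignment is an inference, and `pose_extractor` is a hypothetical helper.

```python
# Grouping transcribed from the class list above. Mapping the run that contains
# the two-person classes (A50-A60) to SMPL-X is an inference, not a documented fact.
EXPOSE_CLASSES = {1, 2, 3, 4, 8, 9, 12, 14, 15, 16, 17, 18, 19, 20, 21, 24, 25, 26,
                  29, 30, 33, 34, 36, 37, 38, 41, 42, 43, 44, 46, 47, 48, 49}
SMPLX_CLASSES = {5, 6, 7, 10, 11, 13, 22, 23, 27, 28, 31, 32, 35, 39, 40, 45} | set(range(50, 61))

# Sanity checks: the two groups are disjoint and together cover all 60 classes.
assert EXPOSE_CLASSES.isdisjoint(SMPLX_CLASSES)
assert EXPOSE_CLASSES | SMPLX_CLASSES == set(range(1, 61))


def pose_extractor(action_id: int) -> str:
    """Return the (presumed) pose extractor for an NTU-X action class A1-A60."""
    if action_id in SMPLX_CLASSES:
        return "SMPL-X"
    if action_id in EXPOSE_CLASSES:
        return "ExPose"
    raise ValueError(f"unknown action id: {action_id}")


print(pose_extractor(12), pose_extractor(58))  # ExPose SMPL-X
```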