Official code for the paper Neural ODE Processes (ICLR 2021).

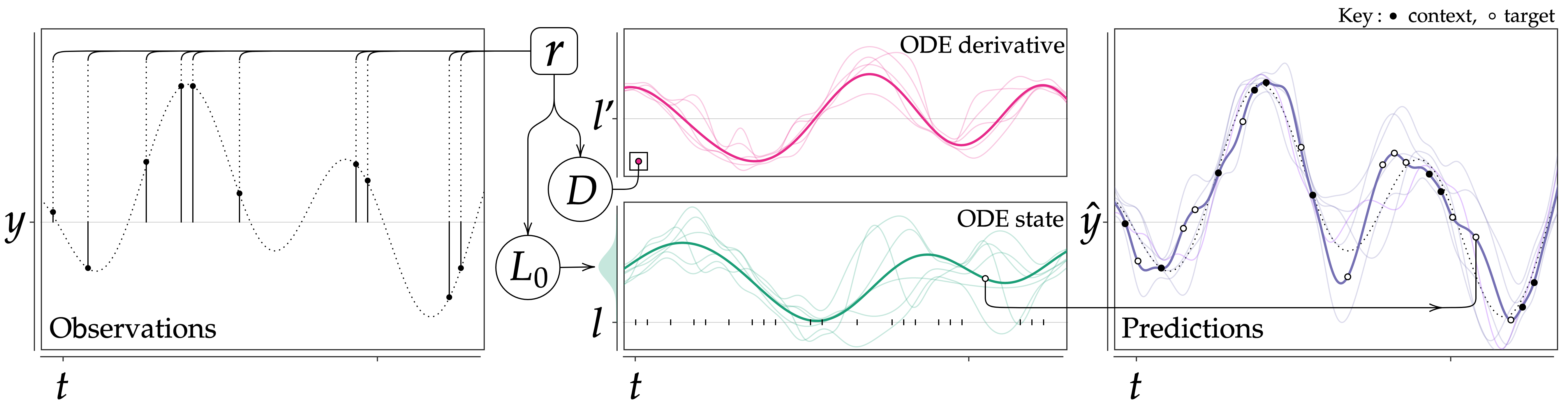

Neural Ordinary Differential Equations (NODEs) use a neural network to model the instantaneous rate of change in the state of a system. However, despite their apparent suitability for dynamics-governed time-series, NODEs present a few disadvantages. First, they are unable to adapt to incoming data-points, a fundamental requirement for real-time applications imposed by the natural direction of time. Second, time-series are often composed of a sparse set of measurements that could be explained by many possible underlying dynamics. NODEs do not capture this uncertainty. In contrast, Neural Processes (NPs) are a new class of stochastic processes providing uncertainty estimation and fast data-adaptation, but lack an explicit treatment of the flow of time. To address these problems, we introduce Neural ODE Processes (NDPs), a new class of stochastic processes determined by a distribution over Neural ODEs. By maintaining an adaptive data-dependent distribution over the underlying ODE, we show that our model can successfully capture the dynamics of low-dimensional systems from just a few data-points. At the same time, we demonstrate that NDPs scale up to challenging high-dimensional time-series with unknown latent dynamics such as rotating MNIST digits.
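The core idea above, a context-conditioned distribution over ODEs, can be illustrated with a toy, dependency-free sketch. This is not the repository's implementation. The functions `encode`, `latent_params`, `dynamics`, `decode`, `euler` and `sample_trajectories` are hypothetical stand-ins for trained networks. Integration uses fixed-step Euler rather than an adaptive ODE solver. The sketch only shows the structure. Context points (t_i, y_i) are averaged into an order-invariant representation. That representation parameterises Gaussians over an initial latent state `z0` and a dynamics variable `d`. Each sample of (`z0`, `d`) defines one ODE, and its solution is decoded into one trajectory.

```python
import math
import random
from statistics import fmean, pstdev


def encode(context):
    """Aggregate context points (t_i, y_i) into one representation r.

    Toy stand-in for a learned encoder: averaging makes r independent of the
    order of the points; the count n is kept so uncertainty can depend on it.
    """
    mean_t = fmean(t for t, _ in context)
    mean_y = fmean(y for _, y in context)
    return mean_t, mean_y, len(context)


def latent_params(r):
    """Map r to (mean, std) pairs for the initial state z0 and dynamics variable d.

    Hand-picked, untrained maps (hypothetical); a real model learns these.
    """
    mean_t, mean_y, n = r
    std = 1.0 / math.sqrt(n)  # assumption: more context -> narrower Gaussians
    return (mean_y, std), (-0.5 - 0.1 * mean_t, std)


def dynamics(z, t, d):
    """Latent vector field dz/dt = f(z, t, d); a linear toy instead of an MLP."""
    return d * z


def decode(z, t):
    """Map a latent state to an observation (identity in this toy)."""
    return z


def euler(f, z0, times, substeps=100):
    """Solve dz/dt = f(z, t) from times[0] with fixed-step Euler.

    Returns the state at every entry of `times` (which must be ascending).
    """
    states, z = [z0], z0
    for t0, t1 in zip(times, times[1:]):
        h, t = (t1 - t0) / substeps, t0
        for _ in range(substeps):
            z += h * f(z, t)
            t += h
        states.append(z)
    return states


def sample_trajectories(context, query_times, n_samples=5, seed=0):
    """Draw n_samples trajectories: one (z0, d) sample -> one ODE -> one curve."""
    rng = random.Random(seed)
    (z0_mu, z0_sd), (d_mu, d_sd) = latent_params(encode(context))
    trajectories = []
    for _ in range(n_samples):
        z0 = rng.gauss(z0_mu, z0_sd)
        d = rng.gauss(d_mu, d_sd)
        latent = euler(lambda z, t, d=d: dynamics(z, t, d), z0, query_times)
        trajectories.append([decode(z, t) for z, t in zip(latent, query_times)])
    return trajectories


context = [(0.0, 1.0), (0.5, 0.7), (1.0, 0.5)]
times = [0.0, 0.5, 1.0, 1.5, 2.0]  # times[0] is when the initial state z0 applies
few = sample_trajectories(context[:1], times)
more = sample_trajectories(context, times)
# Spread of the sampled initial states; it shrinks once more points are observed.
print(pstdev(tr[0] for tr in few), pstdev(tr[0] for tr in more))
```

Drawing several samples yields a set of plausible trajectories rather than a single curve. Because the assumed standard deviation falls as context points are added, the samples spread less as data arrives. This is a crude analogue of the uncertainty estimation and data-adaptation described in the abstract.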

If you use this code, please cite the paper:

```bibtex
@inproceedings{norcliffe2021neural,
  title={Neural {ODE} Processes},
  author={Alexander Norcliffe and Cristian Bodnar and Ben Day and Jacob Moss and Pietro Li{\`o}},
  booktitle={International Conference on Learning Representations},
  year={2021},
  url={https://openreview.net/forum?id=27acGyyI1BY}
}
```

For development, we used Python 3.8.5 and PyTorch 1.8. First, install PyTorch and torchvision following the instructions on the official PyTorch website. Then run the following command to install the required packages:

```
pip install -r requirements.txt
```

To run the 1D regression experiments, use one of the following commands:

```
python -m main.1d_regression --model ndp --exp_name ndp_sine --data sine --epochs 30
python -m main.1d_regression --model ndp --exp_name ndp_exp --data exp --epochs 30
python -m main.1d_regression --model ndp --exp_name ndp_linear --data linear --epochs 30
python -m main.1d_regression --model ndp --exp_name ndp_oscil --data oscil --epochs 30
```

To run the 2D regression experiments, use one of the following:

```
python -m main.2d_regression --model ndp --exp_name ndp_lv --data deterministic_lv --epochs 100
python -m main.2d_regression --model ndp --exp_name ndp_hw --data handwriting --epochs 100
```

To run the high-dimensional regression experiments, use:

```
python -m main.img_regression --model ndp --exp_name ndp_vrm --data VaryRotMNIST --use_y0 --epochs 50
python -m main.img_regression --model ndp --exp_name ndp_rr --data RotMNIST --use_y0 --epochs 50
```

To use the rotating MNIST datasets, run the script below to download the required data:

```
bash data/download_datasets.sh
```

Our code relies heavily on the Neural Process implementation by Emilien Dupont. The RotMNIST dataset code is adapted from the ODE2VAE code.
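All the experiment commands listed above share one shape. Each is `python -m main.<script> --model ndp --exp_name <name> --data <dataset> --epochs <n>`, and the image experiments add `--use_y0`. A small launcher can run a chosen subset in sequence. The sketch below is hypothetical and not part of the repository. It uses only the modules, datasets and flags shown in this README. It assumes it is run from the repository root after installing the requirements. For the image experiments, the data must also have been downloaded first. By default it only prints the commands (`dry_run=True`).

```python
import shlex
import subprocess
import sys

# exp_name -> (module, dataset, epochs, extra flags), copied from the commands above.
EXPERIMENTS = {
    "ndp_sine": ("main.1d_regression", "sine", 30, []),
    "ndp_exp": ("main.1d_regression", "exp", 30, []),
    "ndp_linear": ("main.1d_regression", "linear", 30, []),
    "ndp_oscil": ("main.1d_regression", "oscil", 30, []),
    "ndp_lv": ("main.2d_regression", "deterministic_lv", 100, []),
    "ndp_hw": ("main.2d_regression", "handwriting", 100, []),
    "ndp_vrm": ("main.img_regression", "VaryRotMNIST", 50, ["--use_y0"]),
    "ndp_rr": ("main.img_regression", "RotMNIST", 50, ["--use_y0"]),
}


def build_command(exp_name):
    """Return the argv list for one experiment, in the same flag order as the README."""
    module, data, epochs, extra = EXPERIMENTS[exp_name]
    return [sys.executable, "-m", module, "--model", "ndp",
            "--exp_name", exp_name, "--data", data, *extra,
            "--epochs", str(epochs)]


def run_all(exp_names, dry_run=True):
    """Run experiments one after another; with dry_run, only print the commands."""
    for name in exp_names:
        cmd = build_command(name)
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing run


if __name__ == "__main__":
    run_all(EXPERIMENTS)  # iterating the dict yields the experiment names
```

Using `sys.executable` keeps the launched runs in the same Python environment as the launcher. `check=True` makes a failed run raise `CalledProcessError` instead of silently continuing.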