Tiange Luo*, Chris Rockwell*, Honglak Lee†, Justin Johnson† (*Equal contribution †Equal Advising)

The data is available for download on Hugging Face. It includes 1,002,422 descriptive captions for 3D objects in Objaverse and a subset of Objaverse-XL, produced by our two works, Cap3D and DiffuRank. We also provide 1,002,422 point clouds and rendered images (with camera, depth, and MatAlpha information) for the captioned objects. 3D-caption pairs for ABO are listed here.
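The sketch below shows one way to load caption data into a dictionary keyed by object UID. It assumes the captions come as a header-less, two-column CSV of `(uid, caption)` rows. That layout, the function name `load_captions`, and the file name `captions.csv` are all hypothetical, so check the actual files on Hugging Face and adjust the parsing to match.

```python
import csv
import io
from typing import Dict, TextIO


def load_captions(f: TextIO) -> Dict[str, str]:
    """Parse (uid, caption) rows into a dict keyed by object UID.

    Assumption: a header-less, two-column CSV. Adapt this if the
    downloaded file uses a different layout.
    """
    captions: Dict[str, str] = {}
    for row in csv.reader(f):
        if len(row) < 2:
            continue  # skip blank or malformed lines
        uid, caption = row[0].strip(), row[1].strip()
        captions[uid] = caption
    return captions


# Reading from disk (hypothetical file name):
# with open("captions.csv", newline="", encoding="utf-8") as fh:
#     captions = load_captions(fh)

# Self-contained demo with made-up rows:
sample = io.StringIO(
    'abc123,"A red wooden chair with four legs."\n'
    'def456,"A small green cactus in a clay pot."\n'
)
captions = load_captions(sample)
print(len(captions))
```

Using `csv.reader` instead of splitting on commas keeps captions that contain commas intact, as long as they are quoted in the file.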

Our 3D captioning code is in the captioning_pipeline folder, and the text-to-3D folder contains code for evaluating and fine-tuning text-to-3D models. Some newer code (e.g., rendering) is included in DiffuRank.

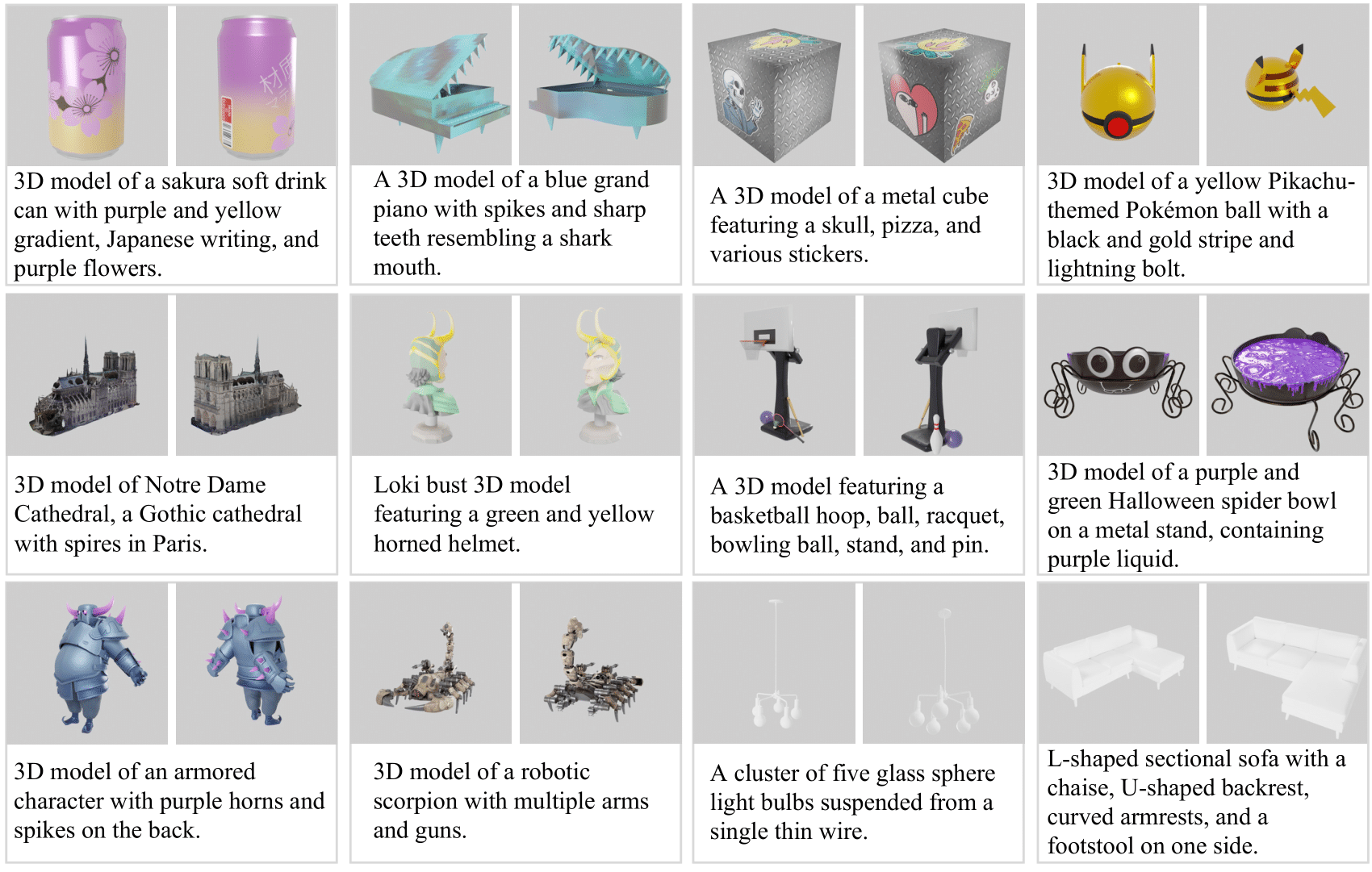

Cap3D produces detailed descriptions of 3D objects by using pretrained captioning, image-text alignment, and large language models (LLMs) to consolidate information from multiple views.
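The three stages named above (captioning, alignment, LLM consolidation) can be sketched as a small function over rendered views. This is a model-agnostic illustration, not the code in captioning_pipeline. The function name `caption_object`, its parameters, and the default of five candidate captions per view are assumptions. The concrete models are passed in as callables, and the demo uses toy stubs in place of real models.

```python
from typing import Any, Callable, List, Sequence

View = Any  # placeholder for a rendered image of the object


def caption_object(
    views: Sequence[View],
    captioner: Callable[[View, int], List[str]],
    alignment_score: Callable[[View, str], float],
    consolidate: Callable[[List[str]], str],
    candidates_per_view: int = 5,  # assumed value, for illustration only
) -> str:
    """Hypothetical sketch: caption each view, keep the best-aligned
    candidate per view, then merge the per-view captions into one."""
    per_view_best: List[str] = []
    for view in views:
        # 1) Captioning: propose several candidate captions for this view.
        candidates = captioner(view, candidates_per_view)
        # 2) Alignment: keep the candidate that scores highest against the view.
        best = max(candidates, key=lambda c: alignment_score(view, c))
        per_view_best.append(best)
    # 3) LLM consolidation: merge per-view captions into one description.
    return consolidate(per_view_best)


# Toy stubs standing in for real captioning, alignment, and LLM models.
def fake_captioner(view: View, n: int) -> List[str]:
    return [f"{view} caption {i}" for i in range(n)]


def fake_score(view: View, caption: str) -> float:
    return 1.0 if caption.endswith("2") else 0.0


def fake_consolidate(captions: List[str]) -> str:
    return " | ".join(captions)


result = caption_object(["front", "side"], fake_captioner, fake_score, fake_consolidate)
print(result)
```

Selecting one caption per view before consolidation means the LLM sees a short, filtered input instead of every candidate from every view.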

If you find our code or data useful, please consider citing:

@article{luo2023scalable,
  title={Scalable 3D Captioning with Pretrained Models},
  author={Luo, Tiange and Rockwell, Chris and Lee, Honglak and Johnson, Justin},
  journal={arXiv preprint arXiv:2306.07279},
  year={2023}
}

@article{luo2024view,
  title={View Selection for 3D Captioning via Diffusion Ranking},
  author={Luo, Tiange and Johnson, Justin and Lee, Honglak},
  journal={arXiv preprint arXiv:2404.07984},
  year={2024}
}

If you use our captions for Objaverse objects, please cite:

@inproceedings{deitke2023objaverse,
  title={Objaverse: A universe of annotated 3d objects},
  author={Deitke, Matt and Schwenk, Dustin and Salvador, Jordi and Weihs, Luca and Michel, Oscar and VanderBilt, Eli and Schmidt, Ludwig and Ehsani, Kiana and Kembhavi, Aniruddha and Farhadi, Ali},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={13142--13153},
  year={2023}
}

If you use our captions for ABO objects, please cite:

@inproceedings{collins2022abo,
  title={{ABO}: Dataset and benchmarks for real-world 3d object understanding},
  author={Collins, Jasmine and Goel, Shubham and Deng, Kenan and Luthra, Achleshwar and Xu, Leon and Gundogdu, Erhan and Zhang, Xi and Vicente, Tomas F Yago and Dideriksen, Thomas and Arora, Himanshu and others},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={21126--21136},
  year={2022}
}

This work is supported by two grants from LG AI Research and Grant #1453651 from NSF. Thanks to Kaiyi Li for his technical support. Thanks to Mohamed El Banani, Karan Desai, and Ang Cao for their many helpful suggestions. Thanks to Matt Deitke for helping with Objaverse-related questions. Thanks to Haochen for helping fix incorrect renderings.

We also thank the following open-source projects: