By Haoran Bai, Di Kang, Haoxian Zhang, Jinshan Pan, and Linchao Bao

In CVPR 2023 [Paper: https://arxiv.org/abs/2211.13874]

Rendering demos [YouTube video]

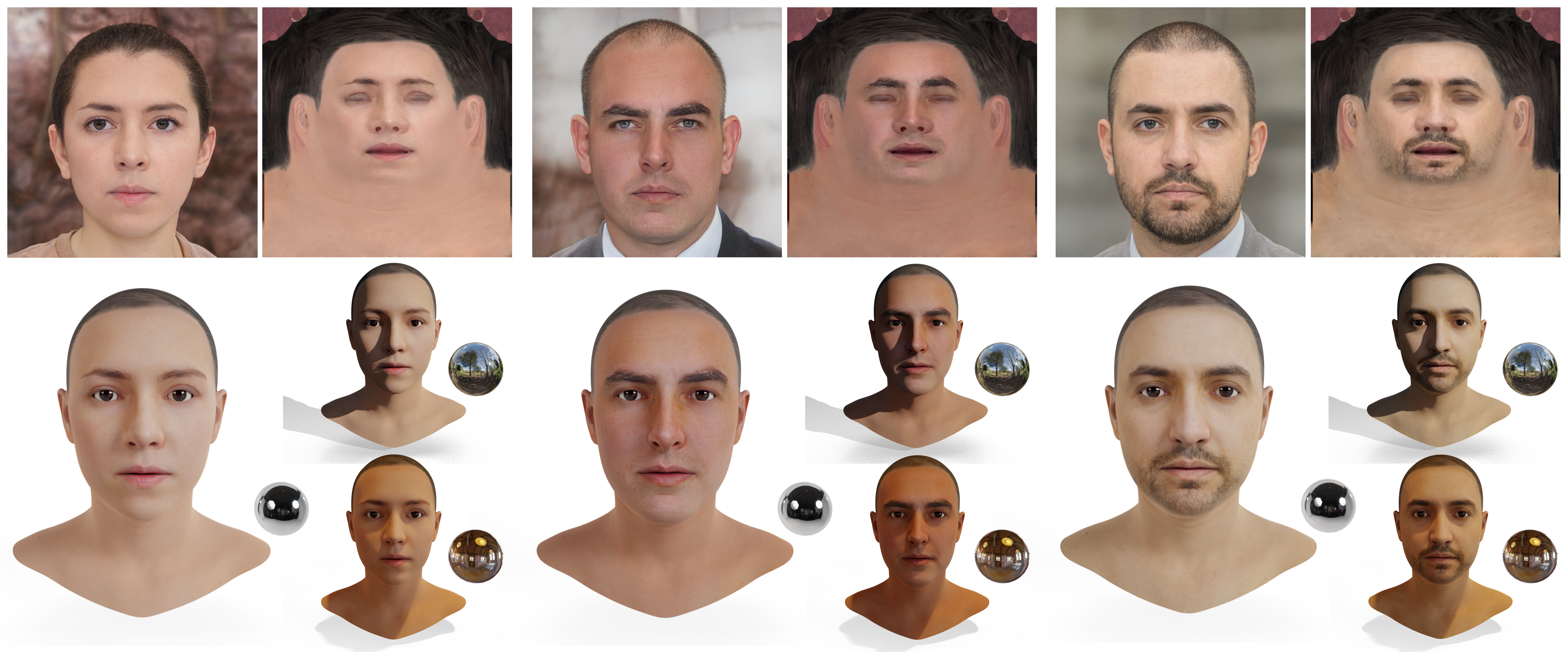

FFHQ-UV is a large-scale facial UV-texture dataset that contains over 50,000 high-quality texture UV-maps with even illumination, neutral expressions, and cleaned facial regions, which are desirable characteristics for rendering realistic 3D face models under different lighting conditions.

The dataset is derived from FFHQ, a large-scale face image dataset, using our fully automatic and robust UV-texture production pipeline. The pipeline uses recent advances in StyleGAN-based facial image editing to generate multi-view normalized face images from single-image inputs. An elaborate UV-texture extraction, correction, and completion procedure is then applied to produce high-quality UV-maps from the normalized face images. Compared with existing UV-texture datasets, our dataset has more diverse and higher-quality texture maps.

[2023-07-11] A solution for using our UV-texture maps on a FLAME mesh is available [here].

[2023-07-10] A more detailed description and a new version of the RGB fitting process are available [here].

[2023-07-10] A more detailed description of the facial UV-texture dataset creation pipeline is available [here].

[2023-03-17] The source code for adding eyeballs to a head mesh is available [here].

[2023-03-16] The project details of the FFHQ-UV dataset creation pipeline are released [here].

[2023-03-16] The OneDrive download link was updated and the file structures have been reorganized.

[2023-02-28] This paper will appear in CVPR 2023.

[2023-01-19] The source code is available; see [here] for a quick start.

[2022-12-16] The OneDrive download link is available.

[2022-12-16] The AWS CloudFront download link is offline.

[2022-12-06] The script for generating face images from latent codes is available.

[2022-12-02] The latent codes of the multi-view normalized face images are available.

[2022-12-02] The FFHQ-UV-Interpolate dataset is available.

[2022-12-01] The FFHQ-UV dataset is available [here].

[2022-11-28] The paper is available [here].

- Linux + Anaconda

- CUDA 10.0 + CUDNN 7.6.0

- Python 3.7

- dlib:

pip install dlib

- PyTorch 1.7.1:

pip install torch==1.7.1 torchvision==0.8.2 torchaudio==0.7.2

- TensorBoard:

pip install tensorboard

- TensorFlow 1.15.0:

pip install tensorflow-gpu==1.15.0

- MS Face API:

pip install --upgrade azure-cognitiveservices-vision-face

- Other packages:

pip install tqdm scikit-image opencv-python pillow imageio matplotlib mxnet Ninja google-auth google-auth-oauthlib click requests pyspng imageio-ffmpeg==0.4.3 scikit-learn torchdiffeq==0.0.1 flask kornia==0.2.0 lmdb psutil dominate rtree

- Important: OpenCV's version needs to be higher than 4.5, otherwise it will not work well.

- PyTorch3D and Nvdiffrast:

mkdir thirdparty

cd thirdparty

git clone https://github.com/facebookresearch/iopath

git clone https://github.com/facebookresearch/fvcore

git clone https://github.com/facebookresearch/pytorch3d

git clone https://github.com/NVlabs/nvdiffrast

conda install -c bottler nvidiacub

pip install -e iopath

pip install -e fvcore

pip install -e pytorch3d

pip install -e nvdiffrast
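After installing the dependencies above, a quick sanity check can save a failed run later. The following is a minimal sketch, not part of this repository: the helper names (`version_tuple`, `at_least`, `missing_modules`) are hypothetical, and the OpenCV requirement is interpreted here as "4.5 or later", which is an assumption about the note above.

```python
import importlib.util
import re


def version_tuple(version):
    """Turn '4.5.5.64' or '1.7.1+cu110' into a tuple of leading integers."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def at_least(version, minimum):
    """Return True if `version` is the same as or newer than `minimum`."""
    return version_tuple(version) >= version_tuple(minimum)


def missing_modules(names):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Import names of the main dependencies listed in this README.
    required = ["cv2", "dlib", "torch", "tensorflow", "pytorch3d", "nvdiffrast"]
    print("missing modules:", missing_modules(required))

    # Only check the OpenCV version if OpenCV is actually installed.
    if not missing_modules(["cv2"]):
        import cv2
        print("OpenCV new enough:", at_least(cv2.__version__, "4.5"))
```

An empty "missing modules" list only shows that the packages can be located; it does not verify CUDA support or that the compiled extensions load correctly.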

- Please refer to this [README] to download the dataset and learn more.

- Please refer to this [README] for details of checkpoints and topology assets.

- Please refer to this [README] for details of running the facial UV-texture dataset creation pipeline.

- The Microsoft Face API is not accessible to new users. You can use an alternative API, or manually fill in the json file to skip this step.

- We provide the detected facial attributes of the FFHQ dataset we used; details can be found here.

- Please refer to this [README] for details of running the RGB fitting process.

- We provide a new version of RGB fitting, where the FFHQ-UV-Interpolate dataset is used and the GAN-based texture decoder only generates facial textures. More details can be found here.

- Prepare a head mesh with HiFi3D++ topology that does not contain eyeballs.

- Modify the configuration and then run the following script to add eyeballs to the head mesh.

sh run_mesh_add_eyeball.sh # Please refer to this script for detailed configuration
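To illustrate what adding eyeballs to a mesh involves at the data level, here is a minimal sketch of merging two triangle meshes. It is not the method used by `run_mesh_add_eyeball.sh`, which is not described here; the function name `merge_meshes` and the tiny example geometry are hypothetical. The sketch only shows the index bookkeeping: eyeball face indices must be shifted by the number of head vertices.

```python
def merge_meshes(head_vertices, head_faces, eye_vertices, eye_faces):
    """Append eyeball geometry to a head mesh.

    Vertices are (x, y, z) tuples; faces are tuples of 0-based vertex indices.
    """
    # Eyeball vertices are appended after the head vertices,
    # so every eyeball face index moves up by this offset.
    offset = len(head_vertices)
    vertices = list(head_vertices) + list(eye_vertices)
    shifted = [tuple(index + offset for index in face) for face in eye_faces]
    faces = list(head_faces) + shifted
    return vertices, faces


# Toy example: one triangle standing in for the head, one for an eyeball.
head_v = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
head_f = [(0, 1, 2)]
eye_v = [(0.2, 0.2, 0.1), (0.3, 0.2, 0.1), (0.2, 0.3, 0.1)]
eye_f = [(0, 1, 2)]

merged_v, merged_f = merge_meshes(head_v, head_f, eye_v, eye_f)
print(len(merged_v), merged_f)
```

A real pipeline would also need to position and scale the eyeballs inside the eye sockets and assign their texture coordinates, which this sketch leaves out.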

There are two ways to generate a UV-texture map from a given facial image:

- Facial editing + texture unwrapping (Section 3.1 of the paper)

- RGB fitting (Section 4.2 of the paper)

- The FFHQ-UV dataset is created from the FFHQ dataset using facial editing + texture unwrapping.

- See the source code of the facial UV-texture dataset creation pipeline, which includes GAN inversion, attribute detection, StyleGAN-based editing, and texture unwrapping steps.

- Advantages:

- The generated textures are extracted directly from facial images, so they are detailed and of high quality.

- Disadvantages:

- The GAN inversion step may fail for some samples.

- The attribute detection step requires the Microsoft Face API, which is no longer accessible to new users.

- The StyleGAN-based editing may change the identity of the faces, resulting in textures with low fidelity.

- RGB fitting is the proposed 3D face reconstruction algorithm, which uses the FFHQ-UV dataset to train a nonlinear texture basis.

- See the source code of RGB fitting.

- Advantages:

- The generated textures are fitted under the supervision of the input faces, so they have high fidelity.

- Disadvantages:

- The textures are generated by a GAN-based texture decoder, sometimes with less detail than texture maps in the FFHQ-UV dataset. This is mainly due to the limitations of the nonlinear texture basis design and training.

- Future work will improve the generation capabilities of the nonlinear texture basis, in order to take full advantage of the high-quality and detailed UV texture maps in the FFHQ-UV dataset.

- We provide a solution for using our UV-texture maps on a FLAME mesh; please refer to this [README] for details.

@InProceedings{Bai_2023_CVPR,

title={FFHQ-UV: Normalized Facial UV-Texture Dataset for 3D Face Reconstruction},

author={Bai, Haoran and Kang, Di and Zhang, Haoxian and Pan, Jinshan and Bao, Linchao},

booktitle={IEEE Conference on Computer Vision and Pattern Recognition},

month={June},

year={2023}

}

This implementation builds upon the awesome work of Tov et al. (e4e), Zhou et al. (DPR), Abdal et al. (StyleFlow), and Karras et al. (StyleGAN2, StyleGAN2-ADA-PyTorch, FFHQ).

This work is based on the HiFi3D++ topology and was supported by Tencent AI Lab.