By Haoran Bai, Jinshan Pan, Xinguang Xiang, and Jinhui Tang

[2022-1-23] Inference logs are available!

[2022-1-23] Training code is available!

[2022-1-23] Pre-trained models are available!

[2022-1-23] Testing code is available!

[2022-1-23] Paper is available!

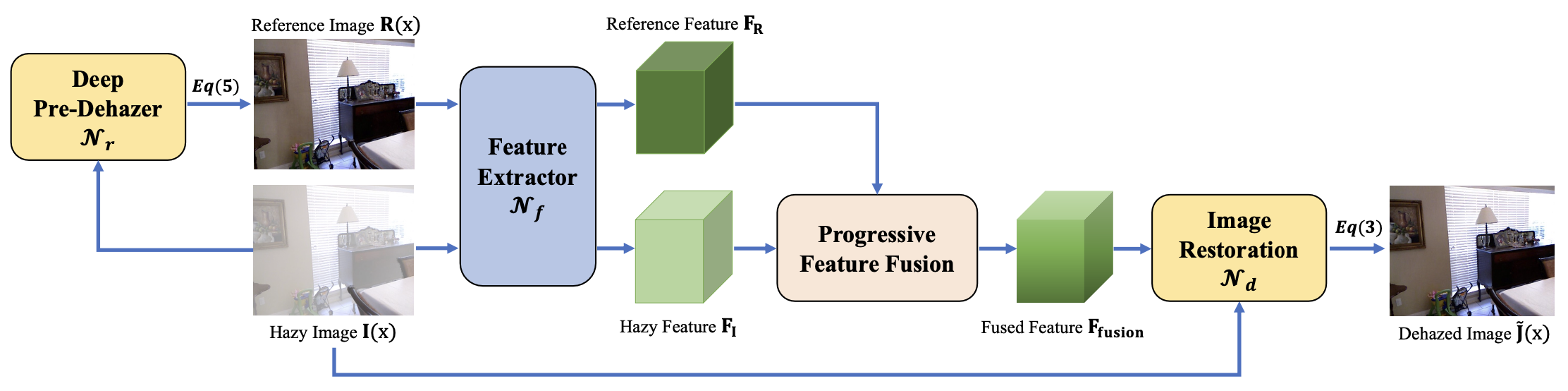

We propose an effective image dehazing algorithm which explores useful information from the input hazy image itself as the guidance for the haze removal. The proposed algorithm first uses a deep pre-dehazer to generate an intermediate result, and takes it as the reference image due to the clear structures it contains. To better explore the guidance information in the generated reference image, it then develops a progressive feature fusion module to fuse the features of the hazy image and the reference image. Finally, the image restoration module takes the fused features as input to use the guidance information for better clear image restoration. All the proposed modules are trained in an end-to-end fashion, and we show that the proposed deep pre-dehazer with progressive feature fusion module is able to help haze removal. Extensive experimental results show that the proposed algorithm performs favorably against state-of-the-art methods on the widely-used dehazing benchmark datasets as well as real-world hazy images.

More detailed analysis and experimental results are included in [Paper].

- Linux (Tested on Ubuntu 18.04)

- Python 3 (Anaconda is recommended)

- PyTorch 0.4.1: `conda install pytorch=0.4.1 torchvision cudatoolkit=9.2 -c pytorch`

- numpy: `conda install numpy`

- matplotlib: `conda install matplotlib`

- opencv: `conda install opencv`

- imageio: `conda install imageio`

- skimage: `conda install scikit-image`

- tqdm: `conda install tqdm`
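
After installing the packages above, a quick check that they are importable can save a failed run later. The following is a minimal sketch, not part of this repository: the helper name `missing_modules` is hypothetical, and the list uses the usual import names (`cv2` for opencv, `skimage` for scikit-image, `torch` for PyTorch).

```python
import importlib.util

# Import names for the packages listed above. These differ from the conda
# package names in a few cases: opencv -> cv2, scikit-image -> skimage,
# pytorch -> torch.
REQUIRED_MODULES = [
    "torch", "torchvision", "numpy", "matplotlib",
    "cv2", "imageio", "skimage", "tqdm",
]


def missing_modules(names):
    """Return the names in `names` that cannot be located for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All listed dependencies are importable.")
```

This only checks that each module can be found; it does not verify versions such as PyTorch 0.4.1.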

- Pretrained models and Datasets can be downloaded from [Here].

- If you have downloaded the pretrained models, please put them in './pretrained_models'.

- If you have downloaded the datasets, please put them in './dataset'.

If you prepare your own dataset, please organize it as follows:

    |--dataset
        |--clear
            |--image 1
            |--image 2
                :
            |--image n
        |--hazy
            |--image 1
            |--image 2
                :
            |--image n
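
Before training or testing on your own data, it may help to confirm that the folder layout above is in place. The sketch below is an illustration only: `summarize_dataset` is a hypothetical helper, not a function from this repository, and it only lists the files found under `clear` and `hazy`. How the code pairs hazy and clear images (by name or by order) is not stated here, so the sketch makes no assumption about it.

```python
import os


def summarize_dataset(root):
    """List the files found under root/clear and root/hazy.

    Returns a dict mapping "clear" and "hazy" to sorted lists of file names.
    Raises FileNotFoundError if either sub-folder is missing.
    """
    summary = {}
    for sub in ("clear", "hazy"):
        folder = os.path.join(root, sub)
        if not os.path.isdir(folder):
            raise FileNotFoundError("expected folder: " + folder)
        # Keep regular files only; nested folders are ignored.
        summary[sub] = sorted(
            name for name in os.listdir(folder)
            if os.path.isfile(os.path.join(folder, name))
        )
    return summary
```

For example, `summarize_dataset("./dataset")` returns the two file lists, so comparing `len(result["clear"])` with `len(result["hazy"])` gives a first sanity check.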

- Download the training dataset from [Here], or prepare your own dataset in the form described above.

- Run the following commands to pretrain the deep pre-dehazer:

      cd ./code
      python main.py --template Pre_Dehaze

- After the deep pre-dehazer has been pretrained, put the trained model in './pretrained_models' and name it 'pretrain_pre_dehaze_net.pt'.

- Run the following command to train the image dehazing model:

      python main.py --template ImageDehaze_SGID_PFF
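
The step of placing the pretrained pre-dehazer under the expected name could be scripted as shown below. This is a sketch under assumptions: `install_pre_dehazer` is a hypothetical helper, and `trained_model_path` is a placeholder, because the location where training writes its checkpoint is not stated here. Only the destination folder and file name come from the steps above.

```python
import os
import shutil


def install_pre_dehazer(trained_model_path,
                        dest_dir="./pretrained_models",
                        dest_name="pretrain_pre_dehaze_net.pt"):
    """Copy a trained pre-dehazer checkpoint to the expected location.

    `trained_model_path` is wherever your training run saved the model
    (a placeholder; this sketch does not know that path).
    Returns the destination path.
    """
    os.makedirs(dest_dir, exist_ok=True)
    dest = os.path.join(dest_dir, dest_name)
    shutil.copyfile(trained_model_path, dest)
    return dest
```

Copying rather than moving keeps the original checkpoint in place in case the pretraining run needs to be inspected later.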

- Download the pretrained models from [Here].

- Download the testing dataset from [Here].

- Run the following commands:

      cd ./code
      python inference.py --quick_test SOTS_indoor
      # --quick_test: the results from the paper that you want to reproduce. Options: SOTS_indoor, SOTS_outdoor

- The dehazed result will be in './infer_results'.

- Download the pretrained models from [Here].

- Organize your dataset like the above form.

- Run the following commands:

      cd ./code
      python inference.py --data_path path/to/hazy/images --gt_path path/to/gt/images --model_path path/to/pretrained/model --infer_flag my_test
      # --data_path: the path to the hazy images in your dataset.
      # --gt_path: the path to the ground-truth (GT) images in your dataset.
      # --model_path: the path to the downloaded pretrained model.
      # --infer_flag: a name (flag) for this inference run.

- The dehazed result will be in './infer_results'.
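
When running inference over several datasets, the two command forms above can be assembled programmatically. The sketch below is illustrative only: the function names are hypothetical, and it uses only the flags documented above (`--quick_test`, `--data_path`, `--gt_path`, `--model_path`, `--infer_flag`). It assumes `inference.py` is launched from the `./code` folder, as in the commands above.

```python
import subprocess
import sys

# Benchmark names accepted by --quick_test, as listed above.
QUICK_TESTS = ("SOTS_indoor", "SOTS_outdoor")


def build_quick_test_cmd(name):
    """Build the argument list for a quick test on one benchmark."""
    if name not in QUICK_TESTS:
        raise ValueError("unknown quick test: " + name)
    return [sys.executable, "inference.py", "--quick_test", name]


def build_custom_cmd(data_path, gt_path, model_path, infer_flag):
    """Build the argument list for inference on your own dataset."""
    return [
        sys.executable, "inference.py",
        "--data_path", data_path,
        "--gt_path", gt_path,
        "--model_path", model_path,
        "--infer_flag", infer_flag,
    ]


def run(cmd, code_dir="./code"):
    """Run a command from the code folder; raises if it exits non-zero.

    Relative paths in `cmd` are resolved against `code_dir`, matching
    the effect of `cd ./code` in the commands above.
    """
    subprocess.run(cmd, cwd=code_dir, check=True)
```

For example, `for name in QUICK_TESTS: run(build_quick_test_cmd(name))` would run both benchmark tests in sequence.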

@article{bai2022self,
  title = {Self-Guided Image Dehazing Using Progressive Feature Fusion},
  author = {Bai, Haoran and Pan, Jinshan and Xiang, Xinguang and Tang, Jinhui},
  journal = {IEEE Transactions on Image Processing},
  volume = {31},
  pages = {1217--1229},
  year = {2022},
  publisher = {IEEE}
}