#Train first

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=horse2zebra --continue_train=True --print_freq=50

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=hockey2rugby --sample_dir=sampleh2r --continue_train=1

tensorboard --logdir=./logs

#turn to frames

youtube-dl <video_with_horses> -o test.mp4 ( -f 22 for 720p -F for available)

ffmpeg -i test.mp4 -f image2 out%06d.jpg

ffmpeg -i test.mp4 -f image2 out%06d.jpg -vf fps=5

ffmpeg -i myvideo.avi -ss 00:00:10 -vf fps=1/60 img%03d.jpg # frame every minute, strating at 10 s

#Optional scaling 256x256, not sure training images need this...

# but other datasets already were scaled down to 256

ffmpeg -i youtube_out.mp4 -f image2 -vf scale=256:256 out_%06d.jpg

backup horse2zebra/testA

copy frames to horse2zebra/testA

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=horse2zebra --phase=test --test_dir=./test5

#STich together in blender or ffmpeg

cd test5

ffmpeg -i BtoA_image%06d.jpg out.mp4 #make sure no '-' character in name

#voila, watch cycle GAN video

Grab video time slice

ffmpeg -ss 3:59:10 -i $(youtube-dl -f 22 -g 'https://www.youtube.com/watch?v=XXXXXXXX') \

-t 3:06:00

#train with different image size output

#get frames larger than 512

#put in /datasets/your_dataset/ folders trainA trainB testA testB

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=your_dataset --load_size=514 --fine_size=512

ffmpeg -i $(youtube-dl -f 22 -g 'https://www.youtube.com/watch?v=hONkNECf6aE

') rubgy_out.mp4

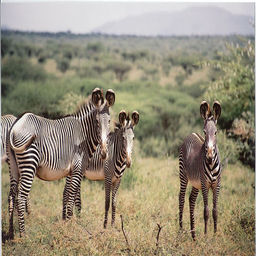

Tensorflow implementation for learning an image-to-image translation without input-output pairs. The method is proposed by Jun-Yan Zhu in Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networkssee. For example in paper:

The results of this implementation:

You can download the pretrained model from this url

and extract the rar file to ./checkpoint/.

- tensorflow r1.1

- numpy 1.11.0

- scipy 0.17.0

- pillow 3.3.0

- Install tensorflow from https://github.com/tensorflow/tensorflow

- Clone this repo:

git clone https://github.com/xhujoy/CycleGAN-tensorflow

cd CycleGAN-tensorflow- Download a dataset (e.g. zebra and horse images from ImageNet):

bash ./download_dataset.sh horse2zebra- Train a model:

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=horse2zebra- Use tensorboard to visualize the training details:

tensorboard --logdir=./logs- Finally, test the model:

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=horse2zebra --phase=test --which_direction=AtoBTo train a model,

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=/path/to/data/ Models are saved to ./checkpoints/ (can be changed by passing --checkpoint_dir=your_dir).

To test the model,

CUDA_VISIBLE_DEVICES=0 python main.py --dataset_dir=/path/to/data/ --phase=test --which_direction=AtoB/BtoADownload the datasets using the following script:

bash ./download_dataset.sh dataset_namefacades: 400 images from the CMP Facades dataset.cityscapes: 2975 images from the Cityscapes training set.maps: 1096 training images scraped from Google Maps.horse2zebra: 939 horse images and 1177 zebra images downloaded from ImageNet using keywordswild horseandzebra.apple2orange: 996 apple images and 1020 orange images downloaded from ImageNet using keywordsappleandnavel orange.summer2winter_yosemite: 1273 summer Yosemite images and 854 winter Yosemite images were downloaded using Flickr API. See more details in our paper.monet2photo,vangogh2photo,ukiyoe2photo,cezanne2photo: The art images were downloaded from Wikiart. The real photos are downloaded from Flickr using combination of tags landscape and landscapephotography. The training set size of each class is Monet:1074, Cezanne:584, Van Gogh:401, Ukiyo-e:1433, Photographs:6853.iphone2dslr_flower: both classe of images were downlaoded from Flickr. The training set size of each class is iPhone:1813, DSLR:3316. See more details in our paper.

- The torch implementation of CycleGAN, https://github.com/junyanz/CycleGAN

- The tensorflow implementation of pix2pix, https://github.com/yenchenlin/pix2pix-tensorflow