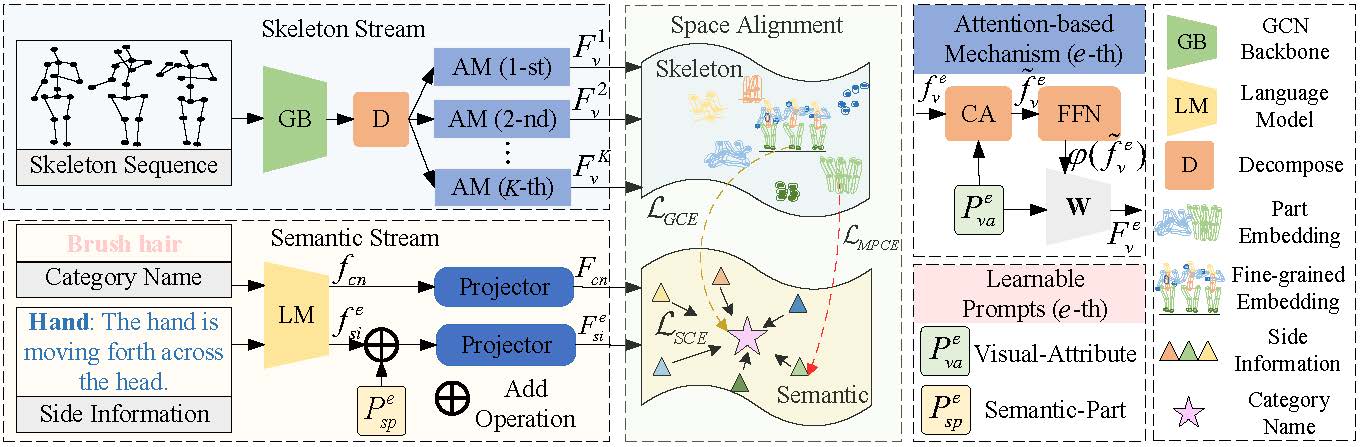

This repo is the official implementation of "Fine-Grained Side Information Guided Dual-Prompts for Zero-Shot Skeleton Action Recognition". The paper has been accepted to ACM MM 2024.

Note: We conduct extensive experiments on three datasets under both the zero-shot learning (ZSL) and generalized zero-shot learning (GZSL) settings.
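The difference between the two settings can be illustrated with a minimal sketch. In ZSL a test sample is classified among the unknown classes only, while in GZSL the candidate set also contains the known classes. The function names and toy scores below are hypothetical and are not taken from this repo. The harmonic mean is the summary commonly reported for GZSL; that this repo computes it in exactly this way is an assumption.

```python
def predict(scores, candidate_classes):
    """Return the candidate class with the highest score."""
    return max(candidate_classes, key=lambda c: scores[c])


def accuracy(all_scores, labels, candidate_classes):
    """Fraction of samples whose prediction within the candidates is correct."""
    correct = sum(
        predict(scores, candidate_classes) == label
        for scores, label in zip(all_scores, labels)
    )
    return correct / len(labels)


def harmonic_mean(acc_known, acc_unknown):
    """Harmonic mean of known-class and unknown-class accuracy."""
    if acc_known + acc_unknown == 0:
        return 0.0
    return 2 * acc_known * acc_unknown / (acc_known + acc_unknown)


# Toy setup (assumption): 4 classes, classes 0-1 are known, 2-3 are unknown.
known, unknown = [0, 1], [2, 3]

# Similarity scores of one test sample whose true label is the unknown class 2.
scores = [0.9, 0.1, 0.6, 0.2]

zsl_prediction = predict(scores, unknown)           # candidates: unknown only
gzsl_prediction = predict(scores, known + unknown)  # candidates: all classes
```

With these toy scores the ZSL prediction is correct, while the GZSL prediction drifts to a known class, which is the bias that makes GZSL the harder setting.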

- Python >= 3.6

- PyTorch >= 1.1.0
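A quick way to confirm that an environment meets these minimum versions is sketched below. The helper names (`parse_version`, `check_environment`) are hypothetical and are not part of this repo; the check simply reports PyTorch as missing if it cannot be imported.

```python
import re
import sys

MIN_PYTHON = (3, 6)
MIN_TORCH = (1, 1, 0)


def parse_version(text):
    """Turn a string such as '2.1.0+cu118' into a tuple such as (2, 1, 0)."""
    numbers = []
    # Drop any local build suffix after '+', then keep the first three fields.
    for piece in text.split("+")[0].split(".")[:3]:
        match = re.match(r"\d+", piece)
        numbers.append(int(match.group()) if match else 0)
    return tuple(numbers)


def check_environment():
    """Return (python_ok, torch_ok) for the minimum versions listed above."""
    python_ok = sys.version_info[:2] >= MIN_PYTHON
    try:
        import torch
        torch_ok = parse_version(torch.__version__) >= MIN_TORCH
    except ImportError:
        torch_ok = False  # PyTorch is not installed
    return python_ok, torch_ok


if __name__ == "__main__":
    python_ok, torch_ok = check_environment()
    print("Python >= 3.6:", python_ok)
    print("PyTorch >= 1.1.0:", torch_ok)
```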

Download the raw data of the NTU RGB+D 60, NTU RGB+D 120, and PKU MMD II datasets.

For the NTU series datasets, we follow the same data processing procedure as CTR-GCN. For the PKU MMD II dataset, we follow the same data processing procedure as AimCLR.

We run our experiments under the official evaluation settings (cross-subject, cross-view, and cross-set) of the NTU RGB+D 60, NTU RGB+D 120, and PKU-MMD I datasets. For a fair comparison, we re-evaluate previous methods (e.g., SynSE, SMIE) under these settings using their public code and report the updated results.

The official settings do not specify how to partition the categories into known and unknown ones, so for convenience we follow SynSE. For NTU 60, we use the 55/5 and 48/12 splits, which contain 5 and 12 unknown categories, respectively. For NTU 120, we use the 110/10 and 96/24 splits. For PKU-MMD I, we use the 46/5 and 39/12 splits.
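The split notation above (known/unknown) can be made concrete with a small sketch. The helper names are hypothetical, and `placeholder_unseen` simply marks the last few class ids as unknown for illustration; the actual unknown categories follow SynSE and are not reproduced here.

```python
# Total number of classes and unknown-class counts per split, taken from the
# split sizes above (for example 55 + 5 = 60 for NTU 60).
SPLITS = {
    "NTU 60": (60, (5, 12)),
    "NTU 120": (120, (10, 24)),
    "PKU-MMD I": (51, (5, 12)),
}


def placeholder_unseen(num_classes, num_unseen):
    """Placeholder choice (assumption): mark the last class ids as unknown."""
    return list(range(num_classes - num_unseen, num_classes))


def partition_classes(num_classes, unseen_ids):
    """Split class ids into (known, unknown) lists."""
    unseen = sorted(set(unseen_ids))
    if any(c < 0 or c >= num_classes for c in unseen):
        raise ValueError("unknown class id out of range")
    unseen_set = set(unseen)
    seen = [c for c in range(num_classes) if c not in unseen_set]
    return seen, unseen


def split_samples(labels, unseen_ids):
    """Return sample indices for known-class and unknown-class data."""
    unseen_set = set(unseen_ids)
    known_idx = [i for i, y in enumerate(labels) if y not in unseen_set]
    unknown_idx = [i for i, y in enumerate(labels) if y in unseen_set]
    return known_idx, unknown_idx


if __name__ == "__main__":
    for name, (num_classes, unseen_counts) in SPLITS.items():
        for k in unseen_counts:
            seen, unseen = partition_classes(
                num_classes, placeholder_unseen(num_classes, k)
            )
            print(f"{name}: {len(seen)}/{len(unseen)} split")
```

Samples from the known classes are used to train the model, and samples from the unknown classes are held out for zero-shot evaluation.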

For the optimized experimental settings in Table 5, we follow the known/unknown category partition strategies of SMIE.

For convenience, you can download the pretrained Shift-GCN weights from BaiduDisk or Google Drive. Alternatively, you can train the skeleton encoder yourself by following the Shift-GCN procedure.

This repo is based on CTR-GCN and GAP. The data processing is borrowed from CTR-GCN and AimCLR. The baseline methods are from SynSE and SMIE.

Thanks to the original authors for their work!

Please cite this work if you find it useful:

@inproceedings{chen2024star,

title={Fine-Grained Side Information Guided Dual-Prompts for Zero-Shot Skeleton Action Recognition},

author={Chen, Yang and Guo, Jingcai and He, Tian and Lu, Xiaocheng and Wang, Ling},

booktitle={Proceedings of the 32nd ACM International Conference on Multimedia},

pages={778--786},

year={2024}

}