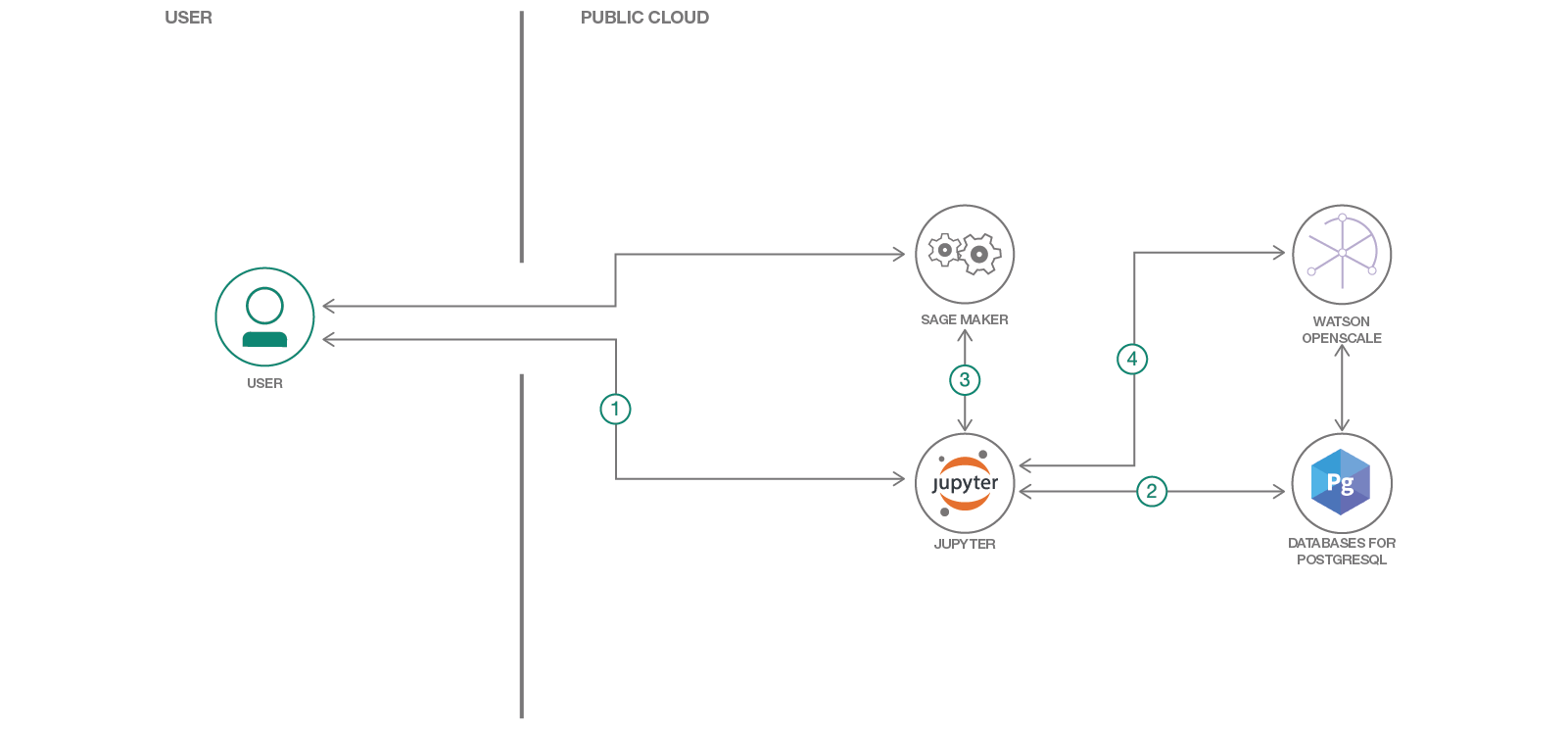

In this Code Pattern, we will use a German Credit dataset to create a logistic regression model using AWS SageMaker. We will use Watson OpenScale to bind the ML model deployed in the AWS cloud, create a subscription, and perform payload and feedback logging.
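A logistic regression model scores a record by passing a weighted sum of its features through the sigmoid function. The minimal pure-Python sketch below shows only that computation. The weights, bias, and 0.5 decision threshold are illustrative assumptions, not values from the trained model. SageMaker's Linear Learner may also choose a different threshold during training.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score(features: Sequence[float], weights: Sequence[float], bias: float,
          threshold: float = 0.5) -> tuple[float, int]:
    """Return (probability, predicted_label) for one record.

    weights, bias, and threshold are hypothetical inputs; a trained
    model supplies its own learned values.
    """
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    z = bias + sum(x * w for x, w in zip(features, weights))
    p = sigmoid(z)
    return p, int(p >= threshold)


# Hypothetical two-feature record: z = 0.5 - 0.5 = 0, so p = 0.5 and label 1
print(score([1.0, 2.0], [0.5, -0.25], 0.0))  # (0.5, 1)
```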

When the reader has completed this Code Pattern, they will understand how to:

- Prepare data, train a model, and deploy it using AWS SageMaker

- Score the model using sample scoring records and the scoring endpoint

- Bind the SageMaker model to the Watson OpenScale Data Mart

- Add subscriptions to the Data Mart

- Enable payload logging and performance monitoring for both subscribed assets

- Use the Data Mart to access table data through the subscription
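Scoring a SageMaker endpoint means sending serialized records in the request body and reading predictions from the response. The sketch below assumes the deployed model is a Linear Learner binary classifier called with `text/csv` input (one record per line, no label column) and that it returns JSON of the form `{"predictions": [{"score": ..., "predicted_label": ...}]}`; check the content types the notebook actually configures. Only serialization and parsing are shown, so the sketch runs offline. Sending the body (for example with boto3's `sagemaker-runtime` client and `invoke_endpoint`) is left out, and the sample response is made up.

```python
import csv
import io
import json
from typing import Iterable, Sequence


def to_csv_body(records: Iterable[Sequence[float]]) -> str:
    """Serialize feature rows as headerless CSV, one record per line."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for row in records:
        writer.writerow(row)
    return buf.getvalue()


def parse_predictions(response_body: str) -> list[tuple[float, int]]:
    """Extract (score, predicted_label) pairs from a JSON response.

    Assumes the Linear Learner binary-classifier layout
    {"predictions": [{"score": ..., "predicted_label": ...}, ...]}.
    """
    payload = json.loads(response_body)
    return [(float(p["score"]), int(p["predicted_label"]))
            for p in payload["predictions"]]


body = to_csv_body([[0.1, 3.0], [1.5, 2.0]])
print(repr(body))  # '0.1,3.0\n1.5,2.0\n'

# A made-up response body, used only to exercise the parser
sample = ('{"predictions": [{"score": 0.83, "predicted_label": 1}, '
          '{"score": 0.12, "predicted_label": 0}]}')
print(parse_predictions(sample))  # [(0.83, 1), (0.12, 0)]
```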

The flow of this pattern is as follows:

- The developer creates a Jupyter Notebook.

- The Jupyter Notebook is connected to a PostgreSQL database, which is used to store Watson OpenScale data.

- An ML model is trained with Amazon SageMaker on data from `credit_risk_training.csv` and then deployed to the cloud.

- The notebook uses Watson OpenScale to log the payload and monitor performance.
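Amazon SageMaker's built-in algorithms, Linear Learner included, expect CSV training data with the label in the first column and no header row. The standard-library sketch below reorders a CSV that way. The label column name `Risk` and its positive value `Risk` are assumptions about `credit_risk_training.csv`, the sample columns are made up, and the sketch assumes the remaining columns are already numeric; categorical columns must be encoded before this step.

```python
import csv
import io
from typing import TextIO


def to_label_first_csv(src: TextIO, dst: TextIO, label_column: str = "Risk",
                       positive_value: str = "Risk") -> int:
    """Rewrite a headered CSV as headerless CSV with a 0/1 label first.

    label_column and positive_value are hypothetical defaults; all other
    columns are copied unchanged and are assumed to be numeric already.
    Returns the number of data rows written.
    """
    reader = csv.DictReader(src)
    if reader.fieldnames is None or label_column not in reader.fieldnames:
        raise ValueError(f"missing label column {label_column!r}")
    features = [c for c in reader.fieldnames if c != label_column]
    writer = csv.writer(dst, lineterminator="\n")
    rows = 0
    for record in reader:
        label = 1 if record[label_column] == positive_value else 0
        writer.writerow([label] + [record[c] for c in features])
        rows += 1
    return rows


# Tiny in-memory example with made-up columns
source = io.StringIO("LoanAmount,Age,Risk\n250,31,No Risk\n4000,45,Risk\n")
target = io.StringIO()
print(to_label_first_csv(source, target))  # 2
print(repr(target.getvalue()))  # '0,250,31\n1,4000,45\n'
```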

To follow this pattern, you need:

- An IBM Cloud account

- IBM Cloud CLI

- IBM Cloud Object Storage (COS)

- An account on AWS SageMaker

Clone the repository and change into its directory:

`git clone https://github.com/IBM/monitor-sagemaker-ml-with-watson-openscale`

`cd monitor-sagemaker-ml-with-watson-openscale`

Create Watson OpenScale, either on IBM Cloud or using your on-premises Cloud Pak for Data.

On IBM Cloud

-

If you do not have an IBM Cloud account, register for an account

-

Create a Watson OpenScale instance from the IBM Cloud catalog

-

Select the Lite (Free) plan, enter a Service name, and click Create.

-

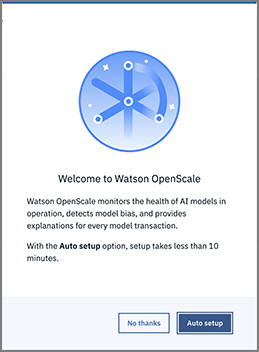

Click Launch Application to start Watson OpenScale.

-

Click Auto setup to automatically set up your Watson OpenScale instance with sample data.

-

Click Start tour to tour the Watson OpenScale dashboard.

On IBM Cloud Pak for Data platform

Note: This assumes that your Cloud Pak for Data Cluster Admin has already installed and provisioned OpenScale on the cluster.

-

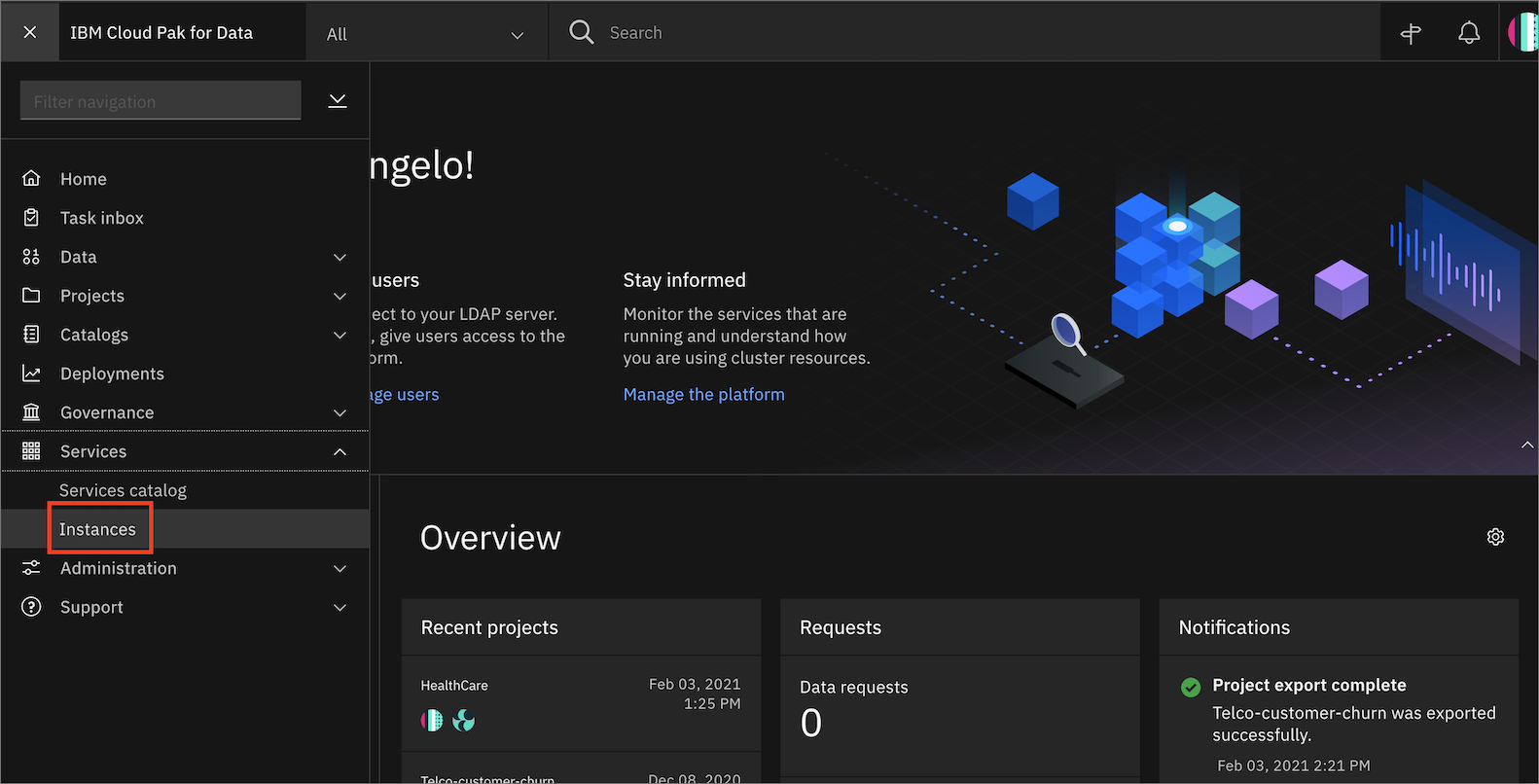

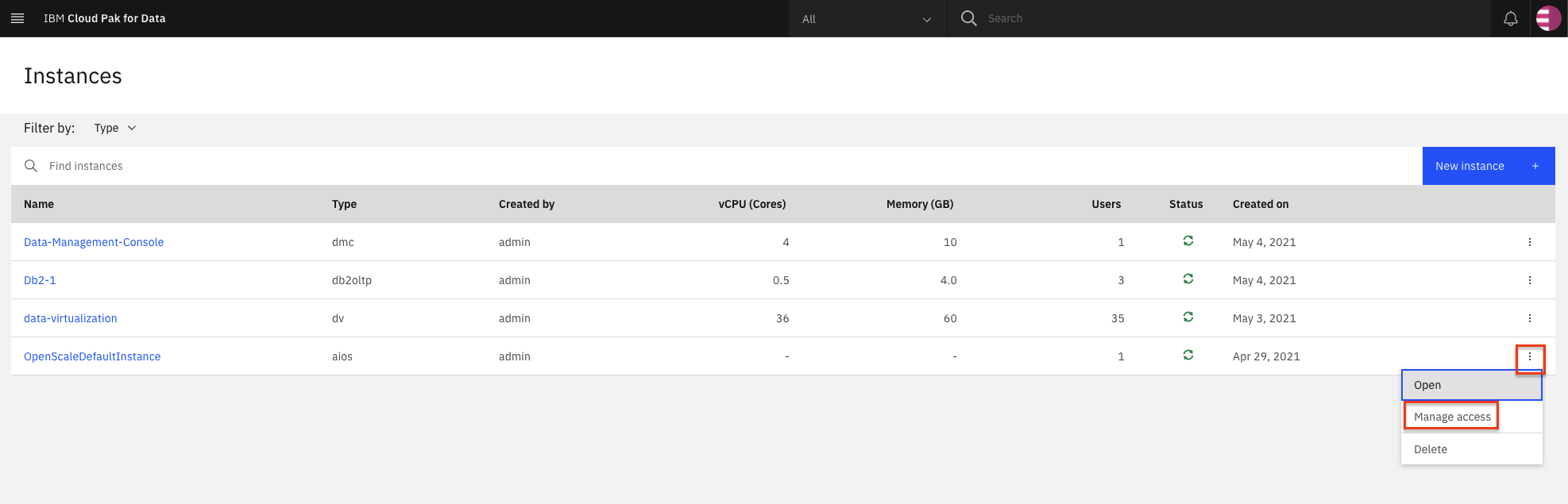

In the Cloud Pak for Data instance, go the (☰) menu and under

Servicessection, click on theInstancesmenu option. -

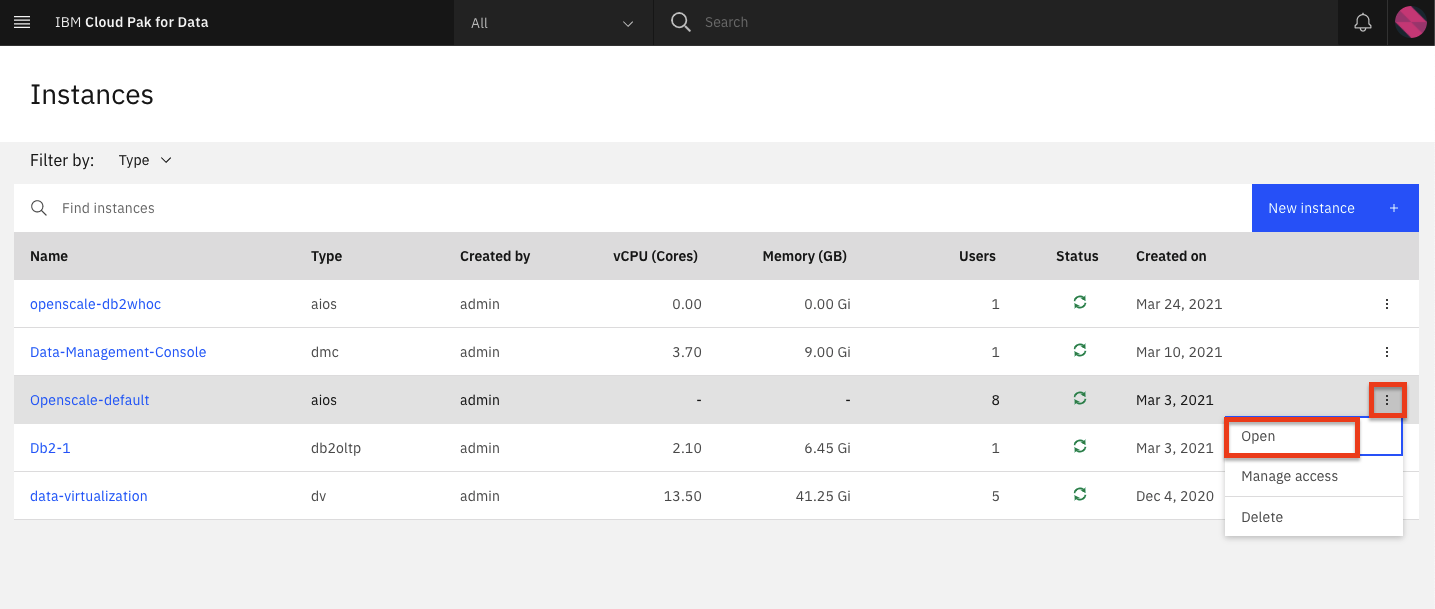

Find the

OpenScale-defaultinstance from the instances table and click the three vertical dots to open the action menu, then click on theOpenoption. -

If you need to give other users access to the OpenScale instance, go the (☰) menu and under

Servicessection, click on theInstancesmenu option. -

Find the

OpenScale-defaultinstance from the instances table and click the three vertical dots to open the action menu, then click on theManage accessoption. -

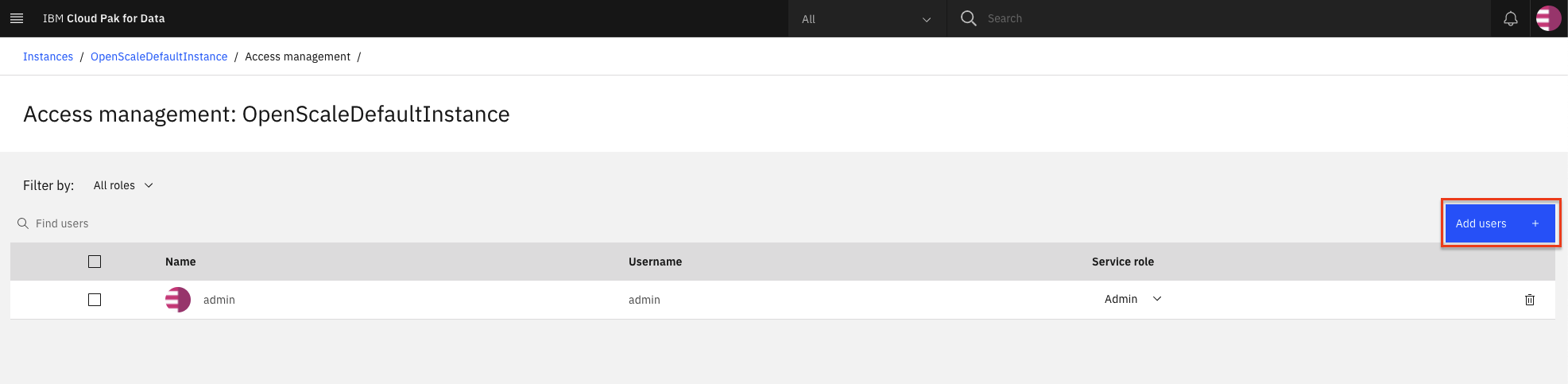

To add users to the service instance, click the

Add usersbutton. -

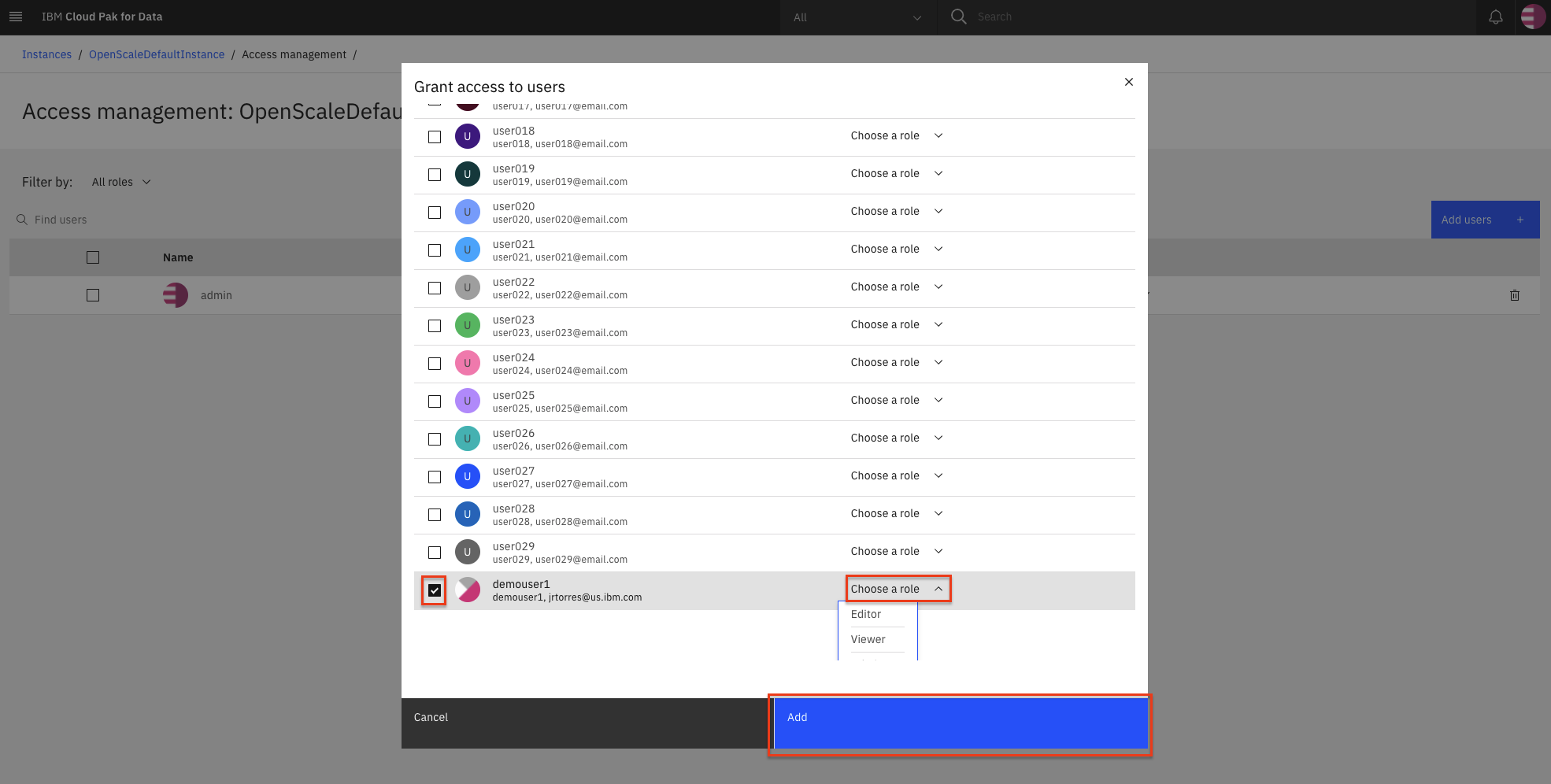

For all of the user accounts, select the

Editorrole for each user and then click theAddbutton.

There are two notebooks for this pattern.

Begin by running `CreditModelSagemakerLinearLearner.ipynb`.

- You will need to setup AWS SageMaker

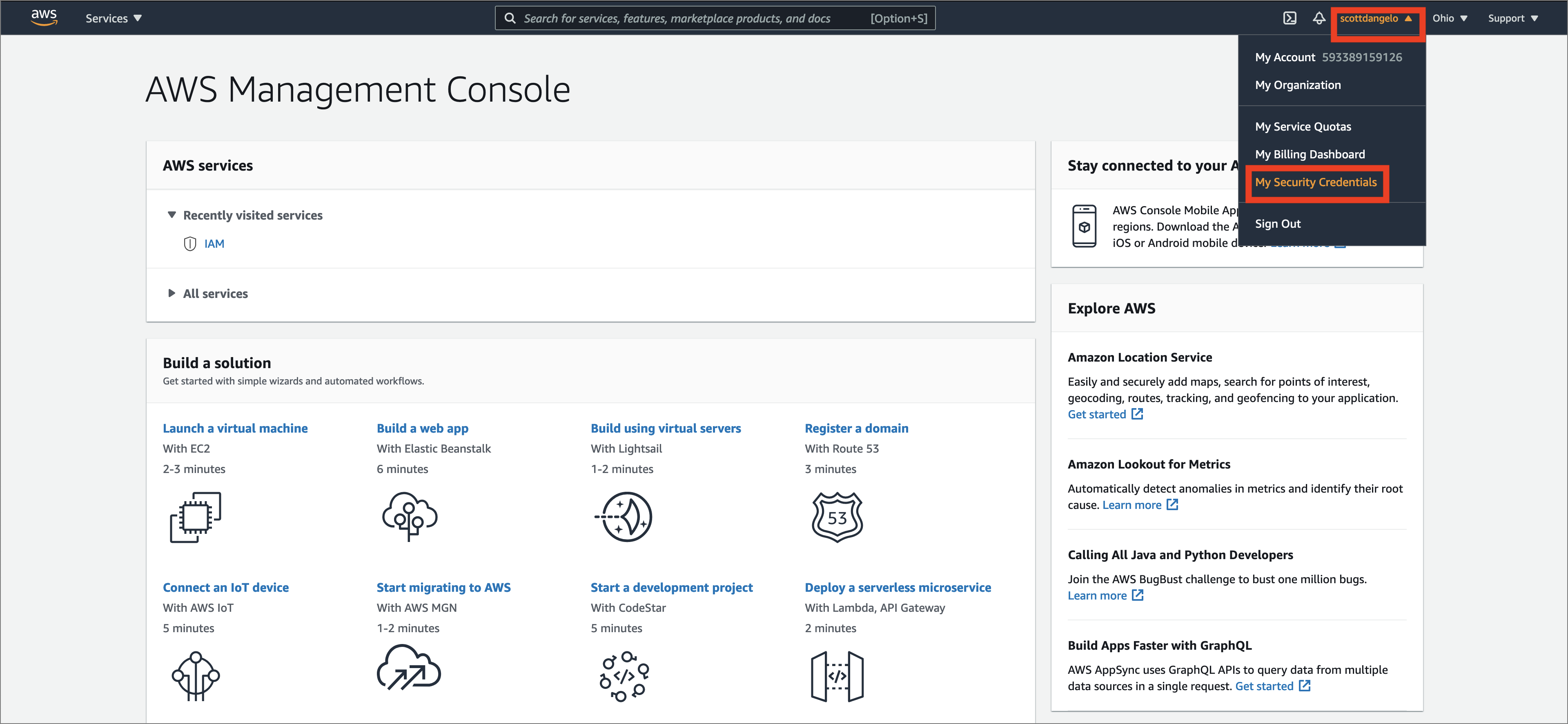

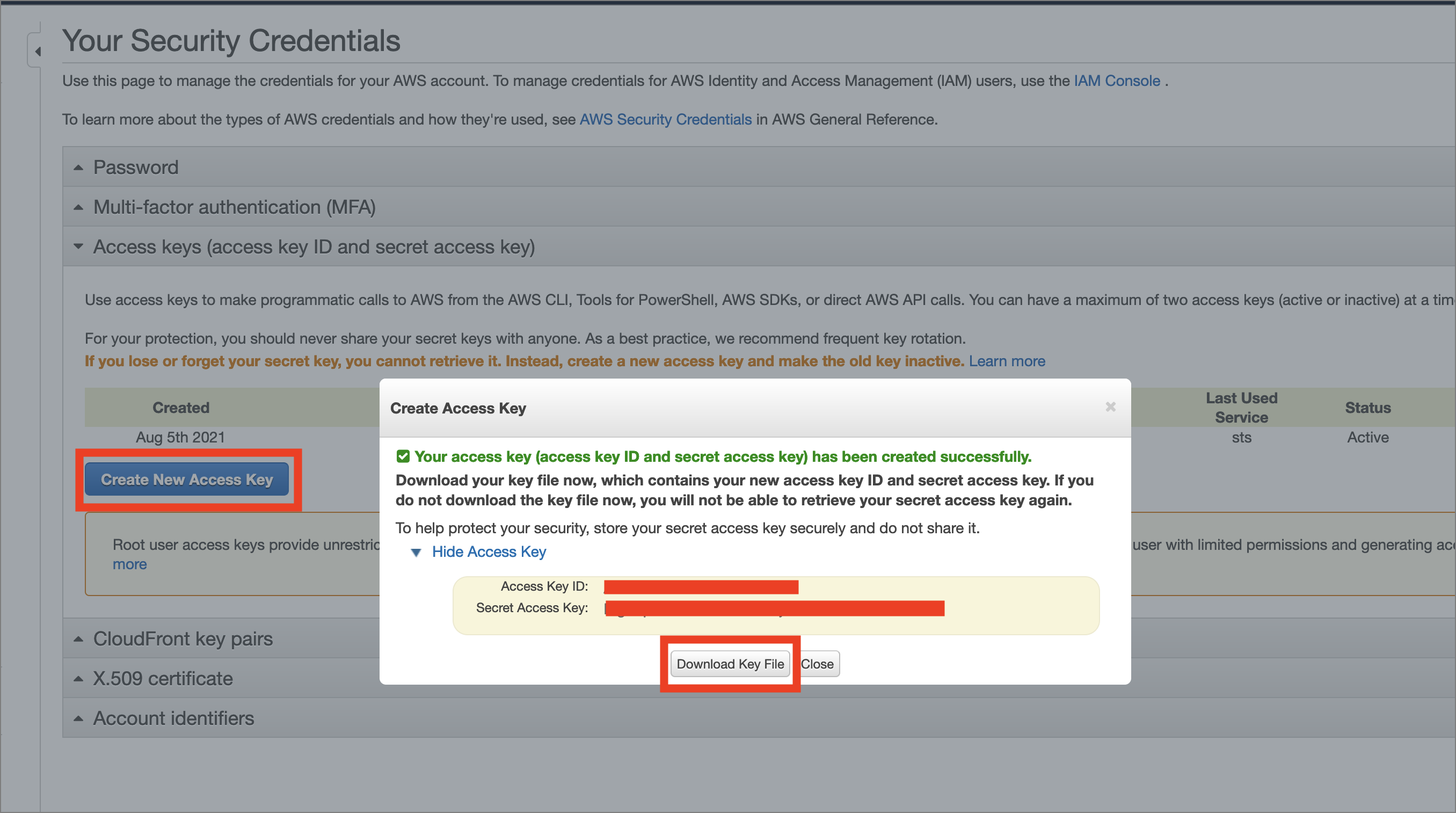

- Get your AWS security credentials from the AWS console by highlighting your logged-in user and going to

My Security Credentials:

- Click

Access keys (access key ID and secret access key)->Create Access Keyand then download the key (and/or copy it from the screen) to obtain both the AWSAccessKeyId and the AWSSecretKey:

-

Insert your AWS credentials after the cell 2.4.1 Add AWS credentials. You can find your region_name from the URL of the AWS console when you are logged in, i.e. my URL is

https://us-east-2.console.aws.amazon.com/console/home?region=us-east-2so my region_name isus-east-2. -

Get your bucket_name by running

2.4.2 Get bucket nameand using the default bucket, or run the cell that follows using[bkt.name for bkt in s3.buckets.all()]to choose another bucket. -

Insert your AWS S3

bucket_nameafter the cell2.4 Create an S3 bucket and use the name in the cell below for bucket_name -

Move your cursor to each code cell and run the code in it. Read the comments for each cell to understand what the code is doing. Important when the code in a cell is still running, the label to the left changes to In [*]:. Do not continue to the next cell until the code is finished running.
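Rather than pasting access keys into a notebook cell, you can read them from the standard AWS environment variables `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`, which keeps them out of the saved notebook file. The sketch below does that, extracts `region_name` from a console URL as described in the steps above, and builds the default bucket name. The helper names are hypothetical, and the `sagemaker-{region}-{account_id}` pattern reflects the SageMaker Python SDK's `Session.default_bucket()` convention; compare it with the bucket the notebook reports.

```python
import os
from typing import Mapping
from urllib.parse import parse_qs, urlparse


def aws_credentials_from_env(env: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Collect AWS keys from the standard environment variables."""
    missing = [k for k in ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY") if not env.get(k)]
    if missing:
        raise KeyError(f"set these environment variables first: {', '.join(missing)}")
    return {
        "aws_access_key_id": env["AWS_ACCESS_KEY_ID"],
        "aws_secret_access_key": env["AWS_SECRET_ACCESS_KEY"],
    }


def region_from_console_url(url: str) -> str:
    """Return the region from an AWS console URL (query string first, then host)."""
    parsed = urlparse(url)
    query_region = parse_qs(parsed.query).get("region")
    if query_region:
        return query_region[0]
    host = parsed.hostname or ""
    # e.g. us-east-2.console.aws.amazon.com -> us-east-2
    if host.endswith(".console.aws.amazon.com"):
        return host.split(".", 1)[0]
    raise ValueError(f"no region found in {url!r}")


def default_sagemaker_bucket(region: str, account_id: str) -> str:
    """Bucket name pattern used by the SageMaker Python SDK's default bucket."""
    return f"sagemaker-{region}-{account_id}"


url = "https://us-east-2.console.aws.amazon.com/console/home?region=us-east-2"
print(region_from_console_url(url))  # us-east-2
```

The returned credential keys match the keyword arguments of `boto3.Session`, so `boto3.Session(**creds, region_name=region)` can build a session from them.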

Next, run `AIOpenscaleSagemakerMLengine.ipynb`.

- Follow the instructions for `ACTION: Get Watson OpenScale instance_guid and apikey` using the IBM Cloud CLI.

  To get an API key using the `ibmcloud` CLI:

  `ibmcloud login --sso`

  `ibmcloud iam api-key-create 'my_key'`

- Enter the `CLOUD_API_KEY` you just created after the cell `1.1.1 Add the IBM Cloud apikey as CLOUD_API_KEY`.

-

In your IBM Cloud Object Storage instance, create a bucket with a globally unique name. The UI will let you know if there is a naming conflict. This will be used in cell 1.3.1 as BUCKET_NAME.

-

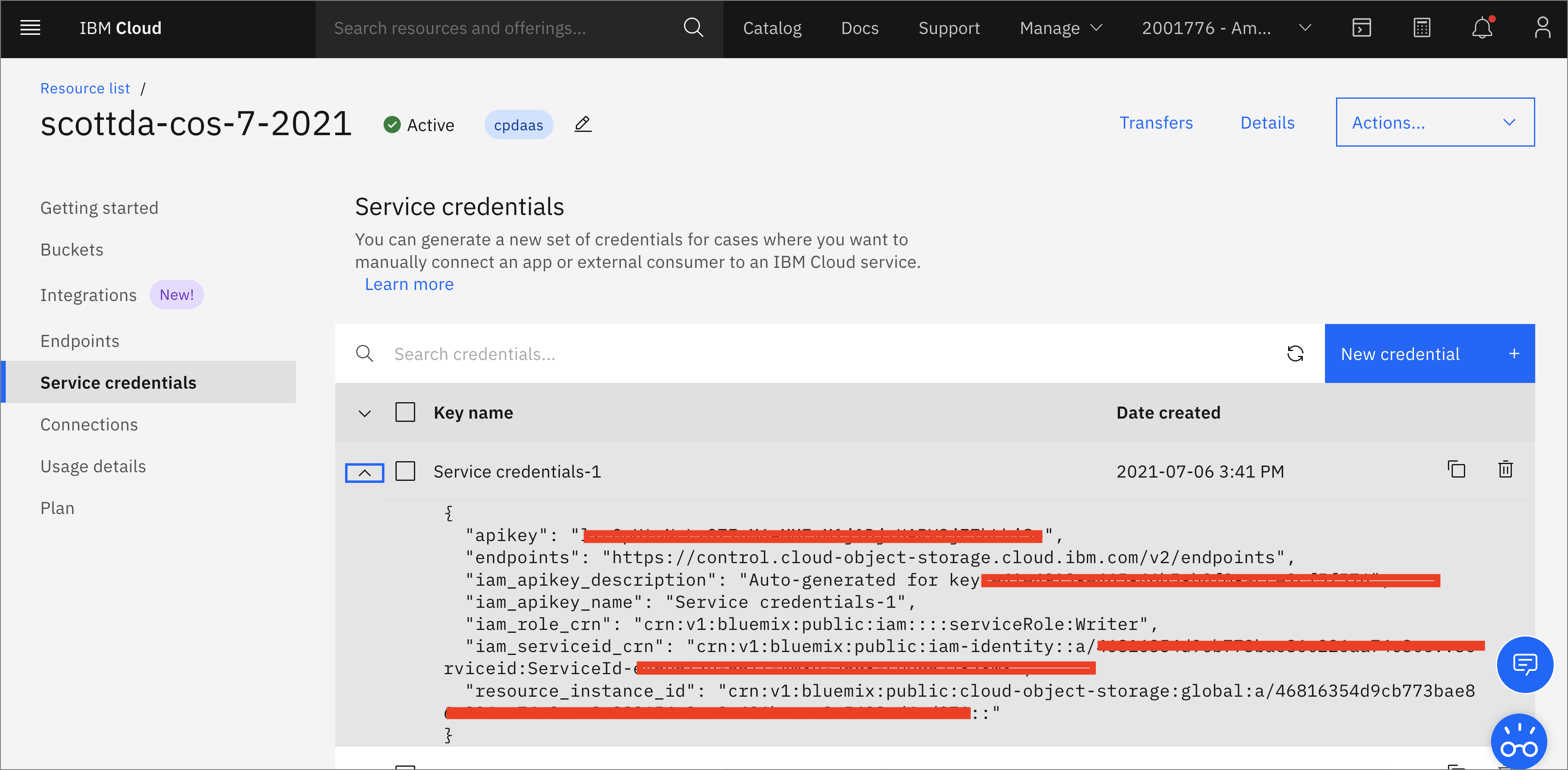

In your IBM Cloud Object Storage instance, get the Service Credentials for use as

COS_API_KEY_ID,COS_RESOURCE_CRN, andCOS_ENDPOINT: -

Add the COS credentials in cell 1.3

-

Insert your BUCKET_NAME in the cell 1.3.1 Add the BUCKET_NAME.

-

In the cell after

2.1 Bind SageMaker machine learning engineenter yourSAGEMAKER_ENGINE_CREDENTIALSusing the keys you obtained in the step above for Get AWS keys. -

After running

3.1 Add subscriptionsyou will get asource_uidto enter in the cell that follows. -

Move your cursor to each code cell and run the code in it. Read the comments for each cell to understand what the code is doing. Important when the code in a cell is still running, the label to the left changes to In [*]:. Do not continue to the next cell until the code is finished running.
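Several of the settings above are copied out of JSON documents: the API key file that `ibmcloud iam api-key-create my_key --file my_key.json` can write, and the COS service credentials. The sketch below pulls those values out with the standard library and checks a bucket name against basic DNS-style rules: 3 to 63 characters, lowercase letters, digits, dots, and hyphens, starting and ending with a letter or digit, no adjacent dots, and not shaped like an IP address. The JSON field names (`apikey`, `resource_instance_id`), the example endpoint, and the `SAGEMAKER_ENGINE_CREDENTIALS` keys (`access_key_id`, `secret_access_key`, `region`) are assumptions to verify against your own files and the notebook. Global uniqueness can only be confirmed by COS itself.

```python
import json
import re
from pathlib import Path

# Approximate DNS-style bucket name rules (checked with fullmatch below)
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def load_cloud_api_key(path: str) -> str:
    """Read the key from a JSON file written by `ibmcloud iam api-key-create --file`."""
    return json.loads(Path(path).read_text())["apikey"]


def cos_settings(service_credentials: dict, endpoint: str) -> dict[str, str]:
    """Map COS service credentials onto the notebook's COS_* variables."""
    return {
        "COS_API_KEY_ID": service_credentials["apikey"],
        "COS_RESOURCE_CRN": service_credentials["resource_instance_id"],
        "COS_ENDPOINT": endpoint,
    }


def is_valid_bucket_name(name: str) -> bool:
    """Check basic naming rules only; this cannot check global uniqueness."""
    return (bool(_BUCKET_RE.fullmatch(name))
            and ".." not in name
            and not _IP_RE.fullmatch(name))


def sagemaker_engine_credentials(access_key_id: str, secret_access_key: str,
                                 region: str) -> dict[str, str]:
    """Hypothetical builder for SAGEMAKER_ENGINE_CREDENTIALS (key names assumed)."""
    return {
        "access_key_id": access_key_id,
        "secret_access_key": secret_access_key,
        "region": region,
    }


# Made-up values shaped like a COS service-credentials document
creds = {"apikey": "abc123",
         "resource_instance_id": "crn:v1:bluemix:public:cloud-object-storage:global:a/xyz::"}
print(cos_settings(creds, "https://s3.us.cloud-object-storage.appdomain.cloud"))
print(is_valid_bucket_name("credit-risk-openscale-demo"))  # True
```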

See the example output for `CreditModelSagemakerLinearLearner-with-outputs.ipynb`.

See the example output for `AIOpenscaleSagemakerMLengine-with-output.ipynb`.

This code pattern is licensed under the Apache License, Version 2. Separate third-party code objects invoked within this code pattern are licensed by their respective providers pursuant to their own separate licenses. Contributions are subject to the Developer Certificate of Origin, Version 1.1 and the Apache License, Version 2.