Hang Guo, Yawei Li, Taolin Zhang, Jiangshan Wang, Tao Dai, Shu-Tao Xia, Luca Benini

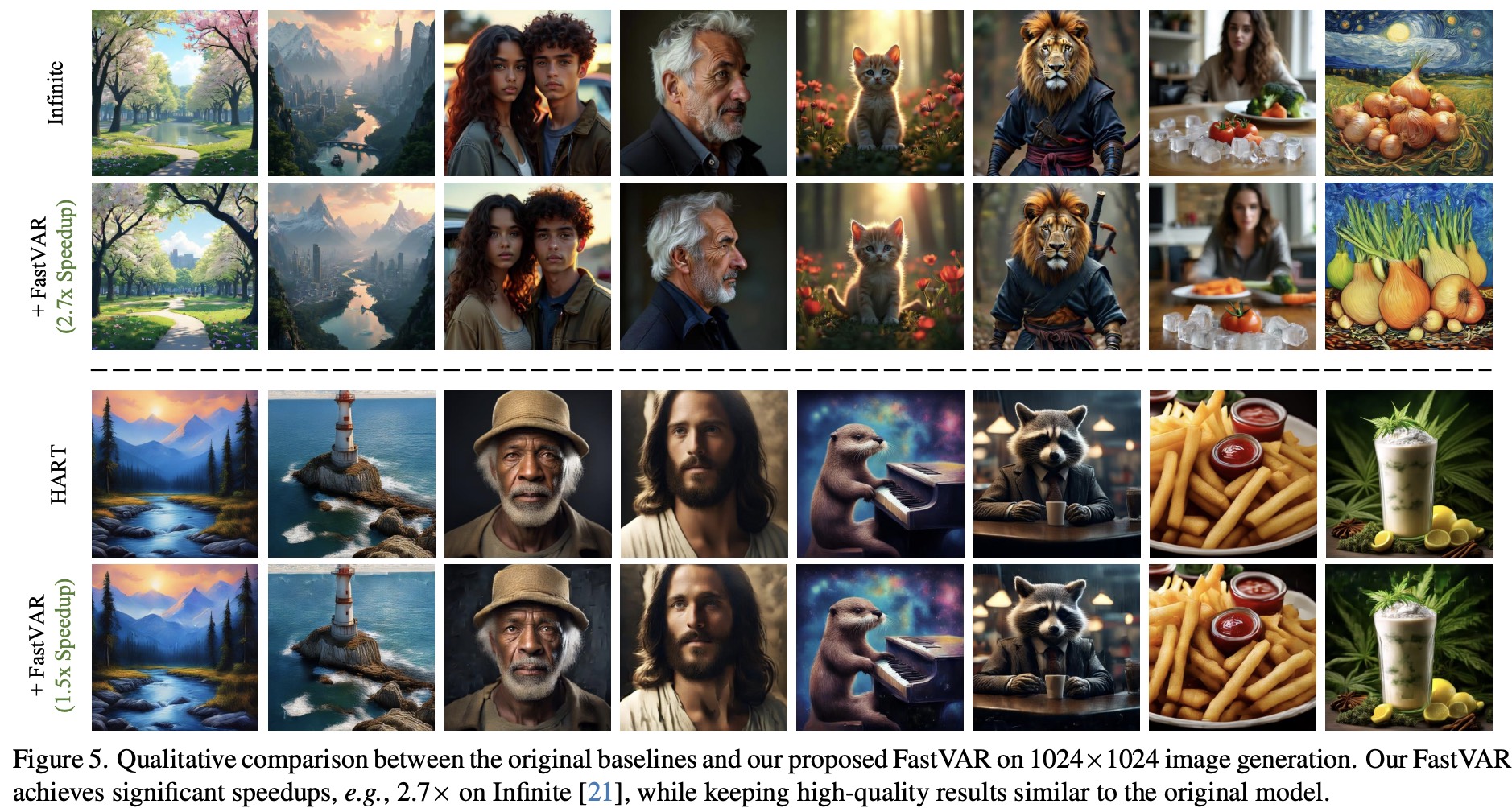

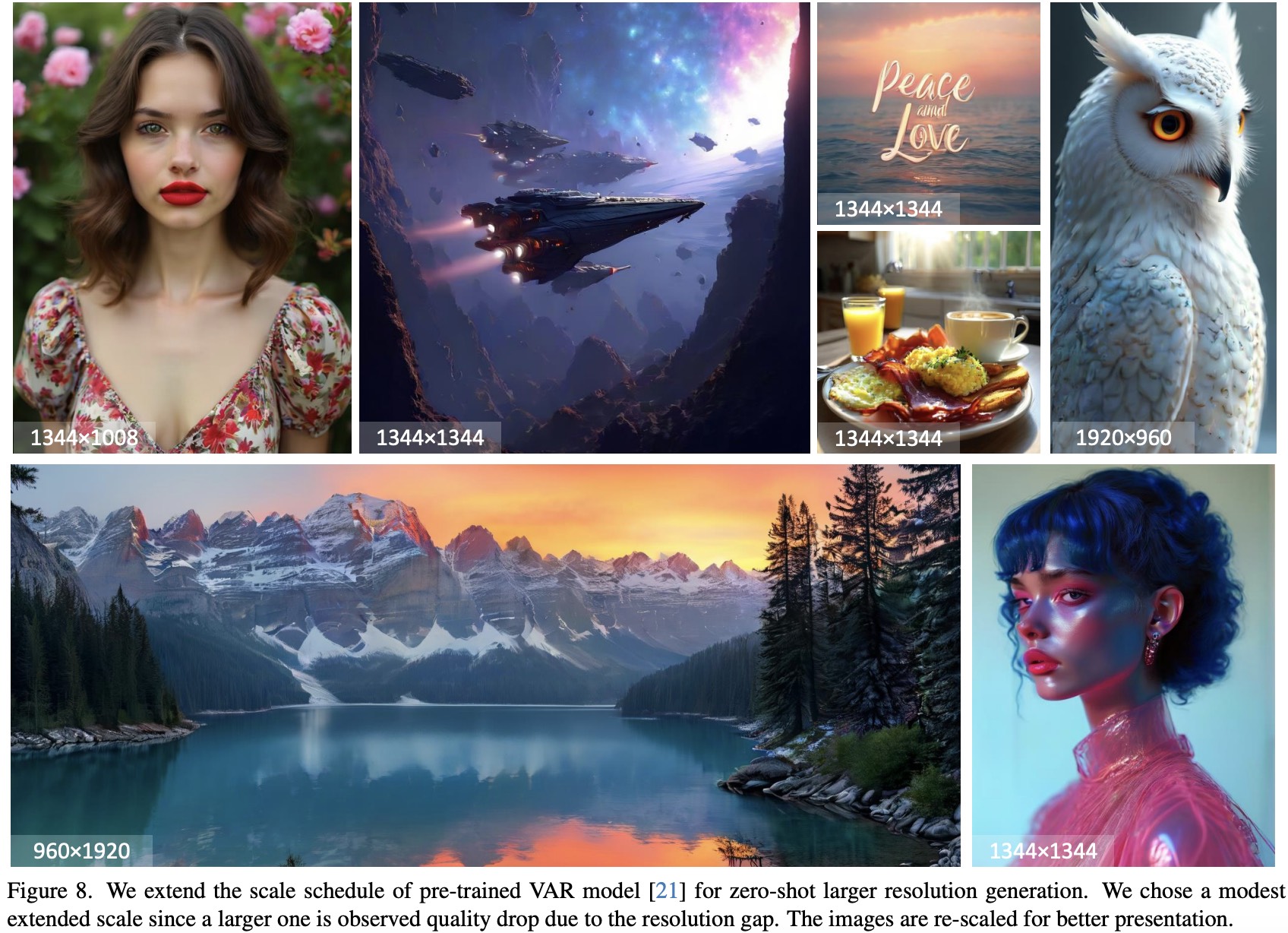

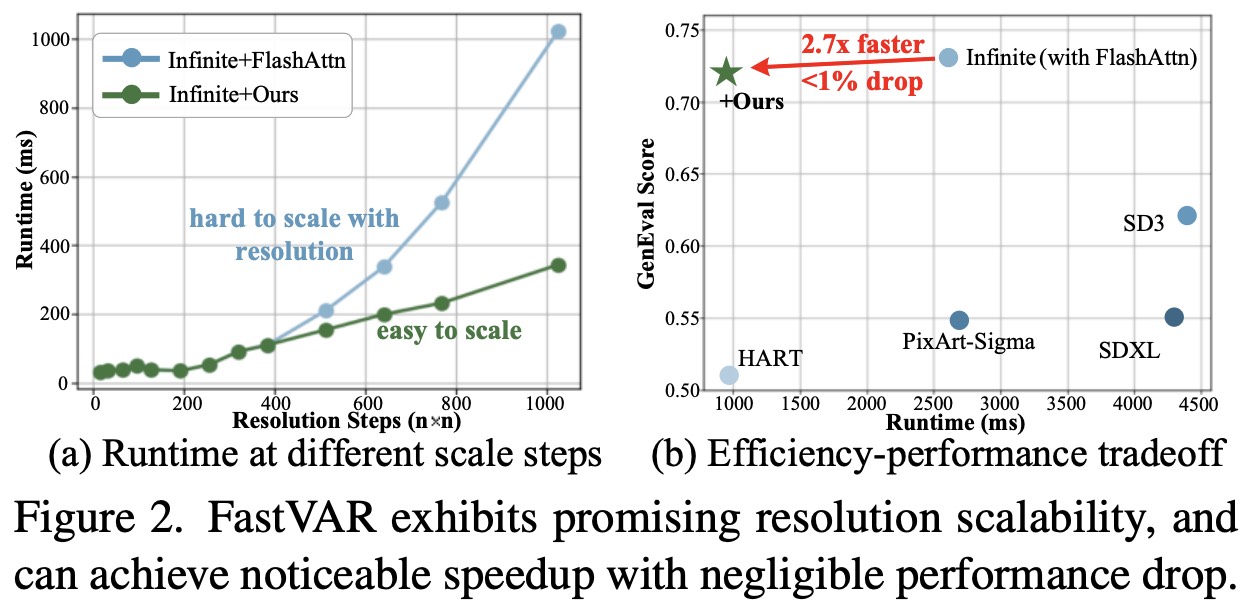

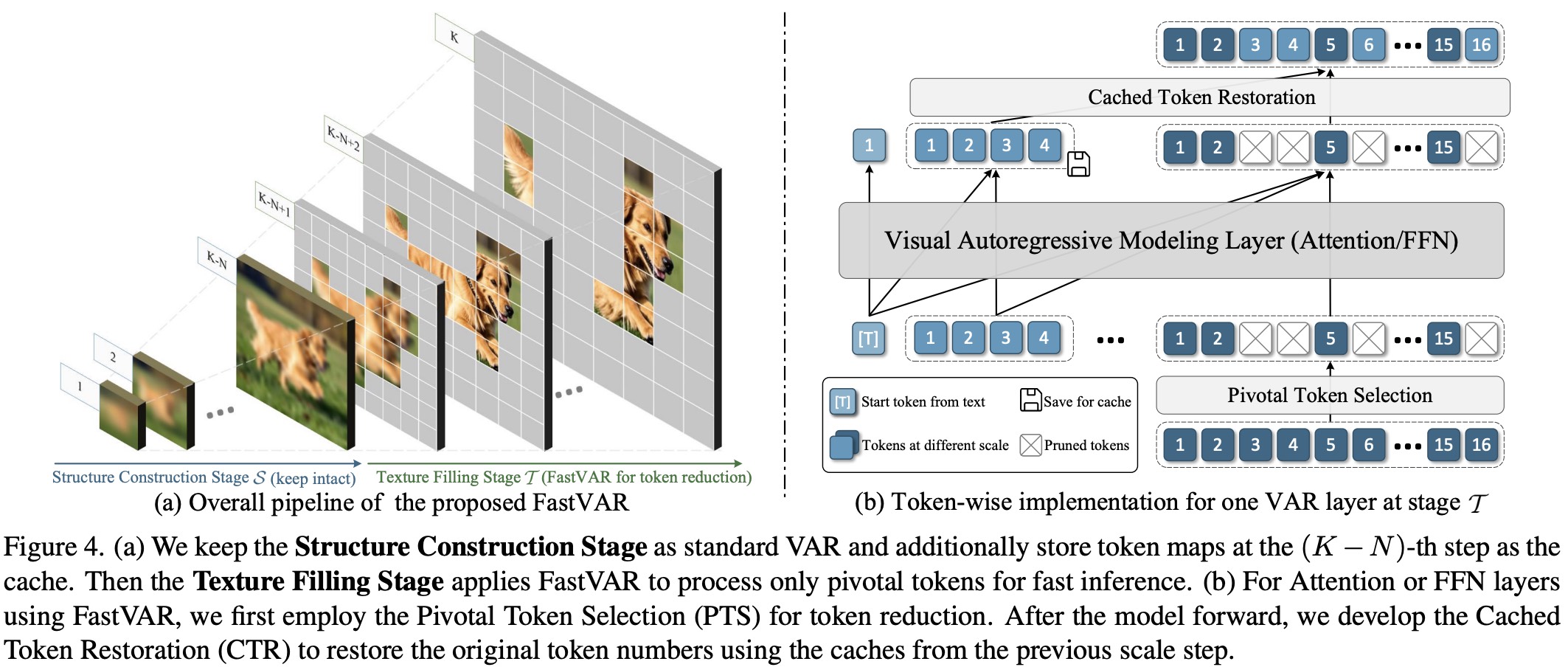

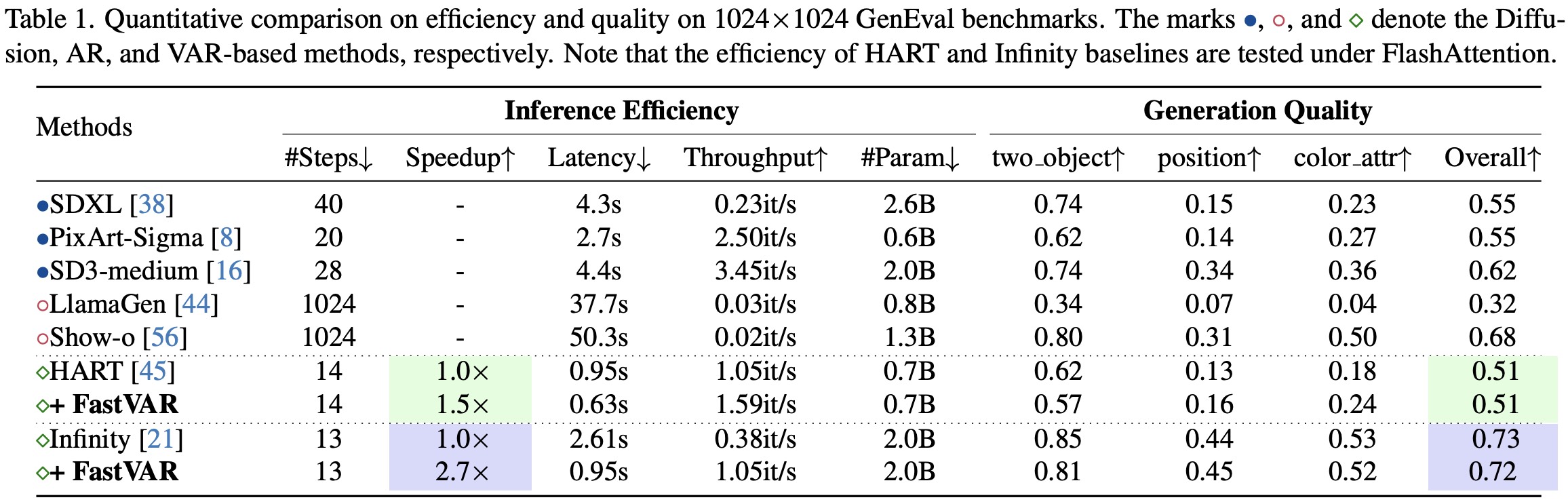

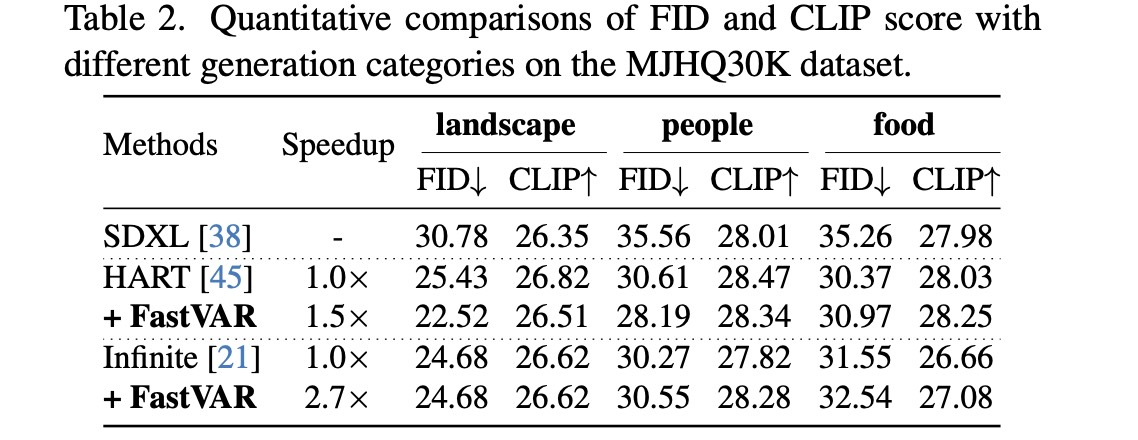

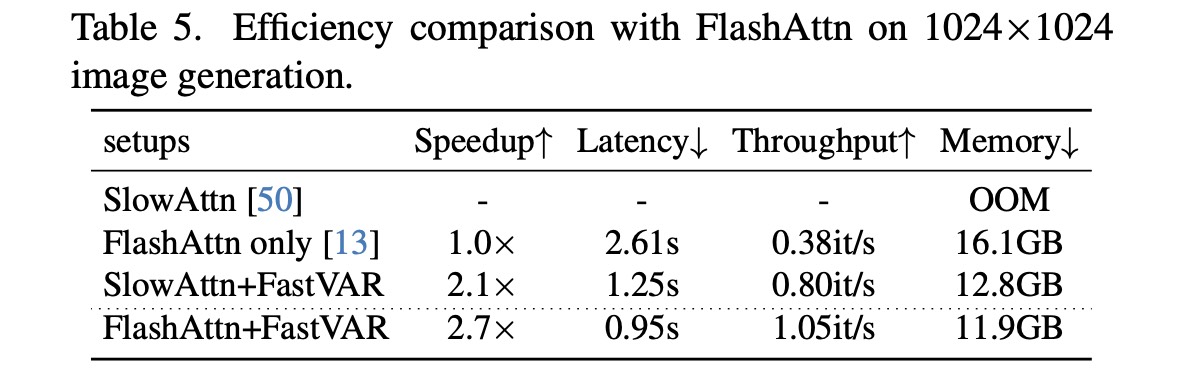

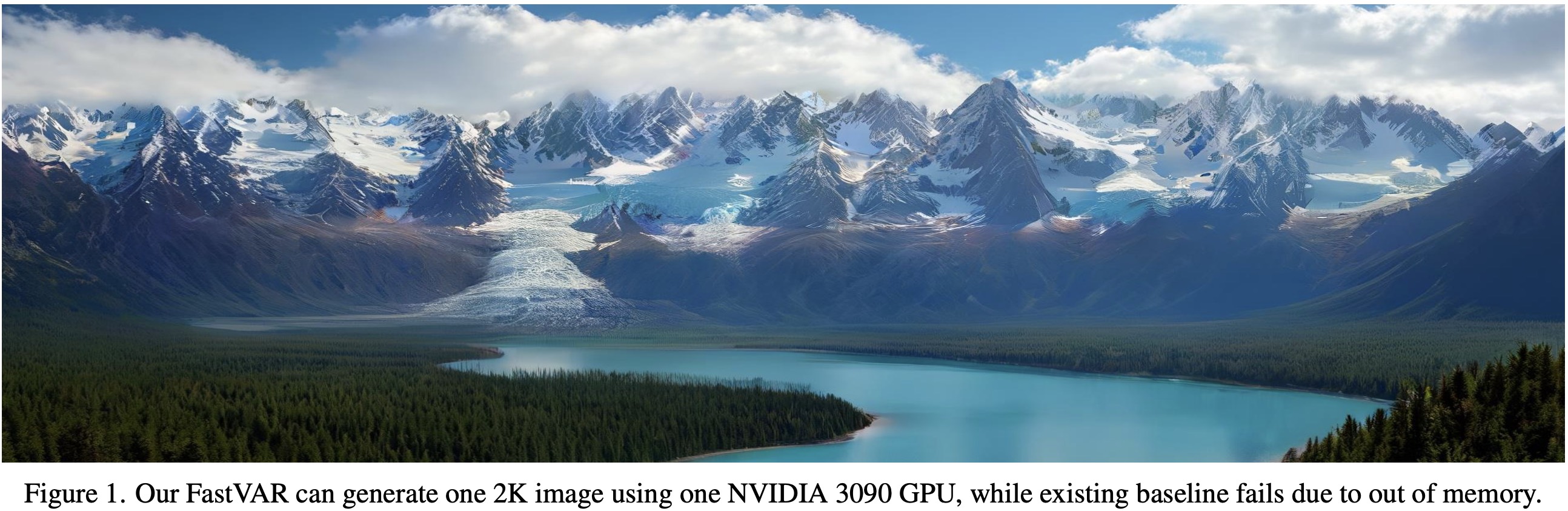

Abstract: Visual Autoregressive (VAR) modeling has gained popularity for its shift towards next-scale prediction. However, existing VAR paradigms process the entire token map at each scale step, so complexity and runtime scale dramatically with image resolution. To address this challenge, we propose FastVAR, a post-training acceleration method for efficient resolution scaling with VARs. Our key finding is that the majority of latency arises from the large-scale steps, where most tokens have already converged. Leveraging this observation, we develop a cached token pruning strategy that only forwards pivotal tokens for scale-specific modeling while using cached tokens from previous scale steps to restore the pruned slots. This significantly reduces the number of forwarded tokens and improves efficiency at larger resolutions. Experiments show that FastVAR can further speed up FlashAttention-accelerated VAR by 2.7× with a negligible performance drop of <1%. We further extend FastVAR to zero-shot generation of higher-resolution images. In particular, FastVAR can generate a 2K image with a 15 GB memory footprint in 1.5 s on a single NVIDIA 3090 GPU.
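The pruning rule can be summarized as follows, with the pivotal-token criterion read off the core-algorithm code in this README (the tokens farthest from the mean token of the current scale are kept). The symbols are our own shorthand, not notation from the paper: $x_i$ is the $i$-th of the $L = h \times w$ tokens at the current scale, $K$ corresponds to `num_remain`, $f$ stands for the transformer computation applied to the kept tokens, $c$ is the cached output of the previous scale step, and $\mathrm{Up}$ is the `area` interpolation of that cache to $h \times w$.

$$
\bar{x} = \frac{1}{L}\sum_{i=1}^{L} x_i, \qquad
s_i = \lVert x_i - \bar{x} \rVert_2^2, \qquad
\mathcal{S} = \text{indices of the } K \text{ largest } s_i
$$

$$
y_i =
\begin{cases}
f\big(\{x_j\}_{j \in \mathcal{S}}\big)_i, & i \in \mathcal{S},\\
\mathrm{Up}(c)_i, & i \notin \mathcal{S}.
\end{cases}
$$

Only $K$ of the $L$ tokens pass through $f$; every pruned slot is filled from the upsampled cache instead of being recomputed.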

⭐ If this work is helpful to you, please star this repo. Thanks! 🤗

1️⃣ Faster VAR Generation without Perceptual Quality Loss

2️⃣ High-resolution Image Generation (even 2K image on single 3090 GPU)

3️⃣ Promising Resolution Scalability (almost linear complexity)

- 2025-03-30: arXiv paper available.

- 2025-04-04: This repo is released.

- 2025-06-26: Congrats! FastVAR has been accepted to ICCV 2025 😊

- 2025-06-29: We have open-sourced all our code.

- arXiv version available

- Release code

- Further improvements

FastVAR introduces "cached token pruning", a training-free technique that operates on the large-scale steps of VAR models and is generic across VAR backbones.
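A quick count shows why the large-scale steps are the natural place to prune. The sketch below assumes a hypothetical 13-step schedule of square token maps with side lengths up to 64 (real backbones define their own schedules), plus a rough attention-cost proxy in which each step's queries attend to all tokens of the current and previous steps through a KV cache. The names `SIDES`, `token_counts`, `attention_cost`, and `tail_share` are illustrative only.

```python
# Hypothetical side lengths of the square token map at each scale step
# (illustrative assumption; real VAR backbones define their own schedules).
SIDES = [1, 2, 4, 6, 8, 12, 16, 20, 24, 32, 40, 48, 64]


def token_counts(sides):
    """Number of tokens L_k = side_k ** 2 forwarded at each scale step."""
    return [s * s for s in sides]


def attention_cost(sides):
    """Rough per-step attention cost proxy: queries at step k times all keys
    accumulated so far (steps 1..k), assuming KV caching across scales."""
    costs, seen = [], 0
    for n in token_counts(sides):
        seen += n
        costs.append(n * seen)
    return costs


def tail_share(values, last_n):
    """Fraction of the total contributed by the last `last_n` steps."""
    return sum(values[-last_n:]) / sum(values)


if __name__ == "__main__":
    counts = token_counts(SIDES)
    costs = attention_cost(SIDES)
    print("total tokens:", sum(counts))
    print(f"last 2 steps, token share:     {tail_share(counts, 2):.1%}")
    print(f"last 2 steps, attention share: {tail_share(costs, 2):.1%}")
```

Under these assumptions the last two steps hold about 61% of all tokens and about 85% of the attention-cost proxy, so they are where dropping already-converged tokens has the most to save.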

FastVAR achieves a 2.7× speedup with a <1% performance drop, even on top of FlashAttention-accelerated setups.

Detailed results can be found in the paper.

For learning purposes, we provide the core algorithm of FastVAR below (the complete code can be found in this line). Since FastVAR is a general technique, it can potentially be applied to other VAR-based models as well.

```python
import torch


def masked_previous_scale_cache(cur_x, num_remain, cur_shape):
    # cur_x: (B, L, c) tokens at the current scale, with L = cur_shape[1] * cur_shape[2]
    B, L, c = cur_x.shape

    # Mean token of the current scale, shape (B, 1, c)
    mean_x = cur_x.view(B, cur_shape[1], cur_shape[2], -1).permute(0, 3, 1, 2)
    mean_x = torch.nn.functional.adaptive_avg_pool2d(mean_x, (1, 1)).permute(0, 2, 3, 1).view(B, 1, c)

    # Squared distance of every token to the mean; the most distant tokens are kept as pivotal
    mse_difference = torch.sum((cur_x - mean_x) ** 2, dim=-1, keepdim=True)
    select_indices = torch.argsort(mse_difference, dim=1, descending=True)
    filted_select_indices = select_indices[:, :num_remain, :]  # (B, num_remain, 1)

    def merge(merged_cur_x):
        # Gather only the pivotal tokens: (B, L, c) -> (B, num_remain, c)
        return torch.gather(merged_cur_x, dim=1, index=filted_select_indices.repeat(1, 1, c))

    def unmerge(unmerged_cur_x, unmerged_cache_x, cached_hw=None):
        # Upsample the cached previous-scale tokens to the current resolution ...
        unmerged_cache_x_ = unmerged_cache_x.view(B, cached_hw[0], cached_hw[1], -1).permute(0, 3, 1, 2)
        unmerged_cache_x_ = torch.nn.functional.interpolate(
            unmerged_cache_x_, size=(cur_shape[1], cur_shape[2]), mode='area'
        ).permute(0, 2, 3, 1).view(B, L, c)
        # ... then write the freshly computed pivotal tokens back into their slots
        unmerged_cache_x_.scatter_(dim=1, index=filted_select_indices.repeat(1, 1, c), src=unmerged_cur_x)
        return unmerged_cache_x_

    def get_src_tgt_idx():
        return filted_select_indices

    return merge, unmerge, get_src_tgt_idx
```
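For readers who want to poke at the select, compute, and restore logic without a PyTorch setup, the sketch below re-expresses it in NumPy on toy tensors. It is an illustrative re-implementation, not the repository code: `select_pivotal`, `upsample_cache`, `prune_and_restore`, and `toy_block` are hypothetical names, `toy_block` stands in for a real transformer block, and the cache is upsampled by nearest-neighbour repetition (assuming integer scale factors), which should coincide with the `area` interpolation above in that case.

```python
import numpy as np


def select_pivotal(cur_x, num_remain):
    """Indices (per batch) of the num_remain tokens farthest from the mean token.

    cur_x: (B, L, C) token features at the current scale.
    Returns an integer array of shape (B, num_remain).
    """
    mean_x = cur_x.mean(axis=1, keepdims=True)         # (B, 1, C) mean token
    score = ((cur_x - mean_x) ** 2).sum(axis=-1)       # (B, L) squared distance
    order = np.argsort(-score, axis=1, kind="stable")  # descending by score
    return order[:, :num_remain]


def upsample_cache(cache_x, cached_hw, cur_hw):
    """Nearest-neighbour upsampling of a (B, h*w, C) cache to (B, H*W, C).
    Assumes H and W are integer multiples of h and w."""
    B, _, C = cache_x.shape
    (h, w), (H, W) = cached_hw, cur_hw
    grid = cache_x.reshape(B, h, w, C)
    grid = grid.repeat(H // h, axis=1).repeat(W // w, axis=2)
    return grid.reshape(B, H * W, C)


def prune_and_restore(cur_x, cache_x, cur_hw, cached_hw, num_remain, block):
    """Run `block` on the pivotal tokens only and fill the rest from the cache."""
    idx = select_pivotal(cur_x, num_remain)[..., None]  # (B, K, 1)
    kept = np.take_along_axis(cur_x, idx, axis=1)       # merge: (B, K, C)
    out = block(kept)                                    # compute only K tokens
    full = upsample_cache(cache_x, cached_hw, cur_hw)    # restore pruned slots
    np.put_along_axis(full, idx, out, axis=1)            # scatter computed tokens back
    return full


def toy_block(x):
    """Hypothetical stand-in for a transformer block: a fixed elementwise map."""
    return 2.0 * x + 1.0
```

With the elementwise `toy_block`, setting `num_remain` to the full token count reproduces `toy_block` applied to every token, which makes a handy sanity check before swapping in a real block.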

We apply FastVAR to two text-to-image VAR models, Infinity and HART; the code for each is in its respective folder. For the conda environment and the related pre-trained LLM/VLM models, we suggest following the setup in the original Infinity and HART repos. In practice, we find that the two codebases are compatible with each other's environments.

First, cd into the Infinity folder:

```shell
cd ./Infinity
```

Then configure the pre-trained Infinity backbone weights and run text-to-image inference to generate a single image from a given text prompt:

```shell
python inference.py
```

If you also want to reproduce the results reported in our paper, such as GenEval, MJHQ30K, HPSv2.1, and ImageReward, please refer to the detailed instructions in this file, which contains all the commands needed to run the respective experiments.

First, cd into the HART folder:

```shell
cd ./HART
```

Then you can run text-to-image generation with the following command.

```shell
python inference.py --model_path /path/to/model \
    --text_model_path /path/to/Qwen2 \
    --prompt "YOUR_PROMPT" \
    --sample_folder_dir /path/to/save_dir
```
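To run the HART command above over several prompts, one option is a small Python wrapper around it. This is a hypothetical helper, not part of the repository: `build_hart_cmd` and `run_prompts` are names introduced here, the wrapper only uses the four flags shown above, and all paths are placeholders to replace with your own. Passing the prompt as a separate argv element avoids shell-quoting issues.

```python
import subprocess
import sys
from pathlib import Path


def build_hart_cmd(prompt, model_path, text_model_path, save_dir):
    """Assemble the argv for one HART inference call (only the flags shown above)."""
    return [
        sys.executable, "inference.py",
        "--model_path", str(model_path),
        "--text_model_path", str(text_model_path),
        "--prompt", prompt,
        "--sample_folder_dir", str(save_dir),
    ]


def run_prompts(prompts, model_path, text_model_path, save_root, dry_run=True):
    """Build one command per prompt; execute them unless dry_run is True.
    Each prompt gets its own numbered output subfolder under save_root."""
    cmds = []
    for i, prompt in enumerate(prompts):
        save_dir = Path(save_root) / f"prompt_{i:03d}"
        cmd = build_hart_cmd(prompt, model_path, text_model_path, save_dir)
        cmds.append(cmd)
        if not dry_run:
            save_dir.mkdir(parents=True, exist_ok=True)
            subprocess.run(cmd, check=True)  # must be launched from ./HART
    return cmds
```

For example, `run_prompts(["YOUR_PROMPT"], "/path/to/model", "/path/to/Qwen2", "/path/to/save_dir", dry_run=False)` runs from the `./HART` folder. Each call starts a fresh process, so the model is reloaded for every prompt; this trades speed for leaving `inference.py` untouched.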

For evaluating HART on common benchmarks, please refer to this file; the procedure is largely similar to that for the Infinity model.

Please cite us if our work is useful for your research.

```bibtex
@article{guo2025fastvar,
  title={FastVAR: Linear Visual Autoregressive Modeling via Cached Token Pruning},
  author={Guo, Hang and Li, Yawei and Zhang, Taolin and Wang, Jiangshan and Dai, Tao and Xia, Shu-Tao and Benini, Luca},
  journal={arXiv preprint arXiv:2503.23367},
  year={2025}
}
```

Since this work is based on pre-trained VAR models, users should follow the licenses of the corresponding backbone models, e.g., HART (MIT License) and Infinity (MIT License).

If you have any questions while reproducing our results, feel free to contact me at cshguo@gmail.com.