This repository contains a curated collection of research papers on generative information retrieval. These papers are organized according to the categorizations outlined in our survey "From Matching to Generation: A Survey on Generative Information Retrieval".

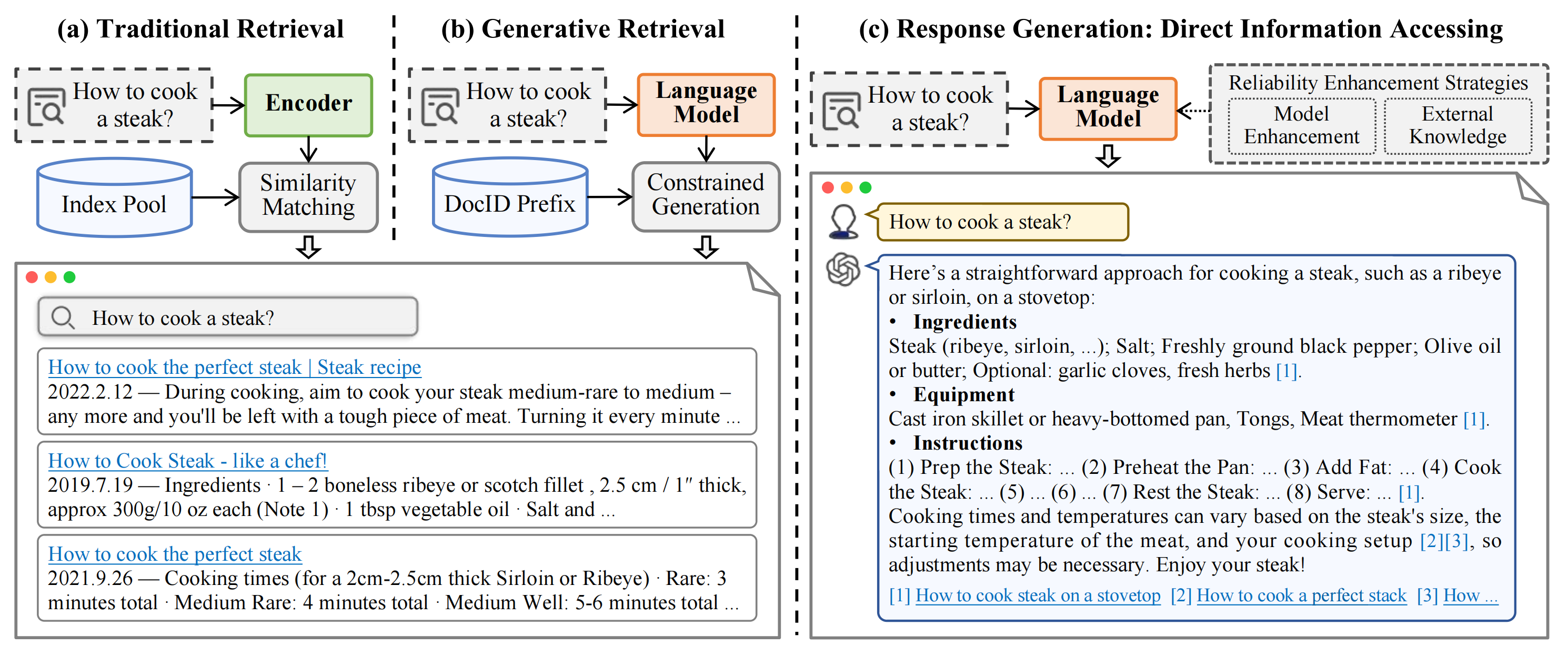

Short Abstract: This survey explores generative information retrieval (GenIR), which marks a paradigm shift from traditional matching-based methods to generative approaches. We divide current GenIR research into two main categories: (1) generative document retrieval, which retrieves documents by directly generating their identifiers, without relying on large-scale indexing, and (2) reliable response generation, which uses language models to generate user-centric, reliable responses that directly meet users' information needs. This survey aims to offer a comprehensive reference for researchers in the GenIR field and to encourage further development in this area.
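To make "retrieval by directly generating document identifiers" concrete, here is a minimal sketch of constrained greedy decoding over a prefix trie of valid identifiers. It is an illustration only, not the method of any specific paper listed here. The names `build_trie`, `constrained_greedy_decode`, and `toy_score` are hypothetical, and `toy_score` is a keyword-overlap stand-in for the next-token score a trained sequence-to-sequence model would provide.

```python
from typing import Callable, Dict, List, Sequence

END = "<eos>"  # assumed end-of-identifier marker


def build_trie(docids: Sequence[Sequence[str]]) -> Dict[str, dict]:
    """Build a prefix trie over the token sequences of all valid docids."""
    root: Dict[str, dict] = {}
    for docid in docids:
        node = root
        for tok in list(docid) + [END]:
            node = node.setdefault(tok, {})
    return root


def constrained_greedy_decode(
    query: str,
    trie: Dict[str, dict],
    score: Callable[[str, List[str], str], float],
) -> List[str]:
    """Greedily pick the best-scoring token among those the trie allows,
    so the generated sequence is always an existing docid."""
    prefix: List[str] = []
    node = trie
    while True:
        allowed = sorted(node)  # valid next tokens; sorted for determinism
        best = max(allowed, key=lambda tok: score(query, prefix, tok))
        if best == END:
            return prefix
        prefix.append(best)
        node = node[best]


def toy_score(query: str, prefix: List[str], token: str) -> float:
    """Hypothetical scorer: 1.0 if the token appears in the query, else 0.0.
    A real system would use a trained model's next-token log-probability."""
    return 1.0 if token in query.lower().split() else 0.0


if __name__ == "__main__":
    docids = [
        ["sports", "football"],
        ["sports", "tennis"],
        ["science", "physics"],
    ]
    trie = build_trie(docids)
    print(constrained_greedy_decode("latest tennis results in sports", trie, toy_score))
```

The trie restricts each decoding step to tokens that continue some valid identifier, which is why no separate index lookup is needed at inference time in this sketch. Beam search would replace the greedy `max` in a more realistic setup.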

- Generative Document Retrieval

- Reliable Response Generation

- Evaluation

- Challenges and Prospects

- DSI: "Transformer Memory as a Differentiable Search Index". Yi Tay et al. NeurIPS 2022. [Paper]

- DynamicRetriever: "DynamicRetriever: A Pre-trained Model-based IR System Without an Explicit Index". Yujia Zhou et al. Mach. Intell. Res., 2023. [Paper]

- NCI: "A Neural Corpus Indexer for Document Retrieval". Yujing Wang et al. NeurIPS 2022. [Paper]

- DSI-QG: "Bridging the Gap Between Indexing and Retrieval for Differentiable Search Index with Query Generation". Shengyao Zhuang et al. arXiv 2022. [Paper]

- "Understanding Differential Search Index for Text Retrieval". Xiaoyang Chen et al. ACL 2023. [Paper]

- LTRGR: "Learning to Rank in Generative Retrieval". Yongqi Li et al. arXiv 2023. [Paper]

- GenRRL: "Enhancing Generative Retrieval with Reinforcement Learning from Relevance Feedback". Yujia Zhou et al. EMNLP 2023. [Paper]

- DGR: "Distillation Enhanced Generative Retrieval". Peiwen Yuan et al. arXiv 2024. [Paper]

- ListGR: "Listwise Generative Retrieval Models via a Sequential Learning Process". Yubao Tang et al. ACM TOIS, 2024. [Paper]

- TOME: "TOME: A Two-stage Approach for Model-based Retrieval". Ruiyang Ren et al. ACL 2023. [Paper]

- NP Decoding: "Nonparametric Decoding for Generative Retrieval". Hyunji Lee et al. ACL 2023. [Paper]

- MEVI: "Model-enhanced Vector Index". Hailin Zhang et al. arXiv 2023. [Paper]

- DiffusionRet: "Diffusion-Enhanced Generative Retriever using Constrained Decoding". Shanbao Qiao et al. EMNLP 2023. [Paper]

- GDR: "Generative Dense Retrieval: Memory Can Be a Burden". Peiwen Yuan et al. arXiv 2024. [Paper]

- Self-Retrieval: "Self-Retrieval: Building an Information Retrieval System with One Large Language Model". Qiaoyu Tang et al. arXiv 2024. [Paper]

- DSI: "Transformer Memory as a Differentiable Search Index". Yi Tay et al. NeurIPS 2022. [Paper]

- DynamicRetriever: "DynamicRetriever: A Pre-trained Model-based IR System Without an Explicit Index". Yujia Zhou et al. Mach. Intell. Res., 2023. [Paper]

- Ultron: "Ultron: An Ultimate Retriever on Corpus with a Model-based Indexer". Yujia Zhou et al. arXiv 2022. [Paper]

- GenRet: "Learning to Tokenize for Generative Retrieval". Weiwei Sun et al. arXiv 2023. [Paper]

- Tied-Atomic: "Generative Retrieval as Dense Retrieval". Thong Nguyen et al. arXiv 2023. [Paper]

- MEVI: "Model-enhanced Vector Index". Hailin Zhang et al. arXiv 2023. [Paper]

- LMIndexer: "Language Models As Semantic Indexers". Bowen Jin et al. arXiv 2023. [Paper]

- ASI: "Auto Search Indexer for End-to-End Document Retrieval". Tianchi Yang et al. EMNLP 2023. [Paper]

- RIPOR: "Scalable and Effective Generative Information Retrieval". Hansi Zeng et al. arXiv 2023. [Paper]

- GENRE: "Autoregressive Entity Retrieval". Nicola De Cao et al. ICLR 2021. [Paper]

- SEAL: "Autoregressive Search Engines: Generating Substrings as Document Identifiers". Michele Bevilacqua et al. NeurIPS 2022. [Paper]

- Ultron: "Ultron: An Ultimate Retriever on Corpus with a Model-based Indexer". Yujia Zhou et al. arXiv 2022. [Paper]

- LLM-URL: "Large Language Models are Built-in Autoregressive Search Engines". Noah Ziems et al. ACL 2023. [Paper]

- UGR: "A Unified Generative Retriever for Knowledge-Intensive Language Tasks via Prompt Learning". Jiangui Chen et al. SIGIR 2023. [Paper]

- MINDER: "Multiview Identifiers Enhanced Generative Retrieval". Yongqi Li et al. ACL 2023. [Paper]

- AutoTSG: "Term-Sets Can Be Strong Document Identifiers For Auto-Regressive Search Engines". Peitian Zhang et al. arXiv 2023. [Paper]

- SE-DSI: "Semantic-Enhanced Differentiable Search Index Inspired by Learning Strategies". Yubao Tang et al. KDD 2023. [Paper]

- NOVO: "NOVO: Learnable and Interpretable Document Identifiers for Model-Based IR". Zihan Wang et al. CIKM 2023. [Paper]

- GLEN: "GLEN: Generative Retrieval via Lexical Index Learning". Sunkyung Lee et al. EMNLP 2023. [Paper]

- DSI++: "DSI++: Updating Transformer Memory with New Documents". Sanket Vaibhav Mehta et al. arXiv 2022. [Paper]

- DynamicIR: "Exploring the Practicality of Generative Retrieval on Dynamic Corpora". Soyoung Yoon et al. 2023. [Paper]

- "On the Robustness of Generative Retrieval Models: An Out-of-Distribution Perspective". Yu-An Liu et al. arXiv 2023. [Paper]

- IncDSI: "IncDSI: Incrementally Updatable Document Retrieval". Varsha Kishore et al. ICML 2023. [Paper]

- CLEVER: "Continual Learning for Generative Retrieval over Dynamic Corpora". Jiangui Chen et al. CIKM 2023. [Paper]

- CorpusBrain++: "CorpusBrain++: A Continual Generative Pre-Training Framework for Knowledge-Intensive Language Tasks". Jiafeng Guo et al. arXiv 2024. [Paper]

- GERE: "GERE: Generative Evidence Retrieval for Fact Verification". Jiangui Chen et al. arXiv 2022. [Paper]

- CorpusBrain: "CorpusBrain: Pre-train a Generative Retrieval Model for Knowledge-Intensive Language Tasks". Jiangui Chen et al. CIKM 2022. [Paper]

- GMR: "Generative Multi-hop Retrieval". Hyunji Lee et al. EMNLP 2022. [Paper]

- DearDR: "Data-Efficient Autoregressive Document Retrieval for Fact Verification". James Thorne. arXiv 2022. [Paper]

- CodeDSI: "CodeDSI: Differentiable Code Search". Usama Nadeem et al. arXiv 2022. [Paper]

- UGR: "A Unified Generative Retriever for Knowledge-Intensive Language Tasks via Prompt Learning". Jiangui Chen et al. SIGIR 2023. [Paper]

- GCoQA: "Generative retrieval for conversational question answering". Yongqi Li et al. Inf. Process. Manag., 2023. [Paper]

- Re3val: "Re3val: Reinforced and Reranked Generative Retrieval". EuiYul Song et al. arXiv 2024. [Paper]

- UniGen: "UniGen: A Unified Generative Framework for Retrieval and Question Answering with Large Language Models". Xiaoxi Li et al. AAAI 2024. [Paper]

- CorpusLM: "CorpusLM: Towards a Unified Language Model on Corpus for Knowledge-Intensive Tasks". Xiaoxi Li et al. SIGIR 2024. [Paper]

- IRGen: "IRGen: Generative Modeling for Image Retrieval". Yidan Zhang et al. arXiv 2023. [Paper]

- GeMKR: "Generative Multi-Modal Knowledge Retrieval with Large Language Models". Xinwei Long et al. AAAI 2024. [Paper]

- GRACE: "Generative Cross-Modal Retrieval: Memorizing Images in Multimodal Language Models for Retrieval and Beyond". Yongqi Li et al. arXiv 2024. [Paper]

- P5: "Recommendation as Language Processing (RLP): A Unified Pretrain, Personalized Prompt & Predict Paradigm (P5)". Shijie Geng et al. RecSys '22. [Paper]

- GPT4Rec: "GPT4Rec: A generative framework for personalized recommendation and user interests interpretation". Jinming Li et al. arXiv 2023. [Paper]

- TIGER: "Recommender Systems with Generative Retrieval". Shashank Rajput et al. NeurIPS 2023. [Paper]

- SEATER: "Generative Retrieval with Semantic Tree-Structured Item Identifiers via Contrastive Learning". Zihua Si et al. arXiv 2023. [Paper]

- IDGenRec: "Towards LLM-RecSys Alignment with Textual ID Learning". Juntao Tan et al. arXiv 2024. [Paper]

- LCRec: "Adapting large language models by integrating collaborative semantics for recommendation". Bowen Zheng et al. arXiv 2023. [Paper]

- ColaRec: "Enhanced Generative Recommendation via Content and Collaboration Integration". Yidan Wang et al. 2024. [Paper]

- GPT3: "Language models are few-shot learners". Tom B. Brown et al. NeurIPS 2020. [Paper]

- BLOOM: "BLOOM: A 176B-Parameter Open-Access Multilingual Language Model". Teven Le Scao et al. arXiv 2022. [Paper]

- LLaMA: "LLaMA: Open and Efficient Foundation Language Models". Hugo Touvron et al. arXiv 2023. [Paper]

- PaLM: "PaLM: Scaling Language Modeling with Pathways". Aakanksha Chowdhery et al. J. Mach. Learn. Res., 2023. [Paper]

- Mixtral 8x7B: "Mixtral of Experts". Albert Q. Jiang et al. arXiv 2024. [Paper]

- "Unsupervised Improvement of Factual Knowledge in Language Models". Nafis Sadeq et al. EACL 2023. [Paper]

- FactTune: "Fine-Tuning or Retrieval? Comparing Knowledge Injection in LLMs". Oded Ovadia et al. arXiv 2023. [Paper]

- GenRead: "Generate rather than retrieve: Large language models are strong context generators". Wenhao Yu et al. arXiv 2022. [Paper]

- RECITE: "Recitation-augmented language models". Zhiqing Sun et al. arXiv 2022. [Paper]

- DoLa: "Decoding by Contrasting Layers Improves Factuality in Large Language Models". Yung-Sung Chuang et al. arXiv 2023. [Paper]

- Ernie 2.0: "Ernie 2.0: A continual pre-training framework for language understanding". Yu Sun et al. AAAI 2020. [Paper]

- DAP: "Continual pre-training of language models". Zixuan Ke et al. arXiv 2023. [Paper]

- DynaInst: "Large-scale lifelong learning of in-context instructions and how to tackle it". Jisoo Mok et al. ACL 2023. [Paper]

- KE: "Editing Factual Knowledge in Language Models". Nicola De Cao et al. EMNLP 2021. [Paper]

- MEND: "Fast Model Editing at Scale". Eric Mitchell et al. ICLR 2022. [Paper]

- ROME: "Locating and Editing Factual Associations in GPT". Kevin Meng et al. NeurIPS 2022. [Paper]

- RAG: "Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks". Patrick S. H. Lewis et al. NeurIPS 2020. [Paper]

- RRR: "Query Rewriting in Retrieval-Augmented Large Language Models". Xinbei Ma et al. EMNLP 2023. [Paper]

- ARL2: "ARL2: Aligning Retrievers for Black-box Large Language Models via Self-guided Adaptive Relevance Labeling". Lingxi Zhang et al. arXiv 2024. [Paper]

- TOC: "Tree of Clarifications: Answering Ambiguous Questions with Retrieval-Augmented Large Language Models". Gangwoo Kim et al. EMNLP 2023. [Paper]

- BlendFilter: "BlendFilter: Advancing Retrieval-Augmented Large Language Models via Query Generation Blending and Knowledge Filtering". Haoyu Wang et al. arXiv 2024. [Paper]

- REPLUG: "REPLUG: Retrieval-Augmented Black-Box Language Models". Weijia Shi et al. arXiv 2023. [Paper]

- SKR: "Self-Knowledge Guided Retrieval Augmentation for Large Language Models". Yile Wang et al. EMNLP 2023. [Paper]

- Self-DC: "Self-DC: When to retrieve and When to generate? Self Divide-and-Conquer for Compositional Unknown Questions". Hongru Wang et al. arXiv 2024. [Paper]

- Rowen: "Retrieve Only When It Needs: Adaptive Retrieval Augmentation for Hallucination Mitigation in Large Language Models". Hanxing Ding et al. arXiv 2024. [Paper]

- Iter-RetGen: "Enhancing Retrieval-Augmented Large Language Models with Iterative Retrieval-Generation Synergy". Zhihong Shao et al. arXiv 2023. [Paper]

- IR-COT: "Interleaving Retrieval with Chain-of-Thought Reasoning for Knowledge-Intensive Multi-Step Questions". Harsh Trivedi et al. ACL 2023. [Paper]

- FLARE: "Active Retrieval Augmented Generation". Zhengbao Jiang et al. EMNLP 2023. [Paper]

- Self-RAG: "Learning to Retrieve, Generate, and Critique through Self-Reflection". Akari Asai et al. arXiv 2023. [Paper]

- ReAct: "Synergizing Reasoning and Acting in Language Models". Shunyu Yao et al. NeurIPS 2022. [Paper]

- WebGPT: "Browser-assisted question-answering with human feedback". Reiichiro Nakano et al. arXiv 2021. [Paper]

- StructGPT: "A General Framework for Large Language Model to Reason over Structured Data". Jinhao Jiang et al. EMNLP 2023. [Paper]

- ToG: "Think-on-Graph: Deep and Responsible Reasoning of Large Language Model with Knowledge Graph". Jiashuo Sun et al. arXiv 2023. [Paper]

- RoG: "Reasoning on Graphs: Faithful and Interpretable Large Language Model Reasoning". Linhao Luo et al. ICLR 2024. [Paper]

- Toolformer: "Language models can teach themselves to use tools". Timo Schick et al. arXiv 2023. [Paper]

- ToolLLM: "Facilitating Large Language Models to Master 16000+ Real-world APIs". Yujia Qin et al. arXiv 2023. [Paper]

- AssistGPT: "A General Multi-modal Assistant that can Plan, Execute, Inspect, and Learn". Difei Gao et al. arXiv 2023. [Paper]

- HuggingGPT: "Solving AI Tasks with ChatGPT and its Friends in Hugging Face". Yongliang Shen et al. NeurIPS 2023. [Paper]

- Visual ChatGPT: "Talking, Drawing and Editing with Visual Foundation Models". Chenfei Wu et al. arXiv 2023. [Paper]

- According-to Prompting: "According to ...: Prompting Language Models Improves Quoting from Pre-Training Data". Orion Weller et al. EACL 2024. [Paper]

- IFL: "Towards Reliable and Fluent Large Language Models: Incorporating Feedback Learning Loops in QA Systems". Dongyub Lee et al. arXiv 2023. [Paper]

- Fierro et al.: "Learning to Plan and Generate Text with Citations". Constanza Fierro et al. arXiv 2024. [Paper]

- 1-PAGER: "One Pass Answer Generation and Evidence Retrieval". Palak Jain et al. EMNLP 2023. [Paper]

- Credible without Credit: "Domain Experts Assess Generative Language Models". Denis Peskoff et al. ACL 2023. [Paper]

- "Source-Aware Training Enables Knowledge Attribution in Language Models". Muhammad Khalifa et al. 2024. [Paper]

- WebGPT: "Browser-assisted question-answering with human feedback". Reiichiro Nakano et al. arXiv 2021. [Paper]

- WebBrain: "Learning to Generate Factually Correct Articles for Queries by Grounding on Large Web Corpus". Hongjing Qian et al. arXiv 2023. [Paper]

- RARR: "Researching and Revising What Language Models Say, Using Language Models". Luyu Gao et al. ACL 2023. [Paper]

- Search-in-the-Chain: "Towards the Accurate, Credible and Traceable Content Generation for Complex Knowledge-intensive Tasks". Shicheng Xu et al. arXiv 2023. [Paper]

- LLatrieval: "LLM-Verified Retrieval for Verifiable Generation". Xiaonan Li et al. arXiv 2023. [Paper]

- VTG: "Towards Verifiable Text Generation with Evolving Memory and Self-Reflection". Hao Sun et al. arXiv 2023. [Paper]

- CEG: "Citation-Enhanced Generation for LLM-based Chatbots". Weitao Li et al. arXiv 2024. [Paper]

- APO: "Improving Attributed Text Generation of Large Language Models via Preference Learning". Dongfang Li et al. arXiv 2024. [Paper]

- "Personalizing Dialogue Agents: I have a dog, do you have pets too?" Saizheng Zhang et al. ACL 2018. [Paper]

- P2Bot: "You Impress Me: Dialogue Generation via Mutual Persona Perception". Qian Liu et al. ACL 2020. [Paper]

- "Personalized Response Generation via Generative Split Memory Network". Yuwei Wu et al. NAACL-HLT 2021. [Paper]

- SAFARI: "Large Language Models as Source Planner for Personalized Knowledge-grounded Dialogues". Hongru Wang et al. EMNLP 2023. [Paper]

- Personalized Soups: "Personalized Soups: Personalized Large Language Model Alignment via Post-hoc Parameter Merging". Joel Jang et al. arXiv 2023. [Paper]

- OPPU: "Democratizing Large Language Models via Personalized Parameter-Efficient Fine-tuning". Zhaoxuan Tan et al. arXiv 2024. [Paper]

- Zhongjing: "Zhongjing: Enhancing the Chinese Medical Capabilities of Large Language Model through Expert Feedback and Real-World Multi-Turn Dialogue". Songhua Yang et al. AAAI 2024. [Paper]

- Psy-LLM: "Psy-LLM: Scaling up Global Mental Health Psychological Services with AI-based Large Language Models". Tin Lai et al. arXiv 2023. [Paper]

- PharmacyGPT: "PharmacyGPT: The ai pharmacist". Zhengliang Liu et al. arXiv 2023. [Paper]

- EduChat: "EduChat: A large-scale language model-based chatbot system for intelligent education". Yuhao Dan et al. arXiv 2023. [Paper]

- TidyBot: "TidyBot: personalized robot assistance with large language models". Jimmy Wu et al. Auton. Robots 2023. [Paper]

- MRR: "Mean Reciprocal Rank". Nick Craswell. Encyclopedia of Database Systems, 2009. [Paper]

- nDCG: "Cumulated gain-based evaluation of IR techniques". Kalervo Järvelin and Jaana Kekäläinen. ACM TOIS, 2002. [Paper]

- MS MARCO: "MS MARCO: A Human Generated Machine Reading Comprehension Dataset". Tri Nguyen et al. CoCo@NIPS 2016. [Paper]

- NQ: "Natural questions: a benchmark for question answering research". Tom Kwiatkowski et al. TACL, 2019. [Paper]

- TriviaQA: "TriviaQA: A Large Scale Distantly Supervised Challenge Dataset for Reading Comprehension". Mandar Joshi et al. ACL 2017. [Paper]

- KILT: "KILT: a Benchmark for Knowledge Intensive Language Tasks". Fabio Petroni et al. NAACL 2021. [Paper]

- TREC DL 2019: "Overview of the TREC 2019 deep learning track". Nick Craswell et al. arXiv 2020. [Paper]

- TREC DL 2020: "Overview of the TREC 2020 deep learning track". Nick Craswell et al. arXiv 2021. [Paper]

- "Understanding Differential Search Index for Text Retrieval". Xiaoyang Chen et al. ACL 2023. [Paper]

- "How Does Generative Retrieval Scale to Millions of Passages?". Ronak Pradeep et al. EMNLP 2023. [Paper]

- "On the Robustness of Generative Retrieval Models: An Out-of-Distribution Perspective". Yu-An Liu et al. arXiv 2023. [Paper]

- "Generative Retrieval as Dense Retrieval". Thong Nguyen et al. arXiv 2023. [Paper]

- "Generative Retrieval as Multi-Vector Dense Retrieval". Shiguang Wu et al. SIGIR 2024. [Paper]

- BLEU: "Bleu: a Method for Automatic Evaluation of Machine Translation". Kishore Papineni et al. ACL 2002. [Paper]

- ROUGE: "ROUGE: A Package for Automatic Evaluation of Summaries". Chin-Yew Lin. ACL 2004. [Paper]

- BERTScore: "BERTScore: Evaluating Text Generation with BERT". Tianyi Zhang et al. ICLR 2020. [Paper]

- BLEURT: "BLEURT: Learning Robust Metrics for Text Generation". Thibault Sellam et al. ACL 2020. [Paper]

- GPTScore: "GPTScore: Evaluate as You Desire". Jinlan Fu et al. arXiv 2023. [Paper]

- FActScore: "FActScore: Fine-grained Atomic Evaluation of Factual Precision in Long Form Text Generation". Sewon Min et al. EMNLP 2023. [Paper]

- MMLU: "Measuring Massive Multitask Language Understanding". Dan Hendrycks et al. ICLR 2021. [Paper]

- BIG-bench: "Beyond the Imitation Game: Quantifying and extrapolating the capabilities of language models". Aarohi Srivastava et al. arXiv 2022. [Paper]

- LLM-Eval: "Unified Multi-Dimensional Automatic Evaluation for Open-Domain Conversations with Large Language Models". Yen-Ting Lin et al. arXiv 2023. [Paper]

- API-Bank: "API-Bank: A Comprehensive Benchmark for Tool-Augmented LLMs". Minghao Li et al. EMNLP 2023. [Paper]

- ToolBench: "Facilitating Large Language Models to Master 16000+ Real-world APIs". Yujia Qin et al. arXiv 2023. [Paper]

- TruthfulQA: "TruthfulQA: Measuring How Models Mimic Human Falsehoods". Stephanie Lin et al. ACL 2022. [Paper]

- ALCE: "Enabling Large Language Models to Generate Text with Citations". Tianyu Gao et al. EMNLP 2023. [Paper]

- HaluEval: "A Large-Scale Hallucination Evaluation Benchmark for Large Language Models". Junyi Li et al. EMNLP 2023. [Paper]

- RealTime QA: "RealTime QA: What's the Answer Right Now?". Jungo Kasai et al. NeurIPS 2023. [Paper]

- FreshQA: "FreshLLMs: Refreshing Large Language Models with Search Engine Augmentation". Tu Vu et al. arXiv 2023. [Paper]

- SafetyBench: "SafetyBench: Evaluating the Safety of Large Language Models with Multiple Choice Questions". Zhexin Zhang et al. arXiv 2023. [Paper]

- TrustGPT: "TrustGPT: A Benchmark for Trustworthy and Responsible Large Language Models". Yue Huang et al. arXiv 2023. [Paper]

- TrustLLM: "TrustLLM: Trustworthiness in Large Language Models". Lichao Sun et al. arXiv 2024. [Paper]

- Scalability

- Dynamic Corpora

- Document Representation

- Efficiency

- Accuracy and Factuality

- Real-time Property

- Bias and Fairness

- Privacy and Security

- Unified Framework for Retrieval and Generation

- Towards End2end Framework for Various IR Tasks

- "Gen-IR@SIGIR 2023: The First Workshop on Generative Information Retrieval". Gabriel Bénédict et al. SIGIR 2023. [Link]

- "The 1st Workshop on Recommendation with Generative Models". Wenjie Wang et al. CIKM 2023. [Link]

- "Gen-IR@SIGIR 2024: The Second Workshop on Generative Information Retrieval". Gabriel Bénédict et al. SIGIR 2024. [Link]

- "A Survey of Large Language Models". Wayne Xin Zhao et al. arXiv 2023. [Paper]

- "A Comprehensive Survey of AI-Generated Content (AIGC): A History of Generative AI from GAN to ChatGPT". Yihan Cao et al. arXiv 2023. [Paper]

- "A Survey on Evaluation of Large Language Models". Yupeng Chang et al. arXiv 2023. [Paper]

- "Large language models for information retrieval: A survey". Yutao Zhu et al. arXiv 2023. [Paper]

- "Bias and Fairness in Large Language Models: A Survey". Isabel O. Gallegos et al. arXiv 2023. [Paper]

- "Survey on Factuality in Large Language Models: Knowledge, Retrieval and Domain-Specificity". Cunxiang Wang et al. arXiv 2023. [Paper]

- "A Survey on Detection of LLMs-Generated Content". Xianjun Yang et al. arXiv 2023. [Paper]

- "Knowledge Editing for Large Language Models: A Survey". Song Wang et al. arXiv 2023. [Paper]

- "A Survey of Knowledge Editing of Neural Networks". Vittorio Mazzia et al. arXiv 2023. [Paper]

- "A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions". Lei Huang et al. arXiv 2023. [Paper]

- "A Survey of Large Language Models Attribution". Dongfang Li et al. arXiv 2023. [Paper]

- "Retrieval-Augmented Generation for Large Language Models: A Survey". Yunfan Gao et al. arXiv 2023. [Paper]

- "Unifying Bias and Unfairness in Information Retrieval: A Survey of Challenges and Opportunities with Large Language Models". Sunhao Dai et al. arXiv 2024. [Paper]

- "Rethinking Search: Making Domain Experts out of Dilettantes". Donald Metzler et al. SIGIR Forum 2021. [Paper]

- "Large Search Model: Redefining Search Stack in the Era of LLMs". Liang Wang et al. SIGIR Forum 2023. [Paper]

- "Information Retrieval Meets Large Language Models: A Strategic Report from Chinese IR Community". Qingyao Ai et al. AI Open 2023. [Paper]

Please cite our paper if it helps your research:

@article{GenIRSurvey,
  author={Xiaoxi Li and
          Jiajie Jin and
          Yujia Zhou and
          Yuyao Zhang and
          Peitian Zhang and
          Yutao Zhu and
          Zhicheng Dou},
  title={From Matching to Generation: A Survey on Generative Information Retrieval},
  journal={CoRR},
  volume={abs/2404.14851},
  year={2024},
  url={https://arxiv.org/abs/2404.14851},
  eprinttype={arXiv},
  eprint={2404.14851}
}

This project is released under the MIT License.

Feel free to contact us if you find a mistake or have any advice. Email: xiaoxi_li@ruc.edu.cn and dou@ruc.edu.cn.