- MISP to Microsoft Sentinel integration

- Introduction

- Installation

- Configuration

- Setup

- Integration details

- FAQ

- I don't see my indicator in Sentinel

- I don't see my indicator in Sentinel (2)

- I want to ignore the x-misp-object and synchronise all attributes

- Can I get a copy of the requests sent to Sentinel?

- Can I get a copy of the response errors returned by Sentinel?

- An attribute with to_ids to False is sent to Sentinel

- What are tenant, client_id and workspace_id?

- I need help with the MISP event filters

- What are the configuration changes compared to the old Graph API version?

- I want to limit which tags get synchronised to Sentinel

- Error: KeyError: 'access_token'

- Error: Unable to process indicator. Invalid indicator type or invalid valid_until date.

- Additional documentation

The MISP to Microsoft Sentinel integration allows you to upload indicators from MISP to Microsoft Sentinel. It relies on PyMISP to get indicators from MISP and an Azure App to connect to Sentinel.

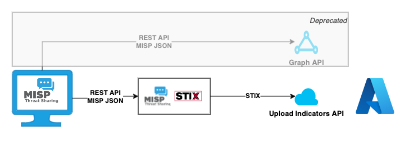

The integration supports two methods for sending threat intelligence from MISP to Microsoft Sentinel:

- The recommended Upload Indicators API, or

- The deprecated Microsoft Graph API.

To facilitate the transition, the integration supports both APIs.

If you were previously using the old version of MISP2Sentinel via the Microsoft Graph API, take a moment to review the following before upgrading:

- The new integration has different dependencies, for example the Python library misp-stix needs to be installed;

- Your Azure App requires permissions on your workspace;

- There are changes in `config.py`. The most important changes are listed in the FAQ.

The change in API also has an impact on how MISP data is used. The Graph API version queries the MISP REST API for results in MISP JSON format, and then does post-processing on the retrieved data. The new Upload Indicators API of Microsoft is STIX based. The integration now relies on MISP-STIX, a Python library, to handle the conversion between MISP and STIX format. For reference, STIX is a structured language for describing threat information to make sharing information between systems easier.

From a functional point of view, all indicators that can be synchronised via the Graph API can also be synchronised via the Upload Indicators API. Some features are missing in the STIX implementation of Sentinel, and as a result some context information (identity, attack patterns) is lost. But it is only a matter of time before these are implemented on the Sentinel side, after which you can fully benefit from the STIX conversion.

In addition to the change to STIX, the new API also supports Sentinel workspaces. This means you can send indicators to just one workspace, instead of pushing them globally. Compared to the previous version of MISP2Sentinel, the configuration settings have also been cleaned up, and the integration no longer outputs to stdout but writes its activity to a log file.

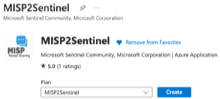

The misp2sentinel solution is available in the Azure Marketplace or the Microsoft Sentinel Content Hub, with a corresponding data connector. Note that enabling the solution in Azure isn't sufficient to sync indicators: you still need to set up the Python environment or use the Azure Function.

You need to register a new application in the Microsoft Application Registration Portal.

- Sign in to the Application Registration Portal.

- Choose New registration.

- Enter an application name, and choose Register. The application name does not matter, but pick something that's easily recognisable.

- From the overview page of your app, note the Application ID (client) and Directory ID (tenant). You will need them later to complete the configuration.

- Under Certificates & secrets (in the left pane), choose New client secret and add a description. A new secret will be displayed in the Value column. Copy this password. You will need it later to complete the configuration and it will not be shown again.

As a next step, you need to grant the necessary permissions.

For the Graph API:

- Under API permissions (left pane), choose Add a permission > Microsoft Graph.

- Under Application Permissions, add ThreatIndicators.ReadWrite.OwnedBy.

- Then grant consent for the new permissions via Grant admin consent for Standaardmap (Standaardmap is replaced with your local tenant setting). Without the consent the application will not have sufficient permissions.

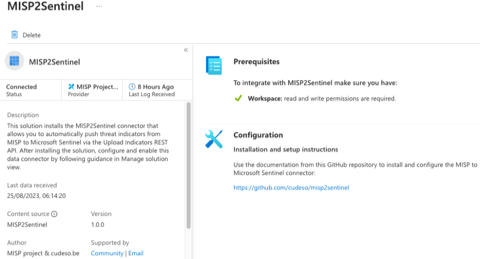

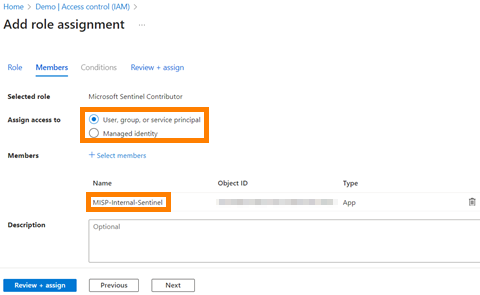

If you plan on using the Upload Indicators API, assign the Azure App the Microsoft Sentinel Contributor role on the workspaces you want to connect to.

- Select Access control (IAM).

- Select Add > Add role assignment.

- In the Role tab, select the Microsoft Sentinel Contributor role > Next.

- On the Members tab, select Assign access to > User, group, or service principal.

- Select members. By default, Microsoft Entra applications aren't displayed in the available options. To find your application, search for it by name.

- Then select Review + assign.

- Also take note of the Workspace ID. You can get this ID by accessing the Overview page of the workspace.

After the registration of the app it's time to add a data connector.

For the Graph API:

- Go to the Sentinel service.

- Under Configuration, Data connectors search for Threat Intelligence Platforms (Preview). Open the connection pane and click connect.

For the Upload Indicators API:

- Go to the Sentinel service.

- Choose the workspace where you want to import the indicators from MISP.

- Under Content management, click on Content hub.

- Find and select the MISP2Sentinel solution using the list view.

- Select the Install/Update button.

Please note: this step is optional. Instead of running the solution directly on the MISP server itself, you can choose to run the script in an Azure Function.

- Create an app registration in the same Microsoft tenant where the Sentinel instance resides. The app requires Microsoft Sentinel Contributor assigned on the workspace.

- Create a Key Vault in your Azure subscription.

- Add a new secret with the name "tenants" and the following value (it's possible to add multiple Sentinel instances; the function loops over all occurrences):

  ```json
  [
    {
      "tenantId": "<TENANT_ID_WITH_APP_1>",
      "id": "<APP_ID>",
      "secret": "<APP_SECRET>",
      "workspaceId": "<WORKSPACE_ID>"
    },
    {
      "tenantId": "<TENANT_ID_WITH_APP_N>",
      "id": "<APP_ID>",
      "secret": "<APP_SECRET_N>",
      "workspaceId": "<WORKSPACE_ID_N>"
    }
  ]
  ```

- Add a new secret with the name "mispkey" and the value of your MISP API key.

- Create an Azure Function in your Azure subscription, this needs to be a Linux based Python 3.9 function.

- Modify config.py to your needs (event filter).

- Upload the code to your Azure Function.

  - If you are using VS Code, this can be done by clicking the Azure Function folder and selecting "Deploy to Function App", provided you have the Azure Functions extension installed.

  - If using PowerShell, you can upload the ZIP file using the following command:

    ```powershell
    Publish-AzWebapp -ResourceGroupName <resourcegroupname> -Name <functionappname> -ArchivePath <path to zip file> -Force
    ```

    If you want to make changes to the ZIP file, simply zip the contents of the `AzureFunction` folder (minus any `.venv` folder you might have created) and upload that.

  - If using the Azure CLI, you can upload the ZIP file using the following command:

    ```shell
    az functionapp deployment source config-zip --resource-group <resourcegroupname> --name <functionappname> --src <path to zip file>
    ```

  - You can also use the `WEBSITE_RUN_FROM_PACKAGE` configuration setting, which allows you to upload the ZIP file to a storage account (or GitHub repository) and have the Azure Function run from there. This is useful if you want to use a CI/CD pipeline to deploy the Azure Function: you just update the ZIP file and the Azure Function updates automatically.

- Add a "New application setting" (environment variable) to your Azure Function named `tenants`. Create a reference to the key vault previously created (`@Microsoft.KeyVault(SecretUri=https://<keyvaultname>.vault.azure.net/secrets/tenants/)`).

- Do the same for the `mispkey` secret (`@Microsoft.KeyVault(SecretUri=https://<keyvaultname>.vault.azure.net/secrets/mispkey/)`).

- Add a "New application setting" (environment variable) called `mispurl` and set it to the URL of your MISP server (`https://<mispurl>`).

- Add a "New application setting" (environment variable) `timerTriggerSchedule` and set it to the schedule on which the function should run. If you're running against multiple tenants with a big filter, set it to run once every two hours or so.

  - `timerTriggerSchedule` takes a cron expression. For more information, see Timer trigger for Azure Functions.

  - Cron expression to run once every two hours: `0 */2 * * *`

For more in-depth guidance, check out the INSTALL.MD guidance, or read Use Update Indicators API to push Threat Intelligence from MISP to Microsoft Sentinel.
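The `tenants` secret is a JSON list, and the function loops over every entry in it. The loop can be sketched as below, assuming the secret reaches the function as the `tenants` environment variable (through the Key Vault reference) and that each entry is turned into a dictionary shaped like `ms_auth` in `config.py`. `load_tenants` and `to_ms_auth` are hypothetical helper names, not functions from the repository, and the field mapping is an assumption.

```python
import json
import os

# Keys expected in every entry of the "tenants" secret (see the JSON example above).
REQUIRED_KEYS = ("tenantId", "id", "secret", "workspaceId")


def load_tenants(raw: str) -> list:
    """Parse the JSON list stored in the "tenants" secret (hypothetical helper)."""
    tenants = json.loads(raw)
    if not isinstance(tenants, list):
        raise ValueError("the tenants secret must be a JSON list")
    for entry in tenants:
        missing = [key for key in REQUIRED_KEYS if key not in entry]
        if missing:
            raise ValueError(f"tenant entry is missing keys: {missing}")
    return tenants


def to_ms_auth(entry: dict) -> dict:
    """Map one tenant entry onto an ms_auth-style dict (assumed mapping)."""
    return {
        "tenant": entry["tenantId"],
        "client_id": entry["id"],
        "client_secret": entry["secret"],
        "graph_api": False,  # assumption: the Function uses the Upload Indicators API
        "scope": "https://management.azure.com/.default",
        "workspace_id": entry["workspaceId"],
    }


if __name__ == "__main__":
    # In the Azure Function the value comes from the "tenants" application setting.
    for tenant in load_tenants(os.environ.get("tenants", "[]")):
        ms_auth = to_ms_auth(tenant)
        print("Would sync workspace", ms_auth["workspace_id"])
```

Validating every entry up front means a typo in one tenant fails loudly before any upload starts, rather than halfway through the loop.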

The MISP2Sentinel integration requires access to the MISP REST API, and you need an API key to access it.

Create the key under Global Actions, My Profile and then choose Auth keys. Add a new key. The key can be set to read-only as the integration does not alter MISP data.

You then need Python3, a Python virtual environment and PyMISP.

- Verify you have `python3` installed on your system.

- Download the repository: `git clone https://github.com/cudeso/misp2sentinel.git`

- Go to the directory: `cd misp2sentinel`

- Create a virtual environment with `virtualenv sentinel` and activate it with `source sentinel/bin/activate`.

- Install the Python dependencies: `pip install -r requirements.txt`

The configuration is handled in the config.py file.

By default, `config.py` uses Azure Key Vault if it is configured: if you set the environment variable `key_vault_name` to the name of the Azure Key Vault you have deployed, that Key Vault becomes the default store for all secret and configuration values.

If you do not set this value, `config.py` falls back to environment variables and, lastly, to values written directly in `config.py`.
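The lookup order (Key Vault, then environment variables, then the value in `config.py`) can be sketched as follows. The Key Vault access is passed in as a plain callable so the sketch runs without Azure; `get_setting` and `secret_getter` are hypothetical names, and the real `config.py` may be structured differently.

```python
import os
from typing import Callable, Optional


def get_setting(
    name: str,
    default: Optional[str] = None,
    secret_getter: Optional[Callable[[str], str]] = None,
) -> Optional[str]:
    """Resolve a setting: Key Vault first, then environment, then the default."""
    # Key Vault is only consulted when key_vault_name is set in the environment.
    if os.environ.get("key_vault_name") and secret_getter is not None:
        try:
            return secret_getter(name)
        except KeyError:
            pass  # secret not present in the vault: fall through
    # Fall back to an environment variable with the same name.
    if name in os.environ:
        return os.environ[name]
    # Lastly, use the value written directly in config.py.
    return default
```

In practice `secret_getter` could wrap `SecretClient.get_secret(name).value` from `azure-keyvault-secrets`. The Azure SDK raises `ResourceNotFoundError` rather than `KeyError` for a missing secret, and Key Vault secret names only allow letters, digits and dashes, so a real implementation needs to adapt the exception handling and the name mapping.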

Guidance for assigning a Managed Service Identity to a Function App

Assigning your function app permissions to Azure Key Vault. Note: you only need to assign the "Secret GET" permission to your function app's managed service identity.

First define the Microsoft authentication settings in the dictionary `ms_auth`. The `tenant` (Directory ID), `client_id` (Application client ID), and `client_secret` (client secret value) are the values you obtained when setting up the Azure App. You can then choose between the Graph API or the recommended Upload Indicators API. To use the former, set `graph_api` to True and choose as scope 'https://graph.microsoft.com/.default'. To use the Upload Indicators API, set `graph_api` to False, choose as scope 'https://management.azure.com/.default' and set the workspace ID in `workspace_id`.

```python
ms_auth = {
    'tenant': '<tenant>',
    'client_id': '<client_id>',
    'client_secret': '<client_secret>',
    'graph_api': False,  # Set to False to use Upload Indicators API
    #'scope': 'https://graph.microsoft.com/.default',  # Scope for GraphAPI
    'scope': 'https://management.azure.com/.default',  # Scope for Upload Indicators API
    'workspace_id': '<workspace_id>'
}
```
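The `tenant`, `client_id`, `client_secret` and `scope` values are the inputs of an OAuth 2.0 client-credentials token request. A sketch that only builds such a request (no network call), assuming the Microsoft identity platform v2.0 token endpoint; `build_token_request` is a hypothetical helper and the integration may request its token differently.

```python
from urllib.parse import urlencode


def build_token_request(ms_auth: dict) -> tuple:
    """Return (url, form_body) for an OAuth 2.0 client-credentials token request."""
    url = f"https://login.microsoftonline.com/{ms_auth['tenant']}/oauth2/v2.0/token"
    form = {
        "client_id": ms_auth["client_id"],
        "client_secret": ms_auth["client_secret"],
        "scope": ms_auth["scope"],  # Graph or management scope, see above
        "grant_type": "client_credentials",
    }
    # The body is sent as application/x-www-form-urlencoded.
    return url, urlencode(form)
```

On success the JSON response contains an `access_token`; on failure it contains `error` and `error_description` instead, so code that reads `response["access_token"]` without checking is a plausible source of `KeyError: 'access_token'`.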

Next there are settings that influence the interaction with the Microsoft Sentinel APIs.

The settings for the Graph API are

- `ms_passiveonly = False`: Determines if the indicator should trigger an event that is visible to an end user.
  - When set to `True`, security tools will not notify the end user that a 'hit' has occurred. This is most often treated as audit or silent mode by security products, where they simply log that a match occurred but do not perform the action. This setting no longer exists in the Upload Indicators API.
- `ms_action = 'alert'`: The action to apply if the indicator is matched from within the targetProduct security tool.
  - Possible values are: unknown, allow, block, alert. This setting no longer exists in the Upload Indicators API.

The settings for the Upload Indicators API are

- `ms_api_version = "2022-07-01"`: The API version. Leave this set to "2022-07-01".
- `ms_max_indicators_request = 100`: Throttling limit for the API: the maximum number of indicators that can be sent per request. Max. 100.
- `ms_max_requests_minute = 100`: Throttling limit for the API: the maximum number of requests per minute. Max. 100.

```python
ms_passiveonly = False  # Graph API only
ms_action = 'alert'  # Graph API only
ms_api_version = "2022-07-01"  # Upload Indicators API version
ms_max_indicators_request = 100  # Upload Indicators API: Throttle max: 100 indicators per request
ms_max_requests_minute = 100  # Upload Indicators API: Throttle max: 100 requests per minute
```
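One way a client can stay under `ms_max_requests_minute` is a sliding one-minute window. A minimal sketch, with an injectable clock and sleep function so it can be tested without waiting; `RequestThrottle` is a hypothetical class, and the integration's own `RequestManager` may throttle differently.

```python
import time
from collections import deque
from typing import Callable


class RequestThrottle:
    """Allow at most `max_requests` calls per `window` seconds (sliding window)."""

    def __init__(self, max_requests: int = 100, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.max_requests = max_requests
        self.window = window
        self.clock = clock
        self.sleep = sleep
        self.sent = deque()  # timestamps of requests inside the current window

    def wait(self) -> float:
        """Block until another request is allowed; return the seconds waited."""
        now = self.clock()
        # Forget requests that have left the window.
        while self.sent and now - self.sent[0] >= self.window:
            self.sent.popleft()
        waited = 0.0
        if len(self.sent) >= self.max_requests:
            # Sleep until the oldest request in the window expires.
            waited = self.window - (now - self.sent[0])
            self.sleep(waited)
            now = self.clock()
            self.sent.popleft()
        self.sent.append(now)
        return waited
```

Calling `wait()` before each POST keeps the request rate within the limit. With the defaults (100 indicators per request, 100 requests per minute), a run can push at most 10,000 indicators per minute.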

Set `misp_key` to your MISP API key and `misp_domain` to the URL of your MISP server. You can also specify with `misp_verifycert` whether the script should validate the certificate of the MISP instance (usually relevant for self-signed certificates).

```python
misp_key = '<misp_api_key>'
misp_domain = '<misp_url>'
misp_verifycert = False
```

By default `misp_verifycert` is False; however, its value is determined by the environment variable "local_mode" (see `config.py`).

The dictionary `misp_event_filters` defines which filters you want to pass on to MISP. This applies to both the Graph API and the Upload Indicators API. The suggested settings are:

- `"published": 1`: Only include events that are published.
- `"tags": [ "workflow:state=\"complete\""]`: Only include events with the workflow state 'complete'.
- `"enforceWarninglist": True`: Skip indicators that match an entry in a warninglist. This is highly recommended, but obviously also depends on whether you have enabled MISP warninglists.
- `"includeEventTags": True`: Include the tags from events for additional context.
- `"publish_timestamp": "14d"`: Include events published in the last 14 days.

There's one commonly used MISP filter that has no impact on this integration: to_ids. In MISP, to_ids defines whether an indicator is actionable. Unfortunately the REST API only supports the to_ids filter when querying for attributes, and this integration queries for events. Does this mean that indicators with to_ids set to False are uploaded? No. In the Graph API version, only attributes with to_ids set to True are used. The Upload Indicators API relies on the MISP-STIX conversion of attributes (and objects). This conversion checks the to_ids flag for indicators, the only exception being attributes that are part of an object (also see #48).

```python
misp_event_filters = {
    "published": 1,
    "tags": [ "workflow:state=\"complete\""],
    "enforceWarninglist": True,
    "includeEventTags": True,
    "publish_timestamp": "14d",
}
```

There's one additional setting for the Upload Indicators API and that's misp_event_limit_per_page. This setting defines how many events per search query are processed. Use this setting to limit the memory usage of the integration.

```python
misp_event_limit_per_page = 50
```
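Paging with `misp_event_limit_per_page` can be sketched as a generator that keeps requesting pages until MISP returns an empty one, so only one page of events is held in memory at a time. `search` is any callable accepting the keyword arguments of PyMISP's `search()` (with PyMISP it would be `misp.search`); `iter_misp_events` is a hypothetical name, not the integration's function.

```python
from typing import Callable, Iterator


def iter_misp_events(search: Callable[..., list], filters: dict,
                     limit_per_page: int = 50) -> Iterator[dict]:
    """Yield MISP events page by page until an empty page is returned."""
    if "limit" in filters:
        # A limit in the filters means the events are queried in one go.
        yield from search(controller="events", return_format="json", **filters)
        return
    page = 1
    while True:
        events = search(controller="events", return_format="json",
                        limit=limit_per_page, page=page, **filters)
        if not events:
            break  # the API returns no page count, so stop on an empty page
        yield from events
        page += 1
```

Usage with PyMISP might look like `iter_misp_events(misp.search, config.misp_event_filters, config.misp_event_limit_per_page)`, where `misp` is a `PyMISP` instance.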

To avoid saving secrets in cleartext, you can integrate with an Azure Key Vault.

- Enable a managed identity for the virtual machine.

- Create an Azure Key Vault.

- Create the secrets `MISP-Key` and `ClientSecret` in the Secrets tab.

- Give the virtual machine's managed identity the `Reader` role on the Azure Key Vault.

- Give the same managed identity the `Get` and `List` secret actions in the Access Policy.

- Make sure to run the installation of `requirements.txt` again, as this requires two new libraries:
  - azure-keyvault-secrets
  - azure-identity

The rest of the configuration is done in config.py:

```python
# Code for supporting storage of secrets in key vault (only works for VMs running on Azure)
import os
from azure.keyvault.secrets import SecretClient
from azure.identity import DefaultAzureCredential

# Key vault section
# Key Vault name must be a globally unique DNS name
keyVaultName = "<unique-name>"
KVUri = f"https://{keyVaultName}.vault.azure.net"

# Log in with the virtual machine's managed identity
credential = DefaultAzureCredential()
client = SecretClient(vault_url=KVUri, credential=credential)

# Retrieve values from KV (client secret, MISP-key most importantly)
retrieved_mispkey = client.get_secret('MISP-Key')
retrieved_clientsecret = client.get_secret('ClientSecret')
```

- First, uncomment the lines in `config.py` so they match the above.

- Make sure the variable `keyVaultName` is set to the name of the key vault you set up earlier (this is also a good time to double-check that the managed identity has the correct access to the Key Vault).

- Replace the value of the `misp_key` variable: `misp_key = retrieved_mispkey.value`

- Replace the value of the `client_secret` variable in the `ms_auth` dictionary: `'client_secret': retrieved_clientsecret.value`

The remainder of the settings deal with how the integration is handled.

Ignore local tags and network destination

These settings only apply for the Graph API:

- `ignore_localtags = True`: When converting tags from MISP to Sentinel, ignore the MISP local tags (this applies to tags on event and attribute level).
- `network_ignore_direction = True`: When set to true, not only store the indicator in the "Source/Destination" field of Sentinel (`networkDestinationIPv4`, `networkDestinationIPv6` or `networkSourceIPv4`, `networkSourceIPv6`), but also map it to the fields without network context (`networkIPv4`, `networkIPv6`).

```python
ignore_localtags = True
network_ignore_direction = True  # Graph API only
```

Indicator confidence level

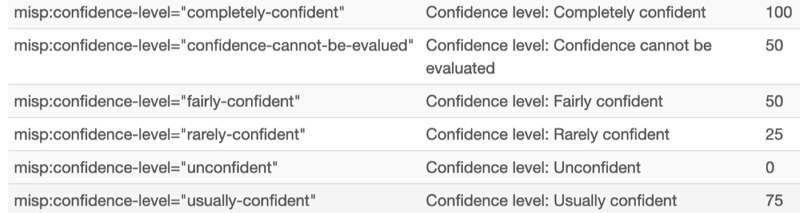

- `default_confidence = 50`: The default confidence level of indicators. This is a value between 0 and 100 and is used by both the Graph API and Upload Indicators API. You can set a confidence level per indicator, but if you don't set one then this default value is used.
  - This value is overridden when an attribute is tagged with the MISP confidence level (MISP taxonomy). The tag is translated to a numerical confidence value (defined in `MISP_CONFIDENCE` in `constants.py`). It's possible to have more fine-grained confidence levels by adjusting the MISP taxonomy and simply adding entries to the predicate 'confidence-level'.

```python
default_confidence = 50  # Sentinel default confidence level of indicator
```
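How a confidence-level tag overrides `default_confidence` can be sketched as a dictionary lookup. The numeric values in `EXAMPLE_CONFIDENCE` are illustrative assumptions, not a copy of `MISP_CONFIDENCE` in `constants.py` (check that file for the values the integration actually uses); `resolve_confidence` is a hypothetical helper.

```python
DEFAULT_CONFIDENCE = 50  # mirrors default_confidence in config.py

# Illustrative mapping only; the real values live in MISP_CONFIDENCE (constants.py).
EXAMPLE_CONFIDENCE = {
    'misp:confidence-level="completely-confident"': 100,
    'misp:confidence-level="usually-confident"': 75,
    'misp:confidence-level="fairly-confident"': 50,
    'misp:confidence-level="rarely-confident"': 25,
    'misp:confidence-level="unconfident"': 0,
}


def resolve_confidence(attribute_tags: list, mapping: dict = EXAMPLE_CONFIDENCE,
                       default: int = DEFAULT_CONFIDENCE) -> int:
    """Return the value of the first known confidence-level tag, else the default."""
    for tag in attribute_tags:
        if tag in mapping:
            return mapping[tag]
    return default
```

Adding entries to the 'confidence-level' predicate in the taxonomy corresponds to adding keys to such a mapping.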

Days to expire indicator

These settings apply to both the Graph API and Upload Indicators API.

`days_to_expire = 50`: The default number of days after which an indicator in Sentinel expires.

For the Graph API the date is calculated based on the timestamp when the script is executed. The expiration of indicators works slightly differently for the Upload Indicators API. There are two additional settings that apply to this API:

- `days_to_expire_start = "current_date"`: Define whether you want to start counting the expiration date (defined in `days_to_expire`) from the current date (by using the value `current_date`) or from the value specified by MISP with `valid_from`.
- `days_to_expire_mapping`: A dictionary mapping specific expiration periods to indicators (STIX patterns). The numerical value is in days. This value overrides `days_to_expire`.

```python
days_to_expire = 50  # Graph API and Upload Indicators
days_to_expire_start = "current_date"  # Upload Indicators API only. Start counting from "valid_from" | "current_date"
days_to_expire_mapping = {  # Upload indicators API only. Mapping for expiration of specific indicator types
    "ipv4-addr": 150,
    "ipv6-addr": 150,
    "domain-name": 300,
    "url": 400,
}
```

In MISP you can set the first seen and last seen value of attributes. In the MISP-STIX conversion, the last seen value is translated to `valid_until`, which influences the expiration date of the indicator: if the expiration date calculated with the above values lies beyond `valid_until`, the indicator is ignored. In some cases it can be useful to ignore the last seen value set in MISP and just use your own calculation of the expiration date. You can do this with `days_to_expire_ignore_misp_last_seen`, which ignores the last seen value and calculates the expiration date based on `days_to_expire` (and `days_to_expire_mapping`).

Script output

This version of MISP2Sentinel writes its output to a log file (defined in log_file).

If you're using the Graph API, you can output the POST JSON to a log file with `write_post_json = True`. A similar option exists for the Upload Indicators API: with `write_parsed_indicators = True` it outputs the parsed value of the indicators to a local file.

With `verbose_log = True` you can increase the verbosity of the log output.

```python
log_file = "/tmp/misp2sentinel.log"
write_post_json = False  # Graph API only
verbose_log = False
write_parsed_indicators = False  # Upload Indicators only
```

It is best to run the integration from the cron of user www-data.

```
# Sentinel
00 5 * * * cd /home/misp/misp2sentinel/ ; /home/misp/misp2sentinel/venv/bin/python /home/misp/misp2sentinel/script.py
```

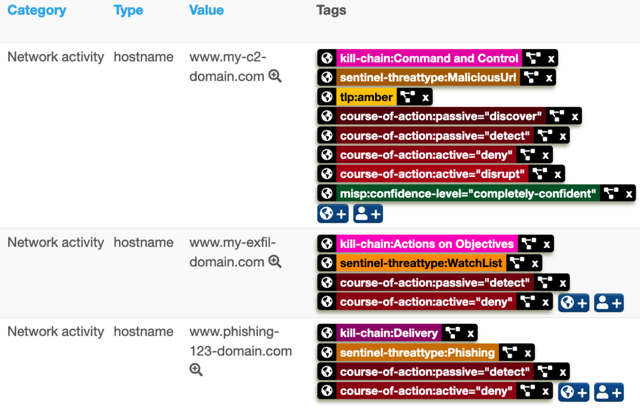

To make the most of the Sentinel integration you have to enable these MISP taxonomies:

- MISP taxonomy

- sentinel-threattype

- kill-chain

- diamond-model (only used with Graph API)

These taxonomies are used to provide additional context to the synchronised indicators and are not strictly necessary for the integration to work. However, they provide useful information for Sentinel users to understand what the threat is about and which follow-up actions need to be taken.

The attack patterns (TTPType and others) are not yet implemented by Microsoft. This means that information from Galaxies and Clusters (such as those from MITRE) added to events or attributes is not included in the synchronisation. Once there is full STIX support from Microsoft, these attack patterns will be imported.

The "Created by" field refers to the UUID of the organisation that created the event in MISP. The identity concept is not yet implemented on the Sentinel side. It is in the STIX export from MISP but as identity objects are not yet created in Sentinel, the reference is only a textual link to the MISP organisation UUID.

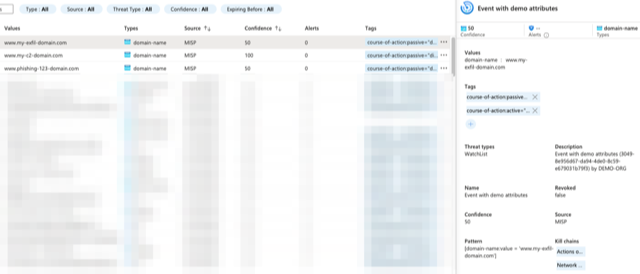

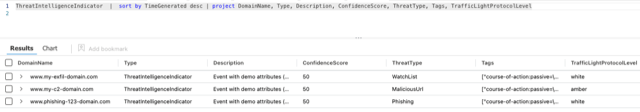

The numerical values of the confidence-level tags (MISP taxonomy) are translated to the indicator confidence level.

You can identify the Sentinel threat type on event and attribute level with the taxonomy sentinel-threattype. The Graph API translates the tags from the sentinel-threattype taxonomy to the Sentinel values for threattype. In STIX (and thus also for the Upload Indicators API) there is no sentinel-threattype. In this case the integration translates the indicators to indicator_types, which the Sentinel interface then represents under Threat type.

The Kill Chain tags are translated by the Graph API to the Kill Chain values of Microsoft. Note that Sentinel uses C2 and Actions instead of "Command and Control" and "Actions on Objectives". The Upload Indicators API translates them to the STIX Kill Chain entities. In addition, the integration for the Upload Indicators API will also translate the MISP category into a Kill Chain.

The TLP (Traffic Light Protocol) tags of an event and attribute are translated to the STIX markers. If there's a TLP set on the attribute level, it takes precedence. If no TLP is set (on event or attribute), then tlp-white is applied (set via `SENTINEL_DEFAULT_TLP`).
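The precedence rule (attribute TLP over event TLP, with a default when neither is set) fits in a few lines. A minimal sketch, assuming tags are plain strings such as `tlp:amber` and that the default is the `tlp:white` tag; `first_tlp` and `pick_tlp` are hypothetical helpers, not the integration's code.

```python
SENTINEL_DEFAULT_TLP = "tlp:white"  # assumed tag form of the default described above


def first_tlp(tags: list):
    """Return the first TLP tag (lower-cased) in a list of tag names, or None."""
    for tag in tags:
        if tag.lower().startswith("tlp:"):
            return tag.lower()
    return None


def pick_tlp(event_tags: list, attribute_tags: list,
             default: str = SENTINEL_DEFAULT_TLP) -> str:
    """Attribute-level TLP wins, then event-level TLP, then the default."""
    return first_tlp(attribute_tags) or first_tlp(event_tags) or default
```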

The Graph API translates the tags from the diamond-model taxonomy to Sentinel properties for diamondModel. The Diamond model is not used by the Upload Indicators API.

The Graph API translates MISP attributes of type threat-actor to Sentinel properties for activityGroupNames. MISP attribute comments are added to the Sentinel description. This is not used by the Upload Indicators API. Future versions of the Microsoft API will support attack patterns etc.

Only indicators with the pattern type stix are used; as such, attributes of type yara or sigma are not synchronised.

For the Upload Indicators API:

- If the attribute type is in `days_to_expire_mapping`, use the days defined in the mapping.
- If there is no mapping, use the default `days_to_expire`.
- Start counting from today if `days_to_expire_start` is "current_date" (otherwise from the "valid_from" time).
- If the end count date is beyond the date set in "valid_until", discard the indicator.

The `valid_until` value is set in MISP with the last_seen of an attribute. Depending on your use case, you might want to ignore the last_seen of an attribute, and consequently ignore the `valid_until` value. Do this by setting the configuration option `days_to_expire_ignore_misp_last_seen` to True.

```python
days_to_expire_ignore_misp_last_seen = True
```
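The expiration rules above can be written as a small function. A sketch, assuming `indicator_type` is the key used in `days_to_expire_mapping` and that all datetimes are timezone-aware; `compute_valid_until` is a hypothetical name, and it returns `None` when the indicator would be discarded.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

days_to_expire = 50
days_to_expire_start = "current_date"  # or "valid_from"
days_to_expire_mapping = {"ipv4-addr": 150, "ipv6-addr": 150, "domain-name": 300, "url": 400}
days_to_expire_ignore_misp_last_seen = False


def compute_valid_until(indicator_type: str, valid_from: datetime,
                        misp_valid_until: Optional[datetime] = None,
                        now: Optional[datetime] = None) -> Optional[datetime]:
    """Return the expiration date for an indicator, or None to discard it."""
    now = now or datetime.now(timezone.utc)
    # 1. A type-specific mapping wins over the global default.
    days = days_to_expire_mapping.get(indicator_type, days_to_expire)
    # 2. Start counting from today or from valid_from.
    start = now if days_to_expire_start == "current_date" else valid_from
    expiration = start + timedelta(days=days)
    # 3. Respect MISP's last seen (valid_until) unless told to ignore it.
    if misp_valid_until is not None and not days_to_expire_ignore_misp_last_seen:
        if expiration > misp_valid_until:
            return None  # beyond valid_until: discard the indicator
    return expiration
```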

The attribute type mappings are defined in `constants.py`.

```python
ATTR_MAPPING = {
    'AS': 'networkSourceAsn',
    'email-dst': 'emailRecipient',
    'email-src-display-name': 'emailSenderName',
    'email-subject': 'emailSubject',
    'email-x-mailer': 'emailXMailer',
    'filename': 'fileName',
    'malware-type': 'malwareFamilyNames',
    'mutex': 'fileMutexName',
    'port': 'networkPort',
    'published': 'isActive',
    'size-in-bytes': 'fileSize',
    'url': 'url',
    'user-agent': 'userAgent',
    'uuid': 'externalId',
    'domain': 'domainName',
    'hostname': 'domainName'
}
```

There are also special cases covered in other sections of the Python code.

```python
MISP_SPECIAL_CASE_TYPES = frozenset([
    *MISP_HASH_TYPES,
    'url',
    'ip-dst',
    'ip-src',
    'domain|ip',
    'email-src',
    'ip-dst|port',
    'ip-src|port'
])
```

- ip-dst and ip-src
  - Mapped to either `networkDestinationIPv4`, `networkDestinationIPv6` or `networkSourceIPv4`, `networkSourceIPv6`.
  - If the configuration value `network_ignore_direction` is set to true, then the indicator is also mapped to `networkIPv4`, `networkIPv6`.
- domain|ip
  - Mapped to a domain (`domainName`) and an IP without a specification of a direction (`networkIPv4`, `networkIPv6`).
- email-src
  - Mapped to a sender address (`emailSenderAddress`).
  - And to a source domain (`emailSourceDomain`).
- ip-dst|port and ip-src|port
  - Mapped to either `networkDestinationIPv4`, `networkDestinationIPv6` or `networkSourceIPv4`, `networkSourceIPv6`.
  - If the configuration value `network_ignore_direction` is set to true, then the indicator is also mapped to `networkIPv4`, `networkIPv6`.
  - The port is mapped to `networkSourcePort`, `networkDestinationPort`.
  - If the configuration value `network_ignore_direction` is set to true, then the port is also mapped to `networkPort`.
- url
  - MISP URL values that do not start with http or https are changed to start with http. Azure does not accept URLs that do not start with http.

The supported hashes are defined in the set MISP_HASH_TYPES.
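For the Graph API, the special cases above amount to a dispatch on the MISP type. A sketch covering the IP, domain|ip, email-src, ip|port and url cases, assuming the Graph field names listed above and the `network_ignore_direction` setting. `map_special_case` is hypothetical, prefixing `http://` is an assumed way of "changing a URL to start with http", and the integration's own code may differ in detail.

```python
import ipaddress


def _ip_fields(ip: str, direction: str, ignore_direction: bool) -> dict:
    """Map an IP to the directional Graph field, plus the generic one if needed."""
    version = ipaddress.ip_address(ip).version  # 4 or 6
    fields = {}
    if direction:
        fields[f"network{direction}IPv{version}"] = ip
    if ignore_direction or not direction:
        fields[f"networkIPv{version}"] = ip
    return fields


def map_special_case(misp_type: str, value: str, ignore_direction: bool = True) -> dict:
    """Return Graph API indicator fields for a MISP special-case type (sketch)."""
    direction = "Source" if misp_type.startswith("ip-src") else "Destination"
    if misp_type in ("ip-dst", "ip-src"):
        return _ip_fields(value, direction, ignore_direction)
    if misp_type in ("ip-dst|port", "ip-src|port"):
        ip, port = value.rsplit("|", 1)
        fields = _ip_fields(ip, direction, ignore_direction)
        fields[f"network{direction}Port"] = int(port)
        if ignore_direction:
            fields["networkPort"] = int(port)
        return fields
    if misp_type == "domain|ip":
        domain, ip = value.split("|", 1)
        # The IP part carries no direction, so only the generic field is used.
        return {"domainName": domain, **_ip_fields(ip, "", ignore_direction)}
    if misp_type == "email-src":
        return {"emailSenderAddress": value,
                "emailSourceDomain": value.rsplit("@", 1)[-1]}
    if misp_type == "url":
        # Azure only accepts URLs starting with http (this also covers https).
        return {"url": value if value.startswith("http") else f"http://{value}"}
    raise ValueError(f"not a special-case type: {misp_type}")
```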

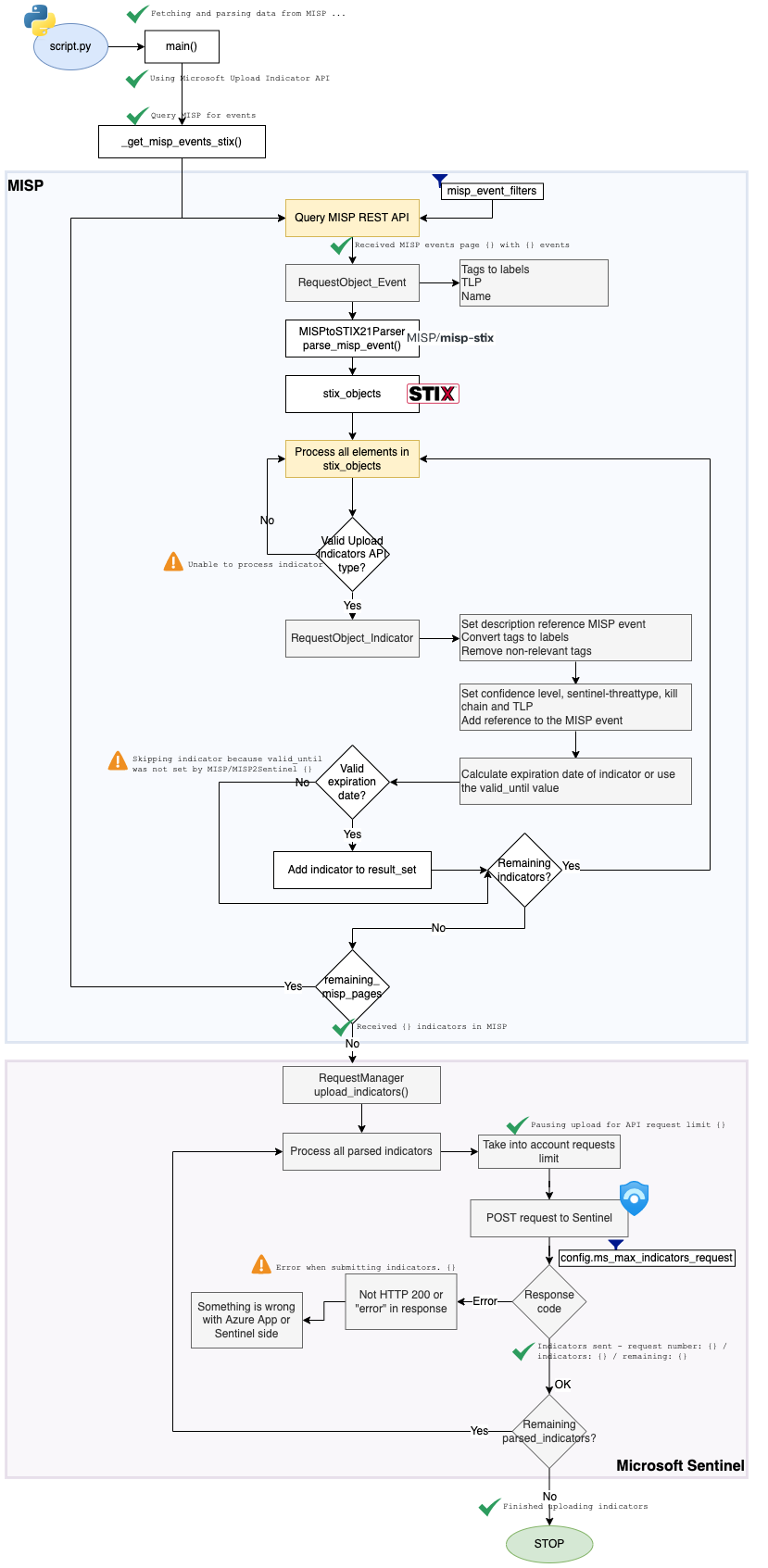

The integration workflow is as follows:

- `script.py`
  - The `main` function starts the request for the MISP events via `_get_misp_events_stix()`.
  - In `_get_misp_events_stix()` the MISP REST API is queried for events matching your search queries (defined in `misp_event_filters`).
    - This REST API returns a paged result in JSON format.
    - DEBUG: If you get the message `Received MISP events page {} with {} events` in the log, then the script was successful in querying MISP events.
    - The function loops through the events, as long as the result set is not empty.
      - If it is empty, then `remaining_misp_pages` is set to False, exiting the loop.
      - If you provided a limit in `misp_event_filters`, then events are queried in one go.
      - Note that the MISP REST API supports returning STIX2 format, but at the time of development the API didn't handle requests for non-existing pages (pages with a result set of 0 events; the API does not return the number of pages, so you have to query until the result set is 0).
        - This was raised as a bug report with issue 44 in misp-stix and solved with MISP/MISP@18fd906.
        - On the roadmap with issue 52.
  - In `RequestObject_Event` the event tags are converted to tags, the event name is set and a TLP is set.
  - For each event, it parses and converts the JSON to STIX with `MISPtoSTIX21Parser` and its function `parse_misp_event()` (this is part of misp-stix).
    - This conversion returns `stix_objects`. If the conversion returns errors, the script continues, because the upload should go ahead even if the conversion of one event fails.
      - DEBUG: You can track these errors with the message `Error when processing data in event {} from MISP {}`.
  - It then loops through the STIX objects.
    - Only valid types for uploading to the Upload Indicators API are considered.
      - An invalid type is ignored and processing continues.
      - For example, indicators of type YARA are ignored, but other indicators in the event are still processed.
    - For each indicator, the class `RequestObject_Indicator` is instantiated. This:
      - Sets the description to refer to the event.
      - Converts the MISP tags to labels, removing some of the non-relevant tags.
      - Adds the confidence level.
      - Sets the sentinel-threattype, kill chain and TLP labels.
      - Adds a reference to the MISP event.
      - Sets the expiration date of the attribute.
    - The indicator is only considered if it has a valid expiration date (either calculated or set in the threat event).
      - Errors are logged with `Skipping indicator because valid_until was not set by MISP/MISP2Sentinel {}`.
    - Valid indicators are added to the list `result_set`.
  - When all indicators are processed, it logs `Processed {} indicators` (if debug is set to True).
  - When all remaining pages with results are processed, it logs `Received {} indicators in MISP`.
  - Then `upload_indicators()` from `RequestManager` is called.
    - At this stage the script starts interacting with Microsoft Sentinel. All actions before this step are "local", related to MISP.
    - It takes the upload limits into account. If it needs to wait, a message is logged with `Pausing upload for API request limit {}`.
    - It starts processing all `processed_indicators`.
      - Uploads are done in batches of `config.ms_max_indicators_request` indicators.
      - A POST request is sent to Microsoft Sentinel.
      - If the HTTP status code is not 200, or if the "error" key is in the response, then something went wrong. This is logged with `Error when submitting indicators. {}`.
        - An error indicates the Azure App does not have sufficient permissions, or that something on the receiving (Sentinel) side is not OK.
      - If the request was successful, it logs this with `Indicators sent - request number: {} / indicators: {} / remaining: {}`.
    - When it's done, it logs `Finished uploading indicators`.
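The upload step can be sketched as a loop over batches with the same error test described above (a status code other than 200, or an `"error"` key in the response). A minimal sketch, assuming `post` is a callable that sends one batch and returns a `(status_code, json_body)` pair; `upload_in_batches` is hypothetical, makes no real HTTP request, and leaves out the rate limiting on `ms_max_requests_minute` to stay short.

```python
import logging
from typing import Callable, List, Tuple

log = logging.getLogger("misp2sentinel-sketch")


def upload_in_batches(indicators: List[dict],
                      post: Callable[[List[dict]], Tuple[int, dict]],
                      batch_size: int = 100) -> int:
    """Send indicators in batches; return the number of failed requests."""
    failures = 0
    for request_number, start in enumerate(range(0, len(indicators), batch_size), 1):
        batch = indicators[start:start + batch_size]
        status, body = post(batch)
        if status != 200 or "error" in body:
            failures += 1
            log.error("Error when submitting indicators. %s", body)
            continue  # keep going with the next batch
        remaining = len(indicators) - start - len(batch)
        log.info("Indicators sent - request number: %s / indicators: %s / remaining: %s",
                 request_number, len(batch), remaining)
    log.info("Finished uploading indicators")
    return failures
```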

- Is the event published?

- Is the to_ids flag set to True?

- Is the indicator stored in a valid attribute type (`UPLOAD_INDICATOR_MISP_ACCEPTED_TYPES`)?

If you are using the new Upload Indicators API, the integration with Sentinel relies on https://github.com/MISP/misp-stix. The MISP attributes and objects are transformed into STIX objects. After that, only the indicators (defined in `UPLOAD_INDICATOR_API_ACCEPTED_TYPES`) are synchronised with Sentinel. As a consequence, if MISP-STIX does not translate a MISP attribute or object into a STIX indicator, its value is not synchronised with Sentinel.

Almost all MISP objects are translated, but there can be situations where a MISP object is not recognised. It is then translated to `x-misp-object` instead of an indicator STIX object, and elements from `x-misp-object` are not synchronised. If you run into this situation, open an issue at https://github.com/MISP/misp-stix. Examples in the past include the hashlookup object.

This Python snippet can help you find out whether elements are correctly translated. Adjust `misp_event_filters` to query only for the event with a non-default object.

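```python
from pymisp import PyMISP
from misp_stix_converter import MISPtoSTIX21Parser

import config  # the integration's config.py

misp = PyMISP(config.misp_domain, config.misp_key, config.misp_verifycert, False)

misp_page = 1
config.misp_event_limit_per_page = 100

# Fetch the first page of events matching misp_event_filters
result = misp.search(controller='events', return_format='json', **config.misp_event_filters,
                     limit=config.misp_event_limit_per_page, page=misp_page)
if not result:
    raise SystemExit("No events match misp_event_filters")
misp_event = result[0]

# Convert the MISP event to STIX 2.1 objects
parser = MISPtoSTIX21Parser()
parser.parse_misp_event(misp_event)
stix_objects = parser.stix_objects

# Print every STIX object type; untranslated objects show up as x-misp-object
for el in stix_objects:
    print(el.type)
    if el.type == 'indicator':
        print(el)
```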

When the option `misp_flatten_attributes` is set to `True`, the script extracts all attributes that are part of MISP objects and adds them as “atomic” attributes. You lose some contextual information when you set this to `True` (although the integration adds a comment to the attribute noting that it used to be part of an object), but you are then sure that all attributes that can be translated to STIX indicators are synchronised.
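The flattening idea can be sketched on the MISP JSON of a single event (the dict inside the `Event` key). This is a hypothetical helper, not the integration's code: the comment wording is made up, and removing the objects afterwards (so their values are not converted twice) is an assumption about how duplicates are avoided.

```python
import copy


def flatten_object_attributes(event: dict) -> dict:
    """Return a copy of a MISP event (JSON dict) with object attributes moved to event level."""
    flat = copy.deepcopy(event)
    attributes = flat.setdefault("Attribute", [])
    for misp_object in flat.get("Object", []):
        for attribute in misp_object.get("Attribute", []):
            # Keep a trace of the object the attribute came from (hypothetical wording)
            note = f"Part of MISP object {misp_object.get('name', 'unknown')}"
            attribute["comment"] = f"{attribute.get('comment', '')} {note}".strip()
            attributes.append(attribute)
    # Assumption: drop the objects so their values are not converted a second time
    flat["Object"] = []
    return flat
```

Working on a deep copy leaves the event returned by PyMISP untouched.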

When you use the Upload Indicators API, you can save the STIX packages sent to Microsoft Sentinel by setting `write_parsed_indicators` to `True`. This writes all packages to `parsed_indicators.txt`, which is overwritten at each execution of the script.

When you use the Upload Indicators API, you can save the errors returned by Sentinel by setting `sentinel_write_response` to `True`. This writes the responses from Microsoft Sentinel that contain an "error" key to `sentinel_response.txt`.
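Both debugging switches are plain settings; a sketch of the relevant lines in `config.py`, using only the two names described above:

```python
# config.py (excerpt): debugging switches for the Upload Indicators API
write_parsed_indicators = True   # STIX packages sent to Sentinel go to parsed_indicators.txt (overwritten each run)
sentinel_write_response = True   # Sentinel responses containing an "error" key go to sentinel_response.txt
```

Set them back to `False` once you are done troubleshooting.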

With the Upload Indicators API, the conversion to STIX 2 is done with misp-stix. Unfortunately, the current version does not take into account the `to_ids` flag set on attributes in objects. See #48.
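Until this is handled by misp-stix, one possible workaround is to remove such attributes from the event JSON before it is passed to `parse_misp_event()`. This is a hypothetical pre-processing step, not something the integration does; note that it also strips context (for example a filename in a file object) from the converted objects.

```python
def drop_non_ids_object_attributes(event: dict) -> int:
    """Remove object attributes whose to_ids flag is False (in place); return how many were removed."""
    removed = 0
    for misp_object in event.get("Object", []):
        attributes = misp_object.get("Attribute", [])
        kept = [attribute for attribute in attributes if attribute.get("to_ids")]
        removed += len(attributes) - len(kept)
        misp_object["Attribute"] = kept
    return removed
```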

- `tenant` is the Directory ID. Get it by searching for Tenant Properties in Azure.
- `client_id` is the Application client ID. Get it by listing the App Registrations in Azure and using the column Application (client) ID.
- `workspace_id` is the workspace ID. Get it by opening the Log Analytics workspace and copying the Workspace ID from the Essentials overview.
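These values end up in the Azure App settings of `config.py`. The sketch below assumes they are grouped in the `ms_auth` dictionary (which now requires a `scope`); the exact key layout and the scope value are assumptions, so compare with the default configuration shipped with your version.

```python
# config.py (excerpt). Assumed key layout; check the default config of your version.
ms_auth = {
    "tenant": "<Directory (tenant) ID>",               # Tenant Properties in Azure
    "client_id": "<Application (client) ID>",          # App Registrations in Azure
    "client_secret": "<client secret of the Azure App>",
    "scope": "https://management.azure.com/.default",  # assumed scope for the Upload Indicators API
    "workspace_id": "<Workspace ID>",                  # Log Analytics workspace, Essentials overview
}
```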

The blog post Figuring out MISP2Sentinel Event Filters can help you define the `misp_event_filters`. If you want to be more granular with time-based filters, take a look at the MISP playbook Using timestamps in MISP. Lastly, have a look at the MISP OpenAPI specifications for the event search.
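Because the script passes `misp_event_filters` to PyMISP's `search()` as keyword arguments, each key is a search parameter. The values below are an illustrative example, not a recommended or default configuration; the tag in particular is hypothetical.

```python
# config.py (excerpt). Example values only; keys are passed to PyMISP's search() as keyword arguments.
misp_event_filters = {
    "published": True,                      # only published events
    "tags": ['workflow:state="complete"'],  # filter on this (example) tag
    "enforce_warninglist": True,            # leave out values that match a MISP warninglist
    "include_event_tags": True,             # include event tags in the results
    "publish_timestamp": "14d",             # published in the last 14 days
}
```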

| Old | New |
|---|---|
| graph_auth | ms_auth (now requires a 'scope') |
| targetProduct | ms_target_product (Graph API only) |
| action | ms_action (Graph API only) |
| passiveOnly | ms_passiveonly (Graph API only) |
| defaultConfidenceLevel | default_confidence |
| | ms_api_version (Upload indicators) |
| | ms_max_indicators_request (Upload indicators) |
| | ms_max_requests_minute (Upload indicators) |
| | misp_event_limit_per_page (Upload indicators) |
| | days_to_expire_start (Upload indicators) |
| | days_to_expire_mapping (Upload indicators) |
| | days_to_expire_ignore_misp_last_seen (Upload indicators) |
| | log_file (Upload indicators) |
| | misp_remove_eventreports (Upload indicators) |
| | sentinel_write_response (Upload indicators) |

Have a look at `_init_configuration()` for all the details.
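When porting an old configuration, the renamed settings from the table can be expressed as a simple mapping. This is a hypothetical helper for illustration only, not part of misp2sentinel; it treats the old settings as a dictionary and only checks the one new requirement listed in the table (the `scope` in `ms_auth`).

```python
# Hypothetical helper, not part of misp2sentinel: old setting name -> new setting name
RENAMED_SETTINGS = {
    "graph_auth": "ms_auth",
    "targetProduct": "ms_target_product",
    "action": "ms_action",
    "passiveOnly": "ms_passiveonly",
    "defaultConfidenceLevel": "default_confidence",
}


def migrate_settings(old: dict) -> dict:
    """Rename old settings; raise if ms_auth lacks the now-required 'scope'."""
    new = {RENAMED_SETTINGS.get(name, name): value for name, value in old.items()}
    auth = new.get("ms_auth")
    if isinstance(auth, dict) and "scope" not in auth:
        raise ValueError("ms_auth now requires a 'scope' entry")
    return new
```

The new Upload Indicators settings have no old equivalent, so they still have to be added by hand.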

You can control which tags get synchronised with variables in the `constants.py` file.

- `MISP_TAGS_IGNORE`: A list of tags to ignore. This list contains the default tags that are set automatically as "labels" during the conversion from MISP to STIX. You can add your own tags here.

- `MISP_ALLOWED_TAXONOMIES`: The list of allowed taxonomies. If a tag is not part of one of these taxonomies (technically, if it does not start with `<taxonomy>:`, for example `tlp:`), then it is ignored. If you leave the list empty, then all taxonomies / tags are included. For example, use `["tlp", "admiralty-scale", "type"]`.
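A minimal sketch of the two rules above, assuming exact matching for `MISP_TAGS_IGNORE` and a match on the part before the first colon for `MISP_ALLOWED_TAXONOMIES`. `filter_tags` and the example label are hypothetical; the integration's own implementation may differ.

```python
from typing import Iterable, List


def filter_tags(tags: Iterable[str], ignore: Iterable[str], allowed_taxonomies: Iterable[str]) -> List[str]:
    """Drop ignored tags and, if a taxonomy list is given, tags outside those taxonomies."""
    ignore_set = set(ignore)
    allowed = set(allowed_taxonomies)
    kept = []
    for tag in tags:
        if tag in ignore_set:
            continue
        # "tlp:white" -> "tlp"; a free tag such as "malware" has no taxonomy prefix
        if allowed and tag.split(":", 1)[0] not in allowed:
            continue
        kept.append(tag)
    return kept
```

With `allowed_taxonomies=["tlp", "admiralty-scale", "type"]`, the tags `tlp:white` and `type:OSINT` are kept while a free tag like `malware` is dropped; with an empty list, only the ignored tags are removed.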

This error occurs when the client_id, tenant, client_secret or workspace_id are invalid. Check the values in the Azure App.
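The `KeyError` means the token response did not contain an `access_token`. The sketch below builds a standard OAuth 2.0 client credentials request for the Microsoft identity platform and turns a failed response into a readable error. It is not necessarily how the integration requests its token; the helper names are hypothetical.

```python
from typing import Dict, Tuple

# Microsoft identity platform v2.0 token endpoint (client credentials flow)
TOKEN_URL = "https://login.microsoftonline.com/{tenant}/oauth2/v2.0/token"


def build_token_request(tenant: str, client_id: str, client_secret: str,
                        scope: str) -> Tuple[str, Dict[str, str]]:
    """Return the URL and form fields for a client credentials token request."""
    data = {
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": scope,
    }
    return TOKEN_URL.format(tenant=tenant), data


def extract_access_token(body: dict) -> str:
    """Return the token, or raise a readable error instead of KeyError: 'access_token'."""
    if "access_token" not in body:
        # Failed requests return "error" and "error_description" instead of a token
        raise RuntimeError(f"Token request failed: {body.get('error')}: {body.get('error_description')}")
    return body["access_token"]
```

With the `requests` library, `body = requests.post(url, data=data, timeout=30).json()` followed by `extract_access_token(body)` shows the `error_description` returned by Azure, which usually points at the wrong value.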

If the error is followed by the message `Ignoring non STIX pattern type yara`, then there is an indicator type that is not accepted by Sentinel, in this case yara.
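STIX 2.1 indicators carry a `pattern_type` (`stix`, `yara`, `snort`, `sigma`, and so on). A minimal sketch of leaving out the non-STIX ones before upload, assuming the STIX objects are available as dictionaries; the helper name is hypothetical and the log line only mimics the message quoted above.

```python
import logging
from typing import List

log = logging.getLogger("misp2sentinel-sketch")


def drop_non_stix_patterns(stix_objects: List[dict]) -> List[dict]:
    """Drop indicators whose pattern_type is not 'stix'; keep every other object."""
    kept = []
    for obj in stix_objects:
        # pattern_type is required on STIX 2.1 indicators; default to "stix" to be lenient
        pattern_type = obj.get("pattern_type", "stix")
        if obj.get("type") == "indicator" and pattern_type != "stix":
            log.warning("Ignoring non STIX pattern type %s", pattern_type)
            continue
        kept.append(obj)
    return kept
```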

- https://www.vanimpe.eu/2022/04/20/misp-and-microsoft-sentinel/

- https://techcommunity.microsoft.com/t5/microsoft-sentinel-blog/integrating-open-source-threat-feeds-with-misp-and-sentinel/ba-p/1350371

- https://learn.microsoft.com/en-us/graph/api/tiindicators-list?view=graph-rest-beta&tabs=http

- Microsoft Graph Security Documentation

- Microsoft Graph Explorer

- https://github.com/cudeso/misp2sentinel/blob/main/docs/INSTALL.MD

- https://www.infernux.no/MicrosoftSentinel-MISP2SentinelUpdate/

- Microsoft code samples

- MISP to Microsoft Graph Security connector