This repository contains the implementation of the ICCV2023 paper:

DiffGuard: Semantic Mismatch-Guided Out-of-Distribution Detection using Pre-trained Diffusion Models

Ruiyuan Gao†, Chenchen Zhao†, Lanqing Hong‡, Qiang Xu†

†The Chinese University of Hong Kong, ‡Huawei Noah’s Ark Lab

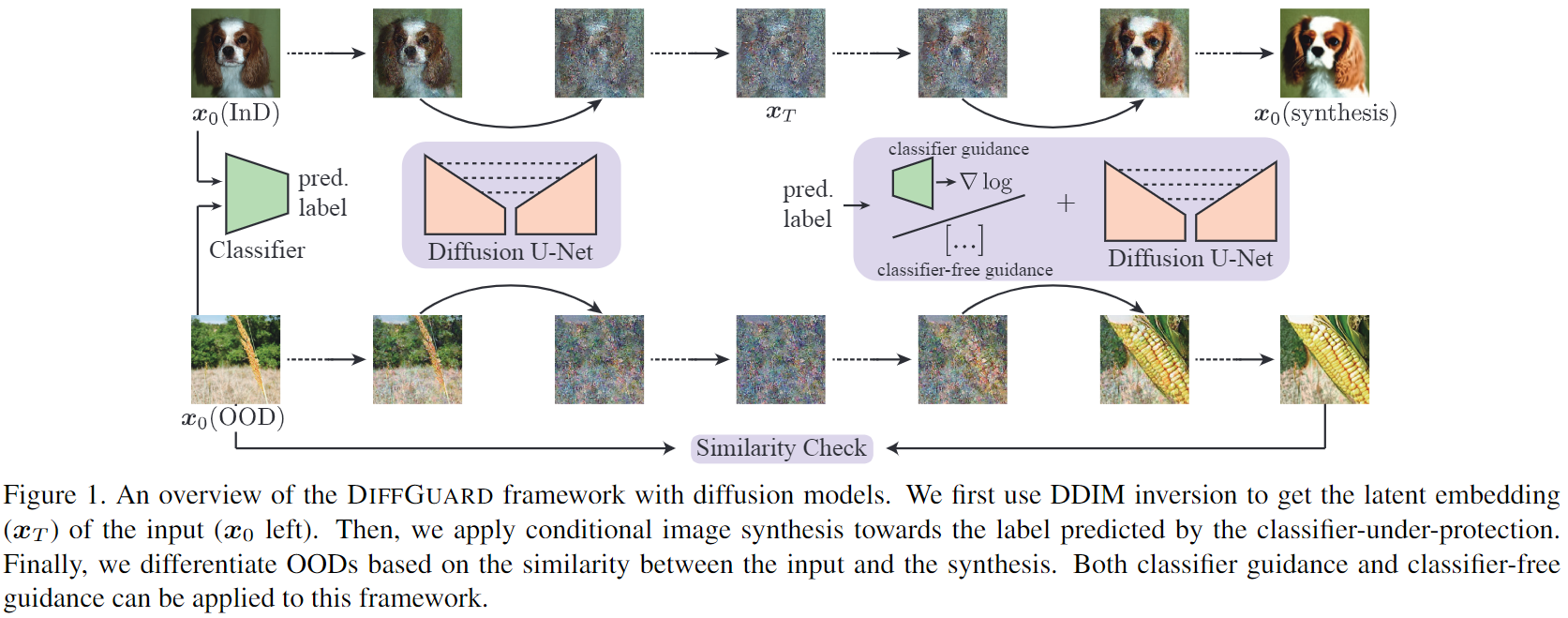

Given a classifier, the inherent property of semantic Out-of-Distribution (OOD) samples is that their contents differ semantically from all legal (in-distribution) classes. We call this property semantic mismatch. In this work, we propose DiffGuard, which directly uses pre-trained diffusion models for semantic mismatch-guided OOD detection. Specifically, given an OOD input image and the label predicted by the classifier, the diffusion model reconstructs the image under these conditions, and we try to enlarge the semantic difference between this reconstruction and the original input image. We also present several test-time techniques that further strengthen these differences.
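The following toy Python sketch makes the principle concrete. It reconstructs the input conditioned on the predicted label, then scores the input by how far the reconstruction drifts from it. This is not DiffGuard's implementation. `toy_reconstruct` is a hypothetical stand-in for the label-conditioned diffusion model. The mean absolute difference is an assumed placeholder for a semantic similarity metric. `mismatch_score` and `is_ood` are illustrative names.

```python
from typing import Callable, Sequence

Image = Sequence[float]  # flattened pixel values (toy representation)


def mismatch_score(
    image: Image,
    predicted_label: int,
    reconstruct: Callable[[Image, int], Image],
) -> float:
    """Higher score = reconstruction drifts further from the input (more OOD-like).

    `reconstruct` is a hypothetical stand-in for a diffusion model
    conditioned on the classifier's predicted label.
    """
    recon = reconstruct(image, predicted_label)
    if len(recon) != len(image):
        raise ValueError("reconstruction must match the input size")
    # Placeholder metric: mean absolute pixel difference.
    return sum(abs(a - b) for a, b in zip(image, recon)) / len(image)


def is_ood(score: float, threshold: float) -> bool:
    """Flag the input as OOD when the mismatch exceeds the threshold."""
    return score > threshold


# Toy "conditional model": pulls the image toward a per-class prototype.
PROTOTYPES = {0: [0.0, 0.0, 0.0, 0.0], 1: [1.0, 1.0, 1.0, 1.0]}


def toy_reconstruct(image: Image, label: int) -> Image:
    proto = PROTOTYPES[label]
    return [0.2 * x + 0.8 * p for x, p in zip(image, proto)]


clean = [0.9, 1.0, 1.0, 0.95]  # close to the class-1 prototype
odd = [0.0, 1.0, 0.0, 1.0]     # predicted as class 1, but unlike it
assert mismatch_score(odd, 1, toy_reconstruct) > mismatch_score(clean, 1, toy_reconstruct)
```

The input predicted as class 1 that does not resemble class 1 gets the larger score. That gap is the semantic mismatch the method tries to enlarge.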

The code is tested with PyTorch==1.10.2 and torchvision==0.11.3.
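You can compare your installed versions against the tested ones with a small helper. It uses only the standard library's `importlib.metadata`. The helper name `version_mismatches` is our own. A mismatch is not necessarily a problem, because the README only states which versions were tested.

```python
from importlib import metadata

TESTED_VERSIONS = {"torch": "1.10.2", "torchvision": "0.11.3"}


def version_mismatches(expected: dict) -> dict:
    """Map each package whose installed version differs from `expected`
    to the installed version, or to None if it is not installed."""
    mismatches = {}
    for package, wanted in expected.items():
        try:
            installed = metadata.version(package)
        except metadata.PackageNotFoundError:
            installed = None
        # Ignore local build tags such as "+cu113" when comparing.
        if installed is None or installed.split("+")[0] != wanted:
            mismatches[package] = installed
    return mismatches


if __name__ == "__main__":
    for pkg, found in version_mismatches(TESTED_VERSIONS).items():
        print(f"{pkg}: tested with {TESTED_VERSIONS[pkg]}, found {found}")
```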

Make sure PyTorch and torchvision are installed before you start. Then install the additional packages as follows:

```bash
git clone --recursive https://github.com/cure-lab/DiffGuard.git
cd ${ROOT}  # ${ROOT} is the cloned DiffGuard directory
pip install -r requirements.txt

# install external
cd ${ROOT}/external
pip install -e taming-transformers
ln -s pytorch-grad-cam/pytorch_grad_cam

# install current project (i.e., guided-diffusion package)
cd ${ROOT}
pip install -e .
```

Because we use pretrained diffusion models, all pretrained weights can be downloaded from the corresponding open-source projects:

- GDM: 256x256 diffusion (not class conditional), `256x256_diffusion_uncond.pt`
- LDM: LDM-VQ-8 on ImageNet, `cin.zip`

  NOTICE: As pointed out in #1, LDM used the OpenImages dataset for pre-training, so this weight may not be suitable for OOD benchmarks.

- ResNet50 classifier: either from torchvision (ImageNet V1) or from OpenOOD

We assume you place them under `${ROOT}/../pretrained/` as follows:

```
${ROOT}/../pretrained/
├── guided-diffusion
│   └── 256x256_diffusion_uncond.pt
├── ldm
│   └── cin256-v2
├── openood
│   └── imagenet_res50_acc76.10.pth
└── torch_cache  (we change the default cache dir of torchvision)
    └── checkpoints
```

Note that we change the torch cache directory with `torch.hub.set_dir("../pretrained/torch_cache")` in `testOOD/test_openood.py`. You can comment out this line to use the default location.
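A failed multi-GPU run is an expensive way to find out that a weight file is in the wrong place, so a quick check beforehand is worthwhile. The sketch below encodes the tree above as relative paths. The helper name `missing_pretrained` is hypothetical. Resolving `../pretrained` from the current working directory is our assumption, chosen to mirror the relative `torch.hub.set_dir` call.

```python
from pathlib import Path

# Relative paths taken from the directory tree above. Not every entry is
# needed for every run (e.g., GDM-only runs do not need the LDM weights).
EXPECTED_PRETRAINED = [
    "guided-diffusion/256x256_diffusion_uncond.pt",
    "ldm/cin256-v2",
    "openood/imagenet_res50_acc76.10.pth",
]


def missing_pretrained(root, expected=EXPECTED_PRETRAINED):
    """Return the expected entries that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    # Assumes the current directory is ${ROOT}.
    for rel in missing_pretrained(Path("..") / "pretrained"):
        print(f"missing: {rel}")
```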

This project uses the ImageNet benchmark following OpenOOD. We assume the data directory is located at `${ROOT}/../data` as follows:

```
${ROOT}/../data/
├── imagenet_1k
│   └── val
└── images_largescale
    ├── imagenet_o
    │   ├── n01443537
    │   └── ...
    ├── inaturalist
    │   └── images
    ├── openimage_o
    │   └── images
    └── species_sub
```

together with the image list files from OpenOOD:

```
${ROOT}/useful_data/
└── benchmark_imglist
    └── imagenet
        └── *.txt
```

Our default log directory is `${ROOT}/../DiffGuard-log/`, which is outside `${ROOT}`. Please make sure this location is available.
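Checking the image lists against the data directory catches path problems before a long run. This sketch makes two assumptions about the OpenOOD list format. First, each non-empty line of a list file holds a relative image path followed by an integer label, separated by whitespace. Second, the paths resolve against `${ROOT}/../data`. Verify both against your copies. The function names are hypothetical.

```python
from pathlib import Path
from typing import Iterable, List, Tuple


def parse_imglist(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """Parse assumed "<relative/path> <label>" lines into (path, label) pairs.

    The path is everything before the last whitespace, so file names
    containing spaces are tolerated. Blank lines are skipped.
    """
    entries = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        path, label = line.rsplit(maxsplit=1)
        entries.append((path, int(label)))
    return entries


def read_imglist(list_file) -> List[Tuple[str, int]]:
    """Read and parse one image list file."""
    return parse_imglist(Path(list_file).read_text().splitlines())


def missing_images(list_file, data_root) -> List[str]:
    """Return listed image paths that do not exist under `data_root`."""
    root = Path(data_root)
    return [p for p, _ in read_imglist(list_file) if not (root / p).exists()]
```

For example, `missing_images(some_list, "../data")` run from `${ROOT}` would return any listed images that are absent. `some_list` stands for one of the `*.txt` files above.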

All experiments can be started with `testOOD/start_job.py`, which parses override parameters from both `.yaml` files and the command line. The following examples reproduce the results in Table 2 of our paper (DiffGuard only):

- GDM with 8 GPUs (V100 32GB; the default config takes about 23GB):

  ```bash
  python testOOD/start_job.py --overrides_file testOOD/exp/exp6.1.yaml \
    --world_size 8 [--init_method ... --task_index 6.1]
  ```

- LDM with 8 GPUs (V100 32GB; the default config takes about 20GB):

  ```bash
  python testOOD/start_job.py --overrides_file testOOD/exp/ldm-exp2.25.yaml \
    --world_size 8 [--init_method ... --task_index 2.25]
  ```
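When you launch several experiments from a script, building the argument list in code avoids shell-quoting mistakes. This sketch uses only the flags shown in the commands above. The helper name `start_job_cmd` is hypothetical. As in those commands, you must supply the value for `--init_method` yourself.

```python
import sys
from typing import List, Optional


def start_job_cmd(
    overrides_file: str,
    world_size: int,
    init_method: Optional[str] = None,
    task_index: Optional[str] = None,
) -> List[str]:
    """Build the argv for testOOD/start_job.py (hypothetical helper).

    Optional flags are added only when a value is given, mirroring the
    bracketed parts of the example commands.
    """
    cmd = [
        sys.executable, "testOOD/start_job.py",
        "--overrides_file", overrides_file,
        "--world_size", str(world_size),
    ]
    if init_method is not None:
        cmd += ["--init_method", init_method]
    if task_index is not None:
        cmd += ["--task_index", task_index]
    return cmd
```

Run from `${ROOT}`, `subprocess.run(start_job_cmd("testOOD/exp/exp6.1.yaml", 8, task_index="6.1"), check=True)` would start the GDM experiment and raise if the job exits with an error.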

We use Hydra to manage the configs. Using `testOOD/start_job.py` is similar to using `testOOD/test_openood.py`. However, with the latter you need to pass the override parameters through the command line.
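`testOOD/test_openood.py` takes overrides only on the command line, so it can help to convert a nested mapping of settings into Hydra's `dotted.key=value` override strings. The keys in the example are hypothetical placeholders, not option names from DiffGuard's configs; check the actual `.yaml` files for real names. The sketch handles only simple scalars. Strings containing spaces or Hydra's special characters would need quoting, which it does not do.

```python
from typing import Any, Dict, List


def _format_value(value: Any) -> str:
    """Render a scalar in Hydra's override syntax."""
    if value is None:
        return "null"
    if isinstance(value, bool):  # check before str(): str(True) is "True"
        return "true" if value else "false"
    return str(value)


def to_hydra_overrides(settings: Dict[str, Any], prefix: str = "") -> List[str]:
    """Flatten a nested dict into Hydra 'dotted.key=value' overrides."""
    overrides = []
    for key, value in settings.items():
        full_key = f"{prefix}{key}"
        if isinstance(value, dict):
            overrides += to_hydra_overrides(value, prefix=f"{full_key}.")
        else:
            overrides.append(f"{full_key}={_format_value(value)}")
    return overrides


# Hypothetical keys, for illustration only.
print(to_hydra_overrides({"model": {"steps": 50, "use_cam": True}, "note": None}))
```

You would append the resulting strings after `python testOOD/test_openood.py` on the command line.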

We also provide configs to reproduce the results with an oracle classifier; see `testOOD/exp/oracle/*.yaml`.

- `testOOD/show_openood.py`: more visualizations
- `scripts_cls/*`: analysis of the protected classifier, as in Fig. 2
- `testOOD/exp/oracle/*`: configs assuming an oracle classifier
- `testOOD/exp/speed/*`: inference speed tests
- `testOOD/exp/ablation/*`: ablation study on GDM

```bibtex
@inproceedings{gao2023diffguard,
  title={{DiffGuard}: Semantic Mismatch-Guided Out-of-Distribution Detection using Pre-trained Diffusion Models},
  author={Gao, Ruiyuan and Zhao, Chenchen and Hong, Lanqing and Xu, Qiang},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  year={2023}
}
```

We thank the following open-source projects:

- Tools used in our code: pytorch-grad-cam and PyTorch Image Quality (PIQ)
- Diffusion models: latent-diffusion (with taming-transformers) and guided-diffusion
- CIFAR-10 and ImageNet benchmarks for OOD detection: OpenOOD