Training-free Style Transfer Emerges from h-space in Diffusion models

Jaeseok Jeong*, Mingi Kwon*, Youngjung Uh (* denotes equal contribution)

arXiv preprint. Abstract:

Diffusion models (DMs) synthesize high-quality images in various domains. However, controlling their generative process is still hazy because the intermediate variables in the process are not rigorously studied. Recently, StyleCLIP-like editing of DMs is found in the bottleneck of the U-Net, named $h$-space. In this paper, we discover that DMs inherently have disentangled representations for content and style of the resulting images: $h$-space contains the content and the skip connections convey the style. Furthermore, we introduce a principled way to inject content of one image to another considering progressive nature of the generative process. Briefly, given the original generative process, 1) the feature of the source content should be gradually blended, 2) the blended feature should be normalized to preserve the distribution, 3) the change of skip connections due to content injection should be calibrated. Then, the resulting image has the source content with the style of the original image just like image-to-image translation. Interestingly, injecting contents to styles of unseen domains produces harmonization-like style transfer. To the best of our knowledge, our method introduces the first training-free feed-forward style transfer only with an unconditional pretrained frozen generative network.

Description

This repo includes the official PyTorch implementation of DiffStyle: Training-free Style Transfer Emerges from h-space in Diffusion models.

- DiffStyle offers training-free style mixing and harmonization-like style transfer capabilities through content injection on h-space of diffusion models.

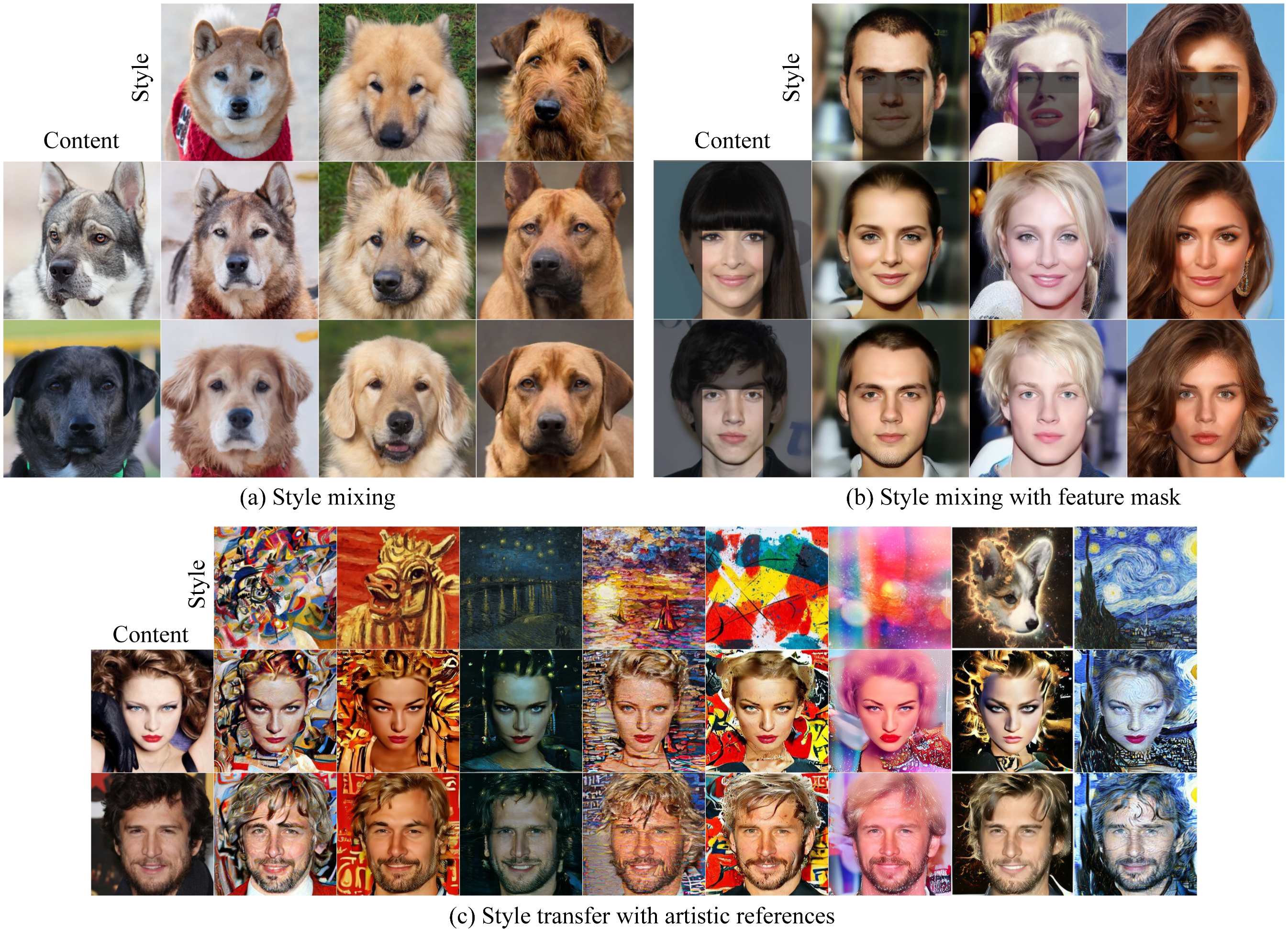

- DiffStyle allows (a) style mixing by content injection within the trained domain, (b) local style mixing by injecting masked content features, and (c) harmonization-like style transfer with out-of-domain style references. All results are produced by frozen pretrained diffusion models. Furthermore, the flexibility of DiffStyle enables content injection into any style.
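The abstract describes blending content features into h-space and normalizing the result, and the inference scripts label `h_gamma` as a Slerp ratio. The following is a minimal sketch of that idea on flat Python lists rather than PyTorch tensors. The function name `slerp_keep_norm` and the choice to rescale the result to the norm of the original feature are illustrative assumptions, not the repository's implementation.

```python
import math


def slerp_keep_norm(h_original, h_content, gamma):
    """Spherically interpolate from h_original toward h_content.

    gamma=0 returns h_original; gamma=1 returns the direction of h_content
    scaled to the norm of h_original. Both inputs are assumed to be
    non-zero vectors of equal length (hypothetical stand-ins for flattened
    h-space features).
    """
    norm_o = math.sqrt(sum(x * x for x in h_original))
    norm_c = math.sqrt(sum(x * x for x in h_content))
    unit_o = [x / norm_o for x in h_original]
    unit_c = [x / norm_c for x in h_content]

    # Angle between the two unit vectors, clamped for numerical safety.
    cos_theta = sum(a * b for a, b in zip(unit_o, unit_c))
    theta = math.acos(max(-1.0, min(1.0, cos_theta)))
    sin_theta = math.sin(theta)

    if sin_theta < 1e-6:
        # Nearly parallel (or antiparallel) vectors: fall back to a plain
        # linear blend of the unit vectors to avoid dividing by ~0.
        direction = [(1 - gamma) * a + gamma * b for a, b in zip(unit_o, unit_c)]
    else:
        w_o = math.sin((1 - gamma) * theta) / sin_theta
        w_c = math.sin(gamma * theta) / sin_theta
        direction = [w_o * a + w_c * b for a, b in zip(unit_o, unit_c)]

    # Assumption: keep the magnitude of the original feature so the blended
    # feature stays on the same scale.
    return [norm_o * d for d in direction]
```

With `gamma` around 0.3, as in the example scripts, the result stays closer to the original feature than to the content feature while keeping the original norm.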

Getting Started

We recommend running our code on an NVIDIA GPU with CUDA and cuDNN.

Pretrained Models for DiffStyle

To manipulate source images, the pretrained diffusion models are required.

| Image Type to Edit | Size | Pretrained Model | Dataset | Reference Repo. |
|---|---|---|---|---|
| Human face | 256×256 | Diffusion (Auto) | CelebA-HQ | SDEdit |
| Human face | 256×256 | Diffusion | FFHQ | P2 weighting |
| Church | 256×256 | Diffusion (Auto) | LSUN-Church | SDEdit |
| Bedroom | 256×256 | Diffusion (Auto) | LSUN-Bedroom | SDEdit |
| Dog face | 256×256 | Diffusion | AFHQ-Dog | ILVR |
| Painting face | 256×256 | Diffusion | METFACES | P2 weighting |
| ImageNet | 256×256 | Diffusion | ImageNet | Guided Diffusion |

- The pretrained diffusion models for 256×256 images in CelebA-HQ, LSUN-Church, and LSUN-Bedroom are downloaded automatically by the code (this download code comes from DiffusionCLIP).

- In contrast, you need to download the models pretrained on the other datasets in the table and put them in the ./pretrained directory.

- You can manually revise the checkpoint paths and names in the ./configs/paths_config.py file.

Datasets

To precompute latents and find the direction in h-space, you need at least about 100 images in the dataset. You can use either images sampled from the pretrained models or real images from the pretraining dataset.
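As a quick sanity check before running anything, the image count of a dataset folder can be verified with a few lines of Python. This is only a sketch: the helper names, the set of accepted file extensions, and the default threshold of 100 are assumptions for illustration and are not part of the repository.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your dataset.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg"}


def count_images(directory):
    """Count image files under `directory`, including subfolders."""
    root = Path(directory)
    return sum(
        1
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )


def has_enough_images(directory, minimum=100):
    """Return True if the folder holds at least `minimum` images."""
    return count_images(directory) >= minimum
```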

If you want to use real images, check the URLs :

To set the dataset path, modify ./configs/paths_config.py.

DiffStyle

We provide example inference scripts for DiffStyle in script_diffstyle.sh.

- Set content_dir, style_dir, and save_dir.

# AFHQ
sh_file_name="script_diffstyle.sh" # assumed: name of this script; not defined in the original snippet
config="afhq.yml"
save_dir="./results/afhq" # output directory
content_dir="./test_images/afhq/contents"
style_dir="./test_images/afhq/styles"
h_gamma=0.3 # Slerp ratio
t_boost=200 # 0 for out-of-domain style transfer.
n_gen_step=1000
n_inv_step=50
omega=0.0

python main.py --diff_style \
    --content_dir "$content_dir" \
    --style_dir "$style_dir" \
    --save_dir "$save_dir" \
    --config "$config" \
    --n_gen_step "$n_gen_step" \
    --n_inv_step "$n_inv_step" \
    --n_test_step 1000 \
    --hs_coeff "$h_gamma" \
    --t_noise "$t_boost" \
    --sh_file_name "$sh_file_name" \
    --omega "$omega"

# CelebA_HQ style mixing with feature mask
sh_file_name="script_diffstyle.sh" # assumed: name of this script; not defined in the original snippet
config="celeba.yml"
save_dir="./results/masked_style_mixing" # output directory
content_dir="./test_images/celeba/contents"
style_dir="./test_images/celeba/styles"
h_gamma=0.3 # Slerp ratio
dt_lambda=0.9985 # 1.0 for out-of-domain style transfer.
t_boost=200 # 0 for out-of-domain style transfer.
n_gen_step=1000
n_inv_step=50
omega=0.0

python main.py --diff_style \
    --content_dir "$content_dir" \
    --style_dir "$style_dir" \
    --save_dir "$save_dir" \
    --config "$config" \
    --n_gen_step "$n_gen_step" \
    --n_inv_step "$n_inv_step" \
    --n_test_step 1000 \
    --dt_lambda "$dt_lambda" \
    --hs_coeff "$h_gamma" \
    --t_noise "$t_boost" \
    --sh_file_name "$sh_file_name" \
    --omega "$omega" \
    --use_mask
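The masked example above corresponds to local style mixing, where content features are injected only inside a mask. The sketch below shows one way such a masked blend could look on flattened feature values. It is an assumption-heavy illustration: `masked_inject` is a hypothetical name, a plain linear blend stands in for the Slerp used by the method, and how `--use_mask` obtains and applies its mask in the repository is not described in this README.

```python
def masked_inject(h_style, h_content, mask, gamma):
    """Blend content into style features only where the mask is 1.

    h_style, h_content: flat lists of feature values (hypothetical
    stand-ins for h-space features at matching spatial positions).
    mask: list of 0/1 values, one per position.
    gamma: blend ratio; a linear blend is used here in place of Slerp.
    """
    mixed = []
    for hs, hc, m in zip(h_style, h_content, mask):
        blended = (1 - gamma) * hs + gamma * hc
        # Inside the mask use the blended value, outside keep the style value.
        mixed.append(m * blended + (1 - m) * hs)
    return mixed
```

Positions outside the mask are returned unchanged, so only the masked region receives content from the content image.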

# Harmonization-like style mixing with artistic references
sh_file_name="script_diffstyle.sh" # assumed: name of this script; not defined in the original snippet
gpu="0" # assumed GPU index; not defined in the original snippet
config="celeba.yml"
save_dir="./results/style_literature" # output directory
content_dir="./test_images/celeba/contents2"
style_dir="./test_images/style_literature"
h_gamma=0.4
n_gen_step=1000
n_inv_step=1000

CUDA_VISIBLE_DEVICES=$gpu python main.py --diff_style \
    --content_dir "$content_dir" \
    --style_dir "$style_dir" \
    --save_dir "$save_dir" \
    --config "$config" \
    --n_gen_step "$n_gen_step" \
    --n_inv_step "$n_inv_step" \
    --n_test_step 1000 \
    --hs_coeff "$h_gamma" \
    --sh_file_name "$sh_file_name"
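When running many content/style combinations, it can be convenient to assemble the same command line from Python. The helper below is a sketch that uses only the flags appearing in the example scripts above. The function name `build_diffstyle_command`, its defaults, and the choice of which flags are optional are assumptions; it has not been checked against the argument parser in main.py.

```python
def build_diffstyle_command(config, content_dir, style_dir, save_dir, h_gamma,
                            n_gen_step=1000, n_inv_step=50, t_boost=None,
                            dt_lambda=None, omega=None, use_mask=False,
                            sh_file_name=None):
    """Return the argument list for one DiffStyle run.

    Optional values left as None are simply omitted from the command.
    """
    cmd = [
        "python", "main.py", "--diff_style",
        "--content_dir", content_dir,
        "--style_dir", style_dir,
        "--save_dir", save_dir,
        "--config", config,
        "--n_gen_step", str(n_gen_step),
        "--n_inv_step", str(n_inv_step),
        "--n_test_step", "1000",
        "--hs_coeff", str(h_gamma),
    ]
    optional = [
        ("--t_noise", t_boost),
        ("--dt_lambda", dt_lambda),
        ("--omega", omega),
        ("--sh_file_name", sh_file_name),
    ]
    for flag, value in optional:
        if value is not None:  # 0 and 0.0 are valid values, so test for None
            cmd += [flag, str(value)]
    if use_mask:
        cmd.append("--use_mask")
    return cmd
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root.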

Acknowledgements

The code is based on Asyrp and DiffusionCLIP.