Ruoshi Liu*1, Alper Canberk*1, Shuran Song1,2, Carl Vondrick1

1Columbia University, 2Stanford University, * Equal Contribution

Project Page | Video | arXiv

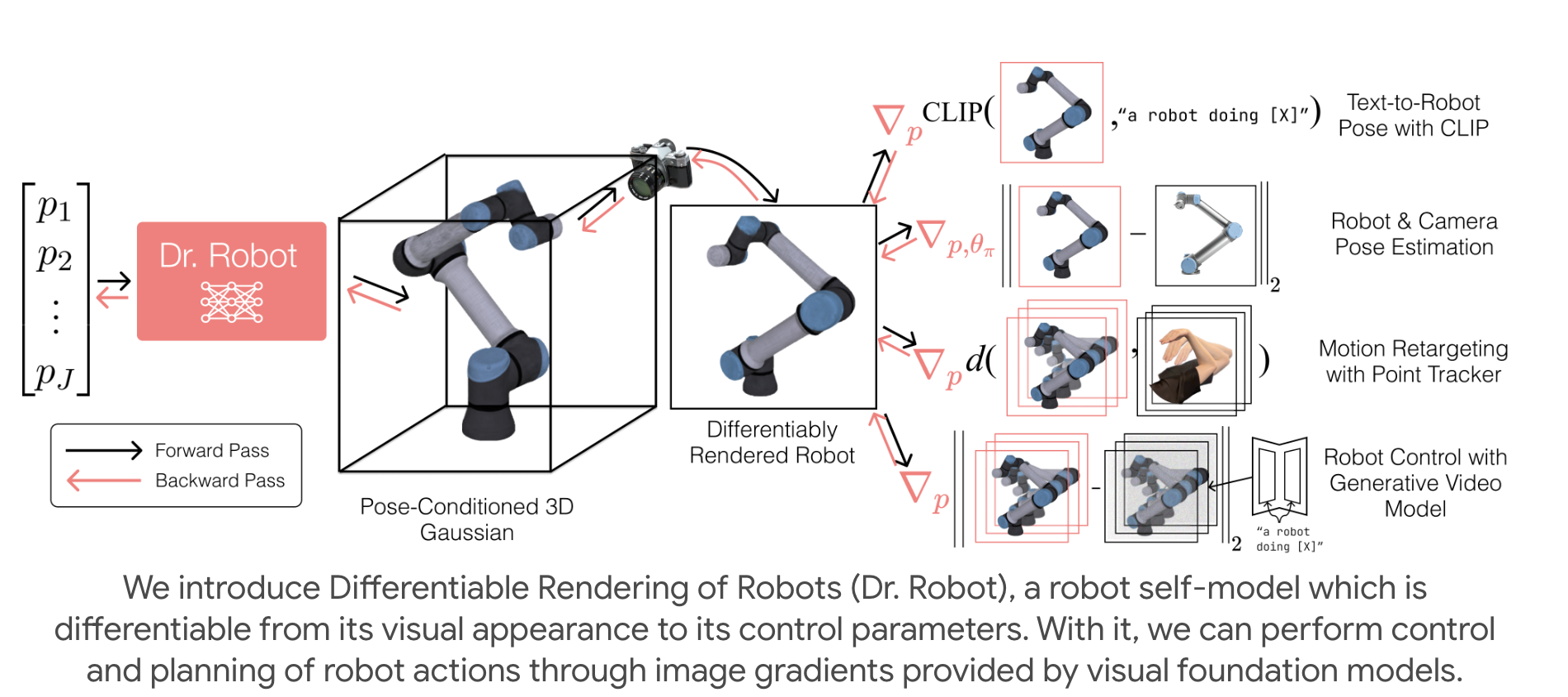

This is the official repository for Differentiable Robot Rendering. It includes the code for training robot models and optimizing them at inference time with respect to image gradients.

Our setup has been tested with miniforge and CUDA 12.1. To install all of our dependencies, run:

mamba create -n dr python=3.10 -y

mamba activate dr

mamba install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch -c nvidia

pip install gsplat

pip install tensorboard ray tqdm mujoco open3d plyfile pytorch-kinematics random-fourier-features-pytorch pytz gradio

The trickiest dependency of our codebase is gsplat, which is used for rasterizing Gaussians. If the plain pip install doesn't work, we recommend following their installation instructions.
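
If the installation appears to succeed but a later script fails on import, a quick check like the one below can narrow down which package is missing. This is a minimal sketch and not part of the repository. The import names are assumptions about how each package exposes itself, in particular `rff` for random-fourier-features-pytorch and `pytorch_kinematics` for pytorch-kinematics.

```python
import importlib.util

# Import names assumed for the packages installed above (not taken from the repo).
REQUIRED_MODULES = [
    "torch", "torchvision", "gsplat", "tensorboard", "ray", "tqdm", "mujoco",
    "open3d", "plyfile", "pytorch_kinematics", "rff", "pytz", "gradio",
]


def find_missing(modules):
    """Return the module names that cannot be located in the current environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All listed modules were found.")
```

Note that `find_spec` only confirms that a module can be located. It does not import it, so a gsplat build that fails at CUDA compile time may still only surface when the renderer is first used.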

To launch the real-time reconstruction demo, you can use the pre-trained UR5 robot model included in this repo by running:

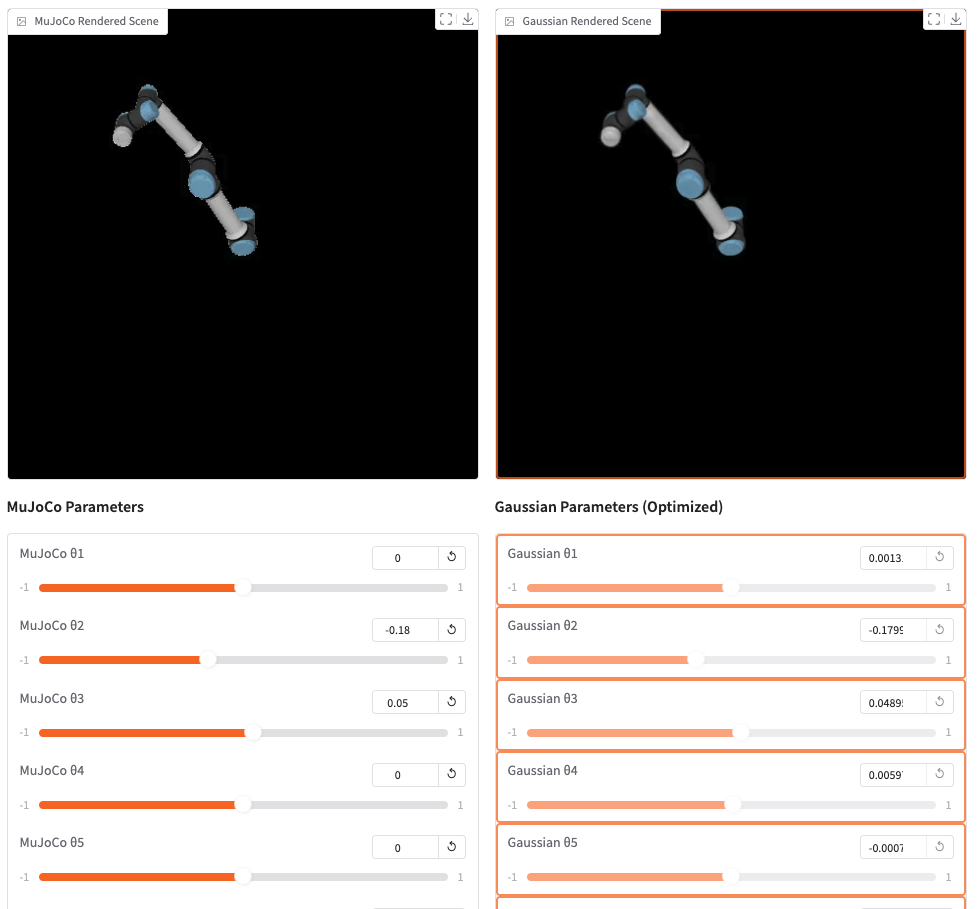

python gradio_app_realtime.py --model_path assets/ur

In this demo, you can adjust the MuJoCo robot parameters with the sliders, and the 3D Gaussian robot is optimized in real time to reconstruct the ground-truth robot pose.
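
The idea behind this kind of optimization can be illustrated with a toy example. The sketch below is not the repository's method: it uses plain Python with a hand-derived gradient rather than PyTorch and gsplat, and the "robot" is a hypothetical one-joint planar arm whose tip is drawn as a single 2D Gaussian blob. It only shows how a joint angle can be recovered by gradient descent on a pixel-wise image loss.

```python
import math

N, EXTENT, SIGMA = 25, 2.5, 0.5  # toy image size, half-width of the view, blob width


def pixel_coords():
    """Yield (x, y) pixel centres of an N x N grid covering [-EXTENT, EXTENT]^2."""
    step = 2 * EXTENT / (N - 1)
    for row in range(N):
        for col in range(N):
            yield -EXTENT + col * step, -EXTENT + row * step


def render(theta):
    """Render a Gaussian blob at the tip of a unit-length arm with joint angle theta."""
    cx, cy = math.cos(theta), math.sin(theta)
    return [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * SIGMA ** 2))
            for x, y in pixel_coords()]


def loss_and_grad(theta, target):
    """Sum-of-squares image loss and its analytic derivative with respect to theta."""
    cx, cy = math.cos(theta), math.sin(theta)
    dcx, dcy = -math.sin(theta), math.cos(theta)  # derivative of the tip position
    loss, grad = 0.0, 0.0
    for (x, y), target_pixel in zip(pixel_coords(), target):
        dx, dy = x - cx, y - cy
        pixel = math.exp(-(dx ** 2 + dy ** 2) / (2 * SIGMA ** 2))
        residual = pixel - target_pixel
        dpixel = pixel * (dx * dcx + dy * dcy) / SIGMA ** 2  # chain rule through the blob
        loss += residual ** 2
        grad += 2 * residual * dpixel
    return loss, grad


def fit_angle(target, theta_init, lr=0.01, steps=100):
    """Recover the joint angle by plain gradient descent on the image loss."""
    theta = theta_init
    for _ in range(steps):
        _, grad = loss_and_grad(theta, target)
        theta -= lr * grad
    return theta


if __name__ == "__main__":
    target_image = render(1.0)  # stands in for the ground-truth render
    print(fit_angle(target_image, theta_init=0.3))
```

The wide blob matters here: the initial and target blobs must overlap for the gradient to carry useful signal, which is why the toy starts from an angle reasonably close to the target.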

The training is divided into two phases:

- Data generation, which takes in a URDF file and generates randomly sampled combinations of images + camera poses + robot joint angles.

- Differentiable robot training, which trains a canonical 3D Gaussian and a deformation field on the generated data. This phase is subdivided into three stages:

  - Canonical 3D Gaussian training

  - Deformation field training

  - Joint training

Our code generates data from a URDF specified as an XML file. We provide several standard robot URDFs from MuJoCo Menagerie to train on, which you can find under mujoco_menagerie/. As an example, we will use the UR5e robot arm. To generate data for this robot, run:

python generate_robot_data.py --model_xml_dir mujoco_menagerie/universal_robots_ur5e

This script launches many MuJoCo workers to generate your data as fast as possible.

The directory containing the generated data should appear under the data/ directory:

    data/
    └── universal_robots_ur5e/
        ├── canonical_sample_0/
        │   ├── image_0.jpg
        │   ├── image_1.jpg
        │   ...
        │   ├── intrinsics.npy
        │   ├── extrinsics.npy
        │   ├── joint_positions.npy
        │   └── pc.ply
        ├── canonical_sample_1/
        ├── ...
        ├── sample_0/
        ├── sample_1/
        ├── ...
        ├── test_sample_0/
        ├── test_sample_1/
        ├── ...

P.S. This script is mostly standalone and quite hackable, so you can customize it to your needs.
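
Before starting a long training run, it can help to confirm that data generation produced the expected layout. The following is a minimal sketch based only on the directory listing above. The helper names `count_samples` and `missing_files` are hypothetical, and it assumes that every sample directory contains the same files shown for canonical_sample_0/, which the listing does not confirm.

```python
from pathlib import Path

# Directory prefixes taken from the layout shown above. Each directory name
# starts with exactly one of these, so the match order does not affect counts.
SPLIT_PREFIXES = ("canonical_sample_", "test_sample_", "sample_")

# Files listed inside canonical_sample_0/; assumed (not confirmed) for other samples.
EXPECTED_FILES = ("intrinsics.npy", "extrinsics.npy", "joint_positions.npy", "pc.ply")


def count_samples(dataset_dir):
    """Count sample directories per split prefix under dataset_dir."""
    counts = {prefix.rstrip("_"): 0 for prefix in SPLIT_PREFIXES}
    for child in Path(dataset_dir).iterdir():
        if not child.is_dir():
            continue
        for prefix in SPLIT_PREFIXES:
            if child.name.startswith(prefix):
                counts[prefix.rstrip("_")] += 1
                break
    return counts


def missing_files(sample_dir):
    """List expected files that are absent from one sample directory."""
    sample_dir = Path(sample_dir)
    return [name for name in EXPECTED_FILES if not (sample_dir / name).is_file()]


if __name__ == "__main__":
    root = Path("data/universal_robots_ur5e")
    if root.is_dir():
        print(count_samples(root))
    else:
        print(f"{root} not found; run the data generation script first")
```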

To train a differentiable robot model, run:

python train.py --dataset_path data/universal_robots_ur5e --experiment_name universal_robots_ur5e_experiment

This script will automatically run through all three stages of training. The latest robot model will be saved under output/universal_robots_ur5e/.

To visualize the model training process, you may run tensorboard --logdir output/

- Fix the bug that disrupts the training of some robots midway through. Some parameters were changed during the code clean-up, so please bear with us while we address this.

- Our codebase is heavily built on top of 3D Gaussians and 4D Gaussians

- Our renderer uses gsplat

- Many of the robot models we use come directly from MuJoCo Menagerie. We thank them for providing a diverse and clean repository of robot models.

- PyTorch Kinematics, which allows us to differentiate the forward kinematics of the robot out of the box