Seokju Cho<sup>1</sup> · Jiahui Huang<sup>2</sup> · Jisu Nam<sup>1</sup> · Honggyu An<sup>1</sup> · Seungryong Kim<sup>1</sup> · Joon-Young Lee<sup>2</sup>

<sup>1</sup>Korea University · <sup>2</sup>Adobe Research

ECCV 2024

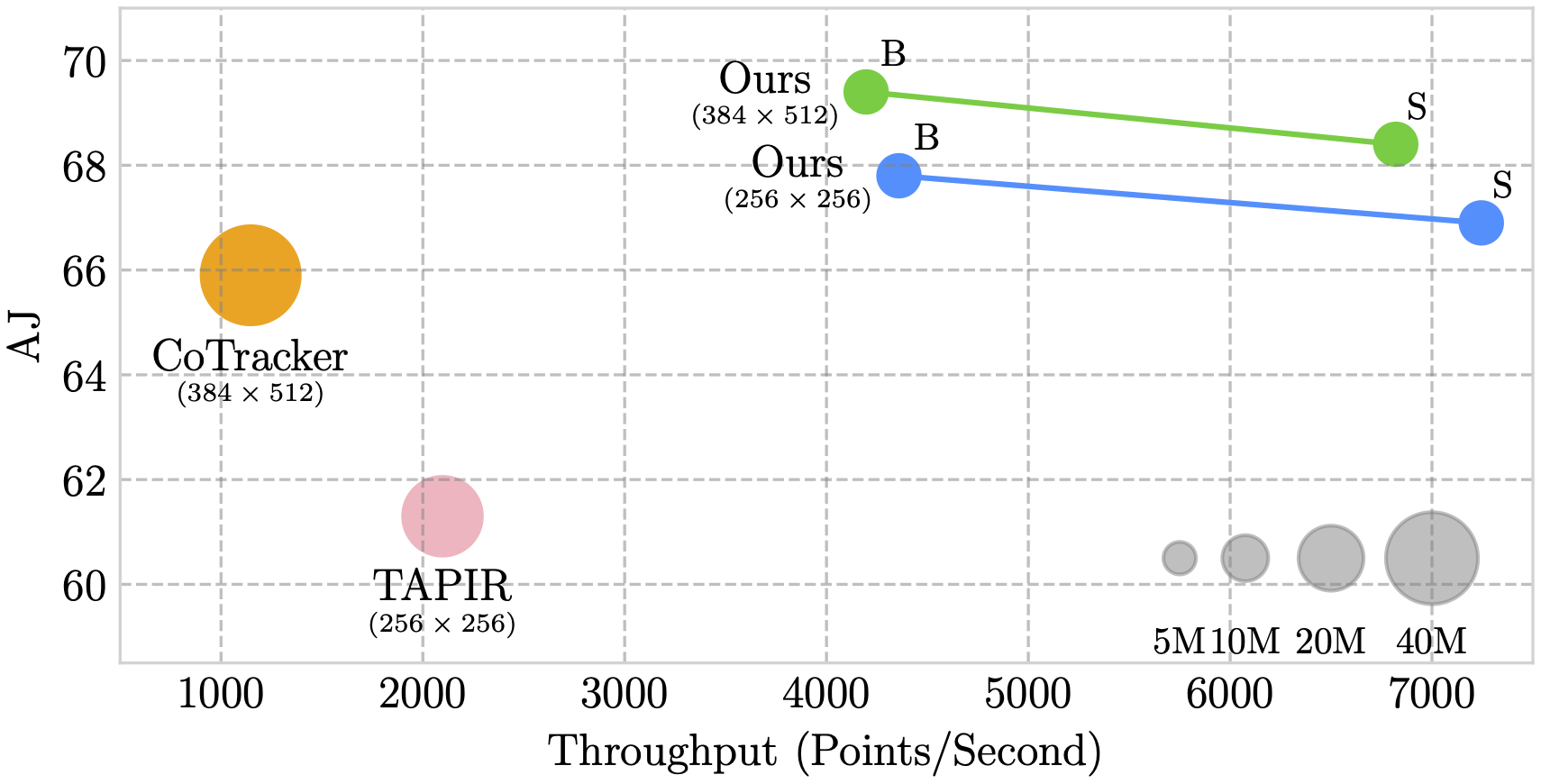

LocoTrack is a highly efficient model that enables near-dense point tracking in real time. It runs 6x faster than previous state-of-the-art models.

- 2024-07-22: LocoTrack is released.

- 2024-08-03: PyTorch inference and training code released.

- 2024-08-05: Interactive demo released.

Please stay tuned for an easy-to-use API for LocoTrack, coming soon!

Try our interactive demo on Hugging Face. To run the demo locally, follow these steps:

- Install Dependencies: Ensure you have all the necessary packages by running:

  ```bash
  pip install -r demo/requirements.txt
  ```

- Run the Demo: Launch the interactive Gradio demo with:

  ```bash
  python demo/demo.py
  ```
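If you prefer to keep the demo's dependencies isolated from your main environment, the two steps above can be wrapped in a virtual environment. The bash sketch below is a hypothetical helper, not part of the LocoTrack repository: the function name `setup_and_run_demo` and the `.venv-demo` directory are illustrative choices, and it assumes `python3` ships the standard `venv` module.

```bash
# Hypothetical helper, not part of the LocoTrack repository. Source this file
# in bash, then call: setup_and_run_demo [repo_root] [venv_dir]
# The body is a subshell "( ... )", so the `cd` and the venv activation below
# do not leak into the calling shell; `exit` only leaves that subshell.
setup_and_run_demo() (
  repo_root="${1:-.}"          # LocoTrack checkout (default: current directory)
  venv_dir="${2:-.venv-demo}"  # hypothetical venv location, relative to repo_root

  cd "$repo_root" || exit 1

  # Fail early if this does not look like the repository root.
  if [[ ! -f demo/requirements.txt || ! -f demo/demo.py ]]; then
    echo "error: demo/requirements.txt or demo/demo.py not found in $PWD" >&2
    exit 1
  fi

  # Create the virtual environment on the first run and reuse it afterwards.
  if [[ ! -d "$venv_dir" ]]; then
    python3 -m venv "$venv_dir" || exit 1
  fi
  # shellcheck disable=SC1091
  source "$venv_dir/bin/activate" || exit 1

  # Step 1: install the demo dependencies into the venv.
  pip install -r demo/requirements.txt || exit 1
  # Step 2: launch the Gradio demo with the venv's interpreter.
  python demo/demo.py
)
```

Call `setup_and_run_demo` from the repository root, or pass the checkout path as the first argument. Running the body in a subshell keeps the activated environment scoped to the demo run.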

For detailed instructions on training and evaluation, please refer to the README file for your chosen implementation:

First, download the evaluation datasets:

```bash
# TAP-Vid-DAVIS dataset
wget https://storage.googleapis.com/dm-tapnet/tapvid_davis.zip
unzip tapvid_davis.zip

# TAP-Vid-RGB-Stacking dataset
wget https://storage.googleapis.com/dm-tapnet/tapvid_rgb_stacking.zip
unzip tapvid_rgb_stacking.zip

# RoboTAP dataset
wget https://storage.googleapis.com/dm-tapnet/robotap/robotap.zip
unzip robotap.zip
```

For downloading TAP-Vid-Kinetics, please refer to the official TAP-Vid repository.
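The three evaluation downloads can also be scripted. The bash sketch below is a set of hypothetical helpers, not part of the repository: `dataset_url` maps assumed short names (`davis`, `rgb_stacking`, `robotap`) to the archive URLs listed for the evaluation datasets, `download_eval_dataset` fetches and extracts one archive, and `has_free_space` is an extra guard that may be useful before the much larger panning-MOVi-E training download (approximately 273 GB). It assumes `wget`, `unzip`, and a POSIX `df` are available.

```bash
# Hypothetical helpers, not part of the LocoTrack repository. Source this file
# in bash; nothing is downloaded until download_eval_dataset is called.

# Print the archive URL for a short dataset name; return 1 for unknown names.
dataset_url() {
  local base="https://storage.googleapis.com/dm-tapnet"
  case "$1" in
    davis)        echo "$base/tapvid_davis.zip" ;;
    rgb_stacking) echo "$base/tapvid_rgb_stacking.zip" ;;
    robotap)      echo "$base/robotap/robotap.zip" ;;
    *)            echo "unknown dataset: $1" >&2; return 1 ;;
  esac
}

# Return 0 if the filesystem holding DIR has at least REQUIRED_GB GiB free.
# `df -P -k` prints one data line whose 4th column is the available space in
# KiB. GiB is slightly conservative for sizes quoted in GB.
has_free_space() {
  local dir="$1" required_gb="$2" avail_kb
  avail_kb="$(df -P -k "$dir" | awk 'NR == 2 { print $4 }')" || return 1
  [[ -n "$avail_kb" ]] || return 1
  (( avail_kb >= required_gb * 1024 * 1024 ))
}

# Download and extract one dataset into the current directory.
# `wget -nc` and `unzip -n` skip files that already exist, so re-runs are cheap.
download_eval_dataset() {
  local url archive
  url="$(dataset_url "$1")" || return 1
  archive="${url##*/}"   # e.g. tapvid_davis.zip
  wget -nc "$url" || return 1
  unzip -n "$archive"
}
```

For example, `for d in davis rgb_stacking robotap; do download_eval_dataset "$d"; done` fetches all three evaluation sets into the current directory, and `has_free_space . 273` returns a non-zero status when less than 273 GiB is free.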

Download the panning-MOVi-E dataset used for training (approximately 273 GB) from Hugging Face with the following command. Git LFS must be installed to download the dataset; to install Git LFS, please refer to this link. Additional instructions for downloading Hugging Face datasets are available at this link.

```bash
git clone git@hf.co:datasets/hamacojr/LocoTrack-panning-MOVi-E
```

Please use the following BibTeX to cite our work:

```bibtex
@article{cho2024local,
  title={Local All-Pair Correspondence for Point Tracking},
  author={Cho, Seokju and Huang, Jiahui and Nam, Jisu and An, Honggyu and Kim, Seungryong and Lee, Joon-Young},
  journal={arXiv preprint arXiv:2407.15420},
  year={2024}
}
```

This project is largely based on the TAP repository. Thanks to the authors for their invaluable work and contributions.