This is the official PyTorch implementation of "Affine Medical Image Registration with Coarse-to-Fine Vision Transformer" (CVPR 2022), written by Tony C. W. Mok and Albert C. S. Chung.

- Python 3.5.2+

- PyTorch 1.3.0 - 1.7.1

- NumPy

- NiBabel

This code was tested with PyTorch 1.7.1 on an NVIDIA TITAN RTX GPU.

- Train_C2FViT_pairwise.py: Train a C2FViT model in an unsupervised manner for pairwise registration (inter-subject registration).

- Train_C2FViT_pairwise_semi.py: Train a C2FViT model in a semi-supervised manner for pairwise registration (inter-subject registration).

- Train_C2FViT_template_matching.py: Train a C2FViT model in an unsupervised manner for brain template-matching (MNI152 space).

- Train_C2FViT_template_matching_semi.py: Train a C2FViT model in a semi-supervised manner for brain template-matching (MNI152 space).

- Test_C2FViT_template_matching.py: Register an image pair with a pretrained C2FViT model (template-matching).

- Test_C2FViT_pairwise.py: Register an image pair with a pretrained C2FViT model (pairwise image registration).

Template-matching (MNI152):

python Test_C2FViT_template_matching.py --modelpath {model_path} --fixed ../Data/MNI152_T1_1mm_brain_pad_RSP.nii.gz --moving {moving_img_path}

Pairwise image registration:

python Test_C2FViT_pairwise.py --modelpath {model_path} --fixed {fixed_img_path} --moving {moving_img_path}
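When several moving images have to be registered to the same fixed image, the pairwise command above can be generated in a loop. The following is a minimal sketch, assuming it is run from the directory that contains the test script. The helper names `build_pairwise_command` and `build_batch` and the example file names are hypothetical; only the script name and the `--modelpath`, `--fixed`, and `--moving` flags come from the commands above.

```python
import shlex
import sys


def build_pairwise_command(model_path, fixed_path, moving_path,
                           script="Test_C2FViT_pairwise.py"):
    """Return the argument list for one pairwise registration run.

    Hypothetical helper: it only assembles the command shown in this README.
    """
    return [
        sys.executable, script,
        "--modelpath", str(model_path),
        "--fixed", str(fixed_path),
        "--moving", str(moving_path),
    ]


def build_batch(model_path, fixed_path, moving_paths):
    """Build one command per moving image, all sharing the same fixed image."""
    return [build_pairwise_command(model_path, fixed_path, m)
            for m in moving_paths]


if __name__ == "__main__":
    # Placeholder paths (assumptions); replace them with your own files.
    commands = build_batch(
        "model.pth",
        "fixed.nii.gz",
        ["moving_01.nii.gz", "moving_02.nii.gz"],
    )
    for cmd in commands:
        # Print each command in a copy-pasteable, shell-quoted form.
        print(" ".join(shlex.quote(part) for part in cmd))
        # To actually run it: subprocess.run(cmd, check=True)
```

Printing the commands first makes it easy to verify the paths before launching any registration; the `subprocess.run` call is left commented out for that reason.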

Pre-trained model weights can be downloaded with the links below:

Unsupervised:

- C2FViT_affine_COM_pairwise_stagelvl3_118000.pth

- C2FViT_affine_COM_template_matching_stagelvl3_116000.pth

Semi-supervised:

- C2FViT_affine_COM_pairwise_semi_stagelvl3_95000.pth

- C2FViT_affine_COM_template_matching_semi_stagelvl3_130000.pth

Step 0 (optional): Download the preprocessed OASIS dataset from https://github.com/adalca/medical-datasets/blob/master/neurite-oasis.md and place it under the Data folder.

Step 1: Replace /PATH/TO/YOUR/DATA with the path of your training data, e.g., ../Data/OASIS, and make sure imgs and labels are properly loaded in the training script.

Step 2: Run python {training_script}; see "Training and testing scripts" for more details.
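The check in Step 1 (that images and labels are properly loaded) can be sketched as a small standalone script run before training. This is an illustrative sketch, not the loading code used by the training scripts: the function name `collect_pairs` is hypothetical, and the default file patterns `*/norm.nii.gz` and `*/seg35.nii.gz` are assumptions about how the preprocessed data is laid out (one folder per subject), so adjust them to match your own data.

```python
import glob
import os


def collect_pairs(datapath,
                  img_pattern="*/norm.nii.gz",      # assumed image file name
                  label_pattern="*/seg35.nii.gz"):  # assumed label file name
    """Return sorted (image, label) path pairs found under datapath.

    Raises an error if no images are found, if the image and label counts
    differ, or if a paired image and label come from different folders.
    """
    imgs = sorted(glob.glob(os.path.join(datapath, img_pattern)))
    labels = sorted(glob.glob(os.path.join(datapath, label_pattern)))

    if not imgs:
        raise FileNotFoundError(
            "No images matching %r under %r" % (img_pattern, datapath))
    if len(imgs) != len(labels):
        raise ValueError(
            "Found %d images but %d labels" % (len(imgs), len(labels)))

    pairs = list(zip(imgs, labels))
    for img, label in pairs:
        # Each image and its label are expected to share a subject folder.
        if os.path.dirname(img) != os.path.dirname(label):
            raise ValueError("Mismatched pair: %r vs %r" % (img, label))
    return pairs


if __name__ == "__main__":
    try:
        pairs = collect_pairs("../Data/OASIS")
    except (FileNotFoundError, ValueError) as err:
        print("Data check failed:", err)
    else:
        print("Found %d image/label pairs" % len(pairs))
```

If the script reports zero images or a count mismatch, the data path or the file patterns most likely do not match the folder layout.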

If you find this repository useful, please cite:

- Affine Medical Image Registration with Coarse-to-Fine Vision Transformer

Tony C. W. Mok, Albert C. S. Chung

CVPR 2022. eprint arXiv:2203.15216

Some code in this repository is modified from PVT and ViT. The MNI152 brain template is provided by FLIRT (FMRIB's Linear Image Registration Tool).

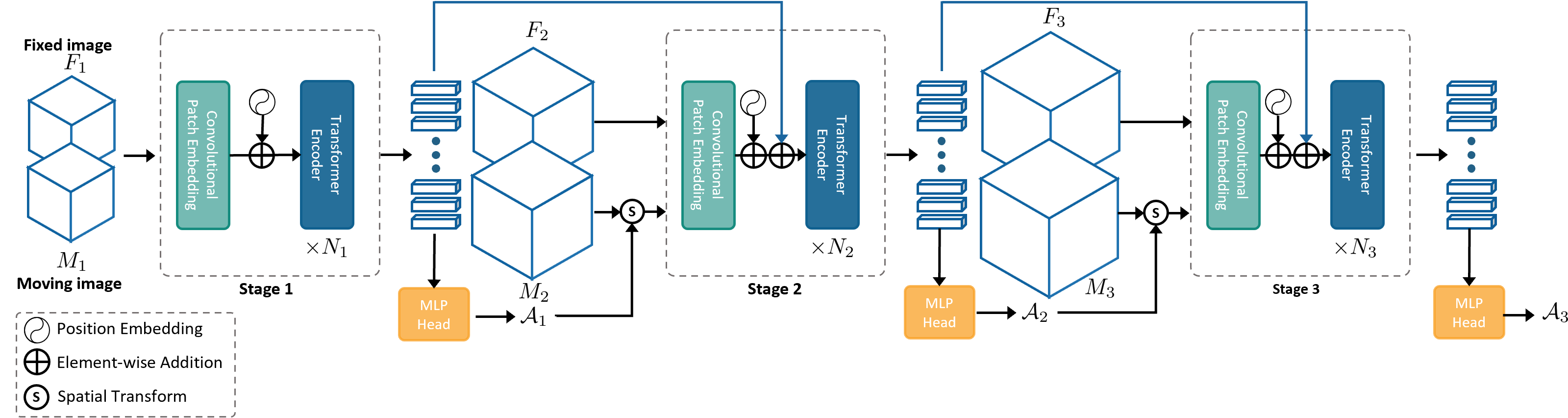

Keywords: Affine registration, Coarse-to-Fine Vision Transformer, 3D Vision Transformer