Many thanks to Jun-Yan Zhu et al. for the CycleGAN paper. Original project and paper:

CycleGAN: Project | Paper | Torch

The code is adapted from the authors' implementation but simplified into just a few files. If you use this code for your research, please cite Jun-Yan Zhu et al.:

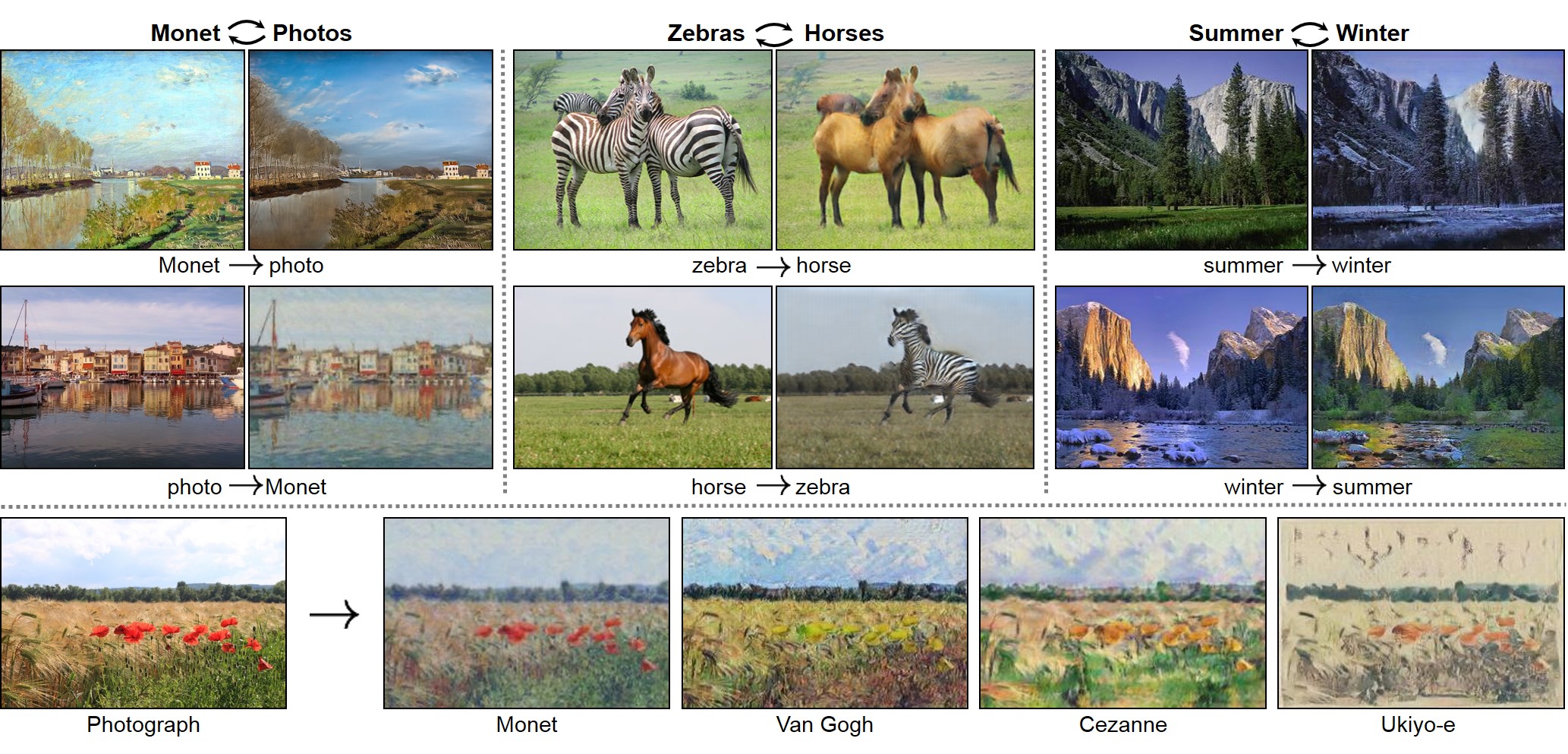

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks.

Jun-Yan Zhu*, Taesung Park*, Phillip Isola, Alexei A. Efros. In ICCV 2017. (* equal contributions) [Bibtex]

Image-to-Image Translation with Conditional Adversarial Networks.

Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, Alexei A. Efros. In CVPR 2017. [Bibtex]

- Linux or macOS

- Python 3

- CPU or NVIDIA GPU + CUDA cuDNN

- Install PyTorch 0.4+ (1.0 tested) with GPU support.

- Clone this repo:

  `git clone https://github.com/cy-xu/simple_CycleGAN`

  `cd simple_CycleGAN`

- The command `pip install -r requirements.txt` will install all required dependencies.
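As a quick sanity check after installation, the sketch below reports whether the installed PyTorch meets the 0.4+ requirement and whether a CUDA device is visible. The helper names `version_tuple` and `meets_minimum` are hypothetical and not part of this repo; the check assumes the version string starts with `major.minor`.

```python
import importlib.util
import re

MIN_TORCH = (0, 4)  # minimum PyTorch version named in the install step


def version_tuple(version):
    """Parse the leading 'major.minor' of a version string such as '1.0.1.post2'."""
    match = re.match(r"(\d+)\.(\d+)", str(version))
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(version, minimum=MIN_TORCH):
    """Return True if the version is at least the required major.minor."""
    return version_tuple(version) >= minimum


if __name__ == "__main__":
    if importlib.util.find_spec("torch") is None:
        print("PyTorch is not installed")
    else:
        import torch

        status = "ok" if meets_minimum(torch.__version__) else "too old"
        print("PyTorch", torch.__version__, status)
        # False simply means training will run on the CPU.
        print("CUDA available:", torch.cuda.is_available())
```

Running on CPU is listed as supported, so a missing CUDA device is not an error, only slower.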

- Download a CycleGAN dataset from the authors (e.g. horse2zebra):

  `bash ./util/download_cyclegan_dataset.sh horse2zebra`

- Train a model (this differs from the original implementation):

  `python simple_cygan.py train`

  - Change training options in `simple_cygan.py`; all options will be saved to a txt file.

  - A new directory named after `opt.name` will be created inside the checkpoints directory.

  - Inside `checkpoints\project_name\` you will find:

    - `checkpoints` for results saved during training

    - `models` for saved models

    - `test_results` for the output of running `python simple_cygan.py test` on the testing dataset

- Test the model:

  `python simple_cygan.py test`

- Name your image folders following the pattern `trainA`, `trainB`, `testA`, and place them in `datasets\your_dataset\`. You can also change directories inside `simple_cygan.py`.
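The directory conventions described above can be sketched in a few lines of Python. This is an illustrative check only, not code from this repo: the function names are hypothetical, the folder names are taken from the text, and `checkpoints` as the default output root is an assumption.

```python
from pathlib import Path

# Folder names as listed in the text above.
DATASET_SUBDIRS = ("trainA", "trainB", "testA")
OUTPUT_SUBDIRS = ("checkpoints", "models", "test_results")


def missing_dataset_dirs(dataset_root):
    """Return the expected image folders that are absent from dataset_root."""
    root = Path(dataset_root)
    return [name for name in DATASET_SUBDIRS if not (root / name).is_dir()]


def expected_output_dirs(name, checkpoints_root="checkpoints"):
    """List the folders described for a run whose opt.name is `name`."""
    base = Path(checkpoints_root) / name
    return [base / sub for sub in OUTPUT_SUBDIRS]


if __name__ == "__main__":
    # "your_dataset" and "project_name" are placeholders, as in the text.
    missing = missing_dataset_dirs(Path("datasets") / "your_dataset")
    print("missing dataset folders:", missing or "none")
    for path in expected_output_dirs("project_name"):
        print("expected output folder:", path)
```

Running the check before training gives an early hint if a folder is misnamed, rather than a failure partway through data loading.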

If you use this code for your research, please cite the papers by Jun-Yan Zhu et al.

@inproceedings{CycleGAN2017,

title={Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks},

author={Zhu, Jun-Yan and Park, Taesung and Isola, Phillip and Efros, Alexei A},

booktitle={Computer Vision (ICCV), 2017 IEEE International Conference on},

year={2017}

}

@inproceedings{isola2017image,

title={Image-to-Image Translation with Conditional Adversarial Networks},

author={Isola, Phillip and Zhu, Jun-Yan and Zhou, Tinghui and Efros, Alexei A},

booktitle={Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on},

year={2017}

}

CycleGAN-Torch | pix2pix-Torch | pix2pixHD | iGAN | BicycleGAN | vid2vid