[OpenReview] [arXiv] [Code]

The official implementation of Periodic Graph Transformers for Crystal Material Property Prediction (NeurIPS 2022).

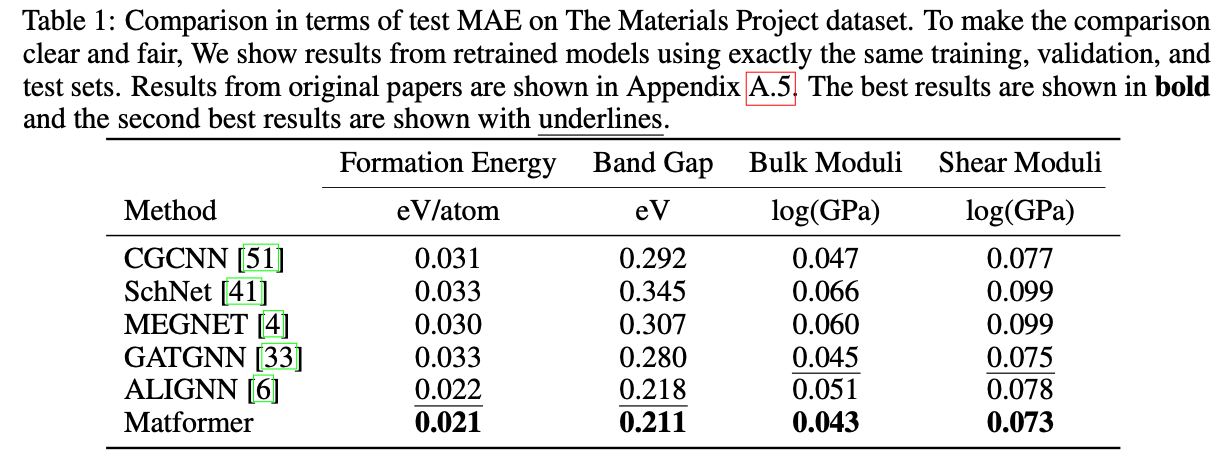

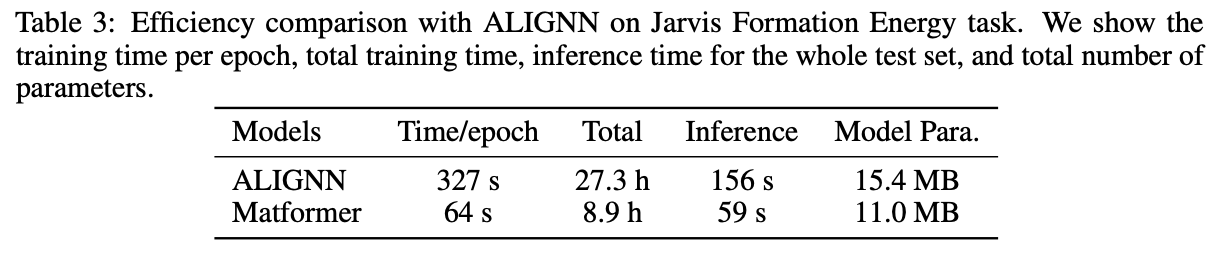

We provide benchmark results for previous works, including CGCNN, SchNet, MEGNET, GATGNN, and ALIGNN, on the Materials Project dataset.

For the formation energy and band gap tasks, we follow ALIGNN and use the same training, validation, and test sets, which contain 60000, 5000, and 4239 crystals, respectively. For the bulk moduli and shear moduli tasks, we follow GATGNN, the recent state-of-the-art method for these two tasks, and use the same training, validation, and test sets, which contain 4664, 393, and 393 crystals, respectively. For shear moduli, one validation sample is removed because its value in GPa is negative. To produce the results, we either use the authors' publicly available code directly or re-implement the models based on their official code and configurations.
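As a quick sanity check on the split sizes quoted above, the following minimal sketch computes the total number of crystals and the train/validation/test fractions for each task group. The dictionary keys and the helper name `split_fractions` are illustrative and are not part of the Matformer code.

```python
# Split sizes (train, val, test) as stated in the text above.
# The keys are illustrative labels, not identifiers from the repository.
MP_SPLITS = {
    "formation_energy_and_band_gap": (60000, 5000, 4239),
    "bulk_and_shear_moduli": (4664, 393, 393),
}


def split_fractions(sizes):
    """Return each split's share of the total, rounded to 3 decimals."""
    total = sum(sizes)
    return tuple(round(n / total, 3) for n in sizes)


if __name__ == "__main__":
    for task, sizes in MP_SPLITS.items():
        # Print the task label, total crystal count, and split fractions.
        print(task, sum(sizes), split_fractions(sizes))
```

The sizes are copied as quoted and do not account for the one validation sample removed for shear moduli.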

We have published the bulk and shear moduli datasets at https://figshare.com/projects/Bulk_and_shear_datasets/165430

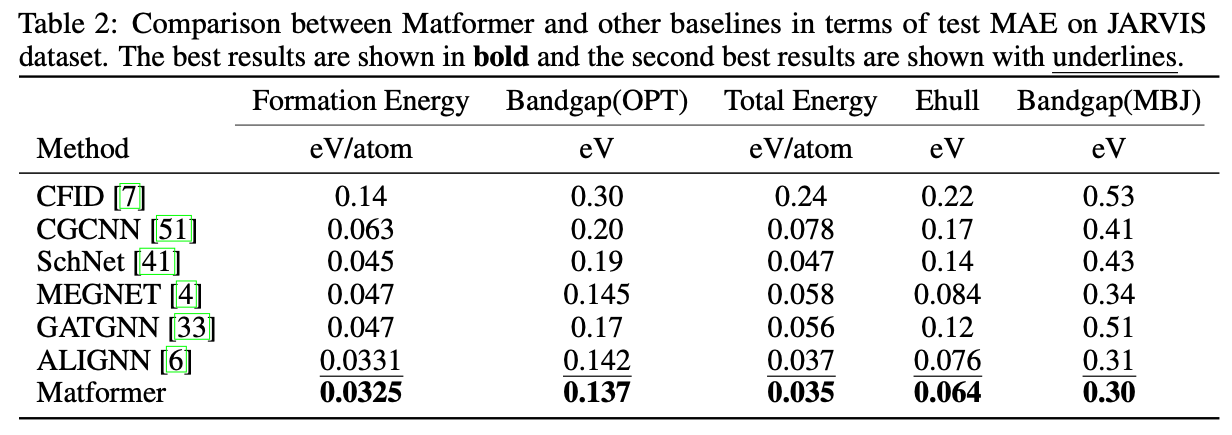

We also provide benchmark results for previous works, including CGCNN, SchNet, MEGNET, GATGNN, and ALIGNN, on the JARVIS dataset.

JARVIS is a newly released database proposed by Choudhary et al. For the JARVIS dataset, we follow ALIGNN and use the same training, validation, and test sets. We evaluate Matformer on five important crystal property tasks: formation energy, bandgap (OPT), bandgap (MBJ), total energy, and Ehull. The training, validation, and test sets contain 44578, 5572, and 5572 crystals for the formation energy, total energy, and bandgap (OPT) tasks. The corresponding numbers are 44296, 5537, and 5537 for Ehull, and 14537, 1817, and 1817 for bandgap (MBJ). The evaluation metric is test MAE. The results for CGCNN and CFID are taken from ALIGNN; the other baseline results are obtained by retraining the models.
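For reference, MAE (mean absolute error) is the average absolute difference between predicted and target property values over the test set. The snippet below is a minimal, dependency-free sketch of that definition. It is not the evaluation code used in this repo, and the numbers are made up for illustration.

```python
def mean_absolute_error(y_true, y_pred):
    """Mean of |y_true[i] - y_pred[i]| over all samples."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("MAE is undefined for an empty set")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    # Made-up values for illustration only, not results from the paper.
    targets = [0.5, -1.0, 2.0, 0.0]
    predictions = [0.25, -0.5, 2.0, 0.25]
    print(mean_absolute_error(targets, predictions))  # prints 0.25
```

Lower is better, and the value is in the same unit as the predicted property.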

You can train and test the model with the following commands:

conda create --name matformer python=3.10

conda activate matformer

conda install pytorch torchvision torchaudio pytorch-cuda=11.6 -c pytorch -c nvidia

conda install pyg -c pyg

pip install jarvis-tools==2022.9.16

python setup.py install
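After the install commands above, it can help to confirm that the main dependencies are importable before starting a training run. The sketch below is not part of the repository. It assumes the usual import names for these packages (`torch` for PyTorch, `torch_geometric` for PyG, and `jarvis` for jarvis-tools), and the helper `missing_modules` is a hypothetical name.

```python
from importlib.util import find_spec

# Assumed import names for the packages installed above:
# pytorch -> torch, pyg -> torch_geometric, jarvis-tools -> jarvis.
REQUIRED_MODULES = ("torch", "torch_geometric", "jarvis")


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be found on the import path."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("missing:", missing if missing else "none")
```

Run it inside the activated `matformer` environment. An empty result only means the modules can be located, not that the CUDA build matches your driver.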

# Training Matformer for the Materials Project

cd matformer/scripts/mp

python train.py

# Training Matformer for JARVIS

cd matformer/scripts/jarvis

python train.py

Please cite our paper if you find the code helpful or if you want to use the benchmark results of the Materials Project and JARVIS. Thank you!

@inproceedings{yan2022periodic,

title={Periodic Graph Transformers for Crystal Material Property Prediction},

author={Keqiang Yan and Yi Liu and Yuchao Lin and Shuiwang Ji},

booktitle={The 36th Annual Conference on Neural Information Processing Systems},

year={2022}

}

This repo builds on the ALIGNN [codebase]. We thank its authors for the excellent codebase.

If you have any questions, please contact me at keqiangyan@tamu.edu.