Official PyTorch implementation for our URST (Ultra-Resolution Style Transfer) framework.

URST is a versatile framework for ultra-high resolution style transfer under limited GPU memory, and it can be easily plugged into most existing neural style transfer methods.

As the input resolution grows, the memory cost of URST hardly increases. In theory, it supports style transfer on images of arbitrary resolution.

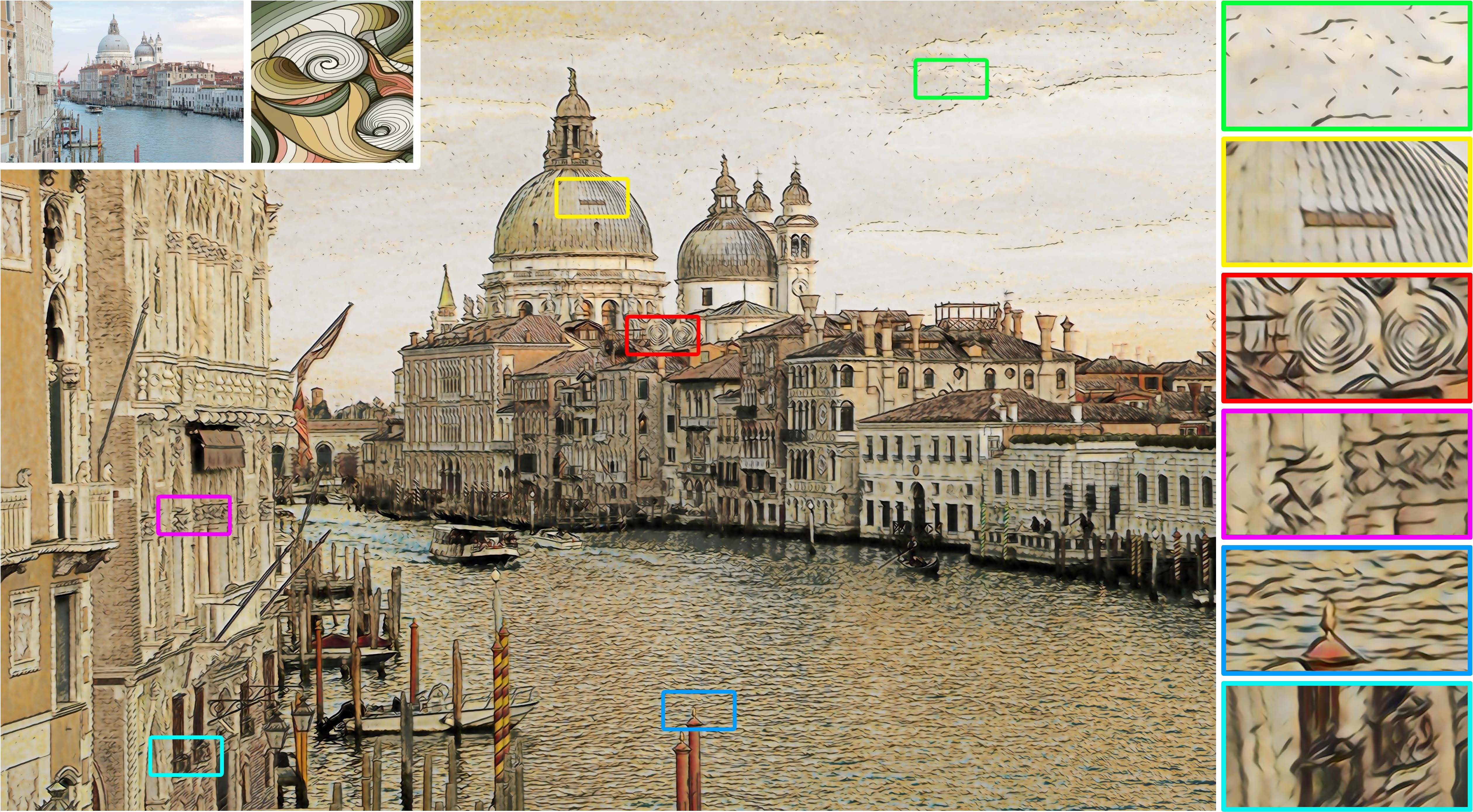

One ultra-high resolution stylized result of 12000 x 8000 pixels (i.e., 96 megapixels).
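To give a feel for the scale of this example, the sketch below counts how many patches a 12000 x 8000 image yields at the default `--patch_size` of 1000. It assumes plain non-overlapping tiling, which is a simplification: the actual scripts may pad or treat borders differently. `count_patches` and `megapixels` are hypothetical helpers written for illustration, not functions from this repository.

```python
import math


def count_patches(width, height, patch_size=1000):
    """Count tiles of at most patch_size x patch_size needed to cover the image.

    Assumption: simple non-overlapping tiling, with smaller tiles at the borders.
    """
    cols = math.ceil(width / patch_size)
    rows = math.ceil(height / patch_size)
    return cols * rows


def megapixels(width, height):
    """Image size in megapixels (millions of pixels)."""
    return width * height / 1e6


print(count_patches(12000, 8000))  # 96 patches of 1000 x 1000
print(megapixels(12000, 8000))     # 96.0
```

Under this assumption, only one patch of at most 1000 x 1000 pixels is processed at a time, which is consistent with the claim that memory cost barely grows with the input resolution.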

This repository is developed based on six representative style transfer methods, which are Johnson et al., MSG-Net, AdaIN, WCT, LinearWCT, and Wang et al. (Collaborative Distillation).

For details, see Towards Ultra-Resolution Neural Style Transfer via Thumbnail Instance Normalization.

If you use this code for a paper, please cite:

    @inproceedings{chen2022towards,
      title={Towards Ultra-Resolution Neural Style Transfer via Thumbnail Instance Normalization},
      author={Chen, Zhe and Wang, Wenhai and Xie, Enze and Lu, Tong and Luo, Ping},
      booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
      year={2022}
    }

- python3.6, pillow, tqdm, torchfile, pytorch1.1+ (for inference)

      pip install pillow
      pip install tqdm
      pip install torchfile
      conda install pytorch==1.1.0 torchvision==0.3.0 -c pytorch

- tensorboardX (for training)

      pip install tensorboardX
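After installing, a quick way to confirm that the environment is complete is to check that each package can be found by Python. The sketch below is a hypothetical helper, not part of this repository; it assumes the usual import names (`PIL` for pillow, `torch` for pytorch).

```python
import importlib.util

# Import names for the packages listed above.
# Assumption: pillow is imported as PIL and pytorch as torch.
INFERENCE_MODULES = ["PIL", "tqdm", "torchfile", "torch"]
TRAINING_MODULES = ["tensorboardX"]


def missing_modules(names):
    """Return the subset of top-level module names that cannot be located."""
    return [name for name in names if importlib.util.find_spec(name) is None]


print("missing for inference:", missing_modules(INFERENCE_MODULES))
print("missing for training:", missing_modules(TRAINING_MODULES))
```

An empty list means every package in that group was found. This only checks presence, not versions.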

Then, clone the repository locally:

    git clone https://github.com/czczup/URST.git

Step 1: Prepare images

- Content images and style images are placed in examples/.

- Since the ultra-high resolution images are quite large, we do not include them in this repository. Please download them from this Google Drive.

- All content images used in this repository are collected from pexels.com.

Step 2: Prepare models

- Download the models from this Google Drive. Unzip them and merge them into this repository.

Step 3: Stylization

First, choose a specific style transfer method and enter the directory.

Then, please run the corresponding script. The stylized results will be saved in output/.

- For Johnson et al., we use the PyTorch implementation Fast-Neural-Style-Transfer.

      cd Johnson2016Perceptual/
      CUDA_VISIBLE_DEVICES=<gpu_id> python test.py --content <content_path> --model <model_path> --URST

- For MSG-Net, we use the official PyTorch implementation PyTorch-Multi-Style-Transfer.

      cd Zhang2017MultiStyle/
      CUDA_VISIBLE_DEVICES=<gpu_id> python test.py --content <content_path> --style <style_path> --URST

- For AdaIN, we use the PyTorch implementation pytorch-AdaIN.

      cd Huang2017AdaIN/
      CUDA_VISIBLE_DEVICES=<gpu_id> python test.py --content <content_path> --style <style_path> --URST

- For WCT, we use the PyTorch implementation PytorchWCT.

      cd Li2017Universal/
      CUDA_VISIBLE_DEVICES=<gpu_id> python test.py --content <content_path> --style <style_path> --URST

- For LinearWCT, we use the official PyTorch implementation LinearStyleTransfer.

      cd Li2018Learning/
      CUDA_VISIBLE_DEVICES=<gpu_id> python test.py --content <content_path> --style <style_path> --URST

- For Wang et al. (Collaborative Distillation), we use the official PyTorch implementation Collaborative-Distillation.

      cd Wang2020Collaborative/PytorchWCT/
      CUDA_VISIBLE_DEVICES=<gpu_id> python test.py --content <content_path> --style <style_path> --URST

- For Multimodal Transfer, we use the PyTorch implementation multimodal_style_transfer.

      cd Wang2017Multimodal/
      CUDA_VISIBLE_DEVICES=<gpu_id> python test.py --content <content_path> --model <model_name> --URST
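The commands above differ only in the directory and in whether the second input is passed with `--model` or `--style`. As a convenience, the following sketch collects that mapping in one place and assembles the matching command. The short method keys, the `build_test_command` helper, and the example paths are hypothetical; only the directory names and flags are taken from the commands listed here.

```python
import os
import shlex

# Directory and second-input flag for each method, copied from the commands above.
# The dictionary keys are made-up short names used only in this sketch.
METHODS = {
    "johnson": ("Johnson2016Perceptual", "--model"),
    "msgnet": ("Zhang2017MultiStyle", "--style"),
    "adain": ("Huang2017AdaIN", "--style"),
    "wct": ("Li2017Universal", "--style"),
    "linearwct": ("Li2018Learning", "--style"),
    "collab": ("Wang2020Collaborative/PytorchWCT", "--style"),
    "multimodal": ("Wang2017Multimodal", "--model"),
}


def build_test_command(method, content, reference, gpu_id=0):
    """Return (working directory, environment, argv) for one stylization run."""
    directory, ref_flag = METHODS[method]
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu_id))
    argv = ["python", "test.py", "--content", content, ref_flag, reference, "--URST"]
    return directory, env, argv


# Example with placeholder paths (hypothetical file names).
directory, env, argv = build_test_command("adain", "content.jpg", "style.jpg")
command = " ".join(shlex.quote(arg) for arg in argv)
print("cd {} && CUDA_VISIBLE_DEVICES={} {}".format(
    directory, env["CUDA_VISIBLE_DEVICES"], command))
```

To actually launch the run from the repository root, the three values can be passed to `subprocess.run(argv, cwd=directory, env=env, check=True)`.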

Options:

- --patch_size: The maximum size of each patch. The default setting is 1000.
- --style_size: The size of the style image. The default setting is 1024.
- --thumb_size: The size of the thumbnail image. The default setting is 1024.
- --URST: Use our URST framework to process ultra-high resolution images.
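For reference, these options and defaults could be declared with `argparse` roughly as follows. This is a minimal sketch, not the parser used by the scripts in this repository: the integer types and the treatment of `--URST` as a boolean switch are assumptions inferred from the defaults and from how the flag appears in the commands.

```python
import argparse


def build_parser():
    """Sketch of a parser exposing the four options described above."""
    parser = argparse.ArgumentParser(description="Illustrative test-time options")
    parser.add_argument("--patch_size", type=int, default=1000,
                        help="maximum size of each patch")
    parser.add_argument("--style_size", type=int, default=1024,
                        help="size of the style image")
    parser.add_argument("--thumb_size", type=int, default=1024,
                        help="size of the thumbnail image")
    # Assumption: --URST is a switch that takes no value.
    parser.add_argument("--URST", action="store_true",
                        help="use the URST framework for ultra-high resolution images")
    return parser


args = build_parser().parse_args(["--URST", "--patch_size", "500"])
print(args.patch_size, args.thumb_size, args.URST)  # 500 1024 True
```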

Step 1: Prepare datasets

Download the MS-COCO 2014 dataset and WikiArt dataset.

- MS-COCO

      wget http://msvocds.blob.core.windows.net/coco2014/train2014.zip

- WikiArt

  - Either manually download from Kaggle.
  - Or install kaggle-cli and download by running:

        kg download -u <username> -p <password> -c painter-by-numbers -f train.zip

Step 2: Prepare models

Same as Step 2 in the test phase.

Step 3: Train the decoder with our stroke perceptual loss

- For AdaIN:

      cd Huang2017AdaIN/
      CUDA_VISIBLE_DEVICES=<gpu_id> python trainv2.py --content_dir <coco_path> --style_dir <wikiart_path>

- For LinearWCT:

      cd Li2018Learning/
      CUDA_VISIBLE_DEVICES=<gpu_id> python trainv2.py --contentPath <coco_path> --stylePath <wikiart_path>

This repository is released under the Apache 2.0 license as found in the LICENSE file.