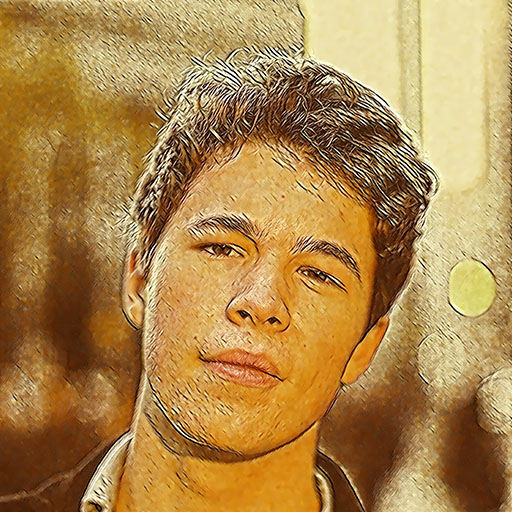

Most mobile devices and personal computers cannot stylize high-resolution images because of memory limitations. To solve this problem, we propose a novel method named block shuffle, which can stylize high-resolution images with limited memory. In our experiments, we use Logan Engstrom's implementation of Fast Style Transfer as the baseline. This repository provides the source code and 16 trained models. We also developed an Android demo app; if you are interested in it, please click here.

- CUDA 9, cuDNN 7

- Python 3.6

- Python packages: tensorflow-gpu==1.9, opencv-python, numpy
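Before running the scripts, it can help to confirm that the packages listed above are importable. The snippet below is a minimal sketch, not part of this repository: the helper name `missing_packages` is hypothetical, and it only checks that each module can be found, not that the installed version matches (for example tensorflow-gpu 1.9).

```python
import importlib.util
import sys

# pip package name -> module name used in "import ..."
# (opencv-python is imported as cv2, tensorflow-gpu as tensorflow).
REQUIRED_MODULES = {
    "tensorflow-gpu": "tensorflow",
    "opencv-python": "cv2",
    "numpy": "numpy",
}


def missing_packages(required=REQUIRED_MODULES):
    """Return the pip package names whose import module cannot be found."""
    return [
        package
        for package, module in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    print("Python version:", ".".join(str(part) for part in sys.version_info[:3]))
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All listed packages were found.")
```

If a package is reported missing, install it with pip before running any of the scripts described in this README.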

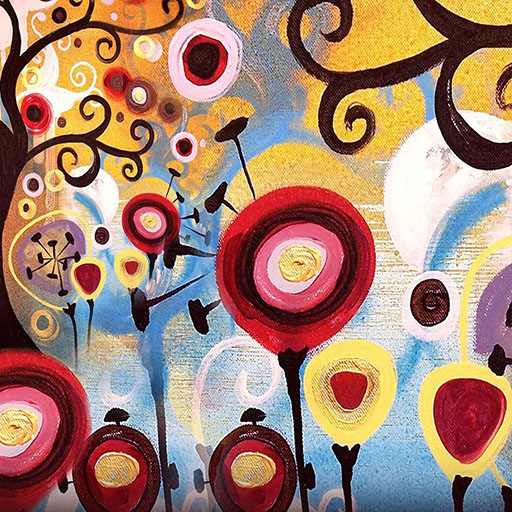

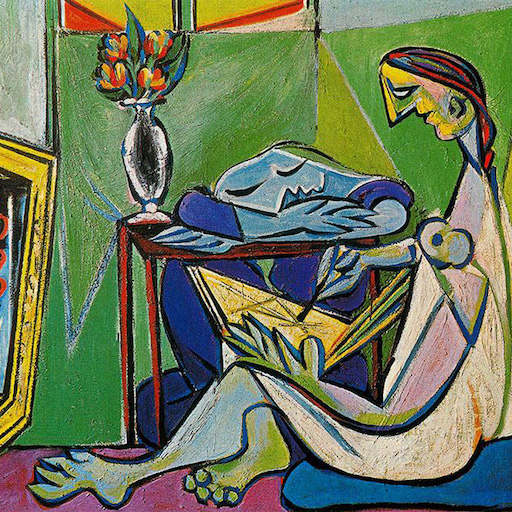

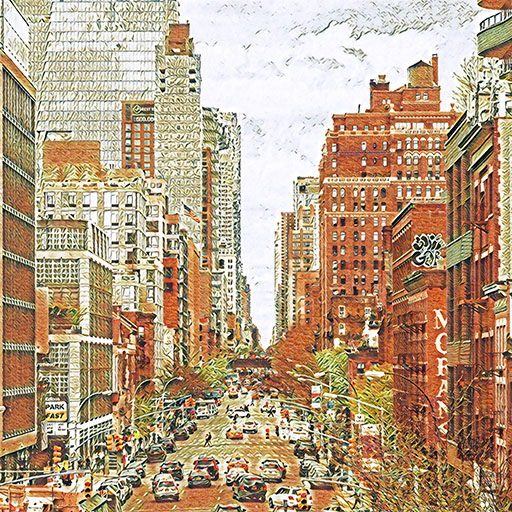

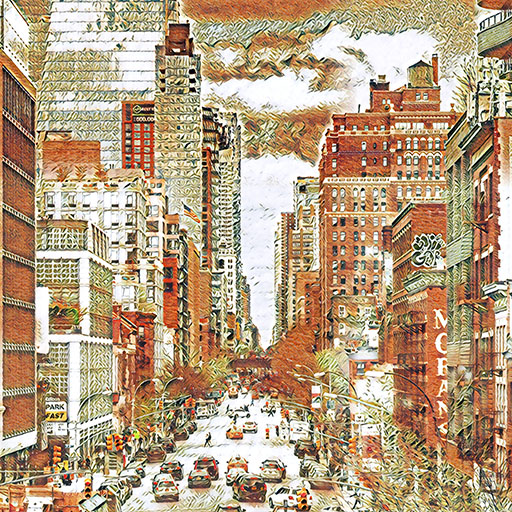

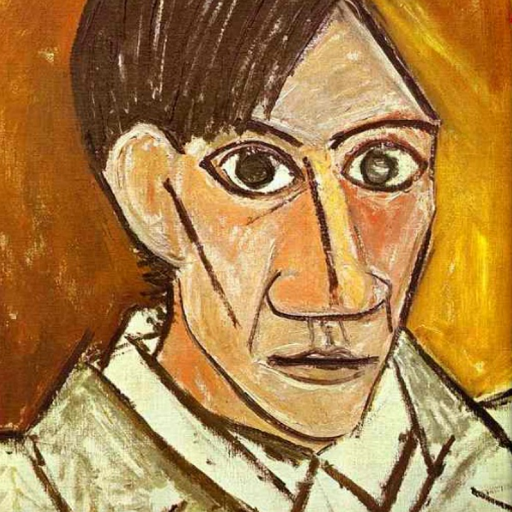

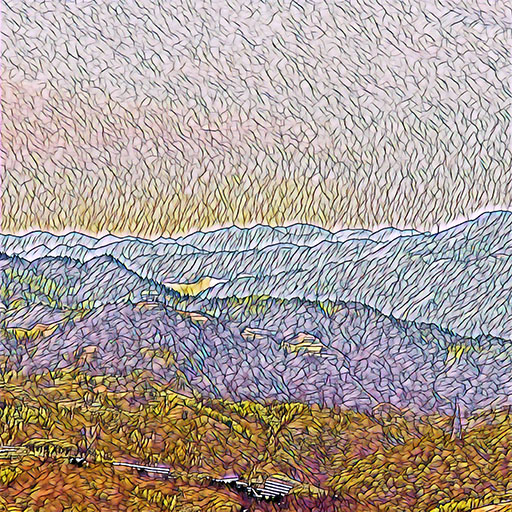

| #01 | #02 | #03 | #04 | #05 | #06 | #07 | #08 |
|---|---|---|---|---|---|---|---|
| | | | | | | | |
| #09 | #10 | #11 | #12 | #13 | #14 | #15 | #16 |
| | | | | | | | |

Use baseline.py to stylize a high-resolution image. A GPU with 12 GB of memory can stylize images up to 4000×4000 pixels; if your GPU does not have enough memory, the script throws an out-of-memory (OOM) error. Example usage:

    python baseline.py --input examples/content/xxx.jpg \
      --output examples/result/xxx.jpg \
      --model models/01/model.pb \
      --gpu 0

Use feathering-based.py to stylize a high-resolution image. This method is very simple, but it doesn't work well. Example usage:

    python feathering-based.py --input examples/content/xxx.jpg \
      --output examples/result/xxx.jpg \
      --model models/01/model.pb \
      --gpu 0

Use block_shuffle.py to stylize a high-resolution image. In our experiments, we set --max-width to 1000. If your GPU cannot stylize a 1000×1000 image, change this parameter to a smaller value. Example usage:

    python block_shuffle.py --input examples/content/xxx.jpg \
      --output examples/result/xxx.jpg \
      --model models/01/model.pb \
      --max-width 1000 \
      --gpu 0

We provide 16 trained fast style transfer models. If you want to train a new model, download the COCO2014 dataset and the pre-trained VGG-19 first; otherwise, you can skip this step.
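To try every provided model on the same image, the block_shuffle.py command can be generated in a loop. The following is a minimal sketch rather than a script shipped with this repository. It assumes the 16 models are stored as models/01/model.pb through models/16/model.pb (only models/01/model.pb appears in the examples here), and the helper names `build_command` and `commands_for_all_models` are hypothetical.

```python
import shlex
import sys
from pathlib import Path


def build_command(content, output, model, max_width=1000, gpu=0):
    """Build a block_shuffle.py command using the flags from the usage example."""
    return [
        sys.executable, "block_shuffle.py",
        "--input", Path(content).as_posix(),
        "--output", Path(output).as_posix(),
        "--model", Path(model).as_posix(),
        "--max-width", str(max_width),
        "--gpu", str(gpu),
    ]


def commands_for_all_models(content, result_dir, model_root="models", count=16):
    """Return one command per model, writing each result to its own file."""
    content = Path(content)
    commands = []
    for index in range(1, count + 1):
        tag = f"{index:02d}"
        # Assumed layout: <model_root>/<two-digit id>/model.pb
        model = Path(model_root) / tag / "model.pb"
        output = Path(result_dir) / f"{content.stem}_{tag}{content.suffix}"
        commands.append(build_command(content, output, model))
    return commands


if __name__ == "__main__":
    # Print the commands for review instead of executing them.
    for command in commands_for_all_models("examples/content/xxx.jpg", "examples/result"):
        print(" ".join(shlex.quote(part) for part in command))
```

Each printed line can be run as is, or each command list can be passed to `subprocess.run(command, check=True)` from the repository root. Lower `max_width` if the GPU runs out of memory.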

Run setup.sh to download the COCO2014 dataset and the pre-trained VGG-19, or download them from the following link (place them in data/):

Use style.py to train a new style transfer network. Training takes 26 hours on an NVIDIA Tesla K80 GPU. Example usage:

    python style.py --style examples/style/style.jpg \
      --checkpoint-dir checkpoint/style01 \
      --test examples/content/chicago.jpg \
      --test-dir checkpoint/style01

Use export.py to export .pb files. Example usage:

python export.py --input checkpoint/xxx/fns.ckpt \

--output checkpoint/xxx/models.pb \

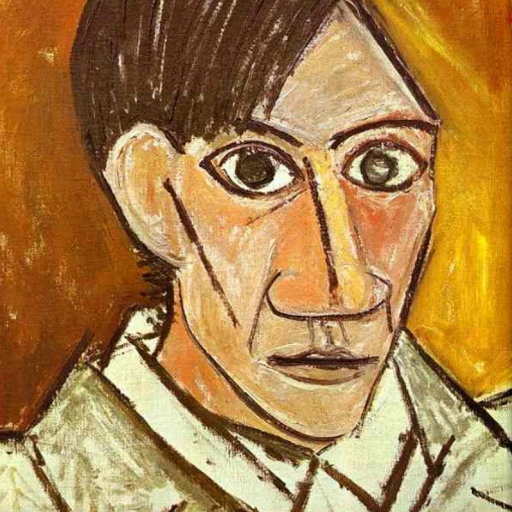

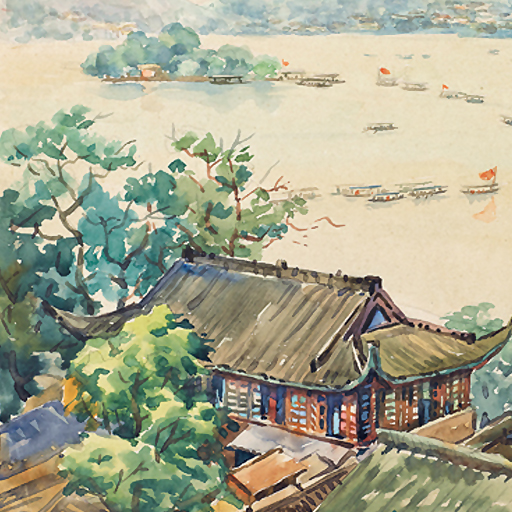

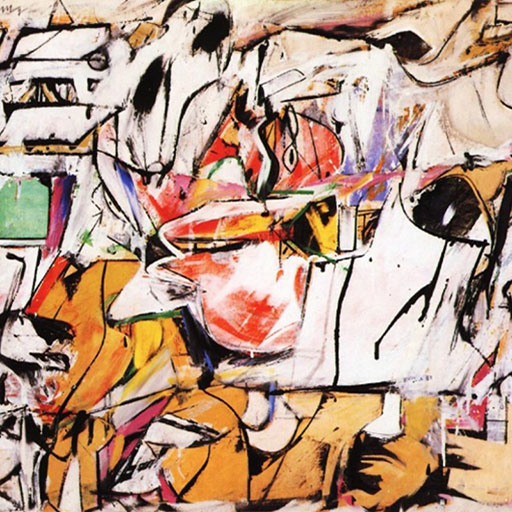

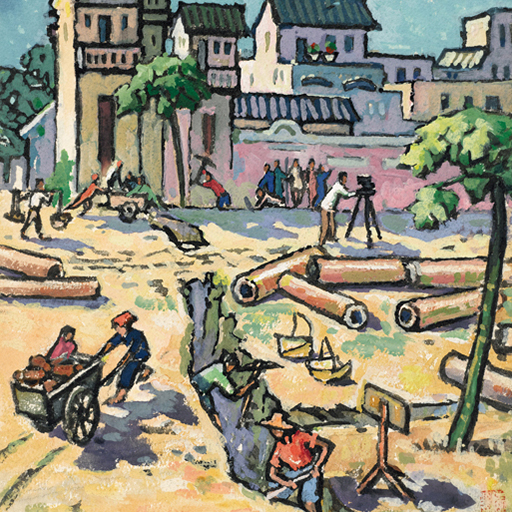

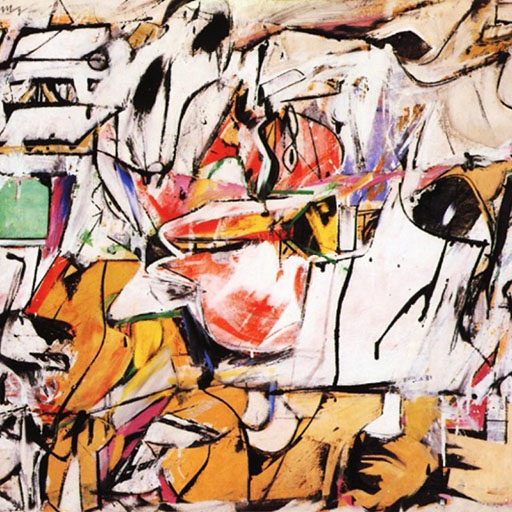

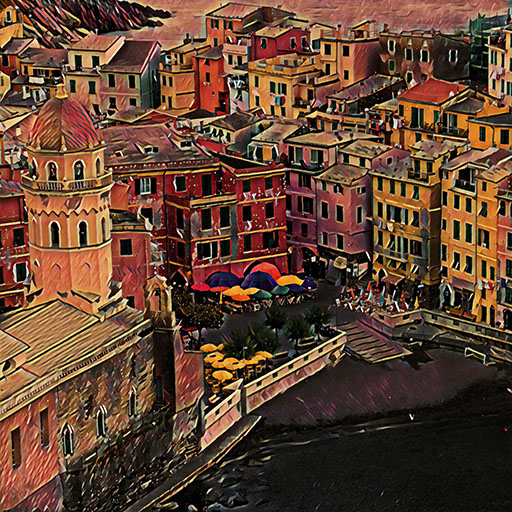

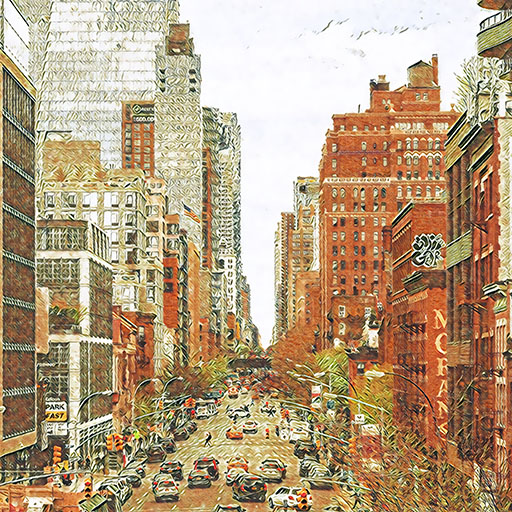

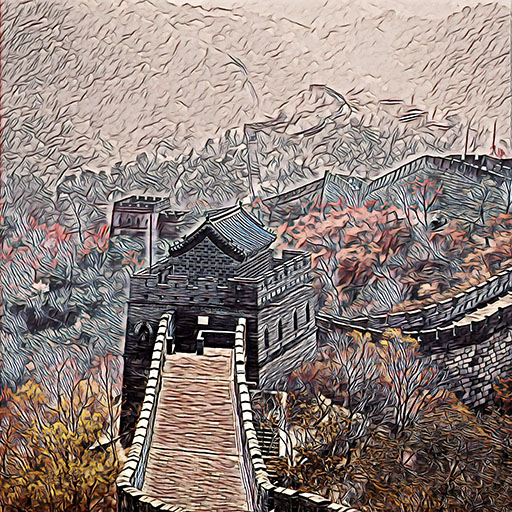

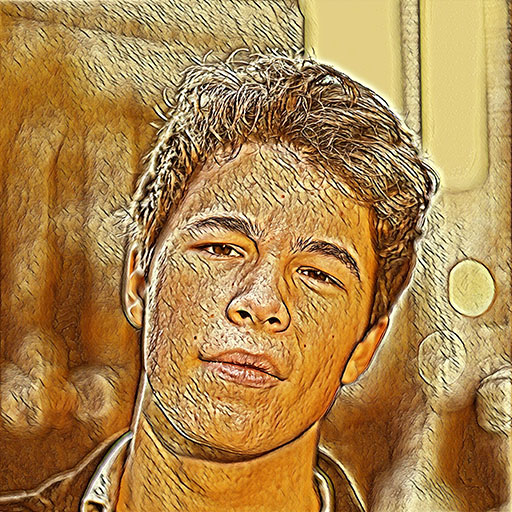

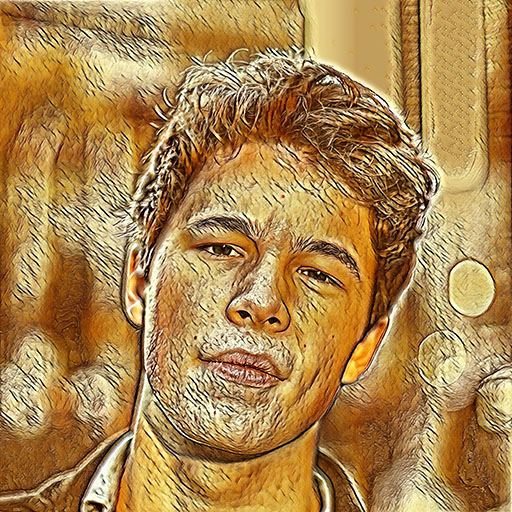

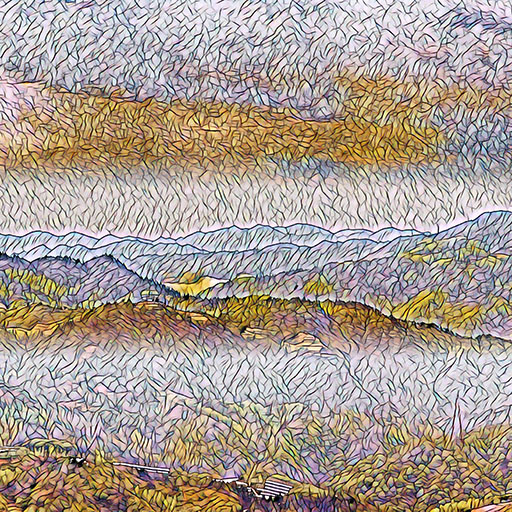

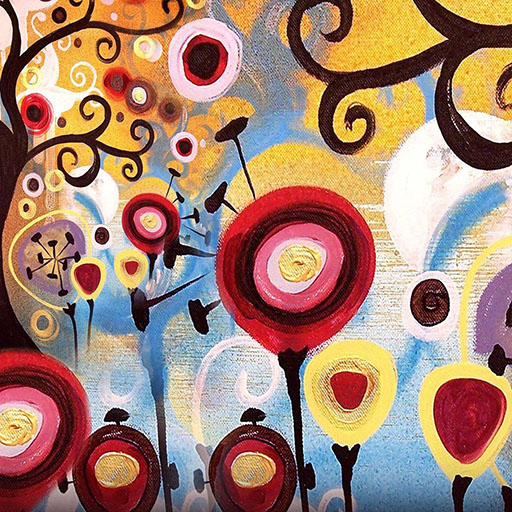

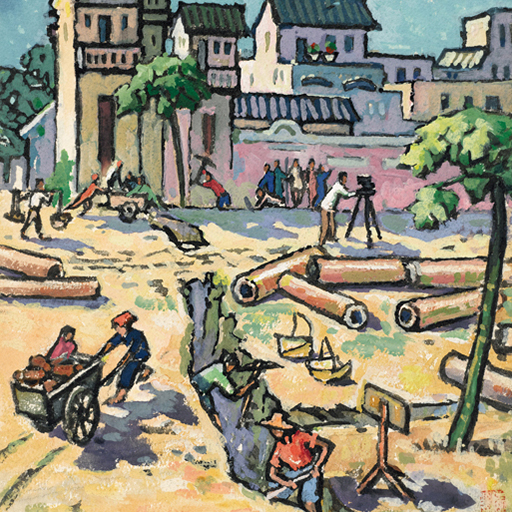

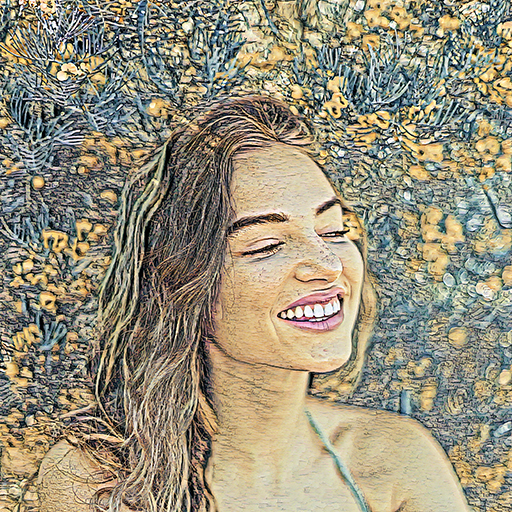

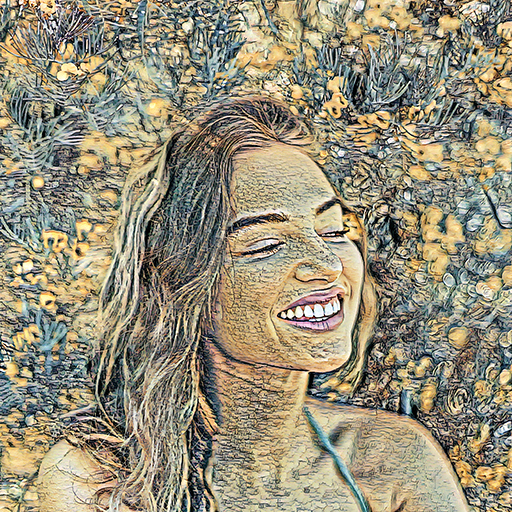

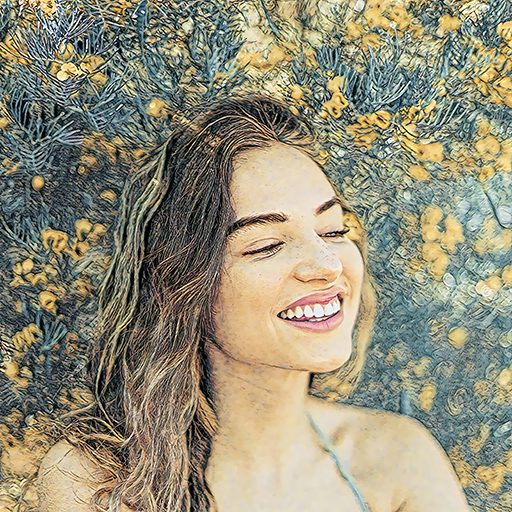

--gpu 0 | Style | Content | Baseline | Baseline+Feathering | Baseline+Block Shuffle (Ours) |

|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|