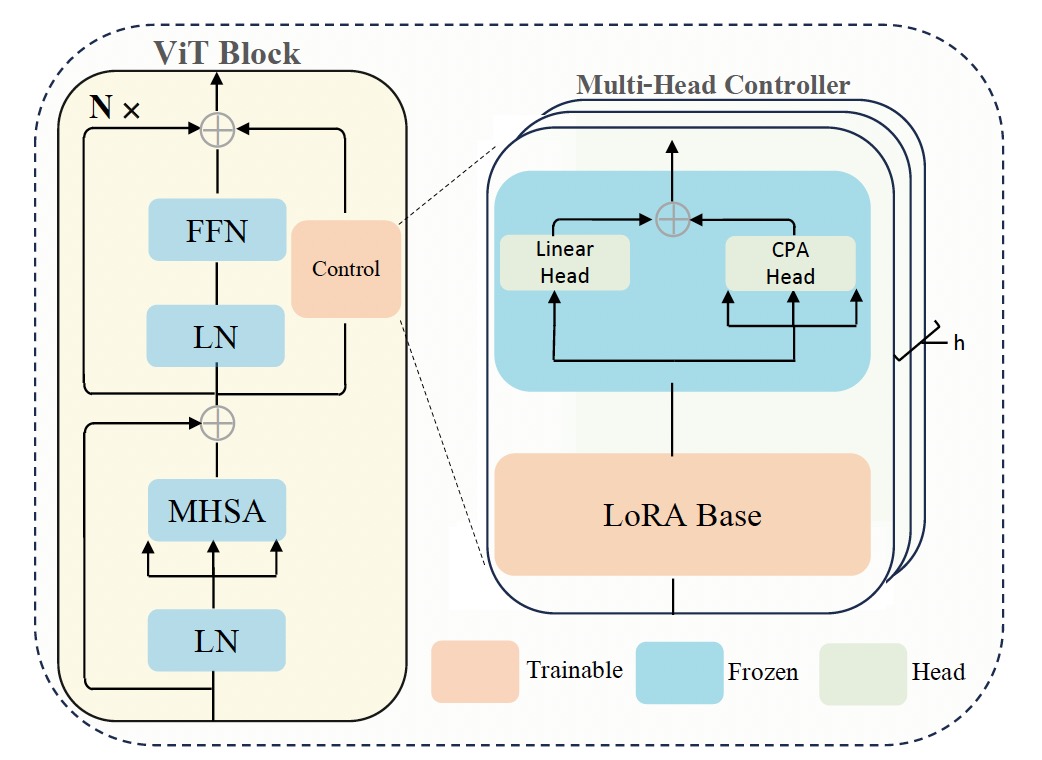

This is a PyTorch implementation of the paper *Parameter-Efficient Fine-Tuning with Controls*. The goal of our work is to provide a pure control view of the well-known LoRA algorithm.
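The paper reinterprets LoRA from a control perspective. For readers new to LoRA itself, here is a minimal, dependency-free sketch of the standard low-rank update it starts from: a frozen weight `W0` plus a trainable product `B A` scaled by `alpha / r`, with `B` zero-initialized so fine-tuning begins exactly at the pretrained model. This is not code from this repository; `LoRALinear`, `matvec`, `matmul`, and `merged_weight` are hypothetical names, and the control-theoretic formulation from the paper is not reproduced here.

```python
import random

def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def matmul(P, Q):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*Q))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in P]

class LoRALinear:
    """Hypothetical layer: frozen W0 (d_out x d_in) plus a rank-r update B @ A."""

    def __init__(self, W0, r, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W0), len(W0[0])
        self.W0 = W0              # frozen pretrained weight, never updated
        self.scale = alpha / r    # standard LoRA scaling factor
        # A (r x d_in): small random init; B (d_out x r): zero init,
        # so the low-rank term contributes nothing before training.
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.W0, x)
        # Compute B (A x) directly; the d_out x d_in product B @ A is never formed.
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def merged_weight(self):
        """Fold the update into one matrix: W0 + (alpha / r) * B @ A."""
        BA = matmul(self.B, self.A)
        return [[w + self.scale * u for w, u in zip(wr, ur)]
                for wr, ur in zip(self.W0, BA)]
```

Because `B` starts at zero, `LoRALinear([[1.0, 2.0, 0.0], [0.0, 1.0, -1.0]], r=1, alpha=2.0).forward([1.0, 1.0, 1.0])` returns `[3.0, 0.0]`, which is exactly `W0 x`. After training, `merged_weight()` folds the update back into a single matrix, so inference needs no extra computation.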

Requirements:

- CUDA 11.2 + PyTorch 2.1.0 + torchvision 0.16.0
- timm 1.0.7
- easydict

The pretrained `mae_pretrain_vit_b` model is available here.

Getting started:

```bash
# image
python main.py \
    --batch_size 128 --cls_token \
    --drop_path 0.0 --lr_decay 0.97 \
    --dataset cifar100 --ffn_adapt
```

The project is based on MAE and AdaptFormer. We thank all the authors for their excellent work.
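Since the code builds on MAE, the `--lr_decay 0.97` flag in the command above most likely sets layer-wise learning-rate decay as in MAE's fine-tuning recipe. That is an assumption, not something confirmed here. The sketch assumes MAE's scheme: embeddings get layer id 0, transformer block `i` gets id `i + 1`, and everything else (e.g. the head) gets the top id, which keeps the full base rate. The helpers `layer_lr_scales` and `layer_id_for` are hypothetical names.

```python
def layer_lr_scales(num_blocks, layer_decay):
    """Per-layer learning-rate multipliers (MAE-style layer-wise decay assumed).

    Layer 0: patch embedding, cls token, position embedding.
    Layers 1..num_blocks: transformer blocks.
    Layer num_blocks + 1: everything else (e.g. the head), scale 1.0.
    """
    num_layers = num_blocks + 1
    return [layer_decay ** (num_layers - i) for i in range(num_layers + 1)]

def layer_id_for(name, num_blocks):
    """Map a ViT parameter name to its layer id (MAE/timm naming assumed)."""
    if name in ("cls_token", "pos_embed") or name.startswith("patch_embed"):
        return 0
    if name.startswith("blocks."):
        return int(name.split(".")[1]) + 1
    return num_blocks + 1

# ViT-B has 12 transformer blocks.
scales = layer_lr_scales(12, 0.97)
for name in ("pos_embed", "blocks.0.attn.qkv.weight", "blocks.11.mlp.fc2.bias", "head.weight"):
    print(name, round(scales[layer_id_for(name, 12)], 4))
```

With a decay of 0.97 on ViT-B, the embeddings train at 0.97^13, roughly 0.67 times the base rate, while the head keeps the full rate. The earliest, most general pretrained features therefore move the least during fine-tuning.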

```bibtex
@inproceedings{zhangparameter,
  title={Parameter-Efficient Fine-Tuning with Controls},
  author={Zhang, Chi and Cheng, Jingpu and Xu, Yanyu and Li, Qianxiao},
  booktitle={Forty-first International Conference on Machine Learning}
}
```

This project is under the MIT license. See LICENSE for details.