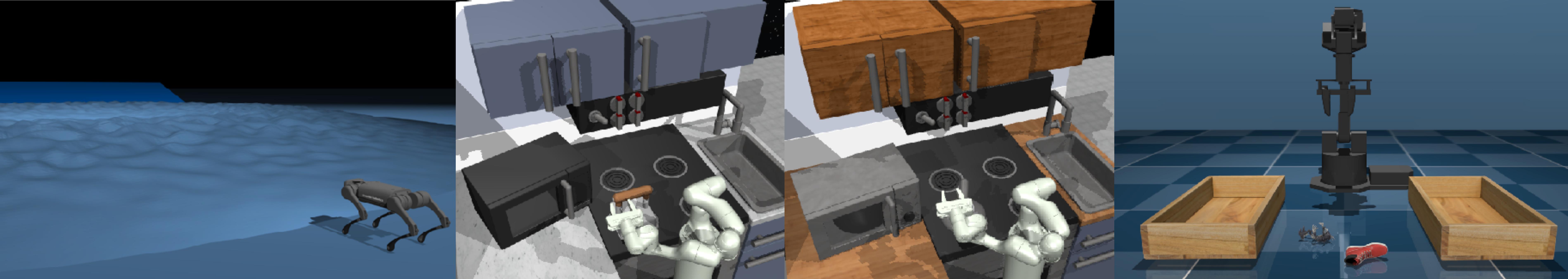

Offline reinforcement learning algorithms hold the promise of enabling data-driven RL methods that do not require costly or dangerous real-world exploration and benefit from large pre-collected datasets. This in turn can facilitate real-world applications, as well as a more standardized approach to RL research. Furthermore, offline RL methods can provide effective initializations for online fine-tuning to overcome challenges with exploration. However, evaluating progress on offline RL algorithms requires effective and challenging benchmarks that capture properties of real-world tasks, provide a range of task difficulties, and cover a range of challenges both in terms of the parameters of the domain (e.g., length of the horizon, sparsity of rewards) and the parameters of the data (e.g., narrow demonstration data or broad exploratory data). While considerable progress in offline RL in recent years has been enabled by simpler benchmark tasks, the most widely used datasets are increasingly saturating in performance and may fail to reflect properties of realistic tasks. We propose a new benchmark for offline RL that focuses on realistic simulations of robotic manipulation and locomotion environments, based on models of real-world robotic systems, and comprising a variety of data sources, including scripted data, play-style data collected by human teleoperators, and other data sources. Our proposed benchmark covers state-based and image-based domains, and supports both offline RL and online fine-tuning evaluation, with some of the tasks specifically designed to require both pre-training and fine-tuning. We hope that our proposed benchmark will facilitate further progress on both offline RL and fine-tuning algorithms.

To set up the environment, run:

conda create --name d5rl python=3.9

conda activate d5rl

pip install --upgrade pip

pip install -r requirements.txt

pip install --upgrade "jax[cuda]" -f https://storage.googleapis.com/jax-releases/jax_releases.html # Note: wheels only available on Linux.

See the JAX installation instructions for other versions of CUDA.
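After installation, it can help to confirm which devices JAX sees from inside the `d5rl` environment. The snippet below is a minimal sketch, not part of the repository: `check_jax_devices` is a hypothetical helper name, and it only calls `jax.devices()`. If a GPU is not listed, the CUDA wheel was probably not picked up.

```shell
# Hypothetical helper (not part of this repository): print the devices
# that JAX can see in the currently active Python environment.
check_jax_devices() {
  python -c 'import jax; print(jax.devices())' \
    || echo "JAX is not importable in this environment"
}

check_jax_devices
```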

Download the Standard Franka Kitchen data with these commands:

mkdir -p /PATH/TO/datasets/standard_kitchen

cd /PATH/TO/datasets/standard_kitchen

gsutil -m cp -r "gs://d5rl_datasets/KITCHEN_DATA/kitchen_demos_multitask_lexa_view_and_wrist_npz" .

Then set

export STANDARD_KITCHEN_DATASETS=/PATH/TO/datasets/standard_kitchen/kitchen_demos_multitask_lexa_view_and_wrist_npz

Download the Randomized Franka Kitchen data with these commands:

mkdir -p /PATH/TO/datasets/randomized_kitchen

cd /PATH/TO/datasets/randomized_kitchen

gsutil -m cp -r "gs://d5rl_datasets/KITCHEN_DATA/expert_demos" .

gsutil -m cp -r "gs://d5rl_datasets/KITCHEN_DATA/play_data" .

Then set

export KITCHEN_DATASETS=/PATH/TO/datasets/randomized_kitchen

Data download for the WidowX Sorting environments is handled internally in the environment code.
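Before launching kitchen experiments, the two dataset variables above can be sanity-checked from the shell. This is a minimal sketch under stated assumptions: `check_dataset_dir` is a hypothetical helper, not something the repository provides, and it only verifies that each variable is set and points to an existing directory, not that the contents are complete.

```shell
# Hypothetical helper (not part of this repository): check that a dataset
# environment variable is set and points to an existing directory.
check_dataset_dir() {
  var_name="$1"
  # Indirect lookup of the variable named by $var_name (POSIX-compatible).
  eval "dir=\${$var_name:-}"
  if [ -z "$dir" ]; then
    echo "$var_name is not set"
    return 1
  fi
  if [ ! -d "$dir" ]; then
    echo "$var_name points to a missing directory: $dir"
    return 1
  fi
  echo "$var_name OK: $dir"
}

# Report on both kitchen dataset variables without aborting the shell.
check_dataset_dir STANDARD_KITCHEN_DATASETS || true
check_dataset_dir KITCHEN_DATASETS || true
```

If either check reports a problem, re-run the corresponding `export` line in the same shell session that will launch the experiments.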

Download the data for the A1 environments with these commands:

mkdir -p /PATH/TO/datasets/a1

cd /PATH/TO/datasets/a1

gsutil -m cp -r "gs://d5rl_datasets/a1/a1_extrapolate_above.zip" .

gsutil -m cp -r "gs://d5rl_datasets/a1/a1_hiking.zip" .

gsutil -m cp -r "gs://d5rl_datasets/a1/a1_interpolate.zip" .

To replicate the results from the paper, see the following locations for examples of launching experiments:

Standard Kitchen Environment: ./examples/kitchen_launch_scripts/standardkitchen

Randomized Kitchen Environment: ./examples/kitchen_launch_scripts/randomizedkitchen

WidowX Sorting Environments: ./examples/run_bc_widowx.sh