Here we provide code to reproduce our results.

- PyTorch

- transformers (pip install transformers)

All dataset files and inference log files are included in this repo, except for large training files, which are maintained under Git LFS. Model checkpoints are stored on Google Drive. The folder containing all checkpoints can be found at this link.

- 4 X 4 Mult - GPT-2: data model log

- 4 X 4 Mult - GPT-2 Medium: data model log

- 5 X 5 Mult - GPT-2: data model log

- 5 X 5 Mult - GPT-2 Medium: data model log

- GSM8K - GPT-2: data model log

- GSM8K - GPT-2 Medium: data model log

We use 4 X 4 Mult with GPT-2 Small as the running example. All commands assume that the working directory is implicit_chain_of_thought.

Training, validation, and test files use the following format, with one example per line:

[input 1]||[chain-of-thought 1] #### [output 1]

[input 2]||[chain-of-thought 2] #### [output 2]

[input 3]||[chain-of-thought 3] #### [output 3]

...
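
A minimal sketch of how one such line could be split into its three fields. The names `Example` and `parse_example` are illustrative assumptions and not part of the repository's code; the sketch relies only on the `||` and `####` separators described above.

```python
from typing import NamedTuple


class Example(NamedTuple):
    """One line of a data file, split into its three fields."""
    input_text: str
    chain_of_thought: str
    output: str


def parse_example(line: str) -> Example:
    """Split '[input]||[chain-of-thought] #### [output]' into fields.

    Hypothetical helper for illustration; the repository may parse
    its data files differently.
    """
    input_text, sep, rest = line.strip().partition("||")
    if not sep:
        raise ValueError("missing '||' separator")
    chain_of_thought, sep, output = rest.partition("####")
    if not sep:
        raise ValueError("missing '####' separator")
    return Example(input_text.strip(), chain_of_thought.strip(), output.strip())


# Parse the template line itself to show which part lands in which field.
example = parse_example("[input 1]||[chain-of-thought 1] #### [output 1]")
print(example.input_text)
print(example.chain_of_thought)
print(example.output)
```

Raising `ValueError` on a missing separator is a design choice of this sketch, so that malformed lines fail loudly instead of producing empty fields.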

As an example, let's take a look at the first line from the 4 X 4 Mult test set in data/4_by_4_mult/test_bigbench.txt:

9 1 7 3 * 9 4 3 3||1 7 4 3 3 + 0 6 7 8 4 1 ( 1 3 2 2 8 1 ) + 0 0 7 5 1 1 1 ( 1 3 9 7 9 2 1 ) + 0 0 0 7 5 1 1 1 #### 1 3 9 4 5 4 2 1

In this example, the input is 9 1 7 3 * 9 4 3 3 (corresponding to 3719*3349, since digits are written in reverse order, least significant first), the chain-of-thought is 1 7 4 3 3 + 0 6 7 8 4 1 ( 1 3 2 2 8 1 ) + 0 0 7 5 1 1 1 ( 1 3 9 7 9 2 1 ) + 0 0 0 7 5 1 1 1, and the output is 1 3 9 4 5 4 2 1 (corresponding to 12454931).
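
The arithmetic in this example can be checked directly. The sketch below uses a hypothetical helper, `decode`, to read space-separated digits written least significant first. It then confirms that the chain-of-thought terms outside parentheses are the shifted partial products and that the parenthesized terms are running sums. This reading is inferred from the example line itself, not from the repository's code.

```python
import re
from itertools import accumulate


def decode(digits: str) -> int:
    """Turn space-separated digits, least significant first, into an int."""
    return int("".join(reversed(digits.split())))


line_input = "9 1 7 3 * 9 4 3 3"
line_cot = ("1 7 4 3 3 + 0 6 7 8 4 1 ( 1 3 2 2 8 1 ) + 0 0 7 5 1 1 1 "
            "( 1 3 9 7 9 2 1 ) + 0 0 0 7 5 1 1 1")
line_output = "1 3 9 4 5 4 2 1"

a, b = (decode(part) for part in line_input.split("*"))

# Parenthesized groups are the running sums of the partial products.
running_sums = [decode(g) for g in re.findall(r"\(([^)]*)\)", line_cot)]
# Removing those groups leaves one partial product per digit of b.
partials = [decode(t) for t in re.sub(r"\([^)]*\)", "", line_cot).split("+")]

print(a, b)          # 3719 3349
print(partials)      # [33471, 148760, 1115700, 11157000]
print(running_sums)  # [182231, 1297931]

# Intermediate cumulative sums match the parenthesized terms.
assert list(accumulate(partials))[1:-1] == running_sums
# The partial products add up to the product, which equals the output field.
assert sum(partials) == a * b == decode(line_output)
```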

Note that the chain-of-thought steps are used for Teacher Training, (a) Mind-Reading the Teacher, and (b) Thought Emulation, but not for (c) Couple and Optimize.

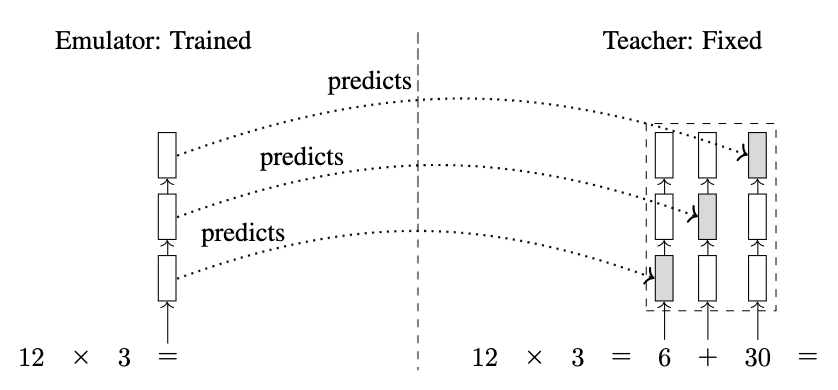

Our approach is based on distilling a teacher model's horizontal reasoning process into the vertical reasoning process of the emulator and the student. Therefore, we first need to train a teacher on the task of explicit chain-of-thought reasoning.

    # Teacher training (explicit chain-of-thought)
    export FOLDER=data/4_by_4_mult
    export MODEL=gpt2
    export EPOCHS=1
    export LR=5e-5
    export BSZ=32
    export SAVE=train_models/4_by_4_mult/gpt2/teacher
    echo $SAVE
    mkdir -p $SAVE
    TOKENIZERS_PARALLELISM=false CUDA_VISIBLE_DEVICES=0 stdbuf -oL -eL python src/train_teacher.py \
        --train_path ${FOLDER}/train.txt \
        --val_path ${FOLDER}/valid.txt \
        --epochs $EPOCHS \
        --lr $LR \
        --batch_size $BSZ \
        --base_model $MODEL \
        --save_model $SAVE \
        > ${SAVE}/log.train 2>&1&

    # (a) Mind-Reading the Teacher
    export FOLDER=data/4_by_4_mult
    export DELTA=dynamic
    export MODEL=gpt2
    export EPOCHS=40
    export LR=5e-5
    export BSZ=32
    export TEACHER=train_models/4_by_4_mult/gpt2/teacher/checkpoint_0
    export SAVE=train_models/4_by_4_mult/gpt2/student_initial
    mkdir -p $SAVE
    TOKENIZERS_PARALLELISM=false CUDA_VISIBLE_DEVICES=0 stdbuf -oL -eL python src/train_mind_reading_student.py \
        --train_path ${FOLDER}/train.txt \
        --val_path ${FOLDER}/valid.txt \
        --epochs $EPOCHS \
        --lr $LR \
        --batch_size $BSZ \
        --base_model $MODEL \
        --teacher $TEACHER \
        --save_model $SAVE \
        --delta $DELTA \
        > ${SAVE}/log.train 2>&1&

    # (b) Thought Emulation
    export FOLDER=data/4_by_4_mult
    export DELTA=dynamic
    export MODEL=gpt2
    export EPOCHS=40
    export LR=5e-5
    export BSZ=32
    export MIXTURE_SIZE=1
    export TEACHER=train_models/4_by_4_mult/gpt2/teacher/checkpoint_0
    export SAVE=train_models/4_by_4_mult/gpt2/emulator_initial
    mkdir -p $SAVE
    TOKENIZERS_PARALLELISM=false CUDA_VISIBLE_DEVICES=0 stdbuf -oL -eL python src/train_thought_emulator.py \
        --train_path ${FOLDER}/train.txt \
        --val_path ${FOLDER}/valid.txt \
        --epochs $EPOCHS \
        --lr $LR \
        --batch_size $BSZ \
        --base_model $MODEL \
        --teacher $TEACHER \
        --save_model $SAVE \
        --delta $DELTA \
        --mixture_size ${MIXTURE_SIZE} \
        > ${SAVE}/log.train 2>&1&

    # (c) Couple and Optimize
    export FOLDER=data/4_by_4_mult
    export EPOCHS=40
    export LR=5e-5
    export BSZ=32
    export STUDENT=train_models/4_by_4_mult/gpt2/student_initial/checkpoint_6
    export EMULATOR=train_models/4_by_4_mult/gpt2/emulator_initial/checkpoint_5
    export SAVE=train_models/4_by_4_mult/gpt2/
    mkdir -p $SAVE
    TOKENIZERS_PARALLELISM=false CUDA_VISIBLE_DEVICES=0 stdbuf -oL -eL python src/train_coupled_emulator_and_student.py \
        --train_path ${FOLDER}/train.txt \
        --val_path ${FOLDER}/valid.txt \
        --epochs $EPOCHS \
        --lr $LR \
        --batch_size $BSZ \
        --student $STUDENT \
        --emulator $EMULATOR \
        --save_model $SAVE \
        > ${SAVE}/log.train 2>&1&
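
The coupled-training command above starts from numbered checkpoint directories (checkpoint_6 for the student, checkpoint_5 for the emulator). The sketch below lists the checkpoints that a training run has saved. It assumes each checkpoint is a subdirectory named checkpoint_<N> under the save folder, as the paths above suggest. The helper name `list_checkpoints` is hypothetical and not part of the repository.

```python
import re
from pathlib import Path


def list_checkpoints(save_dir: str) -> list[Path]:
    """Return the checkpoint_<N> subdirectories of save_dir, ordered by N.

    Assumes the checkpoint_<N> naming seen in the commands above.
    """
    root = Path(save_dir)
    if not root.is_dir():
        return []
    pattern = re.compile(r"checkpoint_(\d+)")
    found = []
    for child in root.iterdir():
        match = pattern.fullmatch(child.name)
        if match and child.is_dir():
            found.append((int(match.group(1)), child))
    # Sort numerically so that checkpoint_10 comes after checkpoint_2.
    return [path for _, path in sorted(found, key=lambda item: item[0])]


if __name__ == "__main__":
    for path in list_checkpoints("train_models/4_by_4_mult/gpt2/student_initial"):
        print(path)
```

Which checkpoint to pass as STUDENT or EMULATOR is still a manual choice; this sketch only shows what is available.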

Here we use a pretrained model as an example. Download the folder models/4_by_4_mult/gpt2; the following command then runs inference and evaluates both accuracy and throughput, writing the log to generation_logs/4_by_4_mult/log.generate.

    # Generation and evaluation
    export FOLDER=data/4_by_4_mult
    export STUDENT=models/4_by_4_mult/gpt2/student
    export EMULATOR=models/4_by_4_mult/gpt2/emulator
    export BSZ=1
    export SAVE=generation_logs/4_by_4_mult
    mkdir -p $SAVE
    TOKENIZERS_PARALLELISM=false CUDA_VISIBLE_DEVICES=0 stdbuf -oL -eL python src/generate.py \
        --batch_size $BSZ \
        --test_path ${FOLDER}/test_bigbench.txt \
        --student_path $STUDENT \
        --emulator_path $EMULATOR \
        > ${SAVE}/log.generate 2>&1&
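
As a rough illustration of the two reported metrics, the sketch below computes them under stated assumptions: accuracy is taken to be the exact-match rate on the final output, and throughput the number of examples processed per second. The definitions used by src/generate.py are not given in this document, and `predict` is a hypothetical placeholder for model inference.

```python
import time
from typing import Callable, Iterable, Tuple


def evaluate(examples: Iterable[Tuple[str, str]],
             predict: Callable[[str], str]) -> Tuple[float, float]:
    """Return (exact-match accuracy, examples per second).

    `examples` yields (input, expected output) pairs; `predict` is a
    stand-in for the model and is an assumption of this sketch.
    """
    correct = 0
    total = 0
    start = time.perf_counter()
    for source, target in examples:
        # Compare token sequences so that spacing differences do not matter.
        if predict(source).split() == target.split():
            correct += 1
        total += 1
    elapsed = time.perf_counter() - start
    accuracy = correct / total if total else 0.0
    throughput = total / elapsed if elapsed > 0 else float("inf")
    return accuracy, throughput


# Toy usage with a lookup table in place of a real model.
toy_answers = {"q1": "1 2", "q2": "9"}
accuracy, throughput = evaluate(
    [("q1", "1 2"), ("q2", "3")],
    lambda source: toy_answers[source],
)
print(f"accuracy={accuracy:.2f}")
```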

    @misc{deng2023implicit,
        title={Implicit Chain of Thought Reasoning via Knowledge Distillation},
        author={Yuntian Deng and Kiran Prasad and Roland Fernandez and Paul Smolensky and Vishrav Chaudhary and Stuart Shieber},
        year={2023},
        eprint={2311.01460},
        archivePrefix={arXiv},
        primaryClass={cs.CL}
    }