This repository contains code to reproduce our paper on markup-to-image generation, *Markup-to-Image Diffusion Models with Scheduled Sampling* (ICLR 2023). The code is built on top of Hugging Face `diffusers` and `transformers`.

Online demo: https://huggingface.co/spaces/yuntian-deng/latex2im

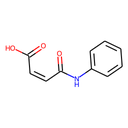

[Figure: example renderings in three columns: Scheduled Sampling, Baseline, Ground Truth]

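Install the dependencies. Note that `diffusers` is installed from a fork (`da03/diffusers`) rather than from PyPI:

```bash
pip install transformers
pip install datasets
pip install accelerate
pip install -qU git+https://github.com/da03/diffusers
```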
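Before training, a quick stdlib-only check can confirm that the packages above are importable. This is a minimal sketch, not part of the repository, and the helper name `missing_modules` is hypothetical. It only checks that each top-level module can be found; it cannot tell whether `diffusers` came from the `da03/diffusers` fork or from PyPI.

```python
import importlib.util


def missing_modules(names):
    """Return the module names from `names` that cannot be found.

    importlib.util.find_spec locates a top-level module without executing
    it, so this check does not pay the cost of importing large libraries.
    """
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Top-level import names of the packages installed above.
    required = ["transformers", "datasets", "accelerate", "diffusers"]
    missing = missing_modules(required)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are importable.")
```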

All datasets have been uploaded to Hugging Face Datasets.

To train the diffusion model on the math dataset:

```bash
python src/train.py --save_dir models/math
```

To train the diffusion model on the tables dataset:

```bash
python src/train.py --dataset_name yuntian-deng/im2html-100k --save_dir models/tables
```

To train the diffusion model on the music dataset: in our paper, we trained on this dataset with 4 A100 GPUs. If you train on a single GPU, or on GPUs with less memory, you may need to adjust `--batch_size` and `--gradient_accumulation_steps`.
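When moving from 4 GPUs to fewer (or smaller) GPUs, one common way to choose new values is to keep the effective batch size, per-device batch × number of GPUs × accumulation steps, unchanged. The sketch below assumes `--batch_size` is the per-device batch size, which is the usual convention with `accelerate` but is not stated here; the function name and the example numbers are hypothetical, not the paper's settings.

```python
def scaled_accumulation_steps(ref_batch, ref_gpus, ref_accum, new_batch, new_gpus):
    """Accumulation steps that keep the effective batch size unchanged.

    Effective batch size = per-device batch * number of GPUs * accumulation steps.
    Raises ValueError if the new setup cannot match it exactly.
    """
    effective = ref_batch * ref_gpus * ref_accum
    per_step = new_batch * new_gpus
    if effective % per_step != 0:
        raise ValueError(
            f"effective batch {effective} is not divisible by {per_step}; "
            "choose a different per-device batch size"
        )
    return effective // per_step


# Hypothetical example: 4 GPUs x batch 16 x 1 step -> 1 GPU with batch 8.
print(scaled_accumulation_steps(16, 4, 1, new_batch=8, new_gpus=1))  # 8
```

Keeping the effective batch size fixed is a reasonable starting point, but it is not guaranteed to reproduce the 4-GPU results exactly.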

We first run

```bash
accelerate config
```

and configure it to use 4 GPUs on a single machine. Note that we did not use fp16 or DeepSpeed.

Next, we launch multi-GPU training using `accelerate`:

```bash
accelerate launch src/train.py --dataset_name yuntian-deng/im2ly-35k-syn --save_dir models/music
```

To train the diffusion model on the molecules dataset:

```bash
python src/train.py --dataset_name yuntian-deng/im2smiles-20k --save_dir models/molecules
```
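The four training commands differ only in the launcher, `--dataset_name`, and `--save_dir`. The math command omits `--dataset_name`, so it presumably relies on the script's default dataset. If you script your runs, a hypothetical helper like the one below rebuilds those argument lists; `TRAIN_DATASETS` and `train_command` are illustrative names, not part of the repository.

```python
import shlex

# Dataset names copied from the commands above; None means "use the default"
# (the math command passes no --dataset_name).
TRAIN_DATASETS = {
    "math": None,
    "tables": "yuntian-deng/im2html-100k",
    "music": "yuntian-deng/im2ly-35k-syn",
    "molecules": "yuntian-deng/im2smiles-20k",
}


def train_command(domain):
    """Return the argv list for training on `domain`, mirroring the README."""
    dataset = TRAIN_DATASETS[domain]
    # Music was trained on 4 GPUs via `accelerate launch`; the others use python.
    launcher = ["accelerate", "launch"] if domain == "music" else ["python"]
    cmd = launcher + ["src/train.py"]
    if dataset is not None:
        cmd += ["--dataset_name", dataset]
    cmd += ["--save_dir", f"models/{domain}"]
    return cmd


if __name__ == "__main__":
    # Print the shell form of each command without running anything.
    for domain in TRAIN_DATASETS:
        print(shlex.join(train_command(domain)))
```

To actually start a run from the repository root, pass the list to `subprocess.run(train_command("tables"), check=True)`.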

To generate images with the math model trained with scheduled sampling:

```bash
python scripts/generate.py --model_path models/math/scheduled_sampling/model_e100_lr0.0001.pt.100 --output_dir outputs/math --save_intermediate_every -1
```

To generate images with the tables model trained with scheduled sampling:

```bash
python scripts/generate.py --dataset_name yuntian-deng/im2html-100k --model_path models/tables/scheduled_sampling/model_e100_lr0.0001.pt.100 --output_dir outputs/tables --save_intermediate_every -1
```

To generate images with the music model trained with scheduled sampling:

```bash
python scripts/generate.py --dataset_name yuntian-deng/im2ly-35k-syn --model_path models/music/scheduled_sampling/model_e100_lr0.0001.pt.100 --output_dir outputs/music --save_intermediate_every -1
```

To generate images with the molecules model trained with scheduled sampling:

```bash
python scripts/generate.py --dataset_name yuntian-deng/im2smiles-20k --model_path models/molecules/scheduled_sampling/model_e100_lr0.0001.pt.100 --output_dir outputs/molecules --save_intermediate_every -1
```
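All generation commands share the checkpoint path pattern `models/<domain>/<method>/model_e100_lr0.0001.pt.100`, and the visualization commands in this README differ from them only in the output directory and two flags. The hypothetical helper below reconstructs both variants; `GEN_DATASETS` and `generate_command` are illustrative names, and the checkpoint filename is copied verbatim from the commands above. The README shows full (non-visualization) generation only for scheduled sampling, so the `outputs/<domain>/<method>` directory used for other methods is our own choice to avoid overwriting results.

```python
import shlex

# Dataset names copied from the generation commands; math uses the default.
GEN_DATASETS = {
    "math": None,
    "tables": "yuntian-deng/im2html-100k",
    "music": "yuntian-deng/im2ly-35k-syn",
    "molecules": "yuntian-deng/im2smiles-20k",
}
CHECKPOINT = "model_e100_lr0.0001.pt.100"  # filename used throughout this README


def generate_command(domain, method="scheduled_sampling", visualize=False):
    """Return argv for scripts/generate.py.

    method: "scheduled_sampling" or "baseline".
    visualize=True saves every intermediate image for a single batch.
    """
    cmd = ["python", "scripts/generate.py"]
    dataset = GEN_DATASETS[domain]
    if dataset is not None:
        cmd += ["--dataset_name", dataset]
    cmd += ["--model_path", f"models/{domain}/{method}/{CHECKPOINT}"]
    if visualize:
        out = f"outputs/{domain}/{method}_visualization"
        cmd += ["--output_dir", out, "--save_intermediate_every", "1", "--num_batches", "1"]
    else:
        # Hypothetical: suffix non-default methods so their outputs do not collide.
        out = f"outputs/{domain}" if method == "scheduled_sampling" else f"outputs/{domain}/{method}"
        cmd += ["--output_dir", out, "--save_intermediate_every", "-1"]
    return cmd


if __name__ == "__main__":
    print(shlex.join(generate_command("tables")))
    print(shlex.join(generate_command("math", method="baseline", visualize=True)))
```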

To visualize the generation process on the math dataset, we first save the intermediate images during generation:

```bash
python scripts/generate.py --model_path models/math/scheduled_sampling/model_e100_lr0.0001.pt.100 --output_dir outputs/math/scheduled_sampling_visualization --save_intermediate_every 1 --num_batches 1
```

Next, we assemble the saved intermediate images into a GIF:

```bash
python scripts/make_gif.py --input_dir outputs/math/scheduled_sampling_visualization/ --output_filename imgs/math_rendering.gif --select_filename 433d71b530.png --show_every 10
```
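`scripts/make_gif.py` turns one example's intermediate images into an animation. The sketch below shows one way such a step could be written with Pillow; it is not the repository's script. It assumes the frames for the selected example are already available as an ordered list of image paths (the directory layout written by `generate.py` is not described here) and interprets `--show_every 10` as keeping every 10th frame plus the final one. `select_frames` and `write_gif` are hypothetical names, and Pillow must be installed separately.

```python
def select_frames(frames, show_every):
    """Keep every `show_every`-th frame, always ending on the last frame."""
    if show_every < 1:
        raise ValueError("show_every must be >= 1")
    kept = list(frames[::show_every])
    # Append the final frame if the stride skipped it.
    if frames and (len(frames) - 1) % show_every != 0:
        kept.append(frames[-1])
    return kept


def write_gif(frame_paths, output_filename, show_every=10, duration_ms=100):
    """Write an animated GIF from image files using Pillow."""
    from PIL import Image  # imported lazily so select_frames works without Pillow

    selected = select_frames(frame_paths, show_every)
    if not selected:
        raise ValueError("no frames to write")
    images = [Image.open(p).convert("RGB") for p in selected]
    images[0].save(
        output_filename,
        save_all=True,             # write a multi-frame file
        append_images=images[1:],  # remaining frames, in order
        duration=duration_ms,      # display time per frame, in milliseconds
        loop=0,                    # loop forever
    )
```

Sorting file names lexicographically only yields step order when step numbers are zero-padded, so build the frame list from whatever naming `generate.py` actually uses.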

We can visualize the baseline's generation process in the same way:

```bash
python scripts/generate.py --model_path models/math/baseline/model_e100_lr0.0001.pt.100 --output_dir outputs/math/baseline_visualization --save_intermediate_every 1 --num_batches 1

python scripts/make_gif.py --input_dir outputs/math/baseline_visualization/ --output_filename imgs/math_rendering_baseline.gif --select_filename 433d71b530.png --show_every 10
```

To visualize the generation process on the tables dataset, we first save the intermediate images during generation:

```bash
python scripts/generate.py --dataset_name yuntian-deng/im2html-100k --model_path models/tables/scheduled_sampling/model_e100_lr0.0001.pt.100 --output_dir outputs/tables/scheduled_sampling_visualization --save_intermediate_every 1 --num_batches 1
```

Next, we assemble the saved intermediate images into a GIF:

```bash
python scripts/make_gif.py --input_dir outputs/tables/scheduled_sampling_visualization/ --output_filename imgs/tables_rendering.gif --select_filename 42725-full.png --show_every 10
```

We can visualize the baseline's generation process in the same way:

```bash
python scripts/generate.py --dataset_name yuntian-deng/im2html-100k --model_path models/tables/baseline/model_e100_lr0.0001.pt.100 --output_dir outputs/tables/baseline_visualization --save_intermediate_every 1 --num_batches 1

python scripts/make_gif.py --input_dir outputs/tables/baseline_visualization/ --output_filename imgs/tables_rendering_baseline.gif --select_filename 42725-full.png --show_every 10
```

To visualize the generation process on the music dataset, we first save the intermediate images during generation:

```bash
python scripts/generate.py --dataset_name yuntian-deng/im2ly-35k-syn --model_path models/music/scheduled_sampling/model_e100_lr0.0001.pt.100 --output_dir outputs/music/scheduled_sampling_visualization --save_intermediate_every 1 --num_batches 1
```

Next, we assemble the saved intermediate images into a GIF:

```bash
python scripts/make_gif.py --input_dir outputs/music/scheduled_sampling_visualization/ --output_filename imgs/music_rendering.gif --select_filename comp.17342.png --show_every 10
```

We can visualize the baseline's generation process in the same way:

```bash
python scripts/generate.py --dataset_name yuntian-deng/im2ly-35k-syn --model_path models/music/baseline/model_e100_lr0.0001.pt.100 --output_dir outputs/music/baseline_visualization --save_intermediate_every 1 --num_batches 1

python scripts/make_gif.py --input_dir outputs/music/baseline_visualization/ --output_filename imgs/music_rendering_baseline.gif --select_filename comp.17342.png --show_every 10
```

To visualize the generation process on the molecules dataset, we first save the intermediate images during generation:

```bash
python scripts/generate.py --dataset_name yuntian-deng/im2smiles-20k --model_path models/molecules/scheduled_sampling/model_e100_lr0.0001.pt.100 --output_dir outputs/molecules/scheduled_sampling_visualization --save_intermediate_every 1 --num_batches 1
```

Next, we assemble the saved intermediate images into a GIF:

```bash
python scripts/make_gif.py --input_dir outputs/molecules/scheduled_sampling_visualization/ --output_filename imgs/molecules_rendering.gif --select_filename B-1173.png --show_every 10
```

We can visualize the baseline's generation process in the same way:

```bash
python scripts/generate.py --dataset_name yuntian-deng/im2smiles-20k --model_path models/molecules/baseline/model_e100_lr0.0001.pt.100 --output_dir outputs/molecules/baseline_visualization --save_intermediate_every 1 --num_batches 1

python scripts/make_gif.py --input_dir outputs/molecules/baseline_visualization/ --output_filename imgs/molecules_rendering_baseline.gif --select_filename B-1173.png --show_every 10
```
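The four visualization recipes follow the same pattern: only the domain, the method, and the example passed to `--select_filename` change. A hypothetical helper that rebuilds the `make_gif.py` step for any domain and method is sketched below; `SELECTED_EXAMPLES` and `gif_command` are illustrative names, and the example filenames are copied from the commands above.

```python
import shlex

# Example image shown in each domain's GIF (copied from the commands above).
SELECTED_EXAMPLES = {
    "math": "433d71b530.png",
    "tables": "42725-full.png",
    "music": "comp.17342.png",
    "molecules": "B-1173.png",
}


def gif_command(domain, method="scheduled_sampling", show_every=10):
    """Return argv for scripts/make_gif.py for one domain and method."""
    # Scheduled-sampling GIFs have no suffix; e.g. baseline -> *_rendering_baseline.gif
    suffix = "" if method == "scheduled_sampling" else f"_{method}"
    return [
        "python", "scripts/make_gif.py",
        "--input_dir", f"outputs/{domain}/{method}_visualization/",
        "--output_filename", f"imgs/{domain}_rendering{suffix}.gif",
        "--select_filename", SELECTED_EXAMPLES[domain],
        "--show_every", str(show_every),
    ]


if __name__ == "__main__":
    # Print every make_gif.py command from this README.
    for domain in SELECTED_EXAMPLES:
        for method in ("scheduled_sampling", "baseline"):
            print(shlex.join(gif_command(domain, method)))
```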

```bibtex
@inproceedings{deng2023markuptoimage,
  title={Markup-to-Image Diffusion Models with Scheduled Sampling},
  author={Yuntian Deng and Noriyuki Kojima and Alexander M Rush},
  booktitle={The Eleventh International Conference on Learning Representations},
  year={2023},
  url={https://openreview.net/forum?id=81VJDmOE2ol}
}
```