Data-Free Network Quantization With Adversarial Knowledge Distillation PyTorch (Reproduced)

- PyTorch 1.4.0

- Python 3.6

- Torchvision 0.5.0

- tensorboard

- tensorboardX

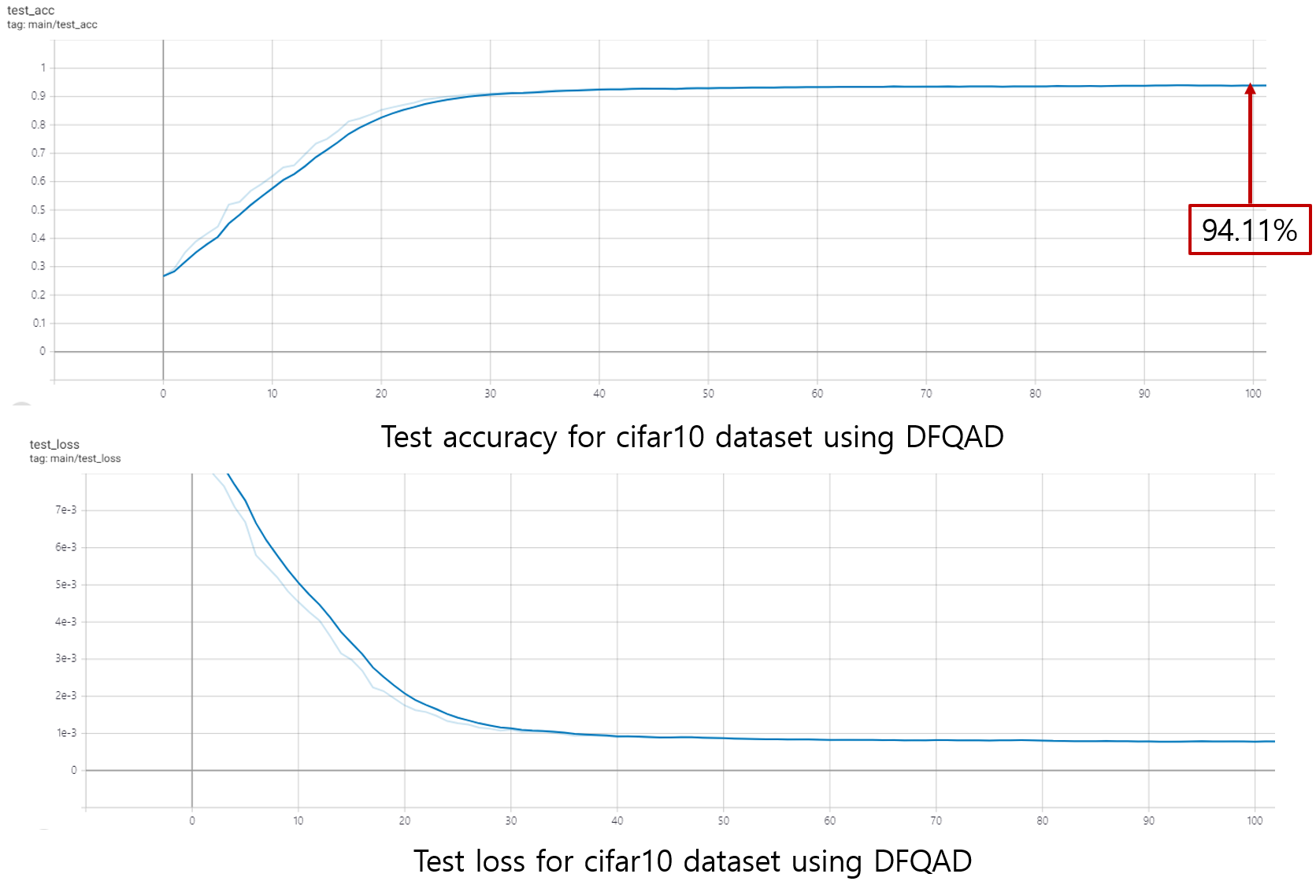

CUDA_VISIBLE_DEVICES=0 python main.py --dataset=cifar10 --alpha=0.01 --do_warmup=True --do_Ttrain=True

The generated images and the student network trained by knowledge distillation will be saved in the ./outputs folder (default).

If you have already trained the teacher network, set the "do_Ttrain" argument to False, as shown below:

CUDA_VISIBLE_DEVICES=0 python main.py --dataset=cifar10 --alpha=0.01 --do_warmup=True --do_Ttrain=False

Arguments:

- dataset: dataset name, one of [cifar10, cifar100]

- data: dataset path

- teacher_dir: save path for the teacher

- n_epochs: number of epochs

- iter: number of iterations

- batch_size: size of the batches

- lr_G: learning rate for the generator

- lr_S: learning rate for the student

- alpha: alpha value

- latent_dim: dimensionality of the latent space

- img_size: size of each image dimension

- channels: number of image channels

- saved_img_path: save path for generated images

- saved_model_path: save path for the trained student

- do_warmup: whether to do warm-up

- do_Ttrain: whether to train the teacher network
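The boolean flags above are passed on the command line as the strings True and False. The following is a minimal sketch of how such flags could be parsed with argparse; it is not taken from main.py. The helper `str2bool` and the function `build_parser` are hypothetical names, only four of the arguments are covered, and the defaults are placeholders copied from the example command rather than confirmed values.

```python
import argparse


def str2bool(value):
    """Convert command-line strings such as 'True' or 'False' to a bool."""
    if isinstance(value, bool):
        return value
    lowered = value.lower()
    if lowered in ("true", "t", "yes", "y", "1"):
        return True
    if lowered in ("false", "f", "no", "n", "0"):
        return False
    raise argparse.ArgumentTypeError("expected a boolean, got %r" % value)


def build_parser():
    # Hypothetical parser covering only four of the arguments listed above.
    # The defaults are placeholders copied from the example command, not
    # values confirmed from main.py.
    parser = argparse.ArgumentParser(description="Sketch of the README arguments")
    parser.add_argument("--dataset", type=str, default="cifar10",
                        choices=["cifar10", "cifar100"], help="dataset name")
    parser.add_argument("--alpha", type=float, default=0.01, help="alpha value")
    parser.add_argument("--do_warmup", type=str2bool, default=True,
                        help="whether to do warm-up")
    parser.add_argument("--do_Ttrain", type=str2bool, default=True,
                        help="whether to train the teacher network")
    return parser


# Parse the flags from the second example command (teacher already trained).
args = build_parser().parse_args(
    ["--dataset=cifar10", "--alpha=0.01", "--do_warmup=True", "--do_Ttrain=False"]
)
print(args.dataset, args.alpha, args.do_warmup, args.do_Ttrain)
```

An explicit converter is used here because argparse with `type=bool` treats any non-empty string, including "False", as True, so `--do_Ttrain=False` would otherwise still enable teacher training.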

Choi, Yoojin, et al. "Data-free network quantization with adversarial knowledge distillation." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. 2020.