This is the open-source code of Queryable, an iOS app that uses the CLIP model to run offline searches over the Photos album.

Unlike the object recognition-based search built into the iOS gallery, Queryable lets you search your album with natural-language descriptions such as "a brown dog sitting on a bench". It runs entirely offline, so your photos are never exposed to anyone, including Apple or Google.

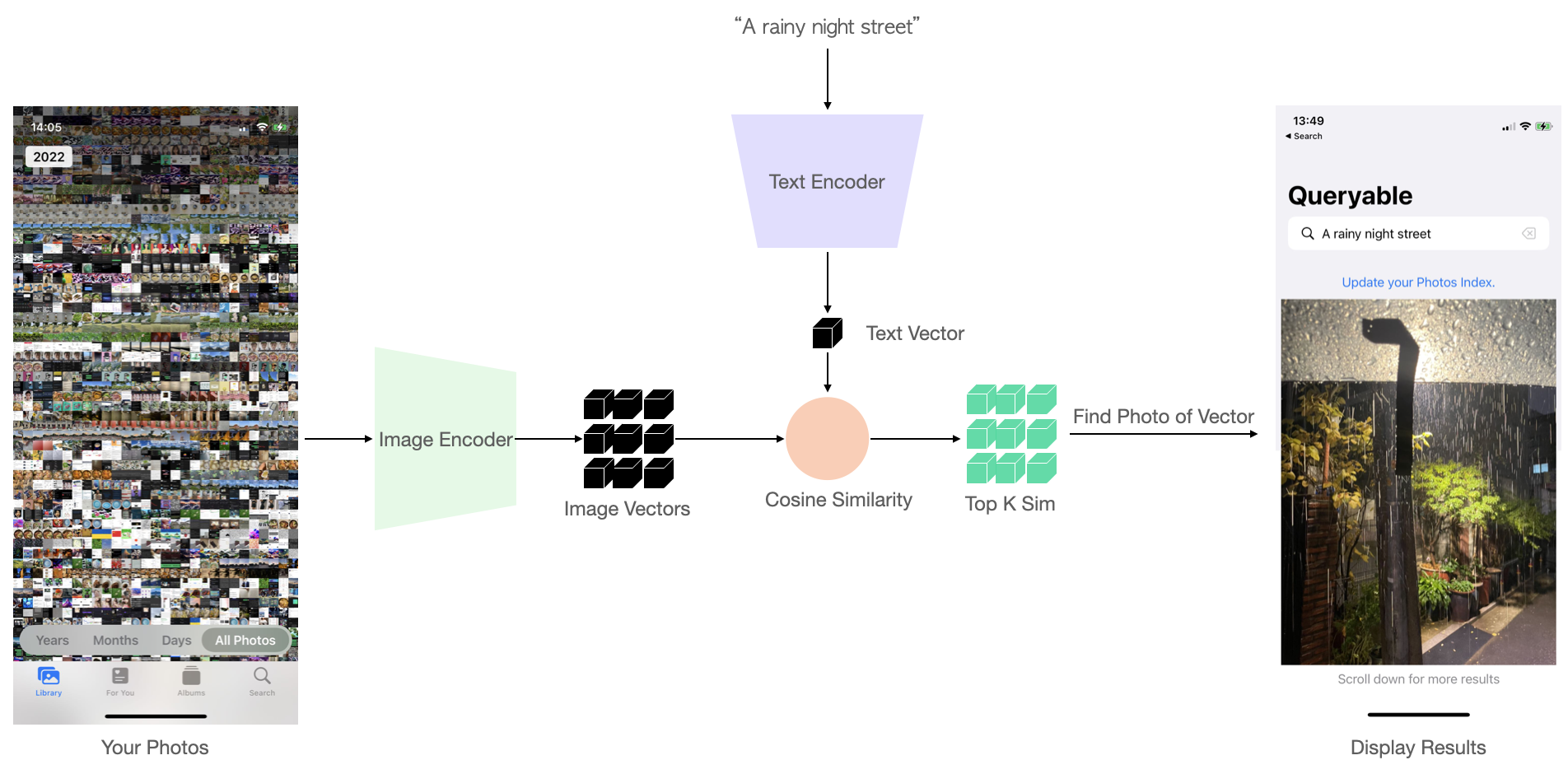

The process is as follows:

- Run every photo in your album through the CLIP Image Encoder to build a set of image vectors stored locally.

- When a new text query is entered, convert it into a text vector using the Text Encoder.

- Compare the text vector with every stored image vector to measure how similar the query is to each image.

- Sort the results and return the top K most similar images.

For more details, please refer to my article Run CLIP on iPhone to Search Photos.
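
As a rough illustration of the compare-and-rank steps described above, here is a minimal Swift sketch. It does not call the Core ML encoders and is not taken from the Queryable source: the `IndexedPhoto` type, the `cosineSimilarity` and `topK` helpers, and the toy 3-dimensional vectors are all hypothetical. It also assumes cosine similarity as the similarity measure, which is the usual choice for CLIP embeddings but is not stated here.

```swift
/// Hypothetical container for one indexed photo: an identifier plus its embedding.
struct IndexedPhoto {
    let id: String
    let embedding: [Float]
}

/// Cosine similarity between two vectors of equal length; returns 0 if either is all zeros.
func cosineSimilarity(_ a: [Float], _ b: [Float]) -> Float {
    precondition(a.count == b.count, "vectors must have the same dimension")
    var dot: Float = 0
    var normA: Float = 0
    var normB: Float = 0
    for i in 0..<a.count {
        dot += a[i] * b[i]
        normA += a[i] * a[i]
        normB += b[i] * b[i]
    }
    let denom = normA.squareRoot() * normB.squareRoot()
    return denom > 0 ? dot / denom : 0
}

/// Scores every photo against the query vector and returns the k best matches, highest first.
func topK(query: [Float], photos: [IndexedPhoto], k: Int) -> [(id: String, score: Float)] {
    let scored = photos.map { (id: $0.id, score: cosineSimilarity(query, $0.embedding)) }
    return Array(scored.sorted { $0.score > $1.score }.prefix(max(0, k)))
}

// Toy vectors standing in for real CLIP image embeddings (assumption for illustration).
let photos = [
    IndexedPhoto(id: "dog.jpg", embedding: [0.9, 0.1, 0.0]),
    IndexedPhoto(id: "cat.jpg", embedding: [0.1, 0.9, 0.0]),
    IndexedPhoto(id: "car.jpg", embedding: [0.0, 0.1, 0.9]),
]

// Stand-in for the Text Encoder output for some query.
let queryVector: [Float] = [1.0, 0.2, 0.0]

let results = topK(query: queryVector, photos: photos, k: 2)
for match in results {
    print(match.id, match.score)
}
```

In a real app the embeddings would come from the image and text encoders, and the linear scan could be swapped for a vectorized dot product if the album is large. For a few tens of thousands of photos a plain loop like this is often fast enough.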

Download the ImageEncoder_float32.mlmodelc and TextEncoder_float32.mlmodelc from Google Drive.

Clone this repo, place the downloaded models under the CoreMLModels/ directory, then open the project in Xcode and run it. It should work.

You can use Queryable in your own business product, but I don't recommend simply changing its appearance and then listing it on the App Store. You can contribute to this product by submitting commits to this repo. PRs are welcome.

If you have any questions/suggestions, here are some contact methods: Discord | Twitter | Reddit: r/Queryable.

MIT License

Copyright (c) 2023 Ke Fang