#Summary

An Azure Data Lake Store is a flexible, scalable repository for any type of data. It provides unlimited storage with high frequency, low latency throughput capabilities and provides immediate read and analysis capabilities over your data. Once data is captured in the Data Lake, advanced transformation and processing of the data can be performed using Microsoft's extendable and scalable U-SQL language, integrated with Azure Data Lake Analytics, Azure Machine Learning, or any HDFS compliant project, such as Hive running in HD Insight cluster.

Some of the principal benefits of an Azure Data Lake Store include:

-

Unlimited storage space

-

High-throughput read/write

-

Security through integration with Active Directory

-

Automatic data replication

-

Compatibility with the Hadoop Distributed File System (HDFS).

-

Compatibility with HDFS compliant project (e.g. Hive, HBase, Storm, etc.)

The objective of this tutorial is to demonstrate techniques for the movement of data between an external data source, an Azure Data Lake Store and Azure SQL Data Warehouse while demonstrating using U-SQL for processing information in a Data Lake Store and perform advanced analytics through Azure Machine Learning (AML).

This tutorial will be developed in reference to a use case described in the following section.

#Use Case

Switch based telephone companies, both land line and cellular, produce very large volumes of information, principally in the form of call detail records. Each telecom switch records information on the calling and called numbers, incoming and outgoing trunks, and information of the time of the call along with a number of other features.

The duration that telephone companies keep their data has varied between land line and cellular companies from one to many years. Various legislation (e.g. the USA Freedom Act) is being considered that will require telecommunication companies to hold the data for a longer period of time. The amount of data can be extremely large. If you consider a modestly sized telecom carrier with 10M customers can readily produce over 1 billion description messages per day, including call detail records (CDR) at a size close to 1 TB per day. In shortly over ½ of a year this amount of data could begin to surpass the maximum capacity of an Azure Storage blob per storage account per subscription (500TB).

In scenarios such as this, the integrated SQL and C# capabilities of U-SQL, the unlimited data storage capacity, and the ability for high velocity data ingestion of the Azure Data Lake Store makes it an ideal technical solution for the persistence and management of telephony call data.

Telecommunication network optimization techniques can hugely benefit from getting switch overload or malfunction predictions ahead of time. Such predictions help maintain SLA and overall network health by allowing for mitigating actions to be taken proactively, such as possibly rerouting calls and avoid call drops and perhaps an eventual switch shutdown. Microsoft’s capability to manage unlimited volumes of data within an Azure Data Lake Store combined with the powerful means for interacting with the Data Lake Store through U-SQL and the predictive modeling capabilities of Azure Machine Learning (AML) readily address all of the challenges with storage compliance and provide a seamless means for impactful analysis suitable for network optimization and other interaction..

The intent of this tutorial is to provide the engineering steps necessary to capture and reproduce completely the scenario described above.

The tutorial will include:

- The generation and ingestion of CDR Data using an Azure Event Hub and Azure Streaming Analytics.

- The creation of an Azure Data Lake Store (ADLS) to meet long term CDR management requirements.

- Using Azure Data Lake Analytics (ADLA) and Microsoft’s U-SQL to interact with the Data Lake. The ADLA U-SQL job generate aggregate view over the ingested CDR data that stored in ADLS.

- Creation and integration of staging store for storing analytics results from U-SQL and predictions from Azure Machine Learning (AML). This staging store is implemented using Azure SQL Data Warehouse (SQL DW) and provides a backend for Power BI dashboards.

- AML model which predicts the switch overload

The focus of this tutorial is on the architecture, data transformation, and the movement of data between the different storage architectures and the Azure Machine Learning (AML) environment. While this example demonstrates techniques for integrating AML into the solution architecture, the focus is not on machine learning. The machine learning model is used in this tutorial to predict switch overload with time series analysis by using random forest method. Machine learning can be used in telecommunication industry for effective marketing campaign, reducing infrastructure cost and maintenance effort.

#Prerequisites

The steps described later in this tutorial requires the following prerequisites:

-

Azure subscription with login credentials (https://azure.microsoft.com/en-us/)

-

Azure Machine learning Studio subscription (https://azure.microsoft.com/en-us/services/machine-learning/)

-

A Microsoft Power BI account (https://powerbi.microsoft.com/en-us/)

-

Power BI Desktop installation (https://powerbi.microsoft.com/en-us/desktop/?gated=0&number=0)

-

Microsoft Azure Storage Explorer (http://storageexplorer.com/)

-

A local installation of Visual Studio with SQL Server Data Tools (SSDT)

Figure 1 illustrates the Azure architecture developed in this sample.

Call detail record (CDR) data is generated via a data generator which simulates a phone switch and is deployed as an Azure Web Job. The CDR data is sent to an Event Hub. Azure Stream Analytics (ASA) takes in the CDR data flowed through Event hub, processes the data by using ASA SQL and sends the processed data to a) Power BI for real time visualization and b) Azure Data Lake Store for storage. Azure Data Lake Analytics runs a U-SQL job to pre-process the data before sending it to SQL Data Warehouse for Azure Machine Learning to run predictive analytics.

Predictive analytics is done by using the batch endpoint of an experiment published as a web service in the Azure Machine Learning Studio. The AML web service imports call failure number per minute from SQL Data Warehouse and exports the prediction, e.g. the scoring results back to SQL Data Warehouse. We use Azure Data Factory to orchestrate 1) U-SQL job in Azure Data Lake 2) Copy the results of the U-SQL job to SQL Data Warehouse 3) Predictive analytics in AML. The machine learning model here is used as an example experiment. You can use field knowledge and combine the available datasets to build more advanced model to meet your business requirements.

Below are the steps to deploy the use case into your Azure subscription. Note that to condense the steps somewhat, > is used between repeated actions. For example:

- Click: Button A

- Click: Button B

is written as

- Click: Button A > Button B

- Service Bus,

- Event Hub,

- Stream Analytics Job

- SQL Server, SQL Data Warehouse,

- Azure Storage Account

- Azure Data Lake Store Account

- Azure Data Lake Analytics Account

You will need a unique string to identify your deployment. We suggest you use only letters and numbers in this string and the length should not be greater than 9. Please open your memo file and write down "unique:[unique]" with "[unique]" replaced with your actual unique string. To get started, click the below button.

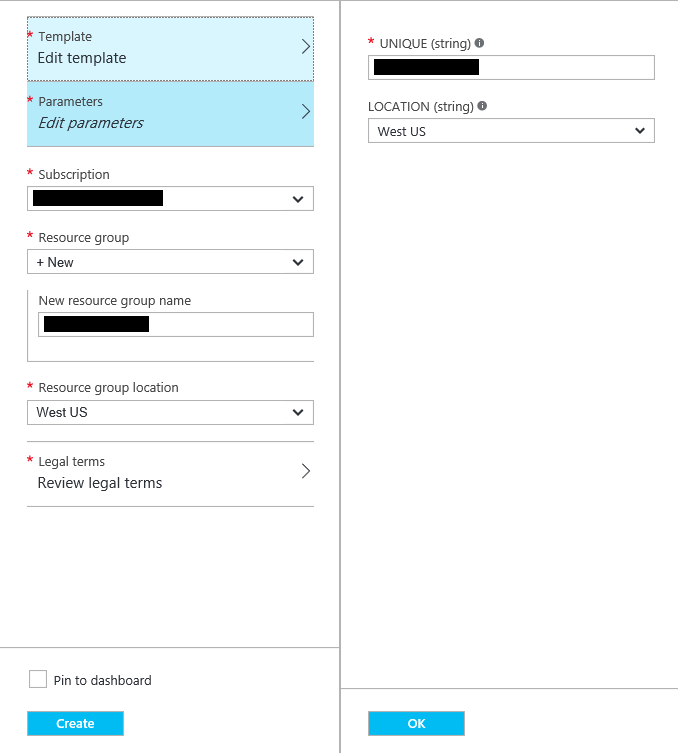

This will create a new "blade" in the Azure portal(https://ms.portal.azure.com).

- Parameters

- Type: UNIQUE (string): [UNIQUE] (You need to select a globally unique string)

- Select: LOCATION: [LOCATION] (The region where everything will be deployed)

- Click: OK

- Select: Subscription: [SUBSCRIPTION] (The Azure subscription you want to use)

- Resource group

- Select: New

- Type: New resource group name: [UNIQUE] (Same as above)

- Select: Resource group location: [LOCATION] (Same as above)

- Click: Review legal terms > Create

- Check: Pin to dashboard (If you want it on your dashboard)

- Click: Create

The resource group will serve as an organizational framework for the associated Azure services.

After deployment, in the Azure Portal https://ms.portal.azure.com , select the “Resource Groups” option from the menu, use the [UNIQUE] to find the resource group you just created and you will find all the resources that have just been deployed. The following table lists some important account information and also information that you need to use in walking through this tutorial. Please note that '[unique]' should be replaced with your own unique string and '[' and ']' should not in your final information.

| Item | Value |

|---|---|

| service bus name space | adlservicebus[unique] |

| event hub name | adleventhub[unique] |

| stream analytic job output power bi | adlstreamanalytics[unique]]powerbi |

| stream analytic job output data lake | adlstreamanalytics[unique]]datalake |

| storage account name | storage[unique] |

| Data Lake Store Account Name | adls[unique] |

| Data Lake Analytic Account Name | adla[unique] |

| SQL Server name | adl-[unique].database.windows.net |

| SQL Server user name | adluser |

| SQL Server user password | pass@word1 |

| SQL Database Name | adlDB |

| Table 1: Resources |

Next you need to create the matching tables in the SQL Data Warehouse. You can do this by following these steps:

- Start Visual Studio. Note that you must have installed the SQL Server Data Tools.

- Select: View: SQL Server Object Explorer

- Right click: SQL Server

- Click: Add SQL Server...

- Type: Server Name: adl-[UNIQUE].database.windows.net

- Select: Authentication: Sql Server Authentication

- Type: User name: adluser

- Type: Password: pass@word1

- Select: Database Name: adlDB

- Click: Connect

- Right click: adllDB

- Select: New Query...

- Copy and paste:

CREATE TABLE [dbo].[SwitchCallInfo] (

[Time] datetime NOT NULL,

[Switch] varchar(100) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,

[CallCount] bigint NOT NULL,

[CallFailure] bigint NOT NULL

)

WITH (CLUSTERED COLUMNSTORE INDEX, DISTRIBUTION = HASH([Time]));

CREATE TABLE [dbo].[ForcastCallFailure] (

[Time] datetime NOT NULL,

[CallFailure] bigint NOT NULL

)

WITH (CLUSTERED COLUMNSTORE INDEX, DISTRIBUTION = HASH([Time]));

- Click: Execute

- Browse: http://gallery.cortanaintelligence.com/Experiment/CDR-Call-Failure-Prediction-Azure-Data-Lake-1 # You will copy this experiment from the gallery

- Click: Open in Studio

- Select: REGION: [REGION] (Up to you)

- Select: WORKSPACE: [WORKSPACE] (Your workspace)

- Click: Import Data

- Type: Database server name: adl-[UNIQUE].database.windows.net

- Type: Password: pass@word1

- Click: Export Data

- Type: Database server name: adl-[UNIQUE].database.windows.net

- Type: Server user account password: pass@word1

- Click: RUN > DEPLOY WEB SERVICE

Browse: https://manage.windowsazure.com

- Click: STREAM ANALYTICS > adlstreamanalytics[unique]powerbi

- Click: INPUTS> cdreventhubinput >

- Type: EVENT HUB CONSUMER GROUP: powerbi

- Click: SAVE > Yes

- Click: STREAM ANALYTICS > adlstreamanalytics[unique]powerbi>OUTPUTS

- Click: DELETE > Yes

- Click: ADD OUTPUT

- Select: Power BI

- Click: Next > Authorize Now (Login with your credentials)

- Type: OUTPUT ALIAS: callinfoperminute

- Type: DATASET NAME: callinfoperminute (This dataset will be overwritten in PBI should it already exist)

- Type: TABLE NAME: callinfoperminute

- Select: WORKSPACE: My Workspace (Default)

- Click: Finish > Start > Finish (You do not need to specify a custom time)

Browse: https://manage.windowsazure.com

- Click: STREAM ANALYTICS > adlstreamanalytics[unique]datalake

- Click: INPUTS> cdreventhubinput >

- Type: EVENT HUB CONSUMER GROUP: datalake

- Click: SAVE > Yes

- Click: STREAM ANALYTICS > adlstreamanalytics[unique]datalake>OUTPUTS

- Click: DELETE > Yes

- Click: ADD OUTPUT

- Select: Data Lake Store

- Click: Next > Authorize Now (Login with your credentials)

- Click: Next

- Type: OUTPUT ALIAS: datalakestoreoutput

- Select DATA LAKE STORE ACCOUNT: adls[unique]

- Type: PATH PREFIX PATTERN: /cdrdata/input/{date}/{time}

- Select DATE FORMAT: YYYY/MM/DD

- Select TIME FORMAT: HH

- Click: Next

- Select OUTPUT Format: CSV

- Click: Finish > Start > Finish (You do not need to specify a custom time)

- Download data generator: https://github.com/daden-ms/arm/blob/master/datagenerator.zip, Click raw and the file will be downloaded

- Unzip: datagenerator.zip

- Edit: cdr-gen.exe.config

- Replace: EVENTHUBNAME: With: adleventhub[UNIQUE]

- Get CONNECTION STRING

- Browse: https://manage.windowsazure.com (Get the endpoint)

- Click: SERVICE BUS

- Select: adlservicebus[UNIQUE]

- Click: CONNECTION INFORMATION

- Copy: CONNECTION STRING

- Find: key Microsoft.ServiceBus.ConnectionString : replace its value With: CONNECTION STRING;TransportType=Amqp

- Zip: datagenerator.zip

- Browse: https://manage.windowsazure.com

- Click: NEW > COMPUTE > WEB APP > QUICK CREATE

- Type: URL: adl[UNIQUE]

- Select: APP SERVICE PLAN: From your subscription

- Click: adl[UNIQUE] > WEBJOBS > ADD A JOB

- Type: NAME: adl[UNIQUE]

- Browse: datagenerator.zip

- Select: HOW TO RUN: Run continuously

- Click: Finish

Download the script from https://github.com/daden-ms/arm/blob/master/script/cdrSummary.txt, and save it to a folder with name "script"

Download Microsoft Azure Storage Explorer, login with your credentials, and

- Select the storage account:storage[unique]

- Right Click "Create Blob container"

- Type: cdrdata

- Right click cdrdata

- Select Open Blob Container Editor

- On the top of the right panel, Click Upload, Select Upload Folder and upload the script folder

To get started, click the below button.

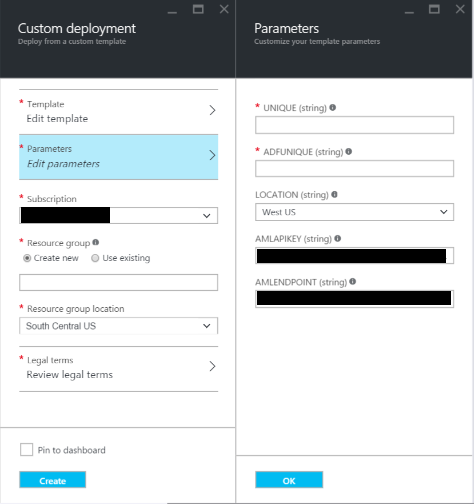

This will create a new "blade" in the Azure portal(https://ms.portal.azure.com).

- Parameters

- Type: UNIQUE (string): [UNIQUE] (Use the one previously entered)

- Type: ADFUNIQUE (string): Azure Data Factory(ADF) Identifier (Use a number)

- Select: LOCATION: [LOCATION] (Use the one previously selected)

- Type: AZUREMLAPIKEY: [AZUREMLAPIKEY]

- Browse: https://studio.azureml.net

- Click: WEB SERVICES > CDR Call Failure Prediction (Azure Data Lake)

- Click: Copy: API key

- Type: AZUREMLENDPOINT: [AZUREMLENDPOINT]

- Browse: https://studio.azureml.net

- Click: WEB SERVICES > CDR Call Failure Prediction (Azure Data Lake) > BATCH EXECUTION

- Copy: POST: REQUEST URI (Everything from "https" up to and including "jobs")

- Click: OK

- Select: Subscription: [SUBSCRIPTION] (Use the one previously selected)

- Select: Resource group: [UNIQUE] (Use the one previously selected)

- Click: Review legal terms > Create

- Check: Pin to dashboard (If you want it on your dashboard)

- Click: Create

- Browse: https://portal.azure.com

- Click: Data factories > dataFactory[UNIQUE][ADFUNIQUE] > Author and deploy

- Hover mouse over the icon, stop at New Data Store, Click New Data Store*, Select "Azure Data Lake Store"

- Copy

{

"name": "AzureDataLakeStoreLinkedService",

"properties": {

"type": "AzureDataLakeStore",

"description": "",

"typeProperties": {

"authorization": "<Click 'Authorize' to allow this data factory and the activities it runs to access this Data Lake Store with your access rights>",

"dataLakeStoreUri": "https://adls[unique].azuredatalakestore.net/webhdfs/v1",

"sessionId": "<OAuth session id from the OAuth authorization session. Each session id is unique and may only be used once>"

}

}

}

To the Editor, replace [unique] with your unique string

- Click Authorize, input your credentials

- Click: Deploy

- Hover mouse over the icon, stop at New Compute, Click New Compute*, Select "Azure Data Lake Analytics"

- Copy

{

"name": "AzureDataLakeAnalyticsLinkedService",

"properties": {

"type": "AzureDataLakeAnalytics",

"description": "",

"typeProperties": {

"authorization": "<Authorization code is automatically retrieved after clicking 'Authorize' and completing the OAuth login>",

"accountName": "adla[unique]",

"sessionId": "<OAuth session id from the OAuth authorization session. Each session id is unique and may only be used once>"

}

}

}

To the Editor, replace [unique] with your unique string

- Click Authorize, input your credentials

- Click: Deploy

Wait until the upper two linked service are deployed by using the portal to check provision state.

-

Hover mouse over the icon, stop at New Data Set, Click New Data Set, Select "Azure Data Lake Store"

-

Copy the content from https://github.com/daden-ms/arm/blob/master/dataset/DataLakeTable.json in to the Editor

-

Click: Deploy

-

Hover mouse over the icon, stop at New Data Set, Click New Data Set, Select "Azure Data Lake Store"

-

Copy the content from https://github.com/daden-ms/arm/blob/master/dataset/DataLakeCDRAggregateTable.json in to the Editor

-

Click: Deploy

-

Hover mouse over the icon, stop at New Pipeline

-

Copy the content from https://github.com/daden-ms/arm/blob/master/pipeline/DataLakeCDRSummary.json in to the Editor

-

Edit: start: 2016-05-12T00:00:00Z: to: Your current time in UTC 24 hour clock (for example http://www.timeanddate.com/worldclock/timezone/utc)

-

Edit: end: 2016-05-12T00:00:00Z: to: Your current time in UTC 24 hour clock plus three hours (for example http://www.timeanddate.com/worldclock/timezone/utc)

-

Edit: "isPaused": true : to "isPaused": false

-

Click: Deploy

-

Hover mouse over the icon, stop at New Pipeline

-

Copy the content from https://github.com/daden-ms/arm/blob/master/pipeline/DataLakeStoreToSqlDW.json in to the Editor

-

Edit: start: 2016-05-12T00:00:00Z: to: Your current time in UTC 24 hour clock (for example http://www.timeanddate.com/worldclock/timezone/utc)

-

Edit: end: 2016-05-12T00:00:00Z: to: Your current time in UTC 24 hour clock plus three hours (for example http://www.timeanddate.com/worldclock/timezone/utc)

-

Edit: "isPaused": true : to "isPaused": false

-

Click: Deploy

- Expand: Pipelines

- Select: MLPipeline

- Edit: start: 2016-05-12T00:00:00Z: to: Your current time in UTC 24 hour clock (for example http://www.timeanddate.com/worldclock/timezone/utc)

- Edit: end: 2016-05-12T00:00:00Z: to: Your current time in UTC 24 hour clock plus three hours (for example http://www.timeanddate.com/worldclock/timezone/utc)

- Edit: "isPaused": true : to "isPaused": false

- Click: Deploy

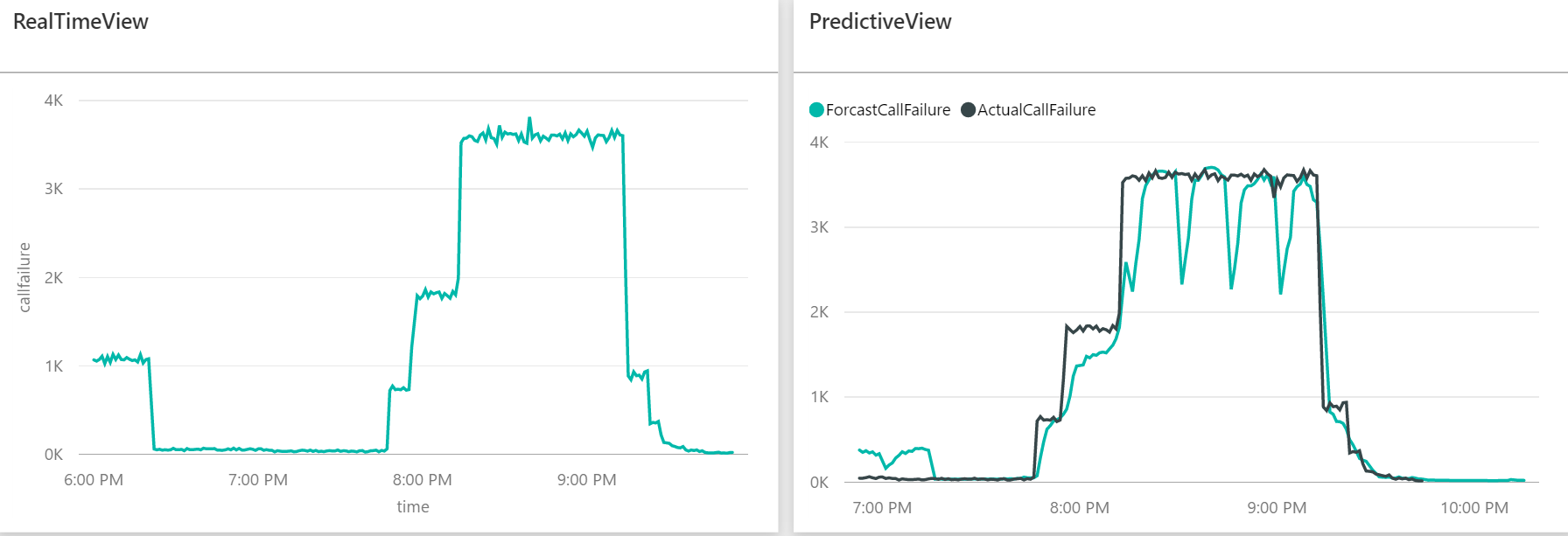

At the end of this section, you will have a dashboard which looks like the following:

- Browse: https://powerbi.microsoft.com

- Click: Sign in (Login with your credentials)

- Show: The navigation pane,

- Scroll to the bottom to the section of Datasets

- Click: callinfoperminute > Line chart Under Visualizations

- Select: Time

- Select: CallFailure

- Click: the icon under Visualizations with tooltip Format

- Click: X-Axis, Toggle Title to On

- Click: Y-Axis, Toggle Title to On

- Click: Title

- Type RealTimeView to Title Text

- Click Pin visual (pin icon on upper-right)

- Type RealTimeView for the report name

- Click Save and Continue

- Select: New dashboard

- Type: Name: CallInfoDashBoard

- Click: Pin

- Download the Power BI Desktop application (https://powerbi.microsoft.com/en-us/desktop)

- Download the Power BI template file https://github.com/daden-ms/arm/blob/master/PowerBI/DataLakeCDRPredictive.pbix (Click Raw to start downloading) and open it with Power BI application

- On the application ribbon menu, choose Edit Queries

- Go to Query Settings on the right pane, double click Source

In the SQL Server Database dialog

- Type: Server Name: adl-[UNIQUE].database.windows.net

- Type: Database Name: adlDB

- Click: OK

- Choose: Database for Authentication method

- Input: adluser for username and pass@word1 for password

- On the application ribbon menu, click "Close and Apply"

- Once data is loaded, On the application ribbon menu, click "Publish"

- When prompt with dialog windows, click "Save"

- Browse: https://powerbi.microsoft.com

- Click: Sign in (Login with your credentials)

- Show: The navigation pane,

- Scroll to the bottom to the section of Datasets

- Right Click: DataLakeCDRPredictive

- Click: Dataset Settings

- Click: Edit credentials

- Input: adluser as user name and pass@word1 as password

- Click: DataLakeCDRPredictive > Line chart Under Visualizations

- Select: Time

- Select: ForcastCallFailure

- Select: ActualCallFailure

- Click: X-Axis, Toggle Title to On

- Click: the icon under Visualizations with tooltip Format

- Click: Title

- Type PredictiveView to Title Text

- Click Pin visual (pin icon on upper-right)

- Type PredictiveView for the report name

- Click Save and Continue*

- Select: Existing dashboard

- Choose: Name: CallInfoDashBoard

- Click: Pin

Congratulations! If you made it to this point, you should have a running sample with real time and predictive pipelines showcasing the power of Azure Data Lake Store and its integration with Azure Machine Learning and many of the other Azure services. The next section lists the steps to tear things down when you are done.

###Undeploy

- Delete Resources (Service Bus, Event Hub, SQL Data Warehouse, Data Factories)

- Browse: https://portal.azure.com

- Click: Resource groups

- Right click: [UNIQUE] (your resource group)

- Select: Delete

- Delete WebApp (data generator)

- Browse: https://manage.windowsazure.com

- Click: WEB APPS

- Select: [UNIQUE]datagenerator (Your web app)

- Click: DELETE

- Delete AML Service

- Browse: https://studio.azureml.net

- Click: WEB SERVICES

- Select: CDR Call Failure Prediction (Azure Data Lake)

- Click: DELETE > EXPERIMENTS

- Select: CDR Call Failure Prediction (Azure Data Lake)

- Click: DELETE

- Delete PBI dashboard

- Browse: https://powerbi.microsoft.com

- Select: Dashboards

- Right click: CallInfoDashBoard

- Select: REMOVE

- Scroll to Reports

- Right click: PredictiveView

- Select: REMOVE

- Scroll to Reports

- Right click: RealTimeView

- Select: REMOVE

- Scroll to Datasets

- Right click: DataLakeCDRPredictive

- Select: REMOVE

- Scroll to Datasets

- Right click: callinfoperminute

- Select: REMOVE