Please visit our project page for more details.

This code has been tested in the following environment:

- Ubuntu 18.04.5 LTS

- Python 3.8

- conda3 or miniconda3

- CUDA capable GPU (one is enough)

Set up the conda environment:

conda env create -f environment.yml

conda activate SinMDM

Install ganimator-eval-kernel by following these instructions, or by running:

pip install git+https://github.com/PeizhuoLi/ganimator-eval-kernel.git

Data should be under the ./dataset folder.

Mixamo Dataset

Download the motions used for our benchmark:

bash prepare/download_mixamo_dataset.sh

Or download motions directly from Mixamo and use utils/fbx2bvh.py to convert the fbx files to bvh files.

HumanML3D Dataset

Clone HumanML3D, then copy the data dir to our repository:

cd ..

git clone https://github.com/EricGuo5513/HumanML3D.git

unzip ./HumanML3D/HumanML3D/texts.zip -d ./HumanML3D/HumanML3D/

cp -r HumanML3D/HumanML3D sin-mdm/dataset/HumanML3D

cd sin-mdm

Then, download the motions used for our benchmark:

bash prepare/download_humanml3d_dataset.sh

Or download the entire dataset by following the instructions in HumanML3D, then copy the resulting dataset to our repository:

cp -r ../HumanML3D/HumanML3D ./dataset/HumanML3D

Truebones Zoo Dataset

Download the motions used by our pretrained models:

bash prepare/download_truebones_zoo_dataset.sh

Or download the full dataset here and use utils/fbx2bvh.py to convert the fbx files to bvh files.

Download the model(s) you wish to use with the scripts below. The models will be placed under ./save/.

Mixamo Dataset Models

Download pretrained models used for our benchmark:

bash prepare/download_mixamo_models.sh

HumanML3D Dataset Models

Download pretrained models used for our benchmark:

bash prepare/download_humanml3d_models.sh

Truebones Zoo Dataset Models

Download pretrained models:

bash prepare/download_truebones_models.sh

A pretrained model of "Flying Dragon" will be available soon!

To generate motions using a pretrained model use the following command:

python -m sample.generate --model_path ./save/path_to_pretrained_model --num_samples 5 --motion_length 10

Here, --num_samples is the number of motions to generate and --motion_length is the motion length in seconds. Use --seed to specify a seed.
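To produce several batches with different seeds, the documented command can be scripted. The sketch below uses only the flags listed above (--model_path, --num_samples, --motion_length, --seed); the helper names and the model path are hypothetical, and by default it only prints the commands (dry run) instead of executing them.

```python
import shlex
import subprocess
from typing import Iterable, List


def generate_cmd(model_path: str, num_samples: int, motion_length: float, seed: int) -> List[str]:
    """Build one sample.generate invocation using the documented flags."""
    return [
        "python", "-m", "sample.generate",
        "--model_path", model_path,
        "--num_samples", str(num_samples),
        "--motion_length", str(motion_length),  # length in seconds
        "--seed", str(seed),
    ]


def sweep_seeds(model_path: str, seeds: Iterable[int], num_samples: int = 5,
                motion_length: float = 10, dry_run: bool = True) -> List[List[str]]:
    """Print (and optionally run) one generation command per seed."""
    cmds = [generate_cmd(model_path, num_samples, motion_length, s) for s in seeds]
    for cmd in cmds:
        print(shlex.join(cmd))
        if not dry_run:
            # Run from the repository root so `python -m sample.generate` resolves.
            subprocess.run(cmd, check=True)
    return cmds


if __name__ == "__main__":
    # Hypothetical model path: replace it with a model folder under ./save/.
    sweep_seeds("./save/path_to_pretrained_model", seeds=[10, 20, 30])
```

Pass dry_run=False to actually launch the runs. Where each run writes its outputs is decided by sample.generate itself, so check its arguments in utils/parser_util.py before launching many runs.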

Running this will produce:

- results.npy - a file with the xyz positions of the generated animation

- rows_00_to_##.mp4 - stick figure animations of all generated motions

- sample##.bvh - a bvh file for each generated animation, which can be visualized using Blender

It will look something like this:

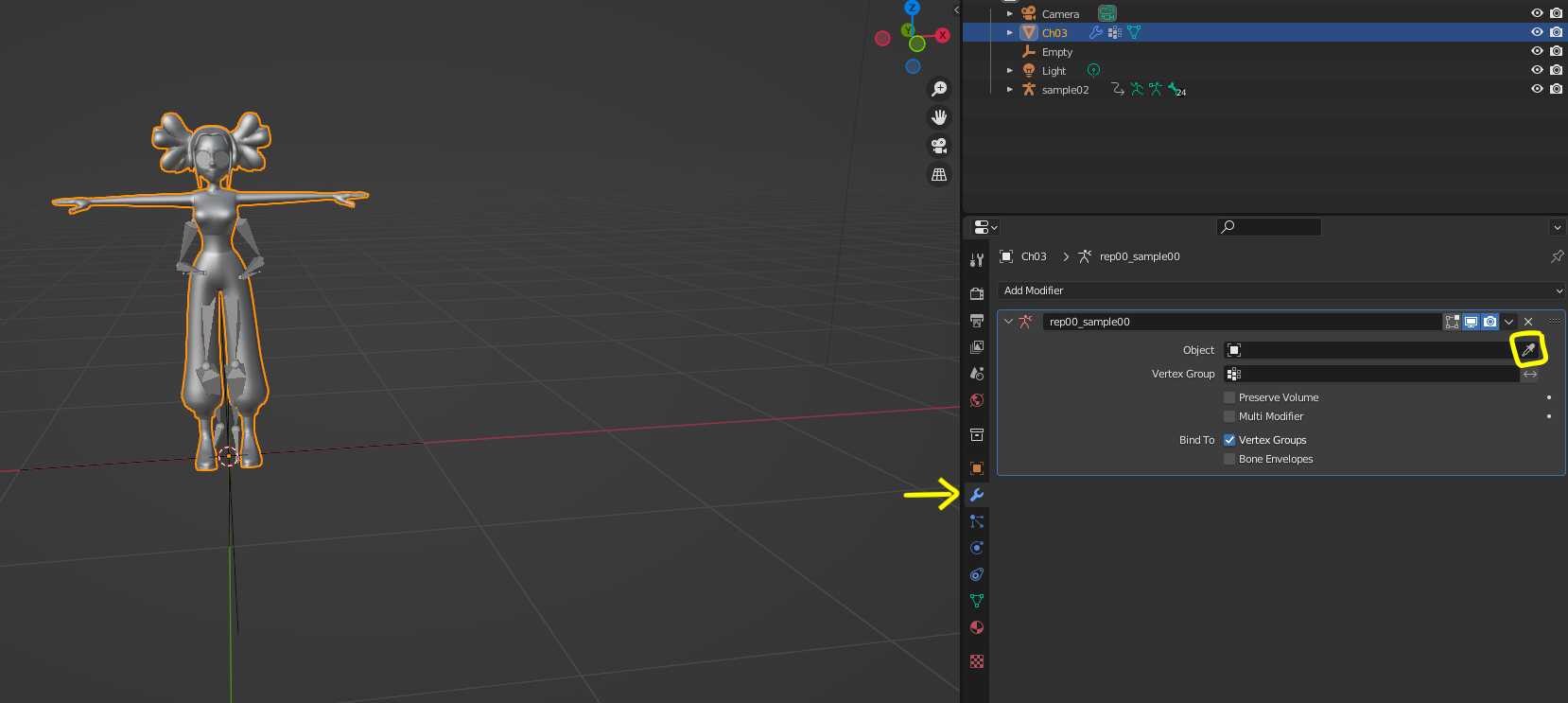
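For your own post-processing, results.npy can be loaded with NumPy. This is a sketch under an assumption: the file is either a plain array, or (following the convention of MDM, which SinMDM builds on) a pickled dict that stores the positions under a 'motion' key. The key name and the array layout are not documented here, so inspect your own file first; load_results is a hypothetical helper.

```python
import os
import sys

import numpy as np


def load_results(path: str = "results.npy"):
    """Return (positions, extras) from a results.npy file.

    Assumption: the file holds either a plain array, or a dict saved with
    np.save whose xyz positions live under the (assumed) key 'motion'.
    """
    data = np.load(path, allow_pickle=True)
    if data.dtype == object and data.shape == ():
        # np.save stores a dict as a 0-d object array; .item() unwraps it.
        results = data.item()
        extras = {k: v for k, v in results.items() if k != "motion"}
        return np.asarray(results["motion"]), extras
    return data, {}


if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else "results.npy"
    if os.path.exists(path):
        motion, extras = load_results(path)
        print("motion array shape:", motion.shape)
        print("other keys:", sorted(extras))
    else:
        print(f"{path} not found; run sample.generate first")
```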

Instructions for adding texture in Blender

To add texture in Blender for the Mixamo and Truebones Zoo datasets, follow these steps:

- In Blender, import an FBX file that contains the mesh and texture:

  - For Mixamo motions, use mixamo_ref.fbx.

  - For motions generated by the uploaded Truebones Zoo models, use the relevant fbx file from here.

- Select only the skeleton of the imported FBX and delete it. The mesh will then appear in T-pose.

- Import the BVH file that was generated by the model

- Select the mesh, go to modifier properties, and press the tooltip icon. Then, select the generated BVH.

Download HumanML3D dependencies:

bash prepare/download_t2m_evaluators.sh

To train a model, run:

python -m train.train_sinmdm --arch qna --dataset humanml --save_dir <path_to_save_models> --sin_path <'path to .bvh file for mixamo/bvh_general dataset or .npy file for humanml dataset'>

- Specify the architecture using --arch. Options: qna, unet

- Specify the dataset using --dataset. Options: humanml, mixamo, bvh_general

- Use --device to define the GPU id.

- Use --seed to specify a seed.

- Add --train_platform_type {ClearmlPlatform, TensorboardPlatform} to track results with either ClearML or TensorBoard.

- Add --eval_during_training to run a short evaluation for each saved checkpoint.

- Add --gen_during_training to synthesize a motion and save its visualization for each saved checkpoint.

Evaluation and generation during training slow training down but give you better monitoring.

Please refer to file utils/parser_util.py for more arguments.
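The option rules above can be checked before launching a long run. The sketch below validates --arch and --dataset against the listed options and checks that --sin_path has the extension stated above (.npy for humanml, .bvh for mixamo and bvh_general). The helper train_cmd and the example paths are hypothetical, and it assumes --eval_during_training and --gen_during_training are boolean switches; it only prints the command.

```python
import shlex
from pathlib import Path
from typing import List, Optional

ARCHS = {"qna", "unet"}
# File type expected for --sin_path per dataset, as stated in this README.
SIN_SUFFIX = {"humanml": ".npy", "mixamo": ".bvh", "bvh_general": ".bvh"}


def train_cmd(arch: str, dataset: str, save_dir: str, sin_path: str,
              device: int = 0, seed: Optional[int] = None,
              eval_during_training: bool = False,
              gen_during_training: bool = False) -> List[str]:
    """Validate the documented options and build a train.train_sinmdm command."""
    if arch not in ARCHS:
        raise ValueError(f"--arch must be one of {sorted(ARCHS)}, got {arch!r}")
    if dataset not in SIN_SUFFIX:
        raise ValueError(f"--dataset must be one of {sorted(SIN_SUFFIX)}, got {dataset!r}")
    expected = SIN_SUFFIX[dataset]
    if Path(sin_path).suffix.lower() != expected:
        raise ValueError(f"--dataset {dataset} expects a {expected} file, got {sin_path!r}")
    cmd = ["python", "-m", "train.train_sinmdm",
           "--arch", arch, "--dataset", dataset,
           "--save_dir", save_dir, "--sin_path", sin_path,
           "--device", str(device)]
    if seed is not None:
        cmd += ["--seed", str(seed)]
    if eval_during_training:
        cmd.append("--eval_during_training")
    if gen_during_training:
        cmd.append("--gen_during_training")
    return cmd


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    print(shlex.join(train_cmd("qna", "mixamo", "./save/my_model",
                               "./dataset/mixamo/my_motion.bvh", seed=10)))
```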

For in-betweening, the prefix and suffix of a motion are given as input and the model generates the rest according to the motion the network was trained on.

python -m sample.edit --model_path <path_to_pretrained_model> --edit_mode in_betweening --num_samples 3

- To specify the motion to be used for the prefix and suffix, use --ref_motion <path_to_reference_motion>. If --ref_motion is not specified, the original motion the network was trained on will be used.

- Use --prefix_end and --suffix_start to specify the length of the prefix and suffix.

- Use --seed to specify a seed.

- Use --num_samples to specify the number of motions to generate.

For example:

Generated parts are colored in an orange scheme; the given input is colored in a blue scheme.
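Conceptually, in-betweening is temporal inpainting: a per-frame mask marks which frames come from the reference. The sketch below assumes, as in MDM's edit arguments, that --prefix_end and --suffix_start are fractions of the total number of frames; this is not confirmed here, and the helper names are hypothetical. A common way to use such a mask during diffusion sampling is to overwrite the known frames with the reference after each denoising step (details such as noising the reference vary between implementations); apply_known shows only that overwrite, not SinMDM's sampler.

```python
import numpy as np


def inbetween_mask(n_frames: int, prefix_end: float, suffix_start: float) -> np.ndarray:
    """Per-frame mask: True = frame taken from the reference motion.

    Assumption: prefix_end and suffix_start are fractions of the motion length.
    """
    if not 0.0 <= prefix_end <= suffix_start <= 1.0:
        raise ValueError("need 0 <= prefix_end <= suffix_start <= 1")
    known = np.zeros(n_frames, dtype=bool)
    known[: int(prefix_end * n_frames)] = True   # given prefix
    known[int(suffix_start * n_frames):] = True  # given suffix
    return known


def apply_known(x: np.ndarray, ref: np.ndarray, known: np.ndarray) -> np.ndarray:
    """Overwrite known frames of x (frames on the last axis) with the reference."""
    return np.where(known, ref, x)


if __name__ == "__main__":
    mask = inbetween_mask(8, prefix_end=0.25, suffix_start=0.75)
    print(mask.astype(int))  # [1 1 0 0 0 0 1 1]
```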

For motion expansion, a motion is given as input and new prefix and suffix are generated for it by the model.

python -m sample.edit --model_path <path_to_pretrained_model> --edit_mode expansion --num_samples 3

- To specify the input motion, use --ref_motion <path_to_reference_motion>. If --ref_motion is not specified, the original motion the network was trained on will be used.

- Use --prefix_length and --suffix_length to specify the length of the generated prefix and suffix.

- Use --seed to specify a seed.

- Use --num_samples to specify the number of motions to generate.

For example:

Generated parts are colored in an orange scheme; the given input is colored in a blue scheme.
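Expansion is the mirror image of in-betweening: the input sits in the middle and the model fills new frames on both sides. The sketch below shows the resulting frame layout, assuming --prefix_length and --suffix_length are counted in frames (hypothetical; check utils/parser_util.py for the units SinMDM actually uses). The helper names are also hypothetical.

```python
import numpy as np


def expansion_layout(n_input: int, prefix_length: int, suffix_length: int):
    """Return (total_frames, known); known[t] is True where frame t is from the input.

    Assumption: all lengths are measured in frames.
    """
    total = prefix_length + n_input + suffix_length
    known = np.zeros(total, dtype=bool)
    known[prefix_length:prefix_length + n_input] = True  # input sits in the middle
    return total, known


def place_input(ref: np.ndarray, prefix_length: int, suffix_length: int) -> np.ndarray:
    """Zero-pad ref along its last (frame) axis to make room for the new prefix and suffix."""
    pad_width = [(0, 0)] * (ref.ndim - 1) + [(prefix_length, suffix_length)]
    return np.pad(ref, pad_width, mode="constant")


if __name__ == "__main__":
    total, known = expansion_layout(n_input=4, prefix_length=2, suffix_length=3)
    print(total, known.astype(int))  # 9 [0 0 1 1 1 1 0 0 0]
```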

The model is given a reference motion from which to take the upper body, and generates the lower body according to the motion the model was trained on.

python -m sample.edit --model_path <path_to_pretrained_model> --edit_mode lower_body --num_samples 3 --ref_motion <path_to_reference_motion>

This application is supported for the mixamo and humanml datasets.

- To specify the reference motion to take the upper body from, use --ref_motion <path_to_reference_motion>. If --ref_motion is not specified, the original motion the network was trained on will be used.

- Use --seed to specify a seed.

- Use --num_samples to specify the number of motions to generate.

For example (the reference motion is "chicken dance", and the lower body is generated with a model trained on "salsa dancing"):

The generated lower body is colored in an orange scheme. The upper body, which is given as input, is colored in a blue scheme.

Similarly to lower body editing, use --edit_mode upper_body:

python -m sample.edit --model_path <path_to_pretrained_model> --edit_mode upper_body --num_samples 3 --ref_motion <path_to_reference_motion>

This application is supported for the mixamo and humanml datasets.

For example (the reference motion is "salsa dancing", and the upper body is generated with a model trained on "punching"):

The generated upper body is colored in an orange scheme. The lower body, which is given as input, is colored in a blue scheme.
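Lower and upper body editing are spatial rather than temporal inpainting: the mask is over joints instead of frames. The sketch below builds such a joint mask on xyz positions shaped (joints, 3, frames). It assumes a 22-joint SMPL-style ordering (the one used for HumanML3D joint positions) with the pelvis counted as lower body; Mixamo skeletons use different joints, so LOWER_BODY and the helper names are hypothetical and illustrative only. SinMDM itself may mask its feature representation differently.

```python
import numpy as np

# Assumption: 22-joint SMPL-style ordering; pelvis, hips, knees, ankles, feet.
LOWER_BODY = (0, 1, 2, 4, 5, 7, 8, 10, 11)


def upper_body_known(n_joints: int = 22, lower=LOWER_BODY) -> np.ndarray:
    """Joint mask for lower_body editing: True = joint copied from the reference."""
    known = np.ones(n_joints, dtype=bool)
    known[list(lower)] = False  # lower-body joints are left for the model
    return known


def merge_from_ref(generated: np.ndarray, ref: np.ndarray, known: np.ndarray) -> np.ndarray:
    """Take known joints from ref and the rest from generated; arrays are (joints, 3, frames)."""
    return np.where(known[:, None, None], ref, generated)


if __name__ == "__main__":
    known = upper_body_known()
    print("joints copied from the reference:", np.flatnonzero(known).tolist())
```

For --edit_mode upper_body the roles flip: the reference supplies the lower body, which corresponds to using the inverted mask ~known.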

You can use harmonization for style transfer. The model is trained on the style motion. The content motion --ref_motion, unseen by the network, is given as input and adjusted such that it matches the style motion's motifs.

python -m sample.edit --model_path <path_to_pretrained_model> --edit_mode harmonization --num_samples 3 --ref_motion <path_to_reference_motion>

- To specify the reference motion, use --ref_motion <path_to_reference_motion>.

- Use --seed to specify a seed.

- Use --num_samples to specify the number of motions to generate.

For example, here the model was trained on "happy" walk, and we transfer the "happy" style to the input motion:

HumanML3D

bash prepare/download_t2m_evaluators.sh

bash prepare/download_humanml3d_dataset.sh

bash prepare/download_humanml3d_models.sh

Mixamo

bash prepare/download_mixamo_dataset.sh

bash prepare/download_mixamo_models.sh

To evaluate a single model (trained on a single sequence), run:

HumanML3D

python -m eval.eval_humanml --model_path ./save/humanml/0000/model000019999.pt

Mixamo

python -m eval.eval_mixamo --model_path ./save/mixamo/0000/model000019999.pt

HumanML3D - reproduce benchmark

with the pre-trained checkpoints:

bash ./eval/eval_only_humanml_benchmark.sh

HumanML3D - train + benchmark

bash ./eval/train_eval_humanml_benchmark.sh

Mixamo - reproduce benchmark

with the pre-trained checkpoints:

bash ./eval/eval_only_mixamo_benchmark.sh

Mixamo - train + benchmark

bash ./eval/train_eval_mixamo_benchmark.sh

Our code partially uses each of the following works. We thank the authors of these works for their outstanding contributions and for sharing their code.

MDM, QnA, Ganimator, Guided Diffusion, A Deep Learning Framework For Character Motion Synthesis and Editing.

This code is distributed under the MIT LICENSE.