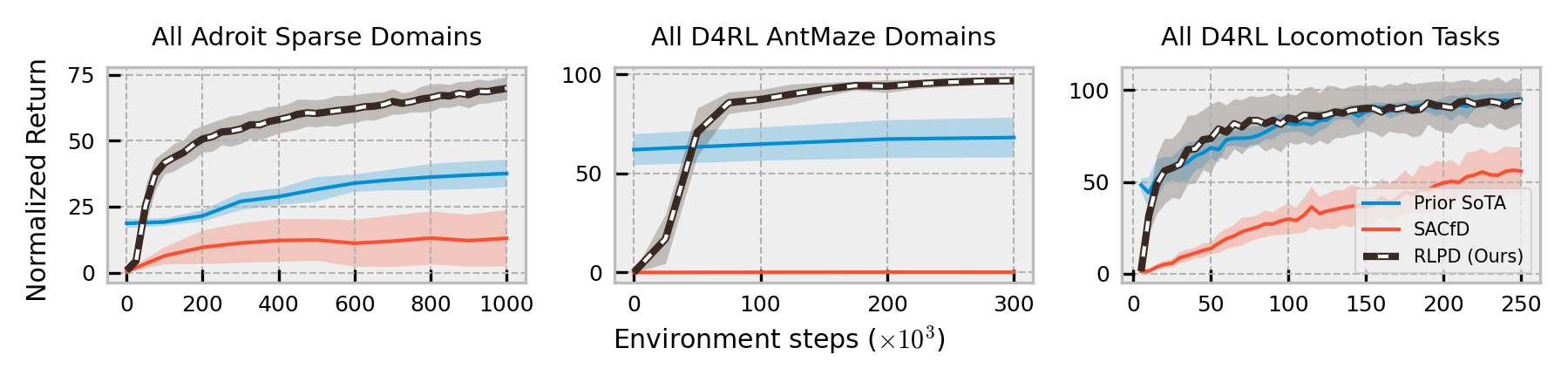

This is code to accompany the paper "Efficient Online Reinforcement Learning with Offline Data", available here. This code can be readily adapted to work on any offline dataset.
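As a rough illustration of what using an offline dataset alongside online data involves: the paper describes drawing each training batch half from the offline data and half from the online replay buffer. The sketch below shows only that mixing step with plain Python lists. The tuple layout of a transition and the name `sample_mixed_batch` are hypothetical and are not taken from this repository.

```python
import random


def sample_mixed_batch(offline, online, batch_size, rng):
    """Draw half of a batch from offline data and the rest from online data."""
    half = batch_size // 2
    # Sample with replacement so a small online buffer still fills its half.
    offline_part = rng.choices(offline, k=half)
    online_part = rng.choices(online, k=batch_size - half)
    return offline_part + online_part


# Hypothetical transitions: (observation, action, reward, next_observation, done).
offline_data = [((0.0,), 0, 1.0, (0.1,), False) for _ in range(100)]
online_data = [((1.0,), 1, 0.0, (1.1,), False) for _ in range(10)]

batch = sample_mixed_batch(offline_data, online_data, batch_size=8, rng=random.Random(0))
print(len(batch), "transitions in the mixed batch")
```

In this sketch, adapting to a different offline dataset amounts to filling `offline_data` from another source; the actual loaders in this repository may be organized differently.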

conda create -n rlpd python=3.9 # If you use conda.

conda activate rlpd

conda install patchelf # If you use conda.

pip install -r requirements.txt

conda deactivate

conda activate rlpd

XLA_PYTHON_CLIENT_PREALLOCATE=false python train_finetuning.py --env_name=halfcheetah-expert-v0 \
    --utd_ratio=20 \
    --start_training 5000 \
    --max_steps 250000 \
    --config=configs/rlpd_config.py \
    --project_name=rlpd_locomotion

XLA_PYTHON_CLIENT_PREALLOCATE=false python train_finetuning.py --env_name=antmaze-umaze-v2 \
    --utd_ratio=20 \
    --start_training 5000 \
    --max_steps 300000 \
    --config=configs/rlpd_config.py \
    --config.backup_entropy=False \
    --config.hidden_dims="(256, 256, 256)" \
    --config.num_min_qs=1 \
    --project_name=rlpd_antmaze

First, download and unzip .npy files into ~/.datasets/awac-data/ from here.
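Before moving on, it can help to confirm that the unzipped files ended up where the training script will look for them. The following is a minimal sketch using only the standard library; the helper name `find_npy_files` is hypothetical, and it searches subdirectories too, on the assumption that the archive may unpack into a nested folder.

```python
from pathlib import Path


def find_npy_files(data_dir="~/.datasets/awac-data"):
    """Return all .npy files under data_dir, sorted by path.

    Returns an empty list if the directory does not exist.
    """
    root = Path(data_dir).expanduser()
    if not root.is_dir():
        return []
    return sorted(root.rglob("*.npy"))


files = find_npy_files()
print(f"found {len(files)} .npy file(s)")
for path in files:
    print(" ", path.name)
```

An empty result suggests the archive was unpacked somewhere else or has not been downloaded yet.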

Make sure you have mjrl installed:

git clone https://github.com/aravindr93/mjrl

cd mjrl

pip install -e .

Then, recursively clone mj_envs from this fork:

git clone --recursive https://github.com/philipjball/mj_envs.git

Then sync the submodules (add the --init flag if you didn't recursively clone):

cd mj_envs

git submodule update --remote

Finally:

pip install -e .

Now you can run the following from the root of this repository:

XLA_PYTHON_CLIENT_PREALLOCATE=false python train_finetuning.py --env_name=pen-binary-v0 \
    --utd_ratio=20 \
    --start_training 5000 \
    --max_steps 1000000 \
    --config=configs/rlpd_config.py \
    --config.backup_entropy=False \
    --config.hidden_dims="(256, 256, 256)" \
    --project_name=rlpd_adroit

V-D4RL provides pixel-based datasets for offline RL (paper here).

Download the 64px Main V-D4RL datasets into ~/.vd4rl from here or here.

For instance, the Medium Cheetah Run .npz files should be in ~/.vd4rl/main/cheetah_run/medium/64px.
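The example path above follows the pattern root / split / task / level / resolution. As a small sketch of that layout, the helper below builds the expected directory for a given task and counts the .npz files in it. The function names `vd4rl_dir` and `count_npz` are hypothetical, and the only layout assumed is the one shown in the example path.

```python
from pathlib import Path


def vd4rl_dir(task, level, resolution="64px", root="~/.vd4rl", split="main"):
    """Build the directory where the .npz files for one dataset are expected."""
    return Path(root).expanduser() / split / task / level / resolution


def count_npz(directory):
    """Count .npz files directly inside directory (0 if it does not exist)."""
    d = Path(directory)
    return len(list(d.glob("*.npz"))) if d.is_dir() else 0


expected = vd4rl_dir("cheetah_run", "medium")
print(expected, "contains", count_npz(expected), ".npz file(s)")
```

A count of zero usually means the download was placed one directory level too high or too low.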

XLA_PYTHON_CLIENT_PREALLOCATE=false python train_finetuning_pixels.py --env_name=cheetah-run-v0 \
    --start_training 5000 \
    --max_steps 300000 \
    --config=configs/rlpd_pixels_config.py \
    --project_name=rlpd_vd4rl
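Every command in this README sets XLA_PYTHON_CLIENT_PREALLOCATE=false, which tells JAX not to preallocate a large share of GPU memory on first use. When launching runs from Python rather than a shell, the same effect can be had by passing the variable in the child process environment. The sketch below assembles one such run using only the flags shown above; the helper `build_run` is hypothetical and not part of this repository.

```python
import os
import shlex


def build_run(script, env_name, flags):
    """Return (env, argv) for one training run.

    flags maps flag names to values and is rendered as --name=value.
    """
    # Copy the current environment and disable JAX GPU memory preallocation.
    env = dict(os.environ, XLA_PYTHON_CLIENT_PREALLOCATE="false")
    argv = ["python", script, f"--env_name={env_name}"]
    argv += [f"--{name}={value}" for name, value in flags.items()]
    return env, argv


env, argv = build_run(
    "train_finetuning_pixels.py",
    "cheetah-run-v0",
    {
        "start_training": 5000,
        "max_steps": 300000,
        "config": "configs/rlpd_pixels_config.py",
        "project_name": "rlpd_vd4rl",
    },
)
print(shlex.join(argv))
# To launch the run: subprocess.run(argv, env=env, check=True)
```

Keeping the flags in a dictionary makes it straightforward to loop over environments or step budgets without editing shell commands by hand.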