The environmental impact of AI, and of Natural Language Processing (NLP) in particular, has become significant and is growing at a worrying rate because of the enormous energy consumption of model training and deployment. Here, we draw on corporate climate reporting standards and propose a model card for NLP models, aiming to increase reporting relevance, completeness, consistency, transparency, and accuracy.

This repository contains the code and model card templates accompanying the paper Towards Climate Awareness in NLP Research, presented at EMNLP 2022:

    @inproceedings{hershcovich-etal-2022-towards,
        title = "Towards Climate Awareness in {NLP} Research",
        author = "Hershcovich, Daniel and
          Webersinke, Nicolas and
          Kraus, Mathias and
          Bingler, Julia and
          Leippold, Markus",
        booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",
        month = dec,
        year = "2022",
        address = "Abu Dhabi, United Arab Emirates",
        publisher = "Association for Computational Linguistics",
        url = "https://aclanthology.org/2022.emnlp-main.159",
        pages = "2480--2494",
        abstract = "The climate impact of AI, and NLP research in particular, has become a serious issue given the enormous amount of energy that is increasingly being used for training and running computational models. Consequently, increasing focus is placed on efficient NLP. However, this important initiative lacks simple guidelines that would allow for systematic climate reporting of NLP research. We argue that this deficiency is one of the reasons why very few publications in NLP report key figures that would allow a more thorough examination of environmental impact, and present a quantitative survey to demonstrate this. As a remedy, we propose a climate performance model card with the primary purpose of being practically usable with only limited information about experiments and the underlying computer hardware. We describe why this step is essential to increase awareness about the environmental impact of NLP research and, thereby, paving the way for more thorough discussions.",
    }

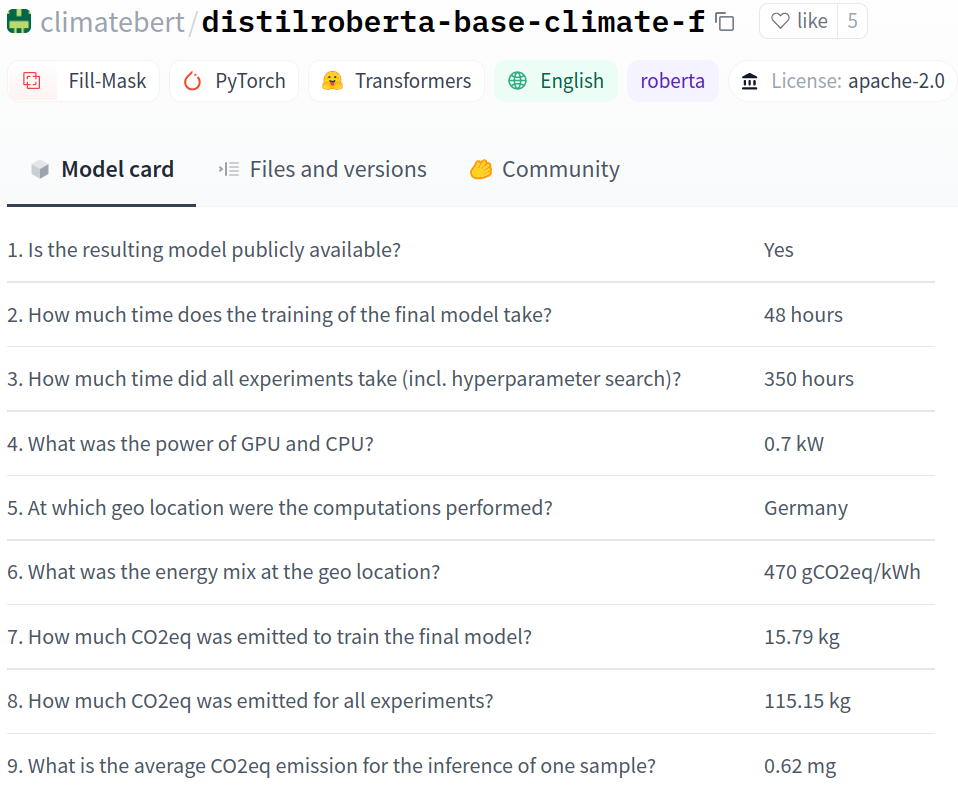

To fill the model card, you should answer the following questions:

- Is the resulting model publicly available? Yes or no. A popular model repository is Hugging Face.

- How much time does the training of the final model take? Training time for one model (the one that is publicly available, if applicable). We recommend tools like Weights & Biases to track computations.

- How much time did all experiments take (incl. hyperparameter search)? Total time for all training runs of variations of that model.

- What was the power consumption (GPU/CPU)? Report the maximum power draw of the hardware in watts, also referred to as Max Thermal Design Power (TDP). Check your GPU specifications (example: A100).

- At which geo location were the computations performed? Country where the server or data center is located.

- What was the energy mix at the geo location? Find your country here. The unit is gCO2eq/kWh.

- How much CO2eq was emitted to train the final model? This can be estimated after the computations are done using tools such as the ML CO2 Impact calculator, but the easier and more accurate option is to run CodeCarbon or carbontracker during model training.

- How much CO2eq was emitted for all experiments? Total emissions for all training runs of variations of that model.

- What is the average CO2eq emission for the inference of one sample? With a trained model, estimate this for one instance/sentence/document, depending on what makes sense for your task.

- Which positive environmental impact can be expected from this work? Is it fundamental theory? Building block tool? Applicable tool? Deployed application? See Jin et al. (2021).
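When no tracker was running during training, the emission questions above can be answered with a rough back-of-the-envelope estimate from the other answers: training time, hardware power (TDP), and the energy mix at the compute location. The snippet below is a minimal sketch of that arithmetic, not the method used by CodeCarbon, carbontracker, or the ML CO2 Impact calculator. The function names and all numeric values are hypothetical, and the estimate ignores data center overhead and assumes the hardware runs at its maximum power for the whole duration, so it is only an approximation.

```python
def estimate_emissions_g(hours: float, power_watts: float,
                         intensity_g_per_kwh: float, n_devices: int = 1) -> float:
    """Rough CO2eq estimate in grams.

    energy (kWh) = hours * power (W) * number of devices / 1000
    emissions (g) = energy (kWh) * energy mix (gCO2eq/kWh)
    """
    energy_kwh = hours * power_watts * n_devices / 1000.0
    return energy_kwh * intensity_g_per_kwh


def per_sample_emissions_g(total_emissions_g: float, n_samples: int) -> float:
    """Average CO2eq in grams for the inference of one sample."""
    if n_samples <= 0:
        raise ValueError("n_samples must be positive")
    return total_emissions_g / n_samples


# Hypothetical example values, not taken from the paper:
# 48 hours of training on 8 devices with a 400 W TDP each,
# in a location with an energy mix of 470 gCO2eq/kWh.
training_g = estimate_emissions_g(hours=48, power_watts=400,
                                  intensity_g_per_kwh=470, n_devices=8)
print(f"Final model: {training_g / 1000:.1f} kg CO2eq")

# Hypothetical inference run: 1 hour on one device for 100,000 samples.
inference_g = estimate_emissions_g(hours=1, power_watts=400,
                                   intensity_g_per_kwh=470)
print(f"Per sample: {per_sample_emissions_g(inference_g, 100_000):.5f} g CO2eq")
```

The same function covers the question about all experiments: pass the total time of all training runs instead of the time for the final model.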

For a visual tutorial, see our slides, also presented at EMNLP 2022.

The directory model_cards contains model cards for some commonly used NLP models, including GPT-3 and BLOOM.

Here is an example climate performance model card according to the guidelines proposed in this paper. The model is ClimateBert, a language model finetuned on climate-related text. The same information is provided on the Hugging Face model page.

model_card_template.tex is a template that can be used in scientific papers to report the climate performance of models published along with them. The template can be included as part of a Broader Impact section. It requires the bibliography entry provided above. Authors are further encouraged to elaborate in the text on the accuracy of the provided information, on possible improvements, and on the positive environmental impact expected from their work.

model_card_template.md is a template that can be used in the model card on Hugging Face, for example, to report the climate performance of the model.
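For projects that collect these answers programmatically, a Markdown table can also be generated with a few lines of Python. This is only an illustrative sketch: the function `render_model_card`, the row labels, and the values are hypothetical and are not copied from model_card_template.md, which remains the reference layout.

```python
from typing import Mapping


def render_model_card(answers: Mapping[str, str]) -> str:
    """Render question/answer pairs as a numbered two-column Markdown table."""
    lines = ["| Item | Value |", "| --- | --- |"]
    for number, (question, answer) in enumerate(answers.items(), start=1):
        lines.append(f"| {number}. {question} | {answer} |")
    return "\n".join(lines)


# Hypothetical answers for illustration only.
example_answers = {
    "Model publicly available?": "Yes",
    "Time to train final model": "48 hours",
    "Location for computations": "Germany",
}
print(render_model_card(example_answers))
```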

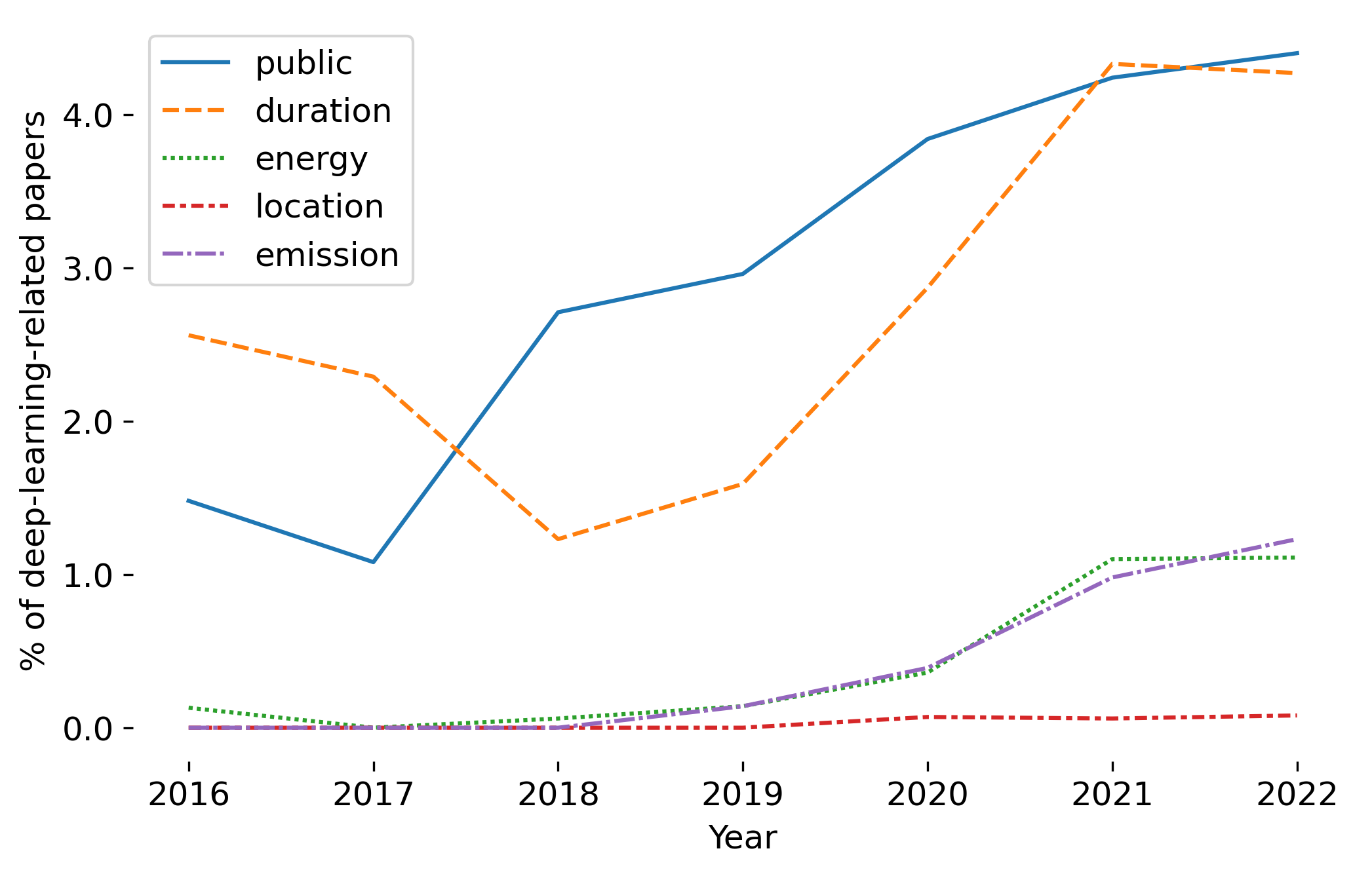

Towards_Climate_Awareness.ipynb is a collaborative notebook with the code used to conduct our survey of 2016-2022 papers from the ACL Anthology. The following figure from the paper visualizes how the proportion of deep-learning-related *ACL papers discussing each of the following topics has developed: public model weights, duration of model training or optimization, energy consumption, location where computations were performed, and emission of GHG.

It's Easy Being Green in Machine Learning, blog post by Dustin Wright.