- Manual link: https://www.conduktor.io/kafka/what-is-apache-kafka/

-

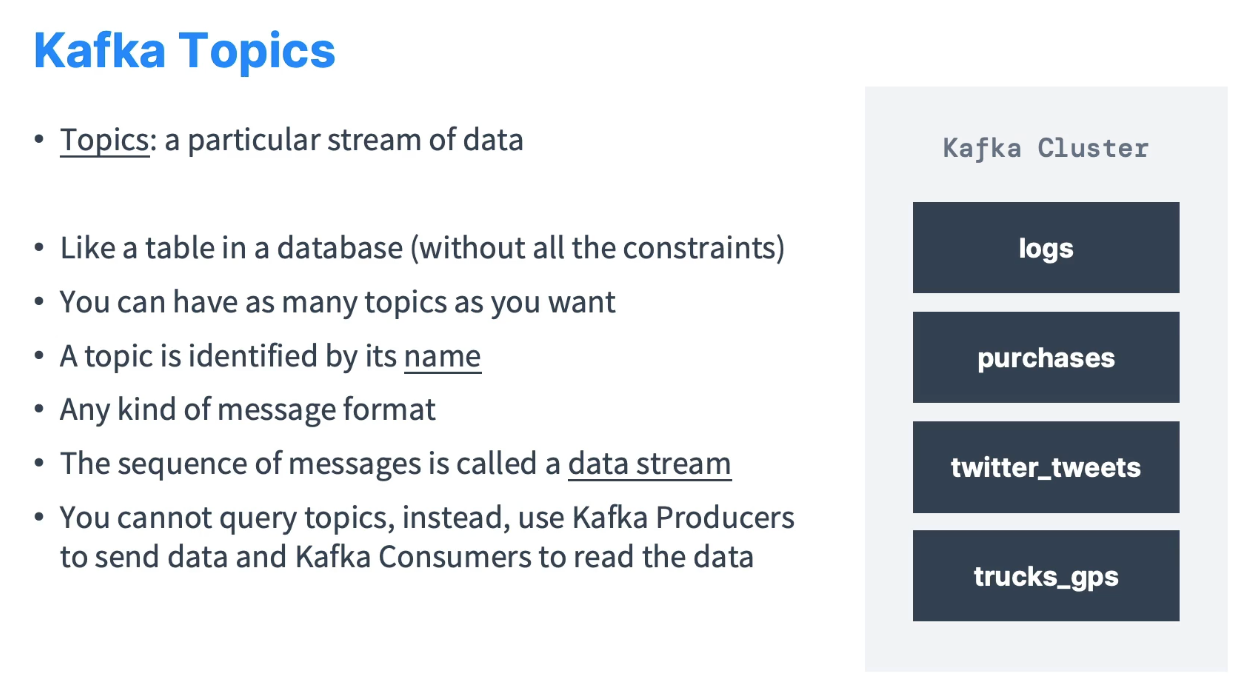

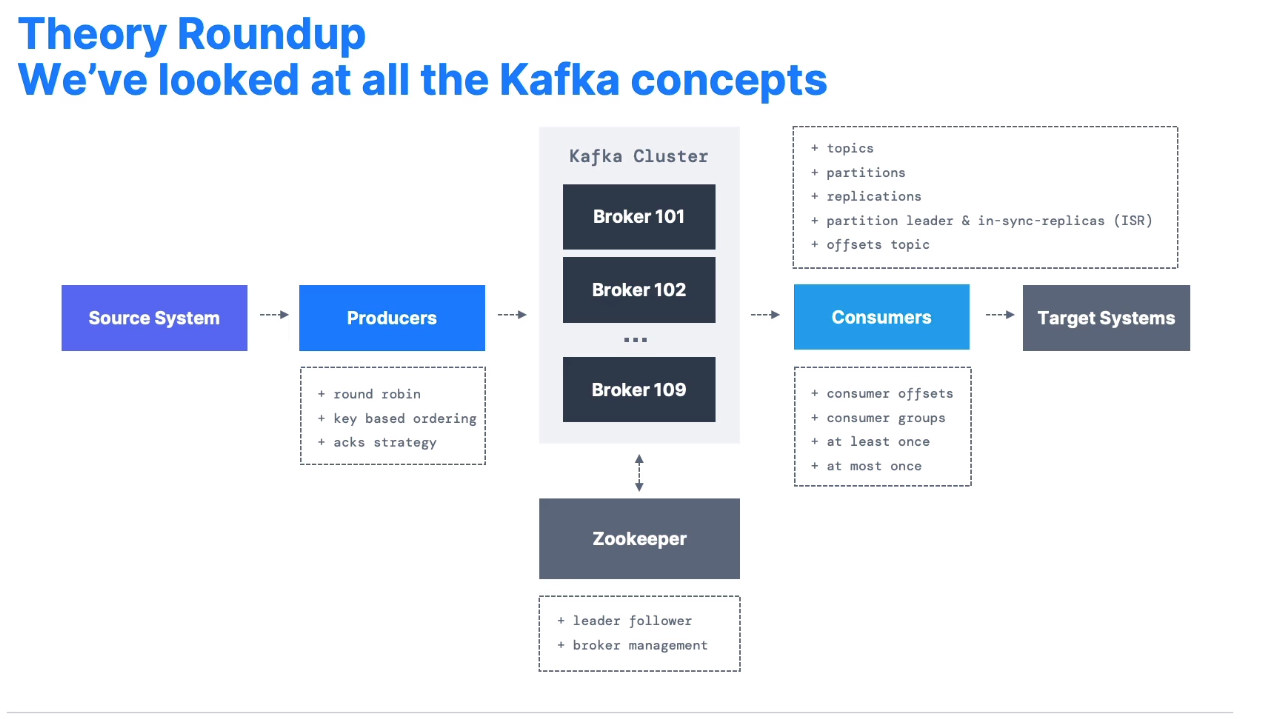

Topics:

-

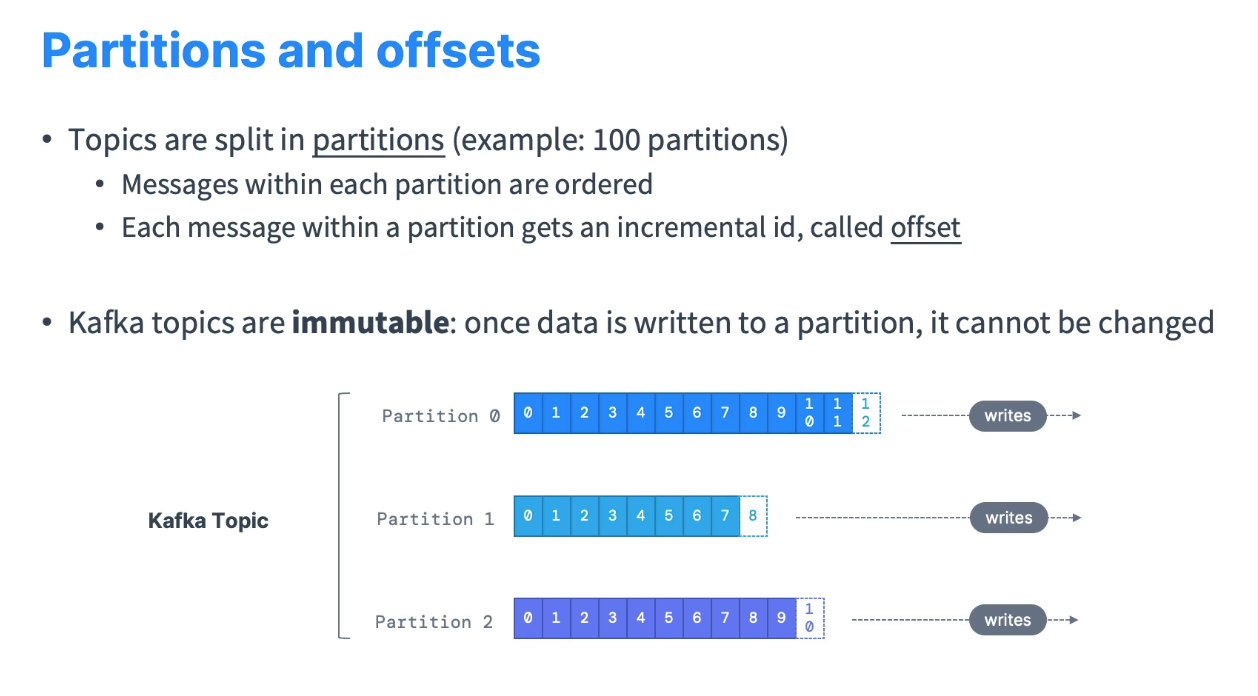

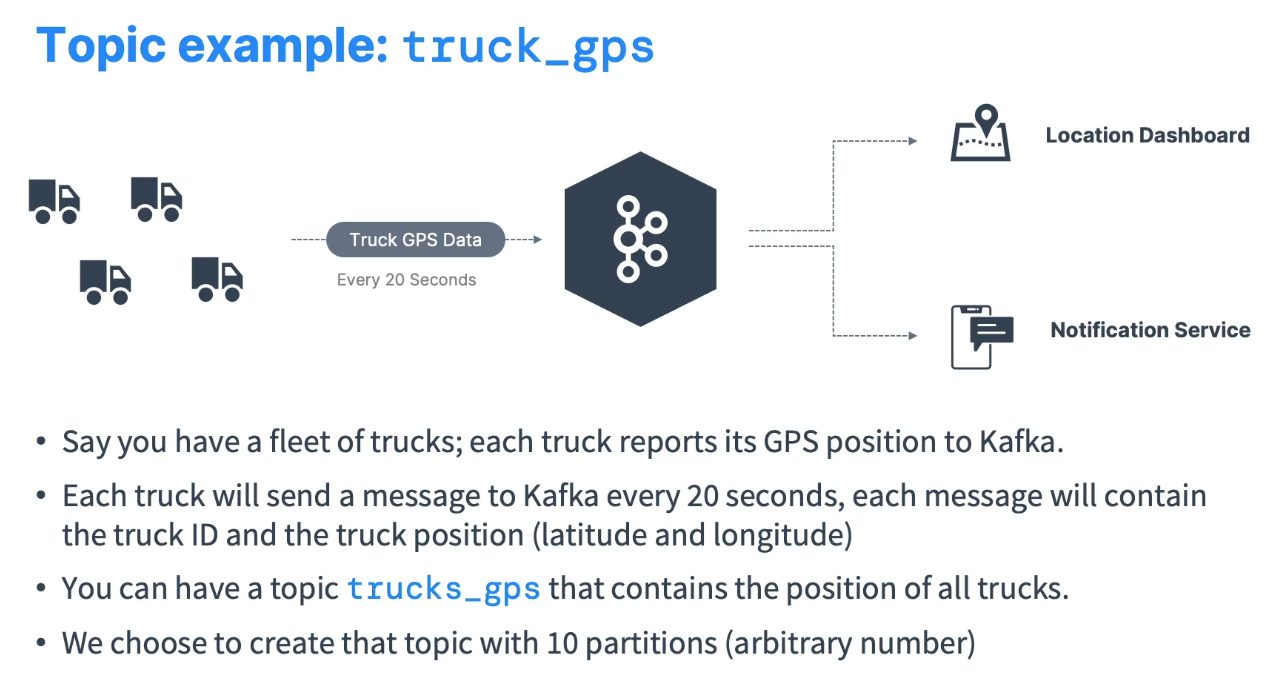

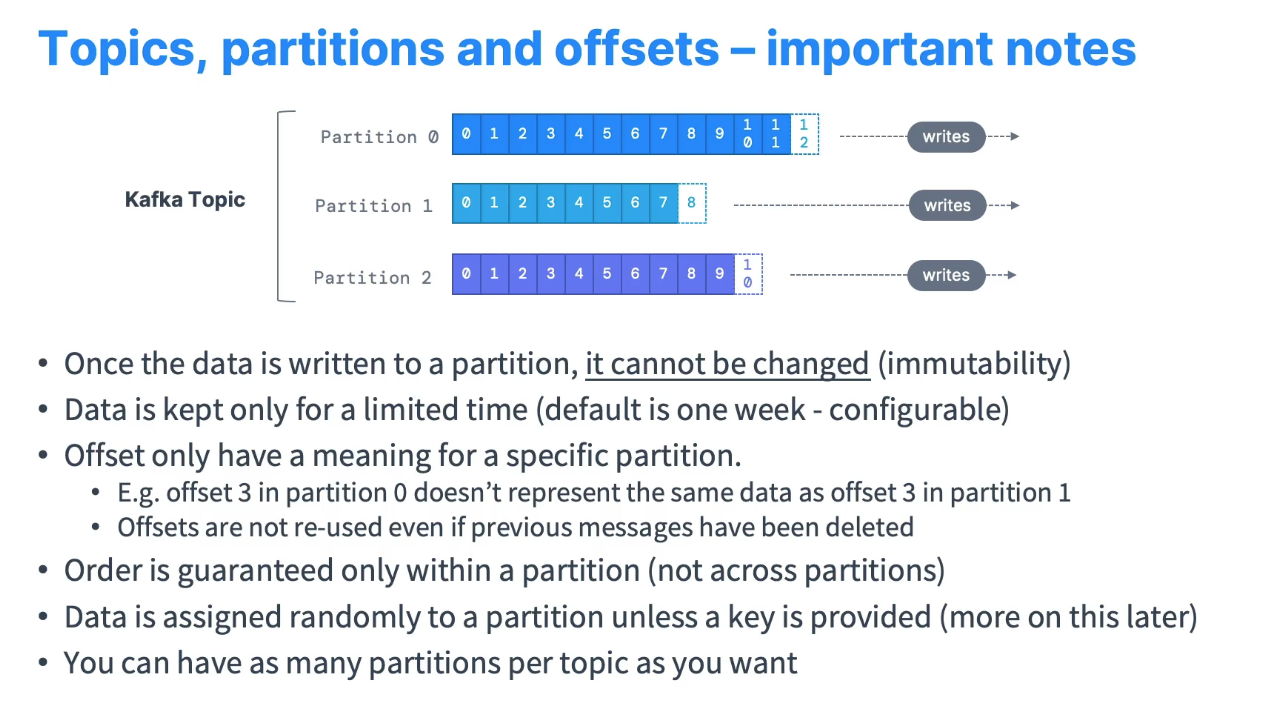

Partitions and offsets:

-

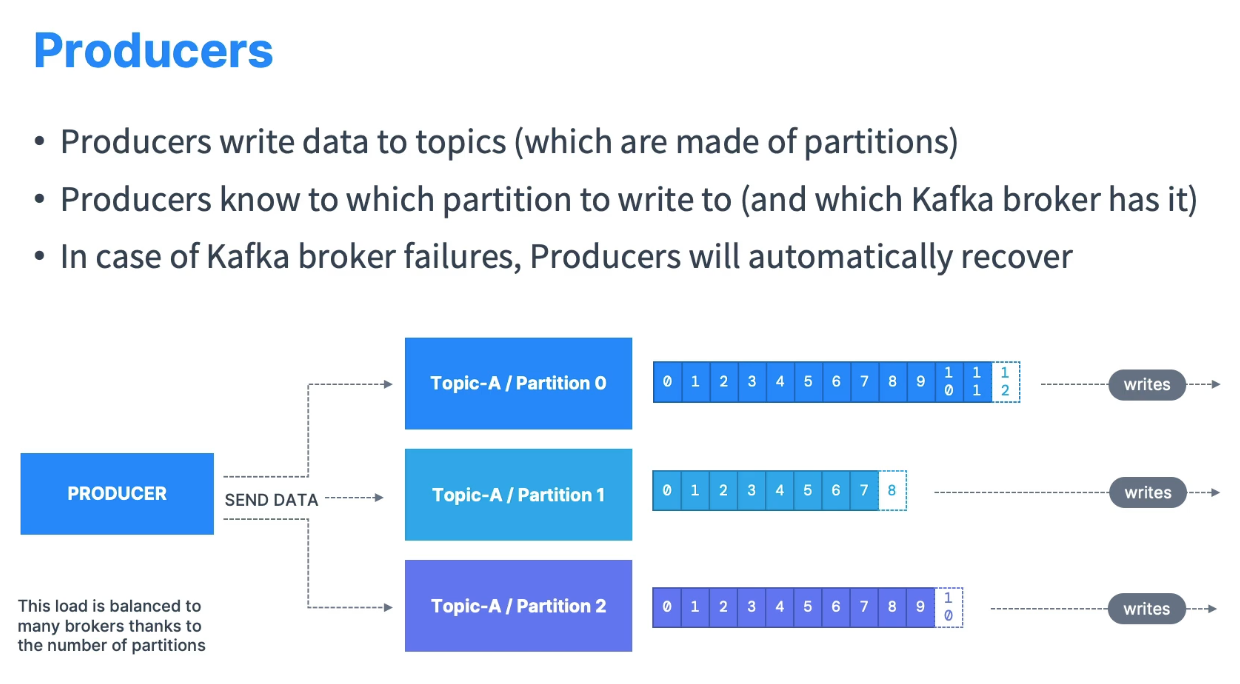

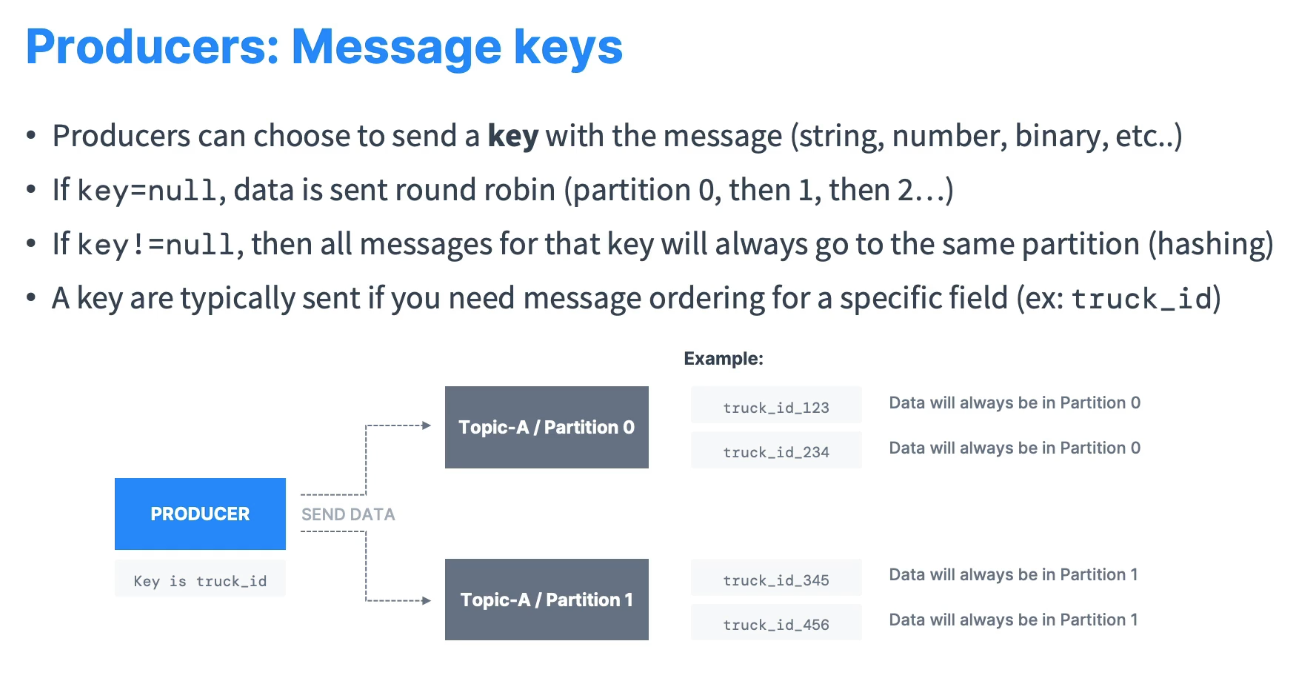

Producers and Message Keys:

-

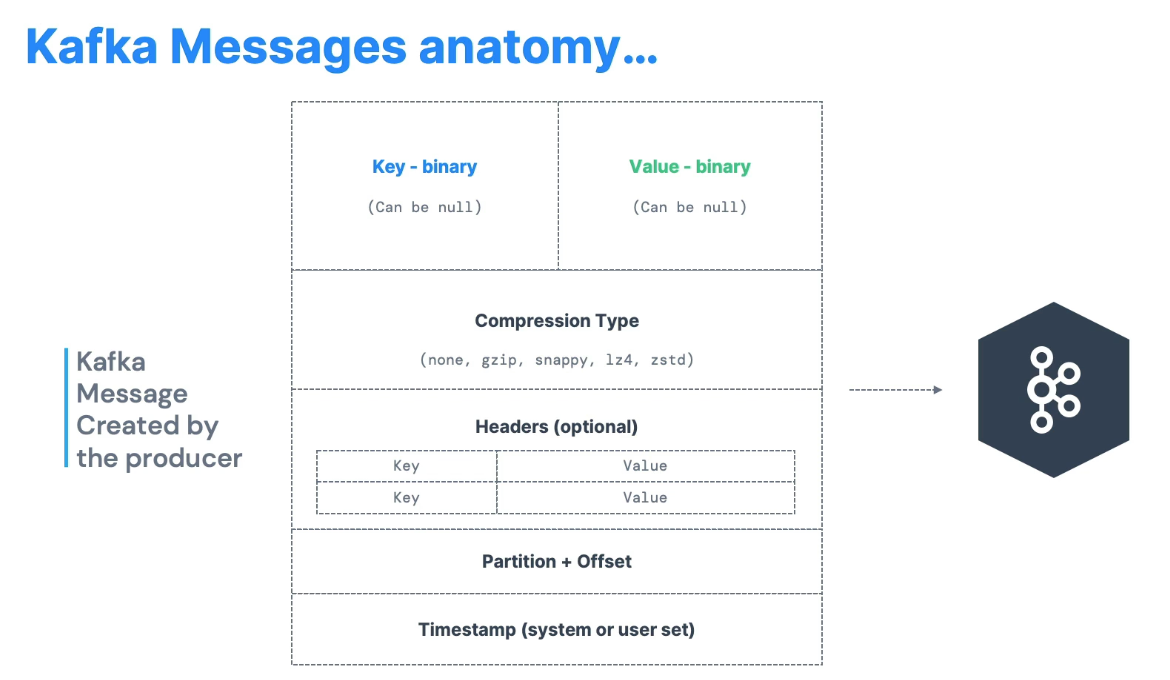

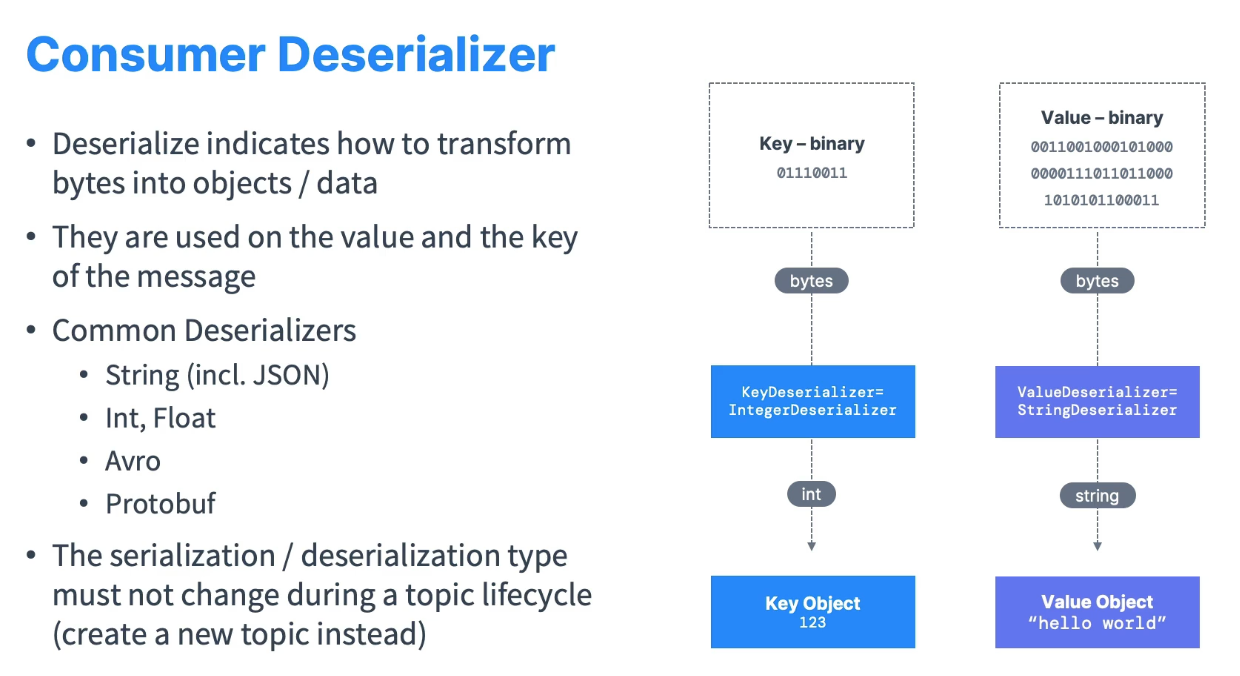

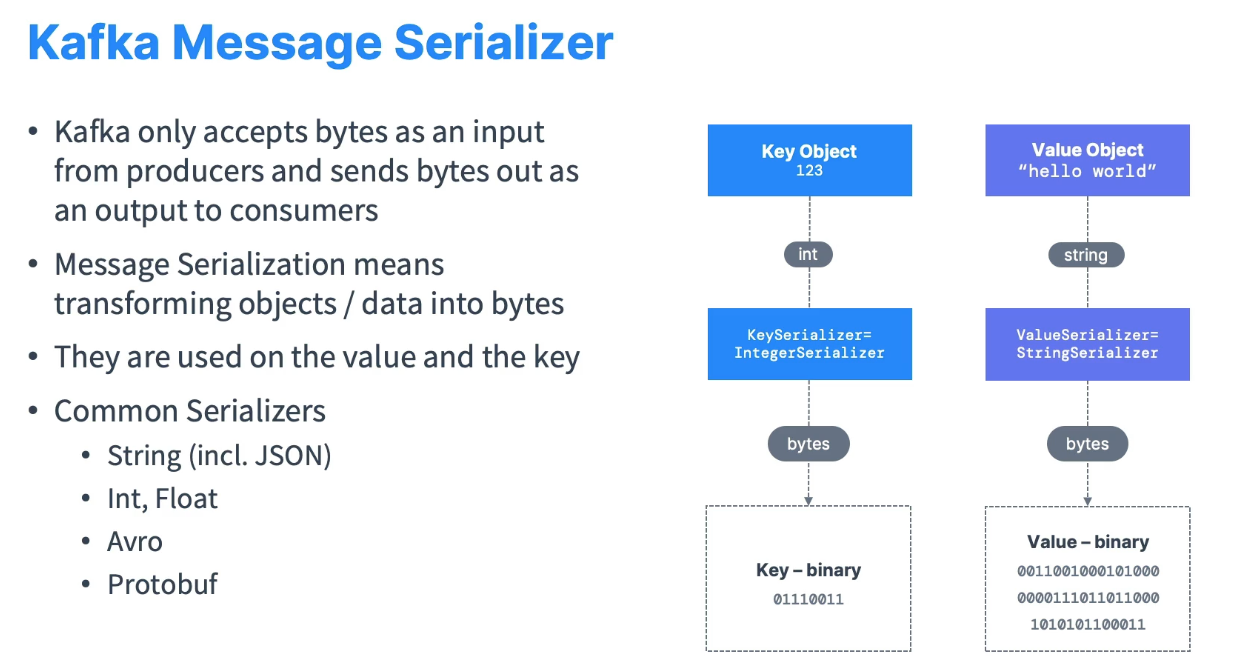

Kafka Message Serialization:

-

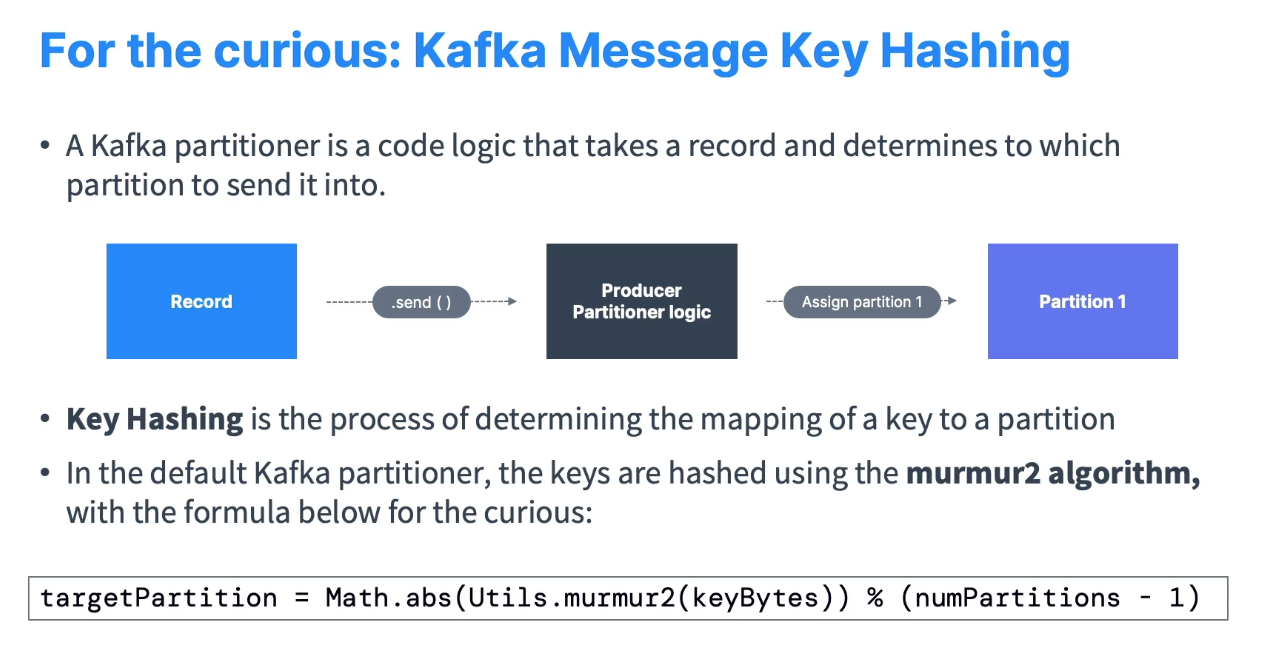

MurmurHash is a non-cryptographic hash function suitable for general hash-based lookup.[1][2][3] It was created by Austin Appleby in 2008[4] and is currently hosted on GitHub along with its test suite named 'SMHasher'. It also exists in a number of variants,[5] all of which have been released into the public domain. The name comes from two basic operations, multiply (MU) and rotate (R), used in its inner loop.

-

Unlike cryptographic hash functions, it is not specifically designed to be difficult to reverse by an adversary, making it unsuitable for cryptographic purposes.

-

-

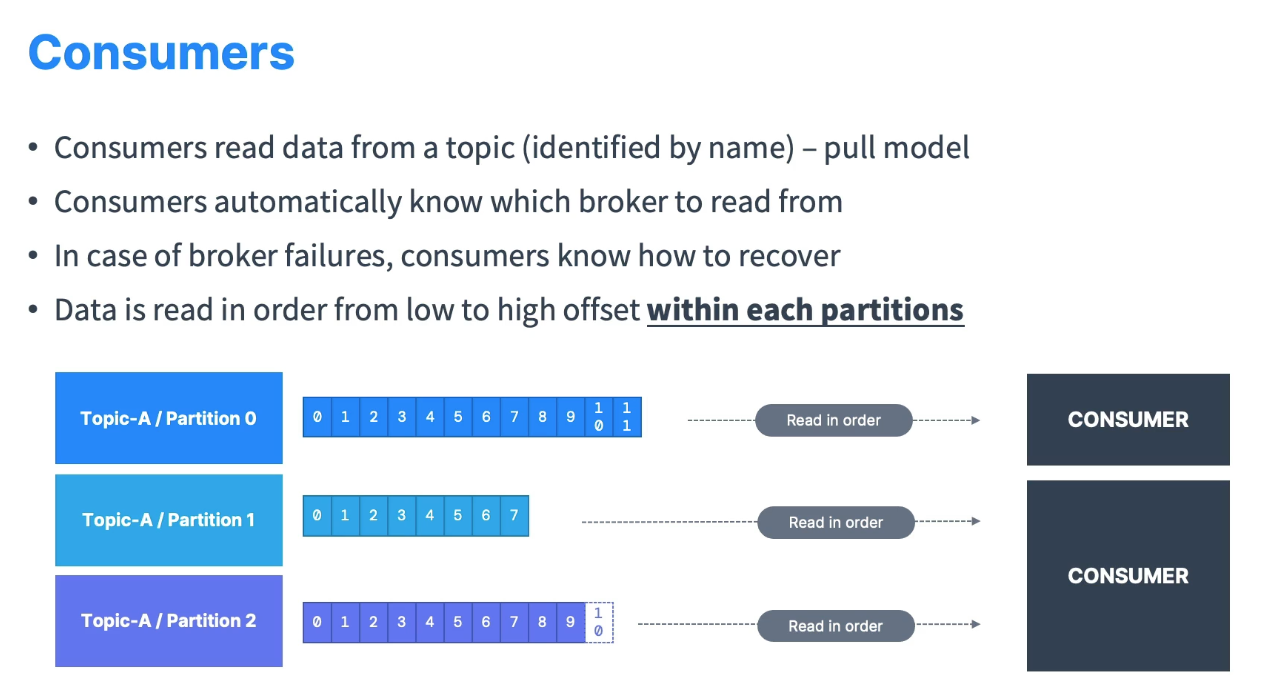

Consumers:

-

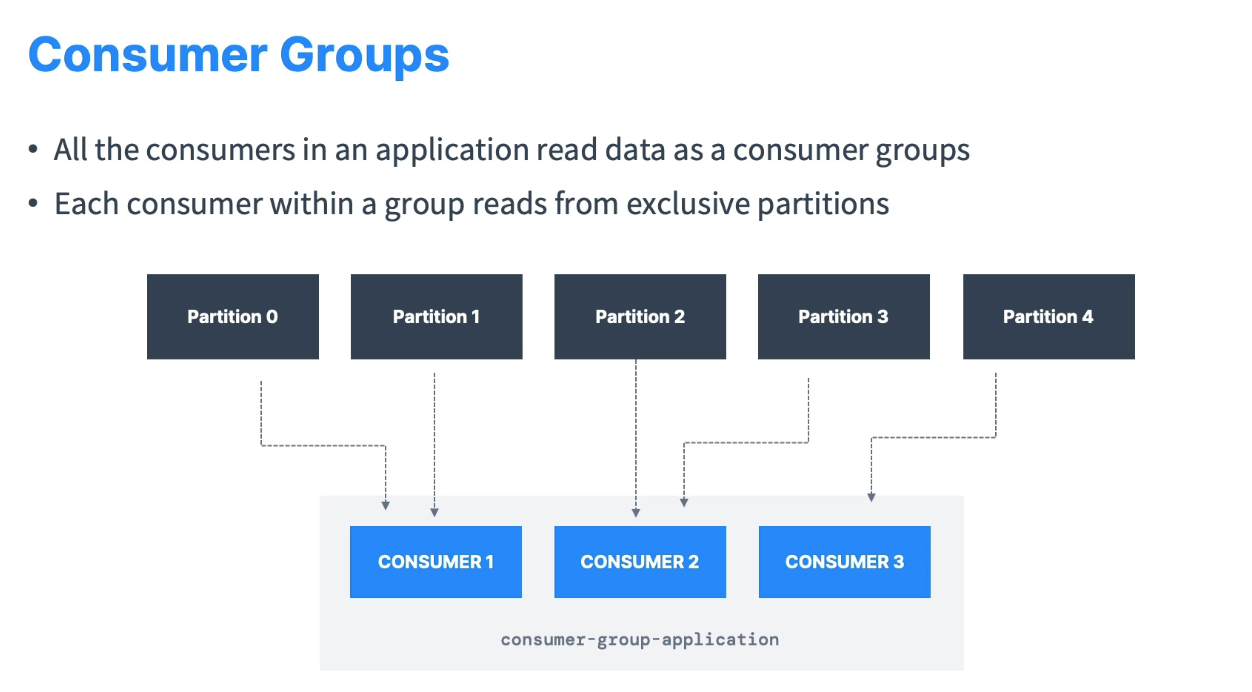

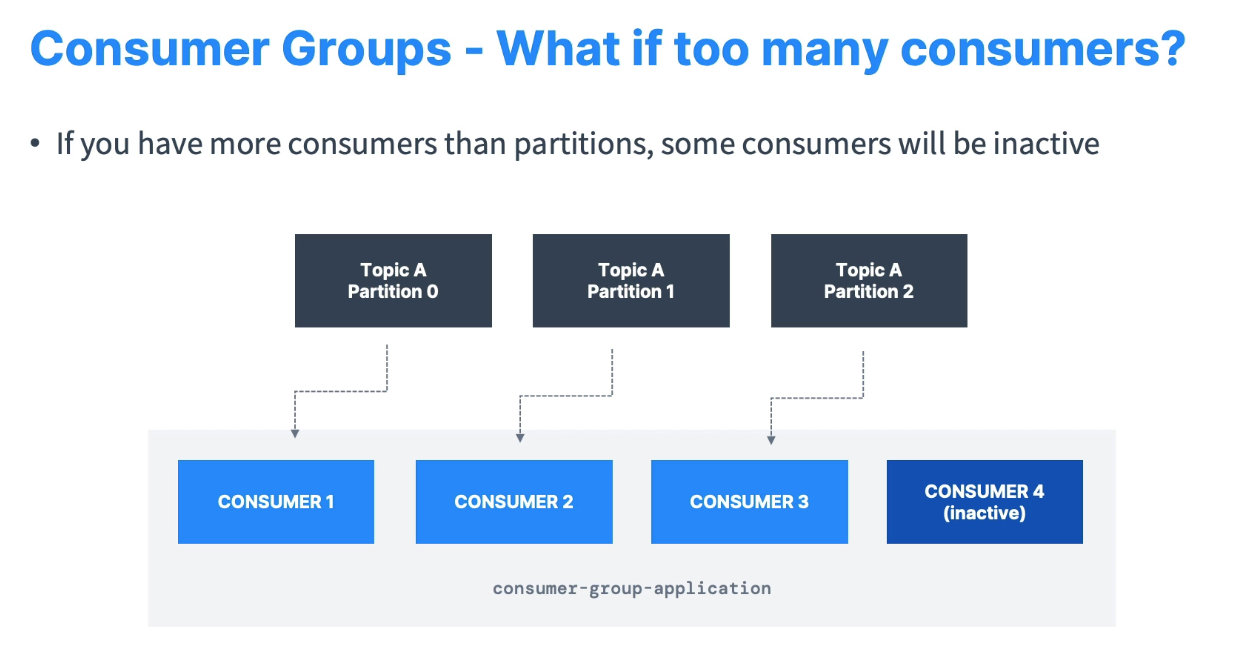

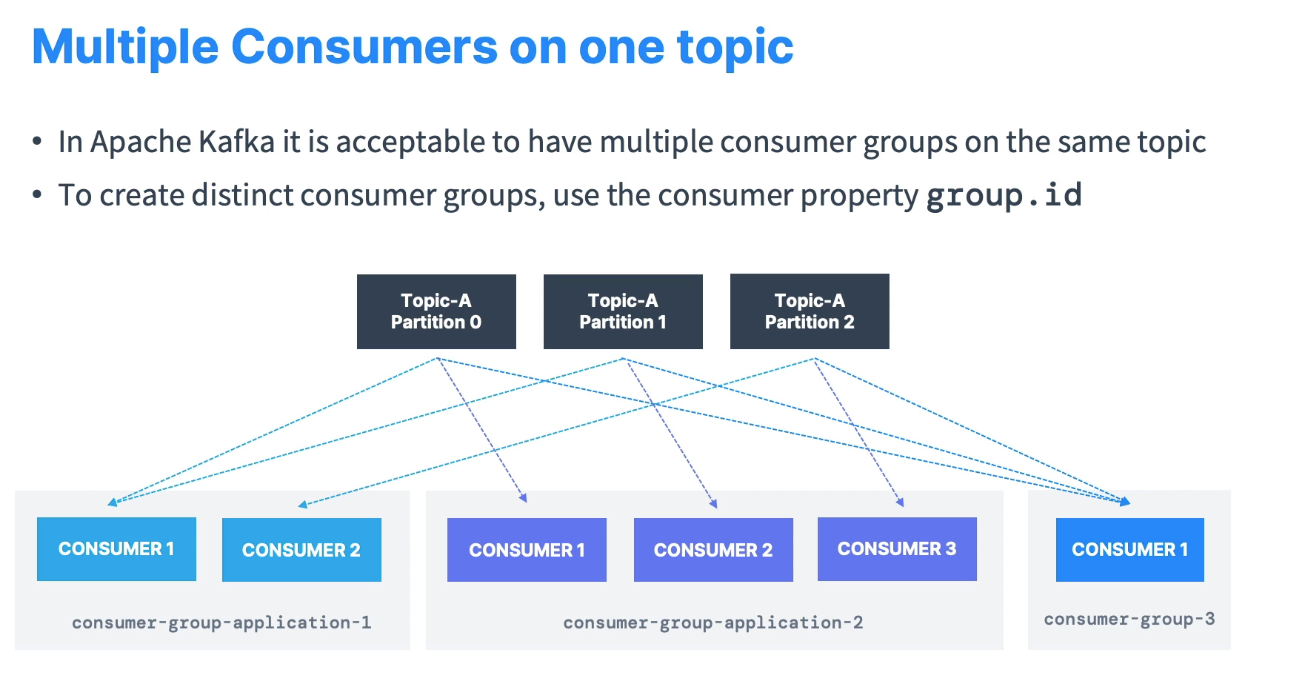

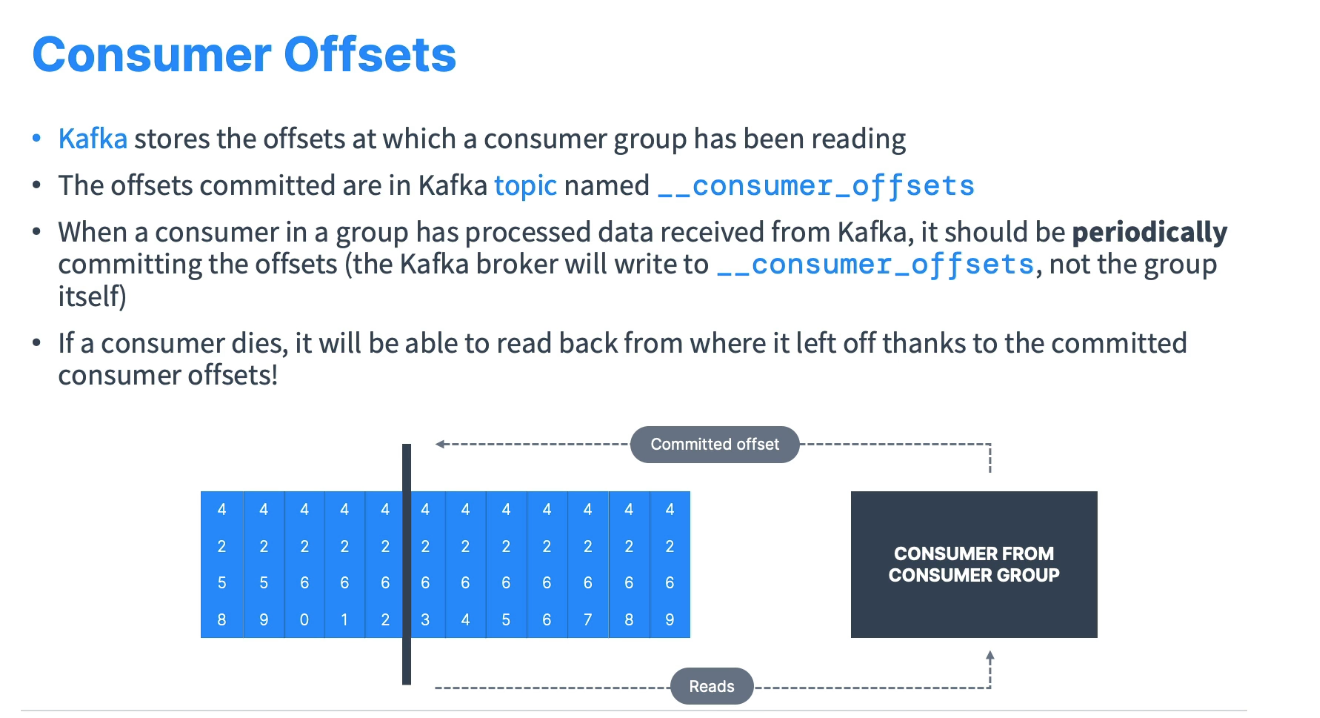

Consumer Groups & Consumer Offsets:

-

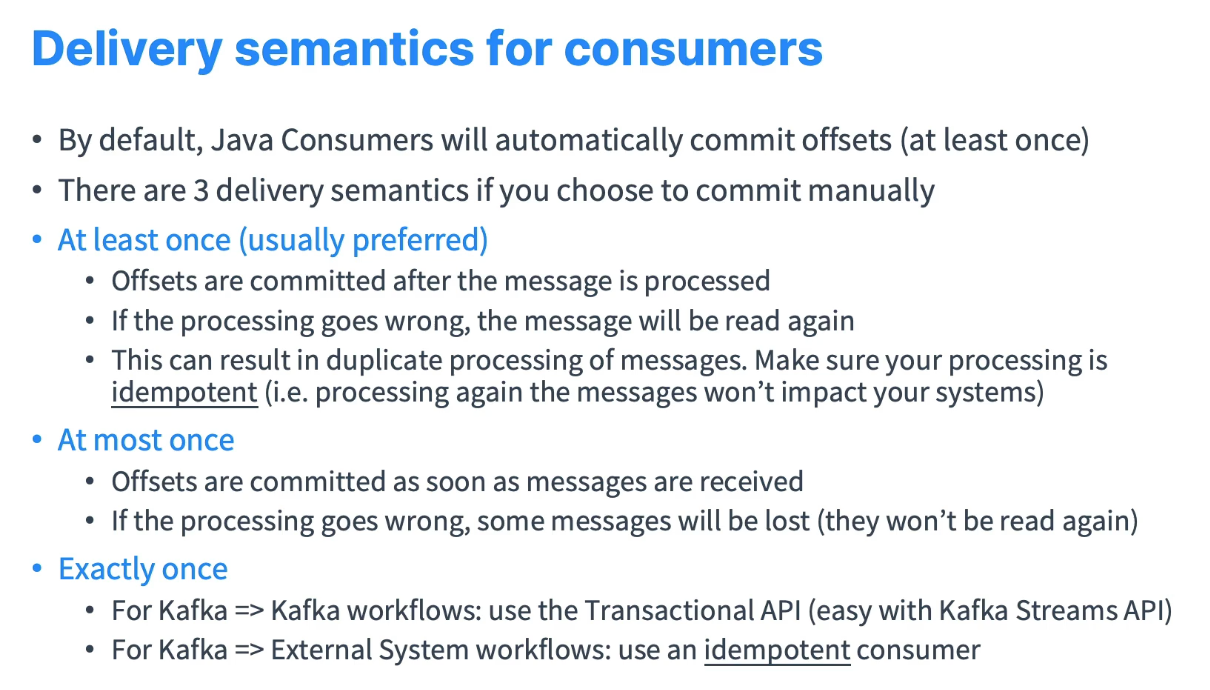

Delivery semantics for consumers:

-

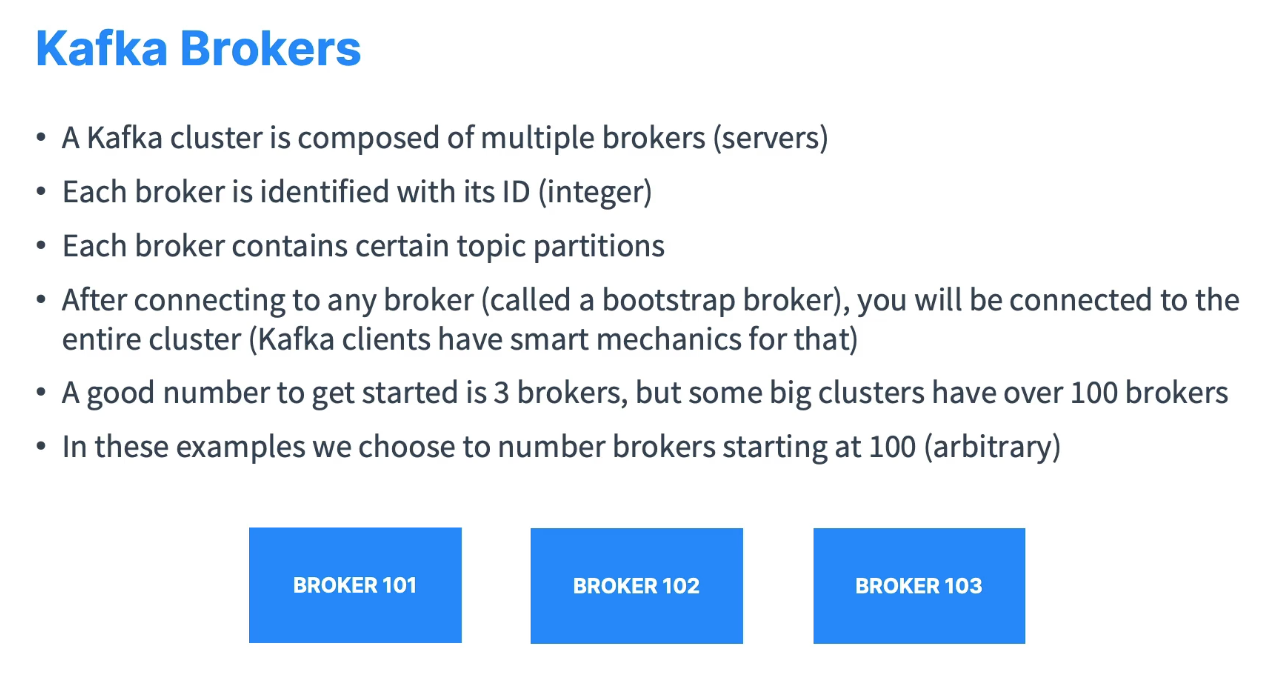

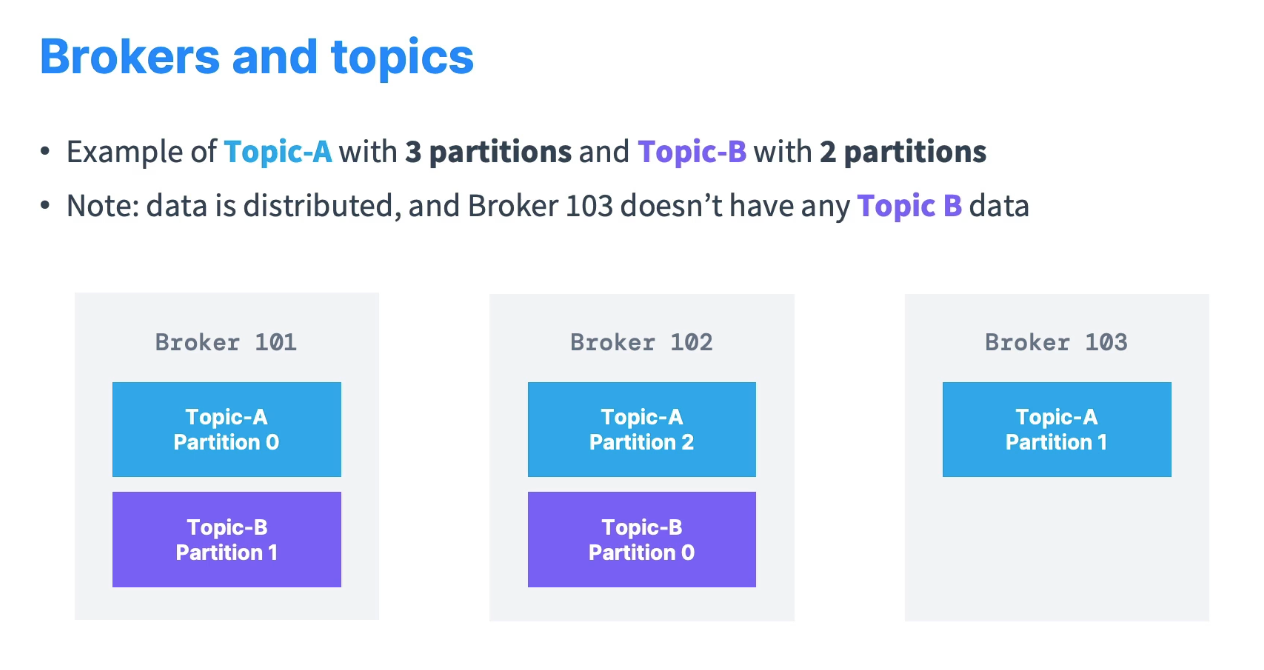

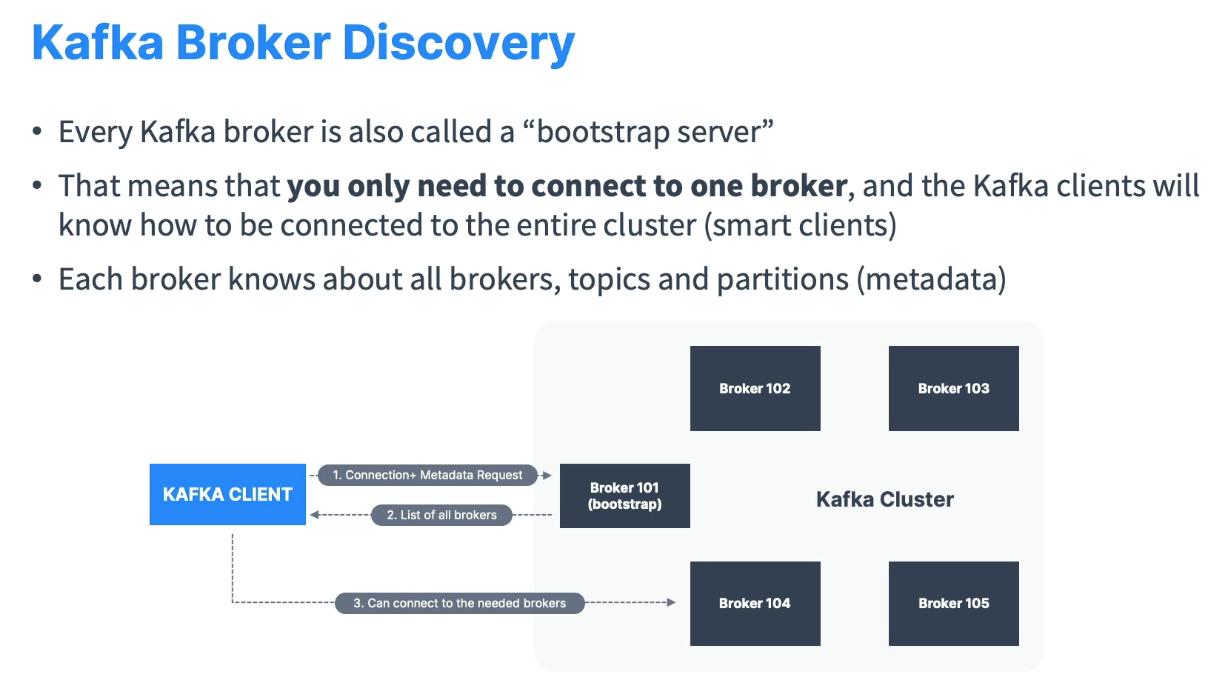

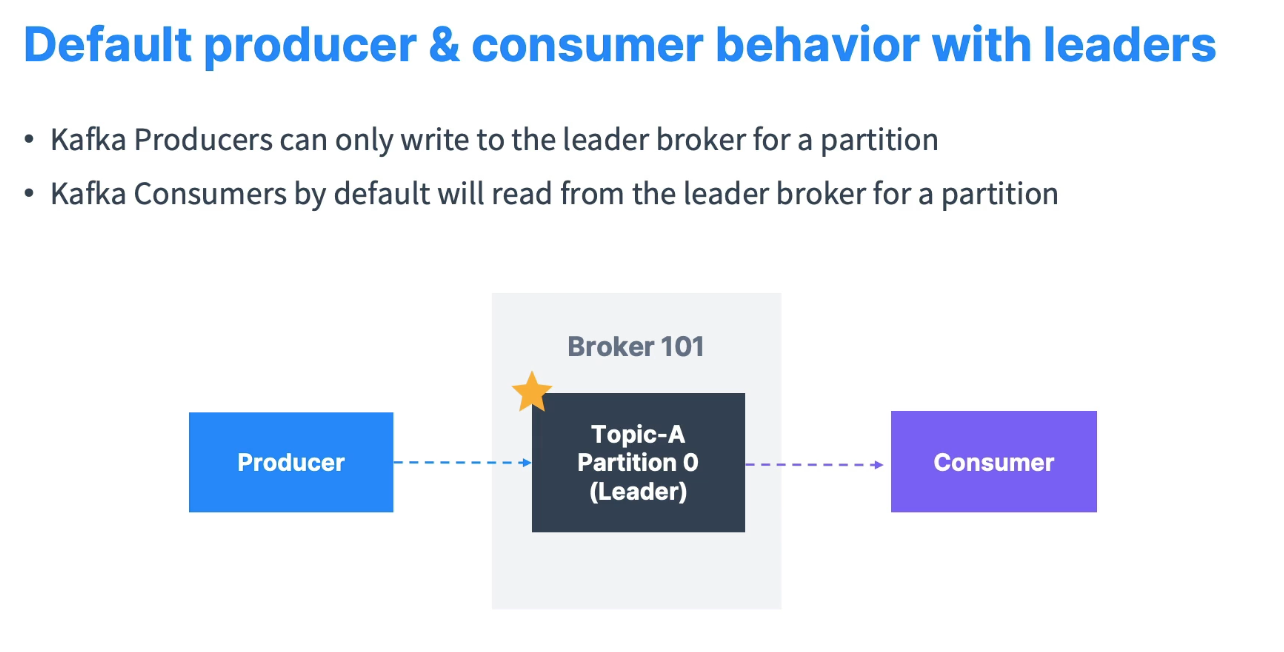

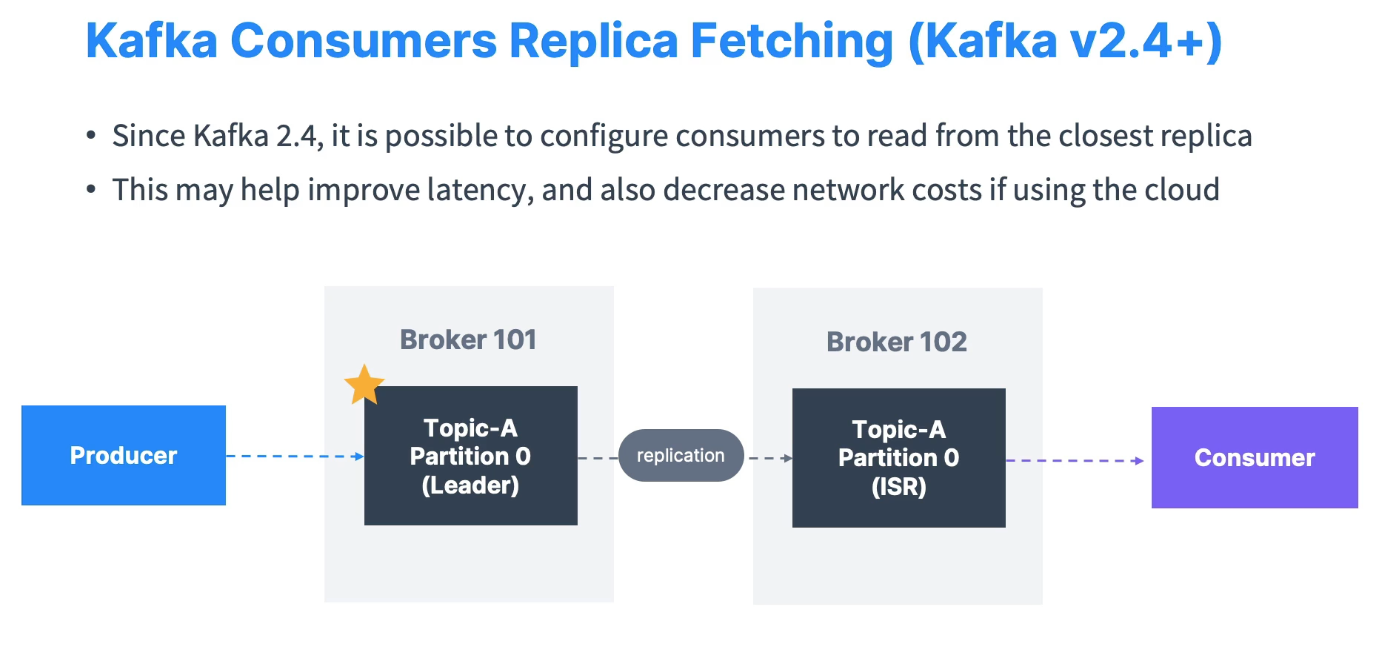

Kafka Brokers

-

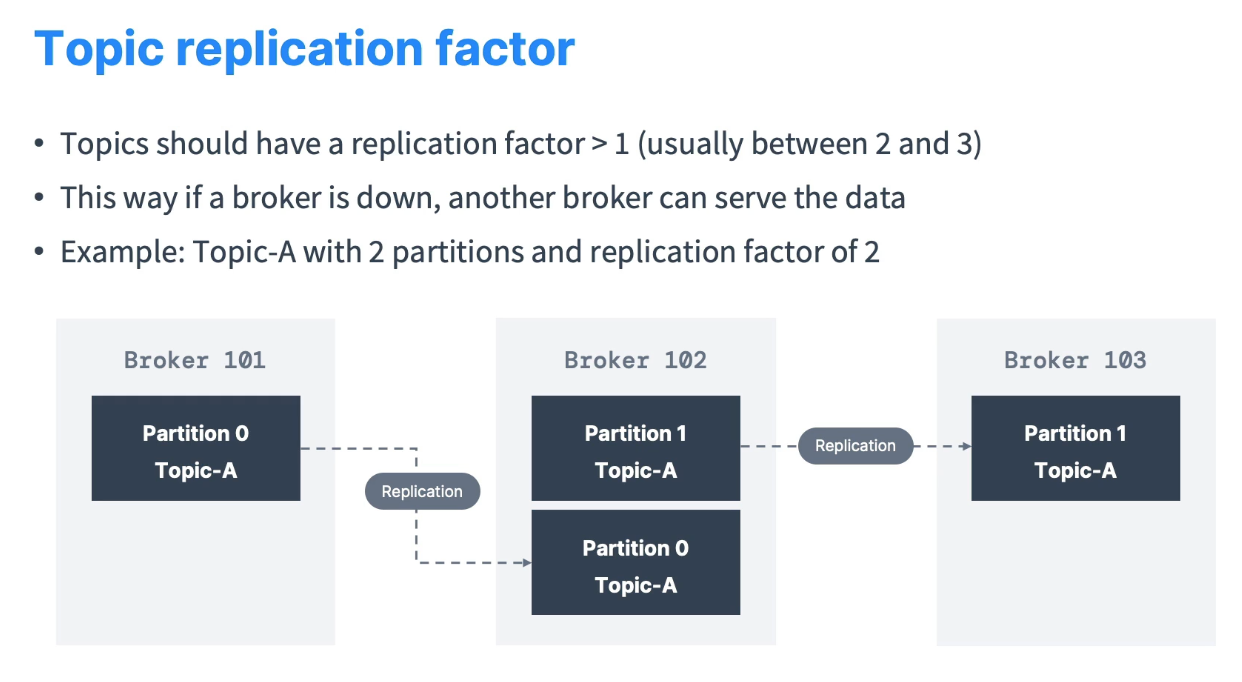

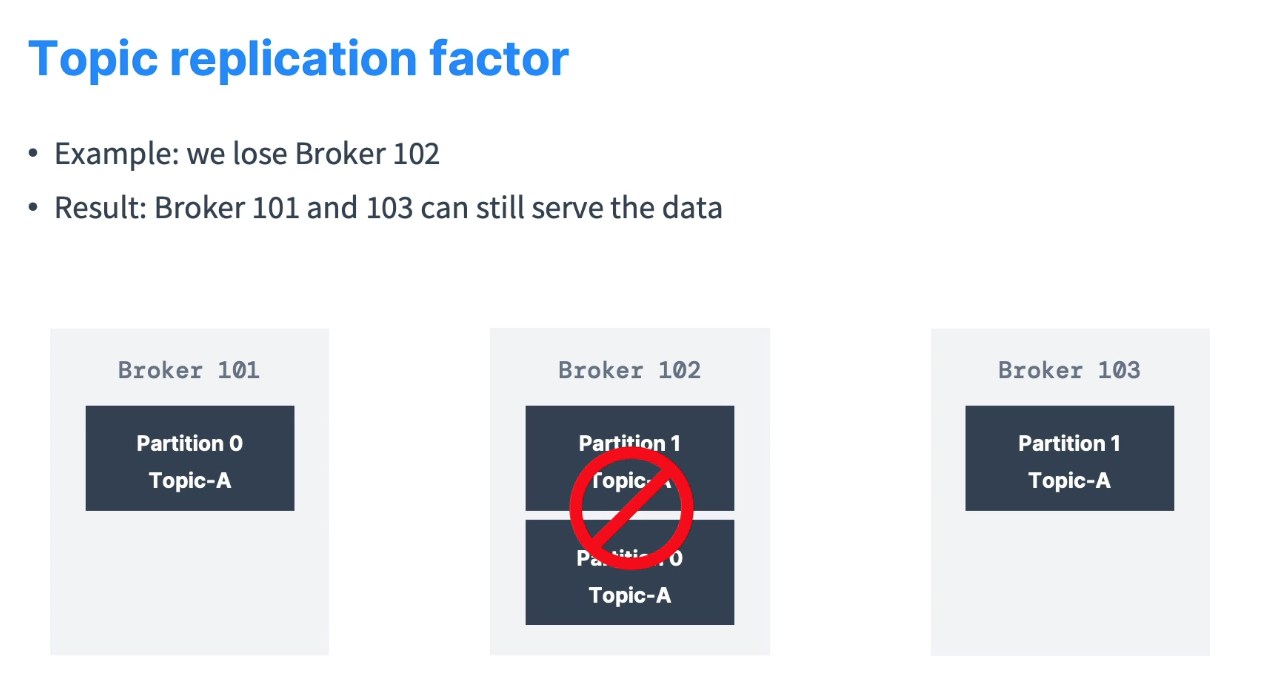

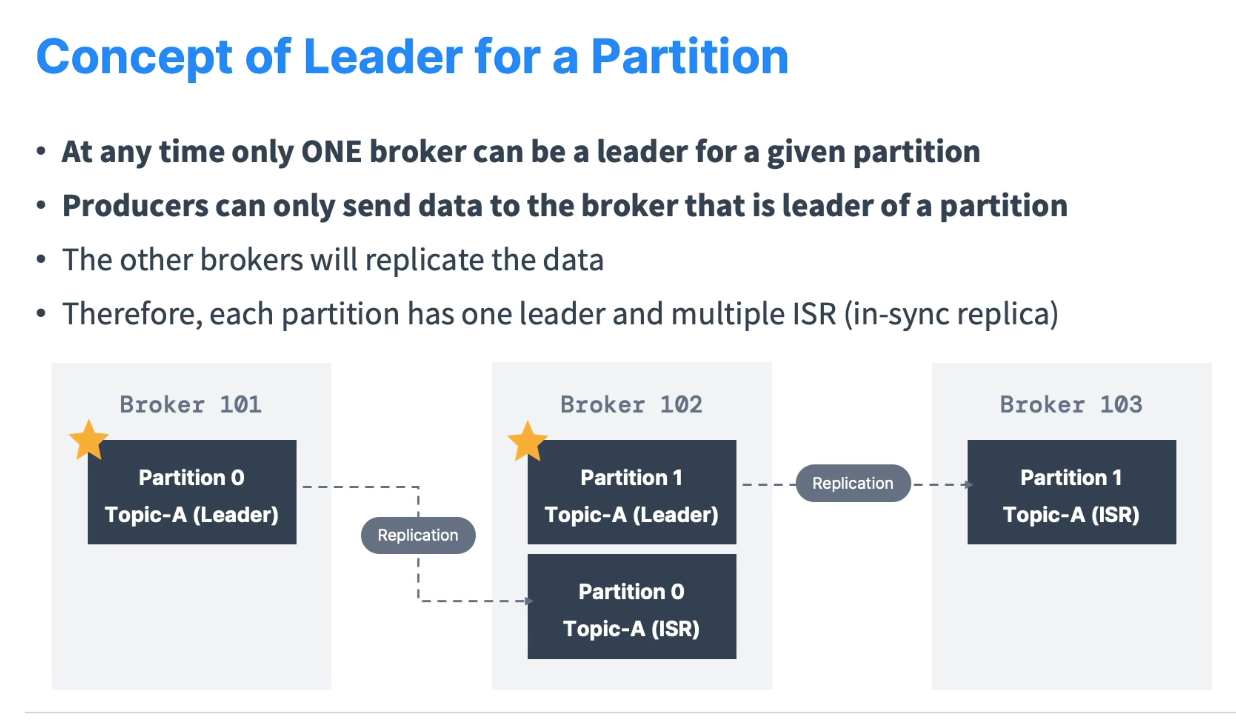

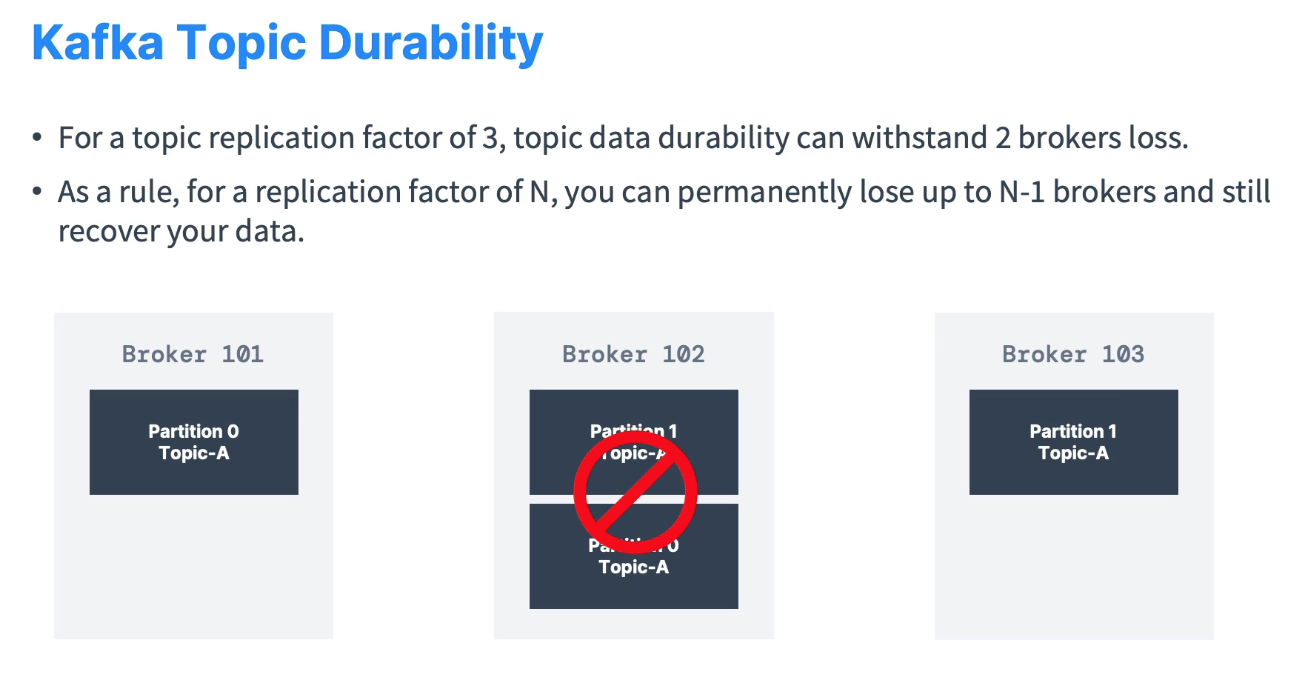

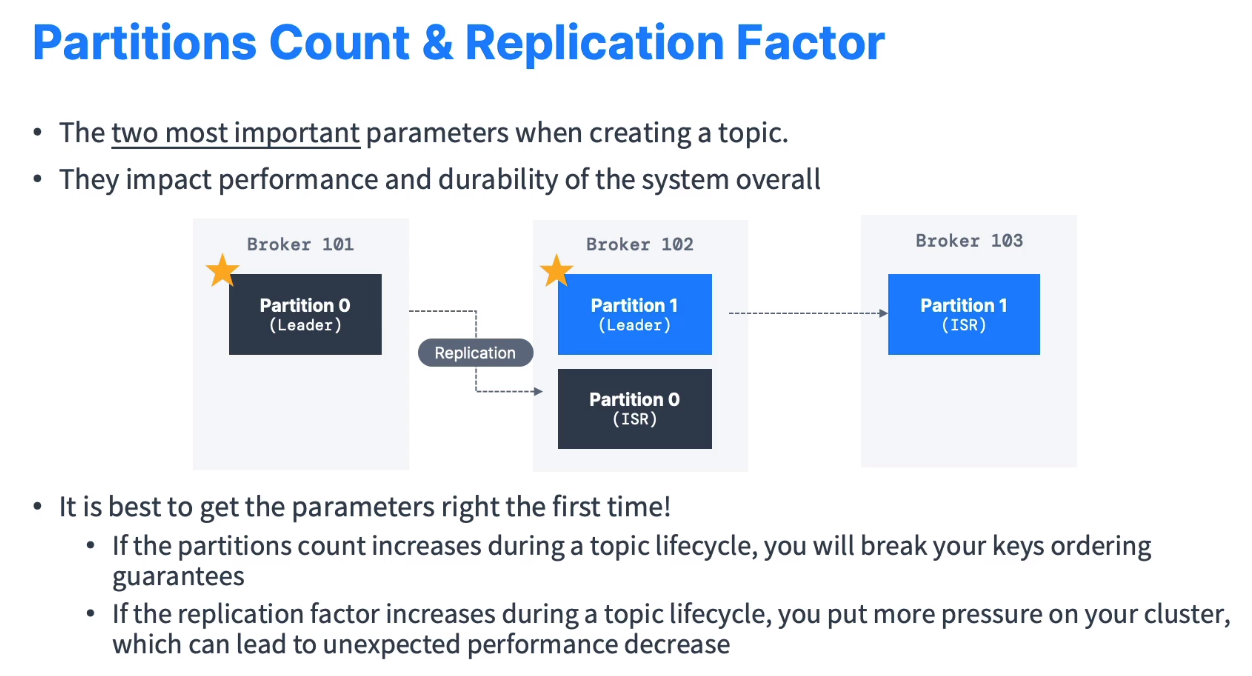

Topic replication factor

-

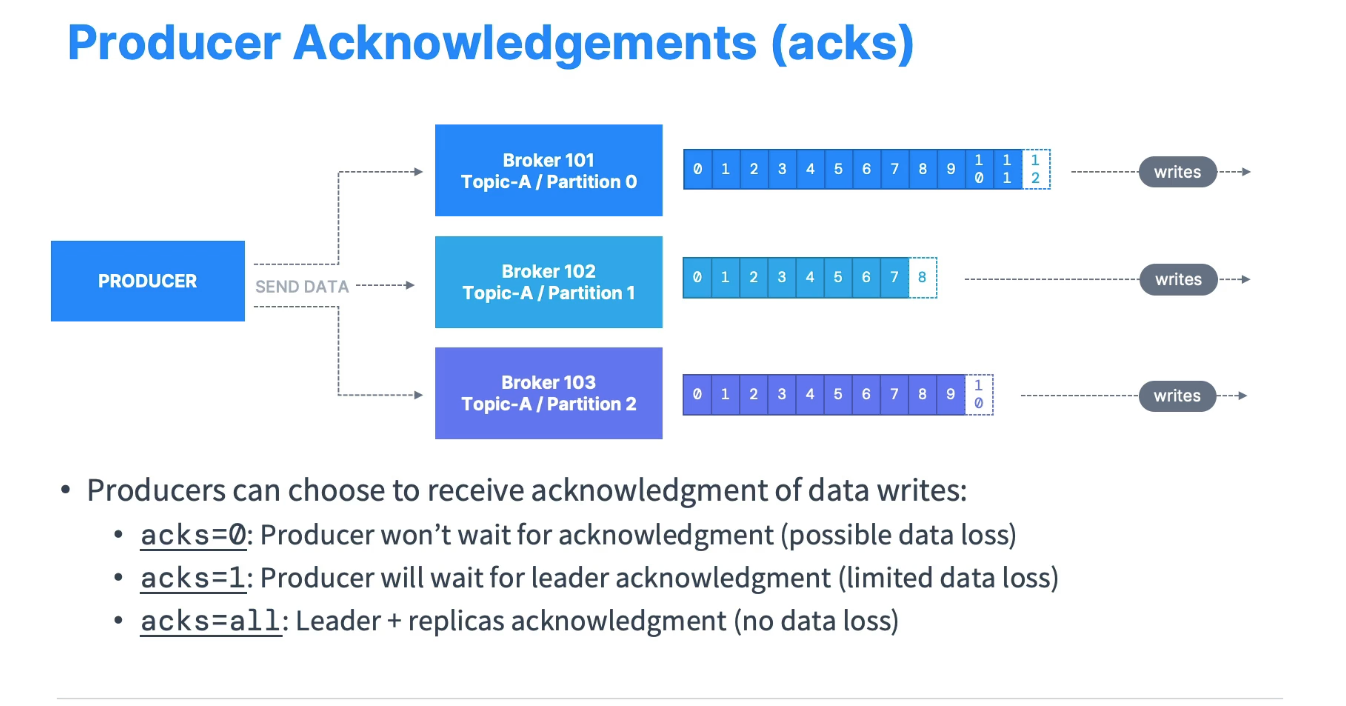

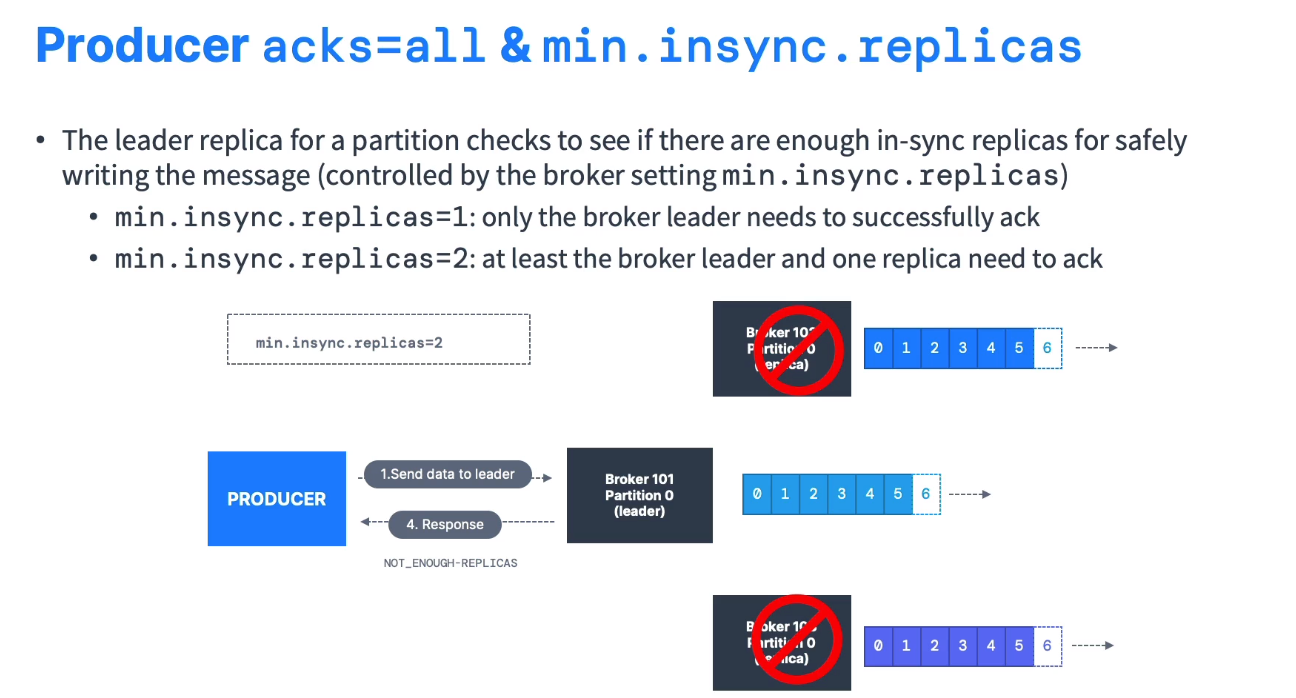

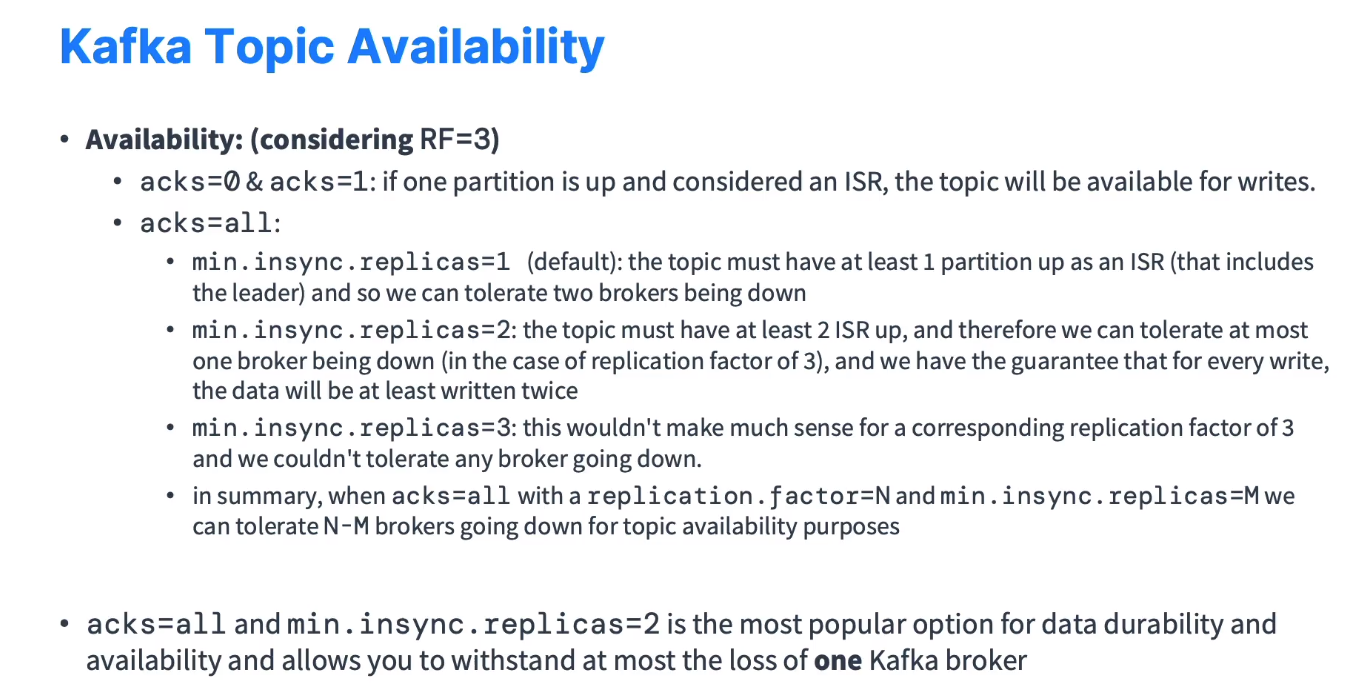

Producer Acknowledgments and Topic Durability

-

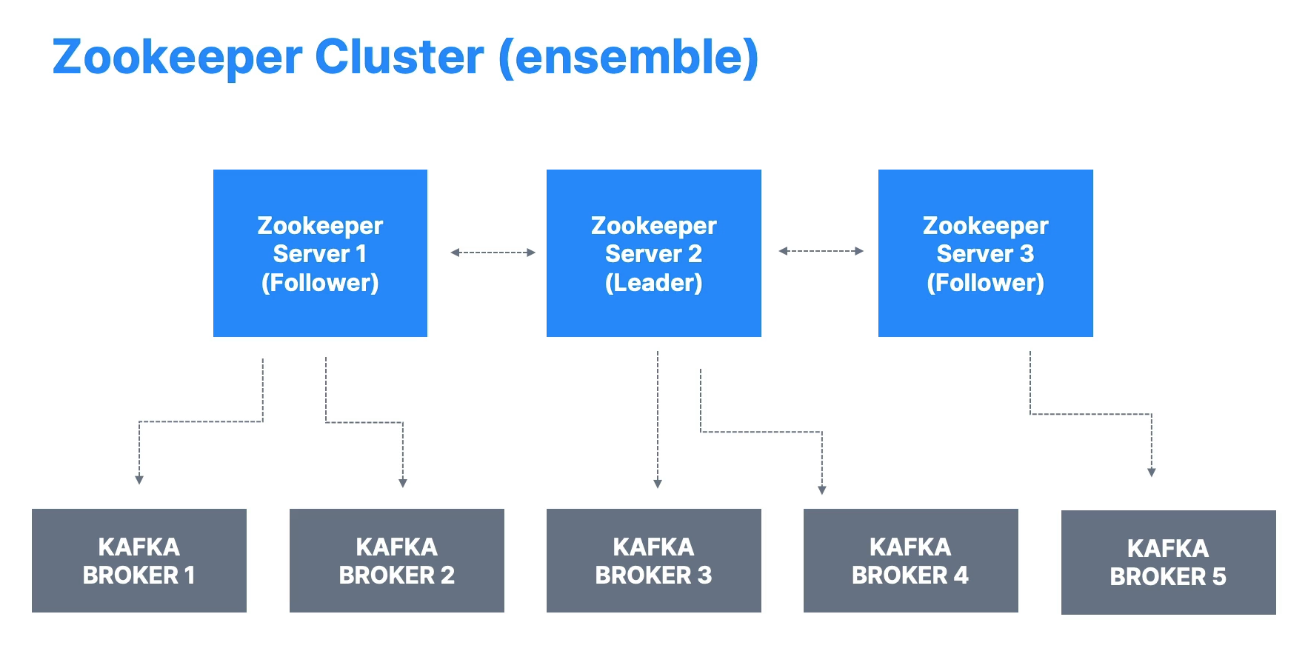

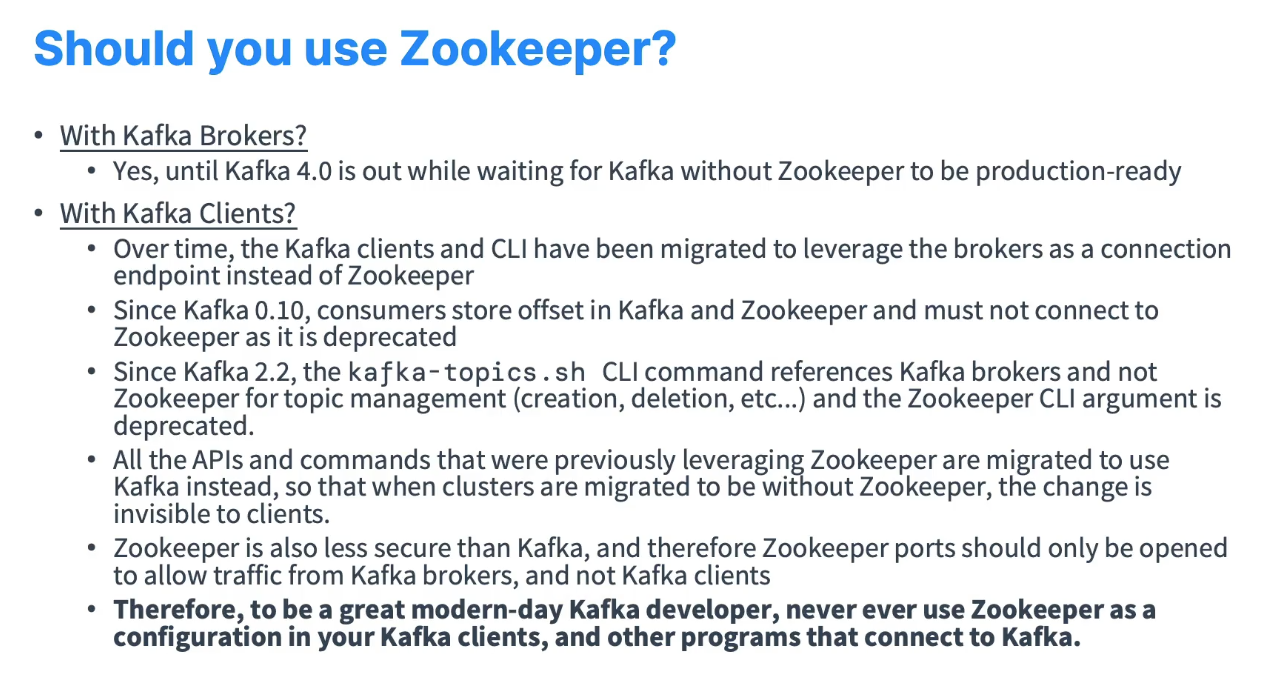

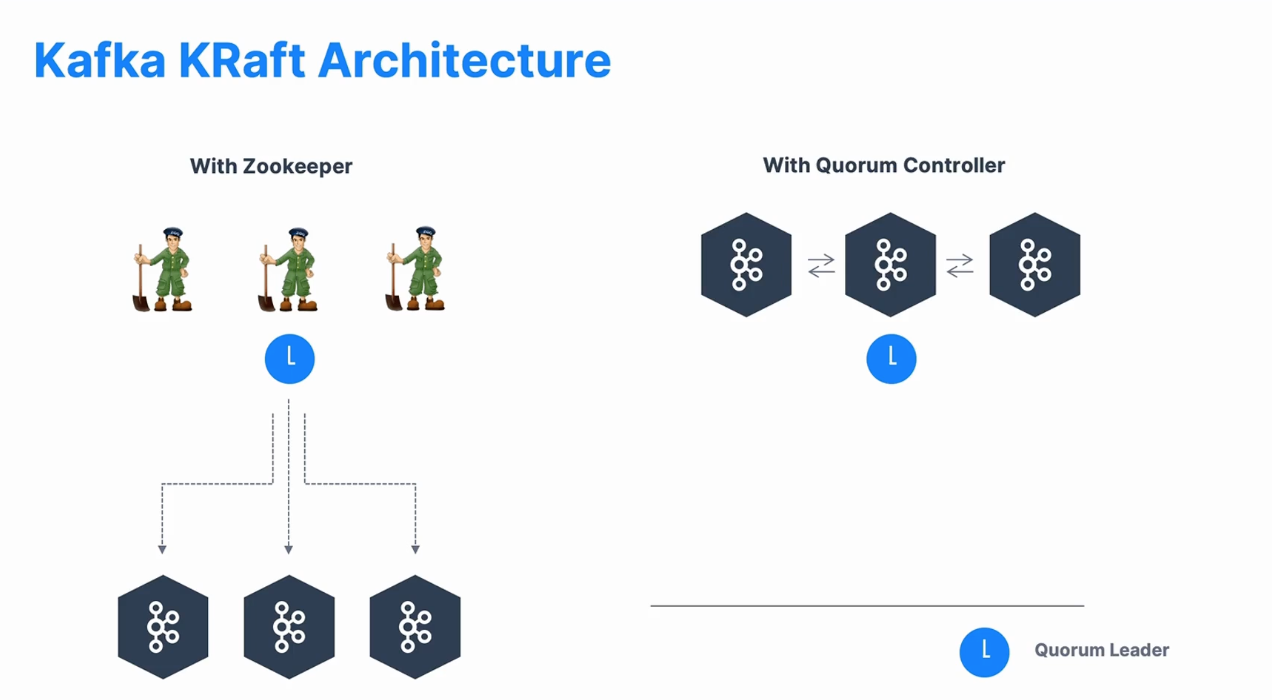

Zookeeper

-

Theory Roundup

-

Navigate to the

labfolder in this repo and rundocker-composecd lab && docker-compose up -d

-

SSH into the Kafka contained by running:

docker-compose exec kafka /bin/bashcd /opt/bitnami/kafka/bin

-

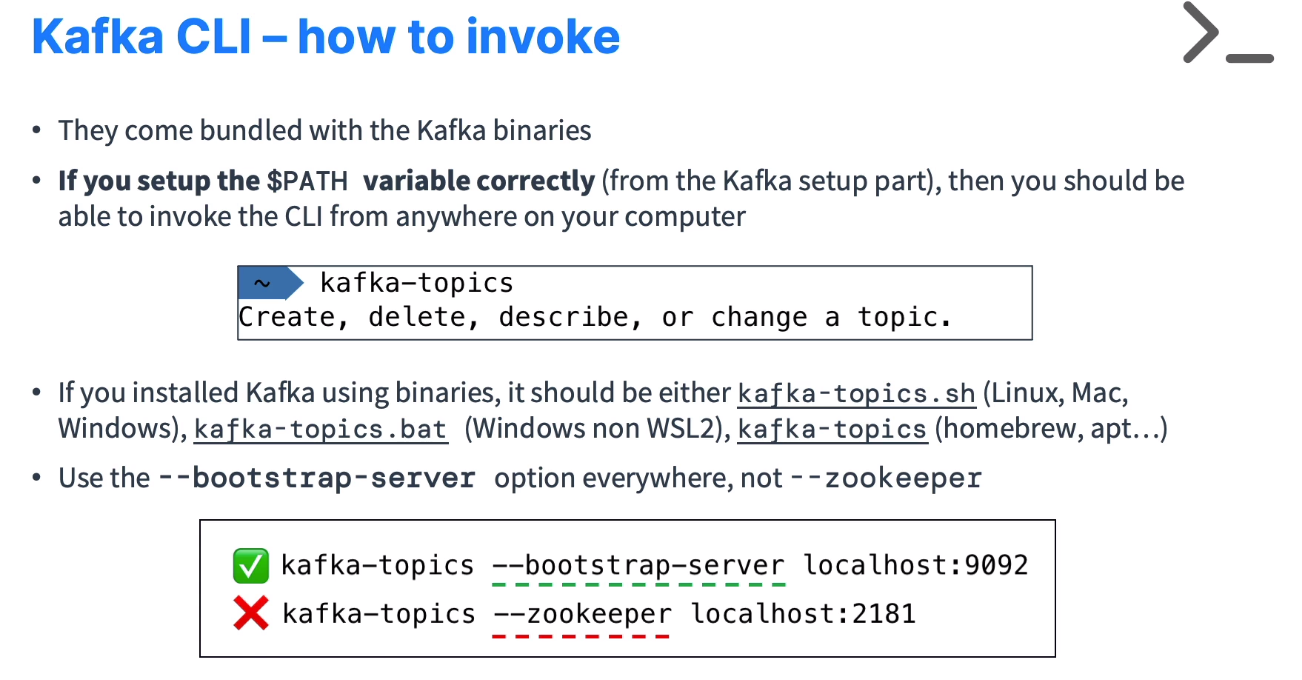

List topics inside our broker:

kafka-topics.sh --list --bootstrap-server 127.0.0.1:9092kafka-console-producer.sh --bootstrap-server 127.0.0.1:9092 --topic testkafka-console-consumer.sh --bootstrap-server 127.0.0.1:9092 --topic test --from-beginningkafka-topics.sh --bootstrap-server 127.0.0.1:9092 --topic test --describe

-

Important note:

-

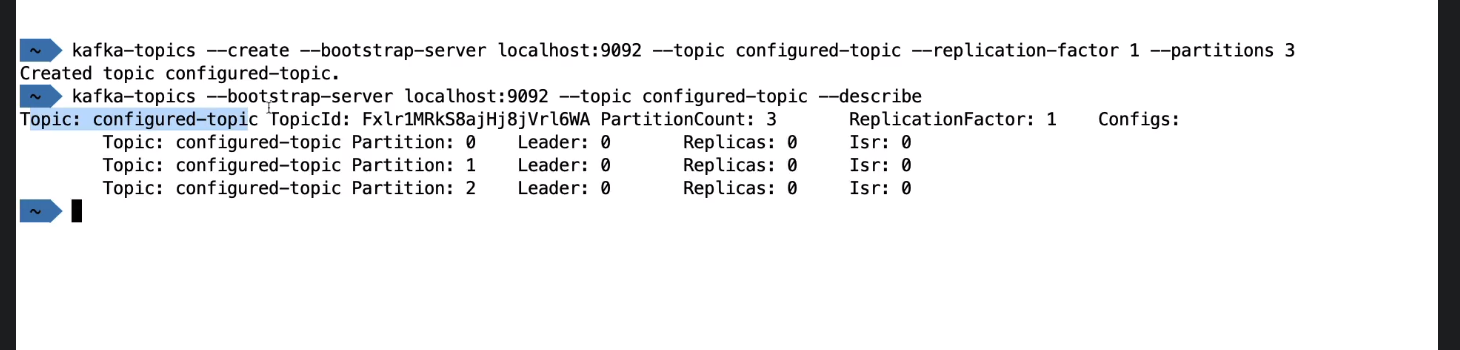

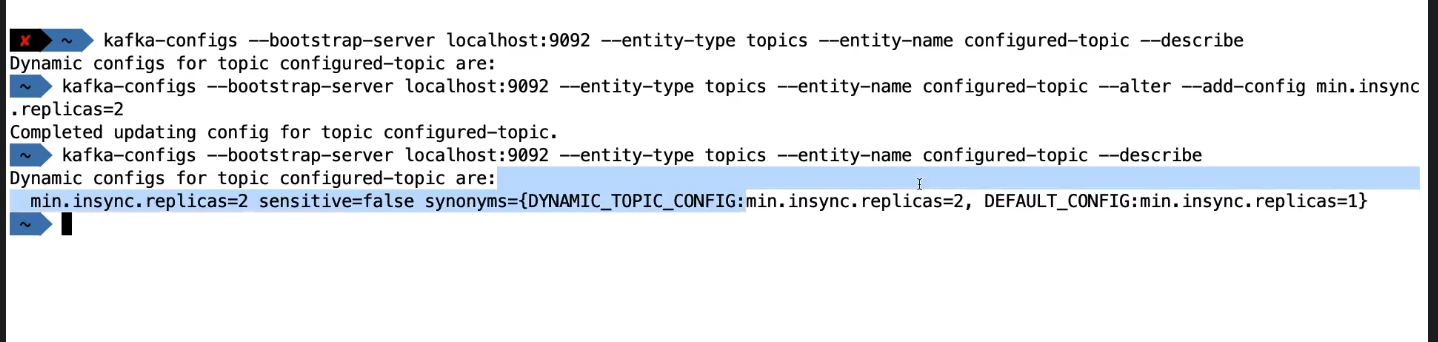

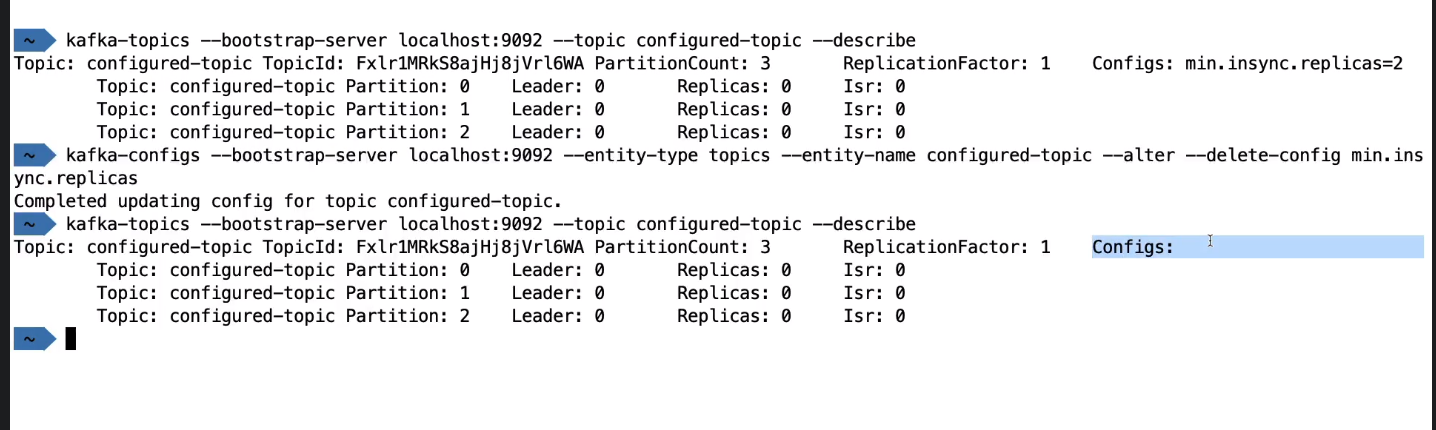

Kafka Topic CLI:

- Create Kafka Topics

kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --create --topic first_topic- We are not defining the number of partitions

kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --create --topic second_topic --partitions 5- This topic contains 5 partitions

kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --create --topic third_topic --partitions 5 --replication-factor 2- The target replication factor of 2 cannot be reached because only 1 broker(s) are registered. Remember, replication is tied to number of brokers

- List Kafka Topics

kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --list

- Describre Kafka Topics

kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --topic first_topic --describe

- Increase Partitions in a Kafka Topic

- If you want to change the number of partitions or replicas of your Kafka topic, you can use a streaming transformation to automatically stream all of the messages from the original topic into a new Kafka topic that has the desired number of partitions or replicas.

- Delete a Kafka Topic

kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --topic first_topic --delete

- Create Kafka Topics

-

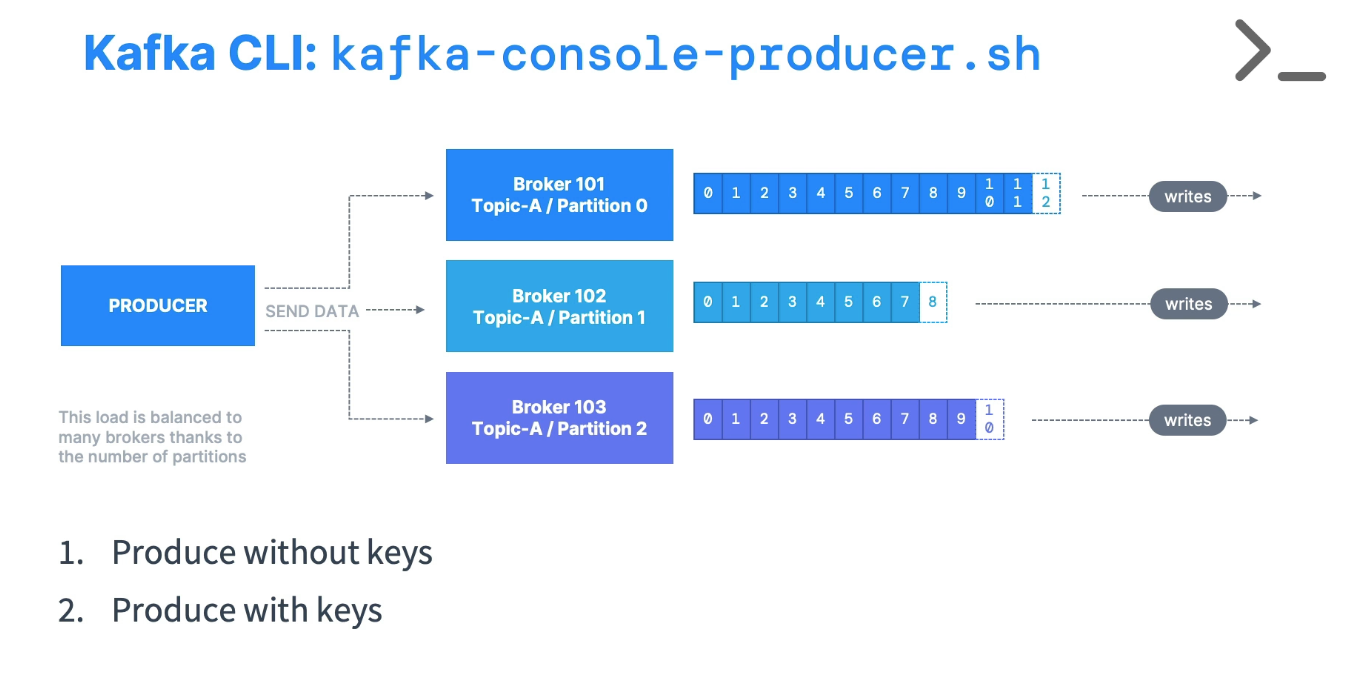

Kafka Console Producer CLI

-

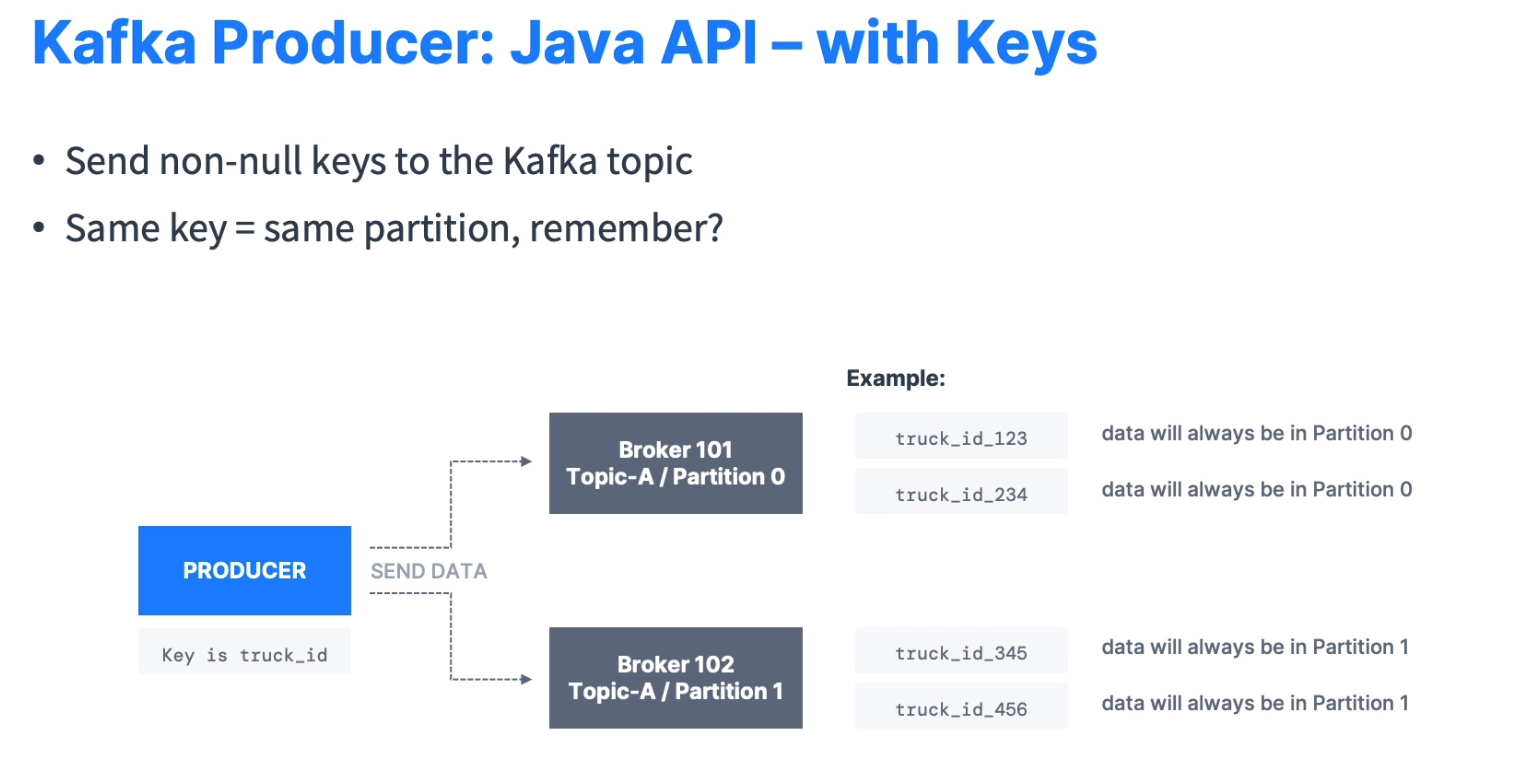

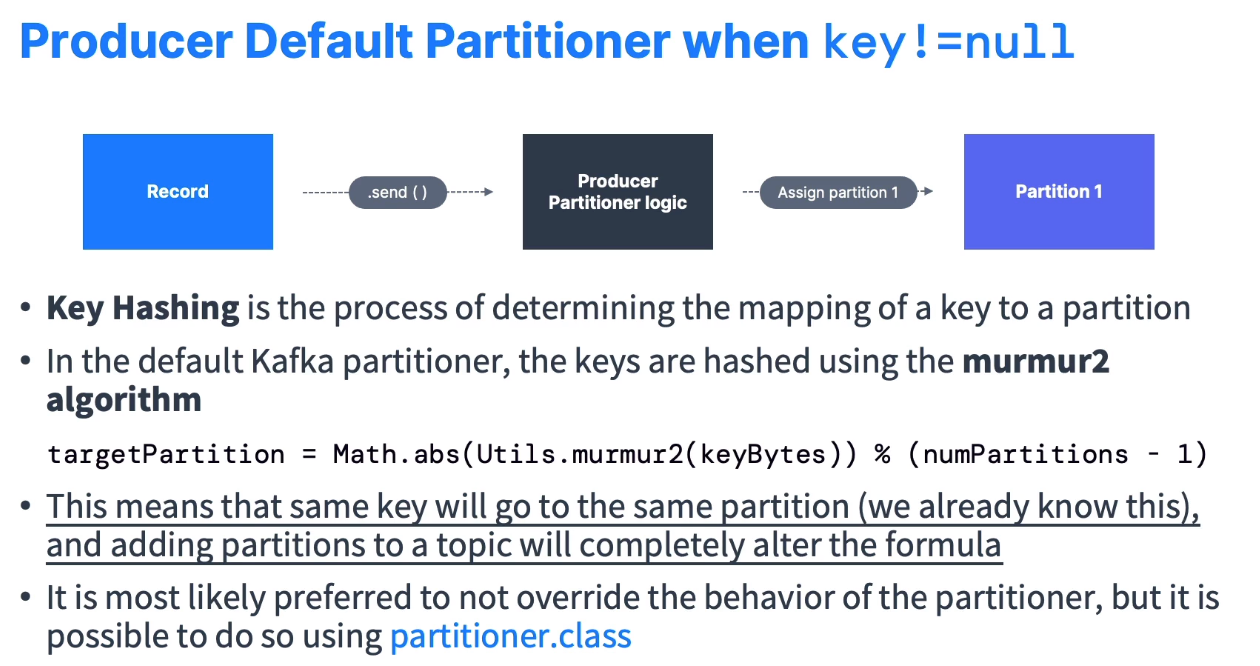

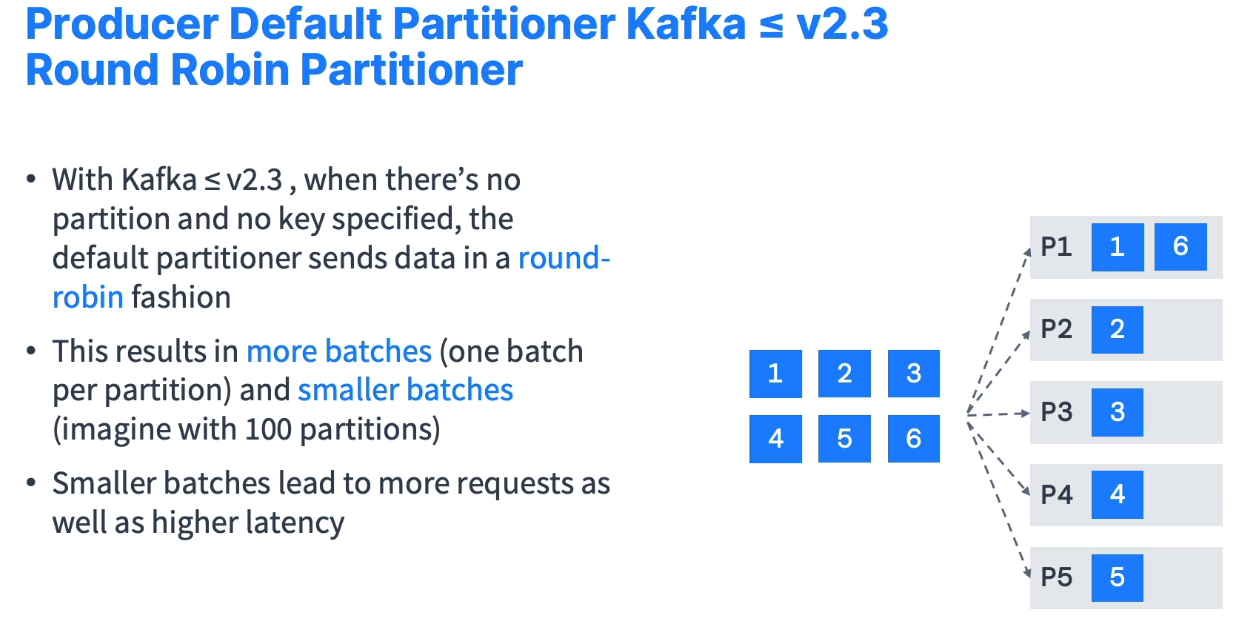

- Producer without keys: It will distribute values using the Robin Hood approach

- Producer using keys: It will distribute on the key hash (

murmur2)

-

Create a topic with one partition:

kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --create --topic producer_topic --partitions 1

-

Produce messages to the topic:

kafka-console-producer.sh --bootstrap-server 127.0.0.1:9092 --topic producer_topic-

>Hello World >My name is Daniel >I love Kafka > Control + C

-

-

Produce messages to the topic with extra properties:

kafka-console-producer.sh --bootstrap-server 127.0.0.1:9092 --topic producer_topic --producer-property acks=all

-

Produce messages to a topic that does not exist:

kafka-console-producer.sh --bootstrap-server 127.0.0.1:9092 --topic producer_topic_new[2023-05-31 19:03:57,926] WARN [Producer clientId=console-producer] Error while fetching metadata with correlation id 4 : {producer_topic_new=UNKNOWN_TOPIC_OR_PARTITION} (org.apache.kafka.clients.NetworkClient)- It will fail, but it will create the topic nontheless. - auto creationg

- You can edit

config/server.propertiesto set the default number of partitions.

-

Produce messages with a key:

kafka-console-producer.sh --bootstrap-server 127.0.0.1:9092 --topic producer_topic_new --property parse.key=true --property key.separator=:-

>example key:example value >name:daniel

-

kafka-console-consumer.sh --bootstrap-server 127.0.0.1:9092 --from-beginning --topic producer_topic_new > new topic > kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --list > testtest > example value > daniel

-

-

-

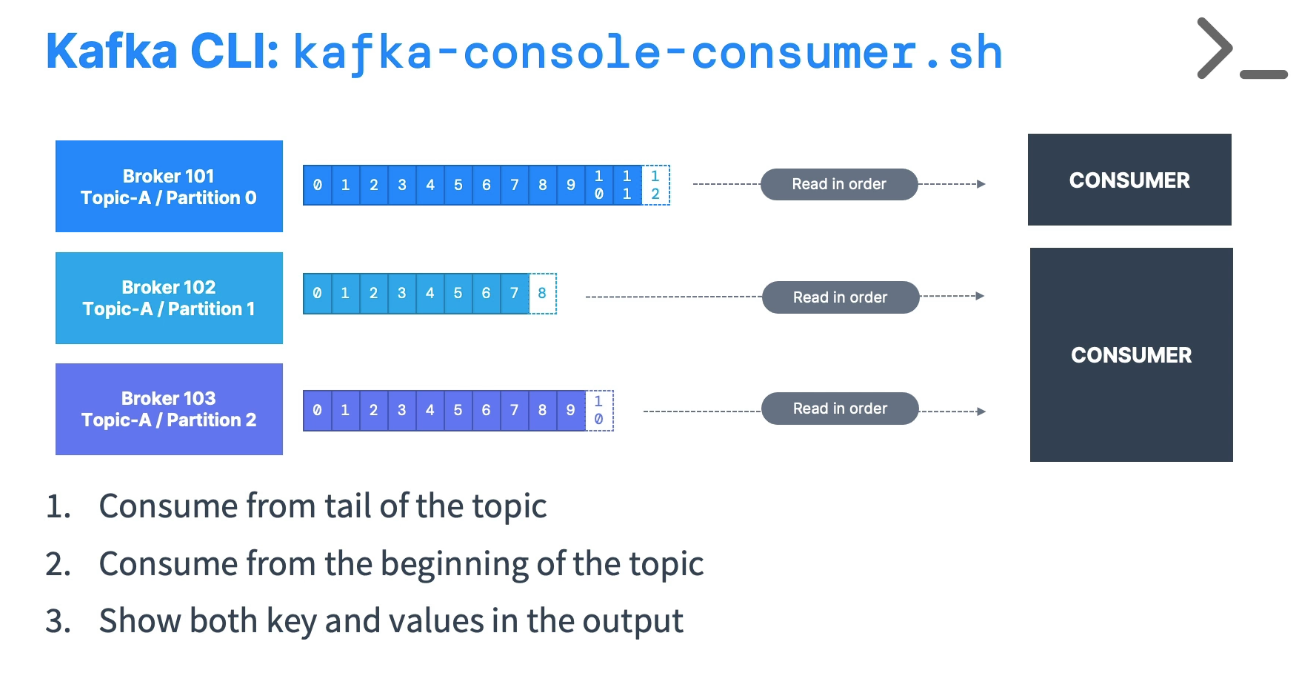

Kafka Console Consumer CLI

-

Consuming messages from the beginning

kafka-console-consumer.sh --bootstrap-server 127.0.0.1:9092 --topic test --from-beginning

-

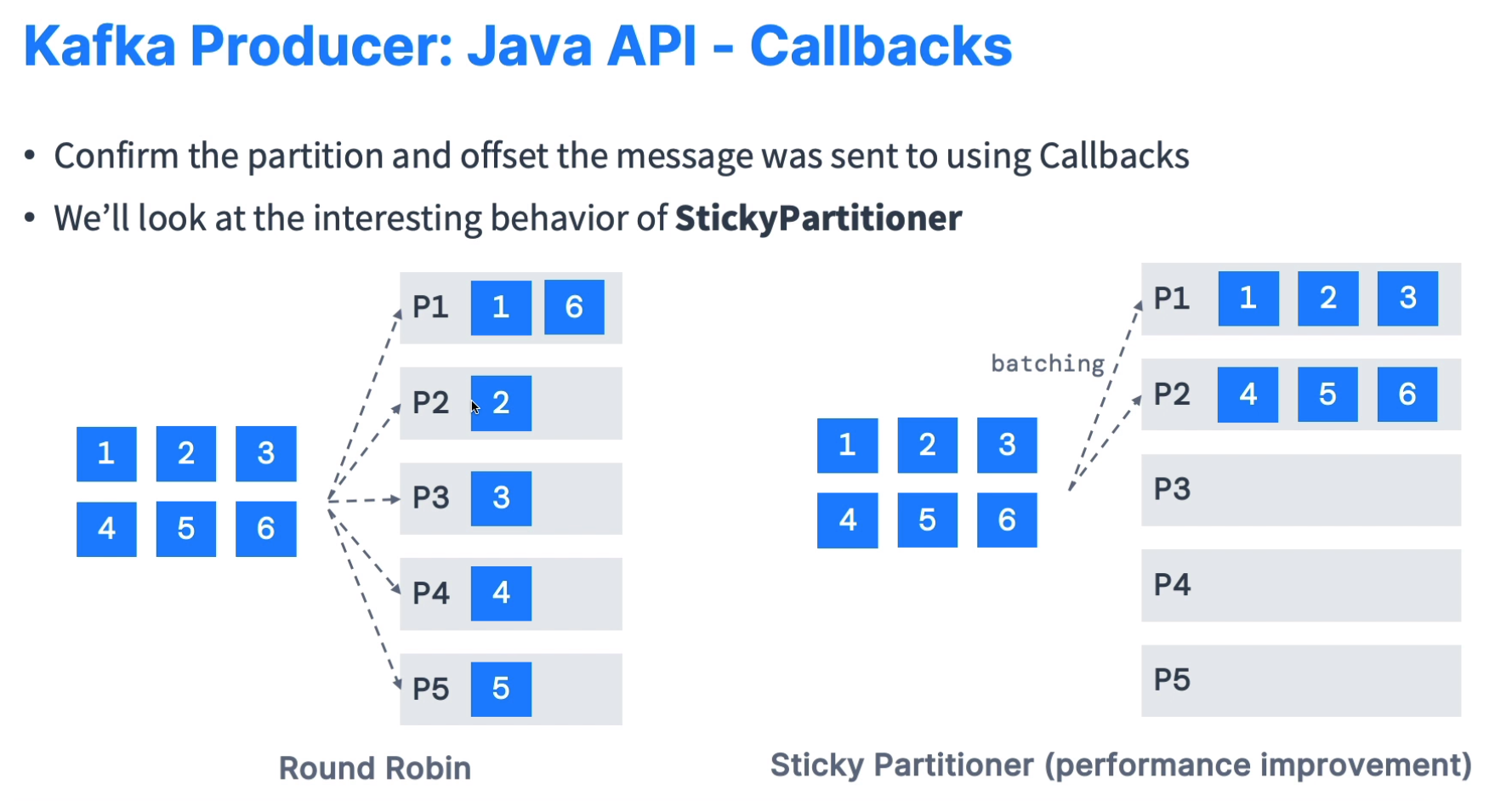

Producing messages

kafka-console-producer.sh --bootstrap-server 127.0.0.1:9092 --topic producer_topic_new --producer-property partitioner.class=org.apache.kafka.clients.producer.RoundRobinPartitioner- We want to use the Round Robin partitioner to distribute keys equaly between patitions. If we don't use, Kafka will probably send the data to the same partition.

-

Consuming messages with key

kafka-console-consumer.sh --bootstrap-server 127.0.0.1:9092 --topic producer_topic_new --formatter kafka.tools.DefaultMessageFormatter --property print.timestamp=true --property print.key=true --property print.value=true --property print.partition=true --from-beginning

-

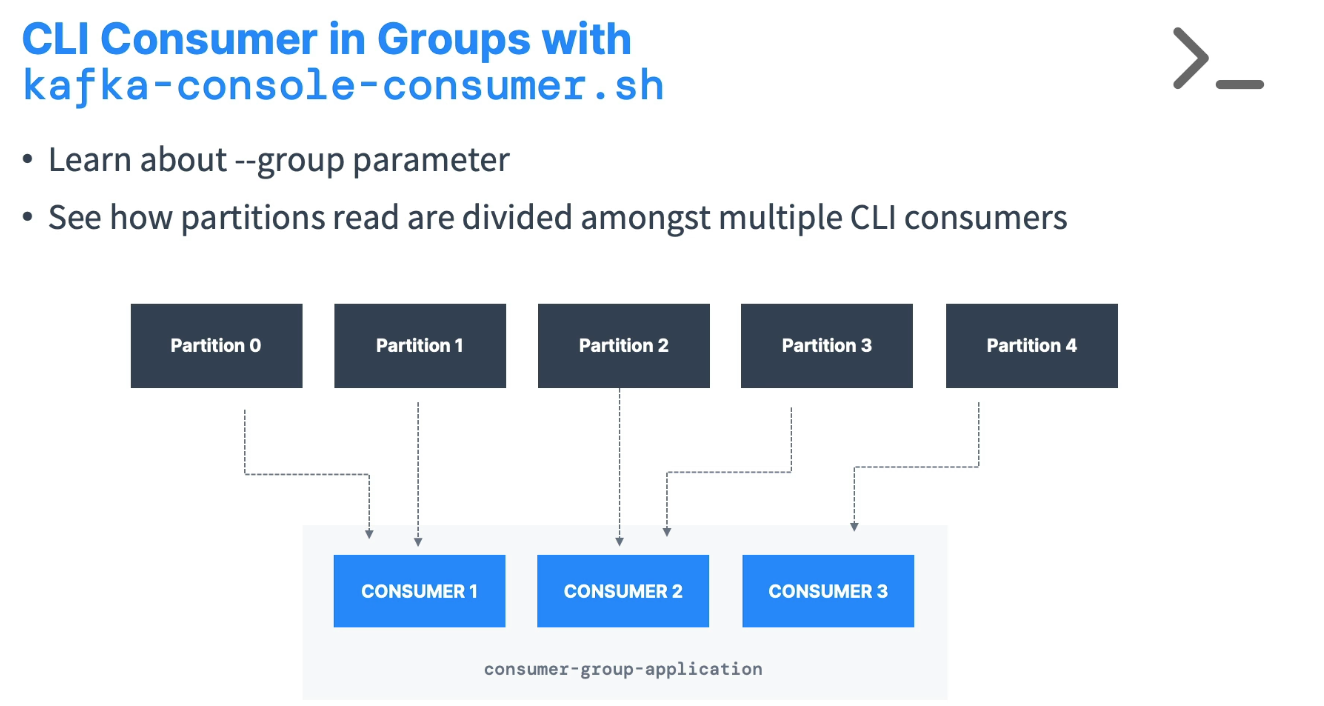

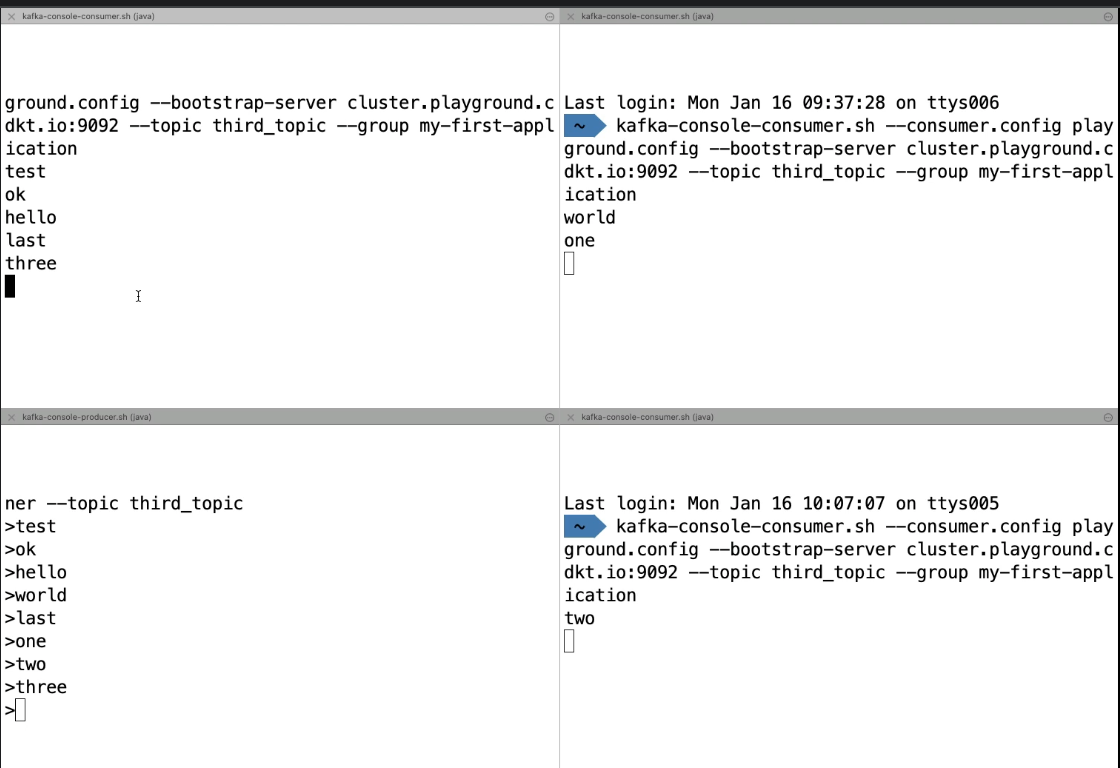

Kafka Consumers in Groups

-

Create a topic

kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --create --topic group_topic --partitions 5

-

Consume the topic from a group

-

kafka-console-consumer.sh --bootstrap-server 127.0.0.1:9092 --topic group_topic --group my-first-application- If we create multiple consumers using the same group, each consumer will take care of some unique partitions. e.g. consumer 1 takes care of partition 0 and consumer 2 takes care of partition 1.

-

kafka-console-consumer.sh --bootstrap-server 127.0.0.1:9092 --topic group_topic --group my-first-application --from-beginning- The group will ignore the

--from-beginningflag if he already read some messages (commited an offset)

- The group will ignore the

-

-

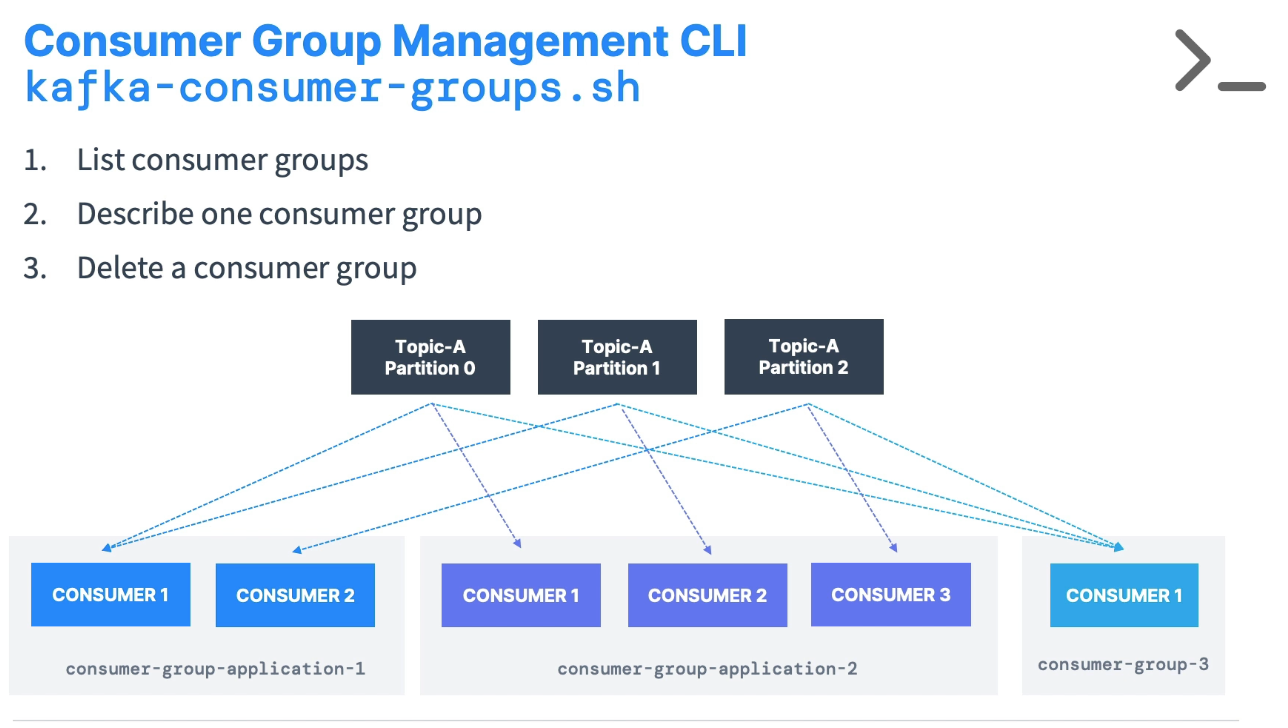

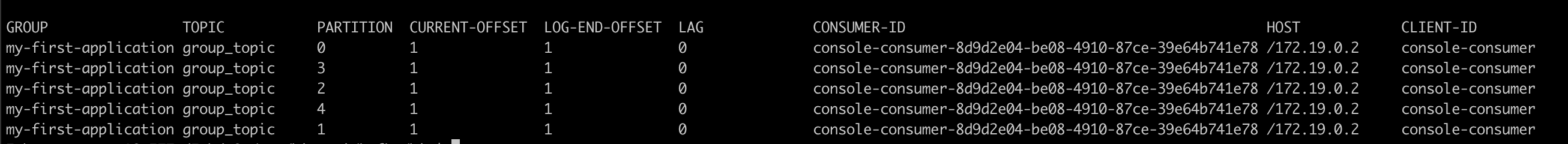

Consumer Group Managment CLI

-

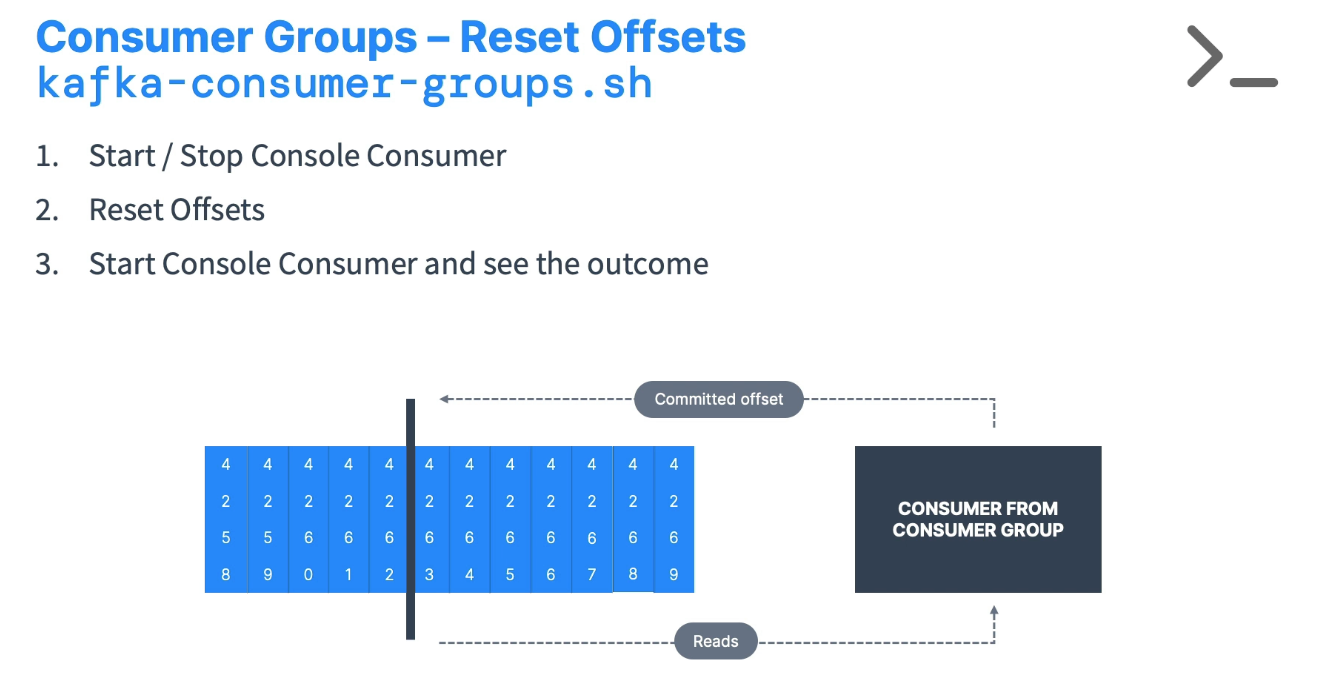

Consumer Group - Reset Offset

-

Describe group

kafka-consumer-groups.sh --bootstrap-server 127.0.0.1:9092 --group my-first-application --describe

-

Reset the offset of this group to read all messages

-

kafka-consumer-groups.sh --bootstrap-server 127.0.0.1:9092 --group my-first-application --reset-offsets --to-earliest --dry-run --topic group_topic--dry-runshows the changes that will be applied, but it does not apply them.

-

kafka-consumer-groups.sh --bootstrap-server 127.0.0.1:9092 --group my-first-application --reset-offsets --to-earliest --execute --topic group_topic--executeapplies the changes to the group offset.

-

-

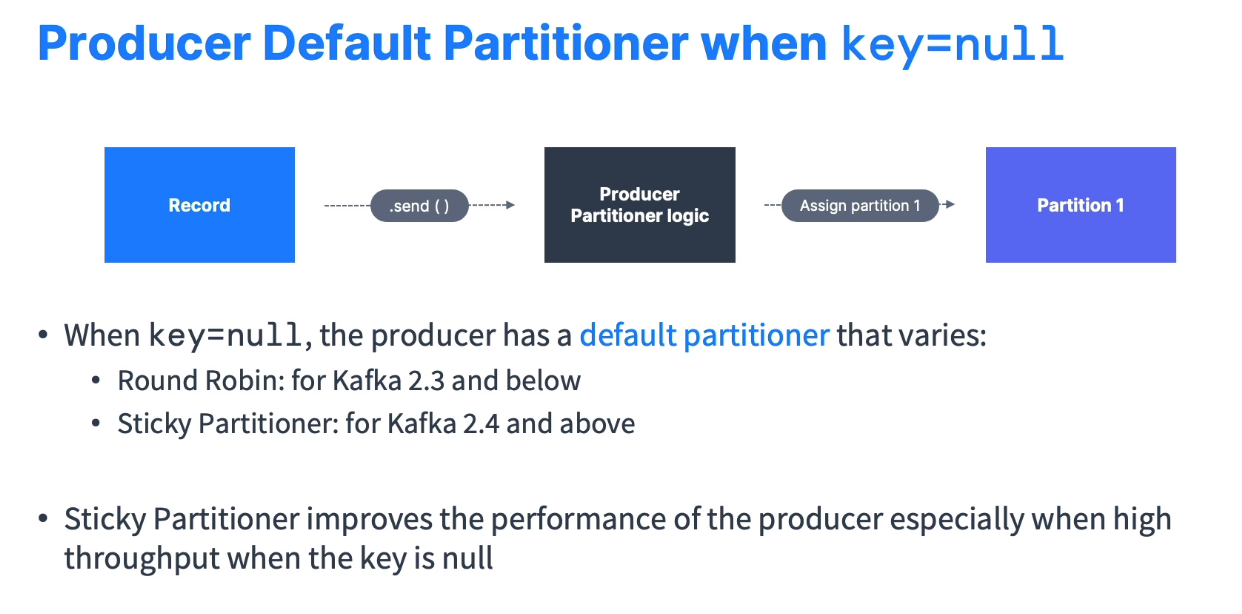

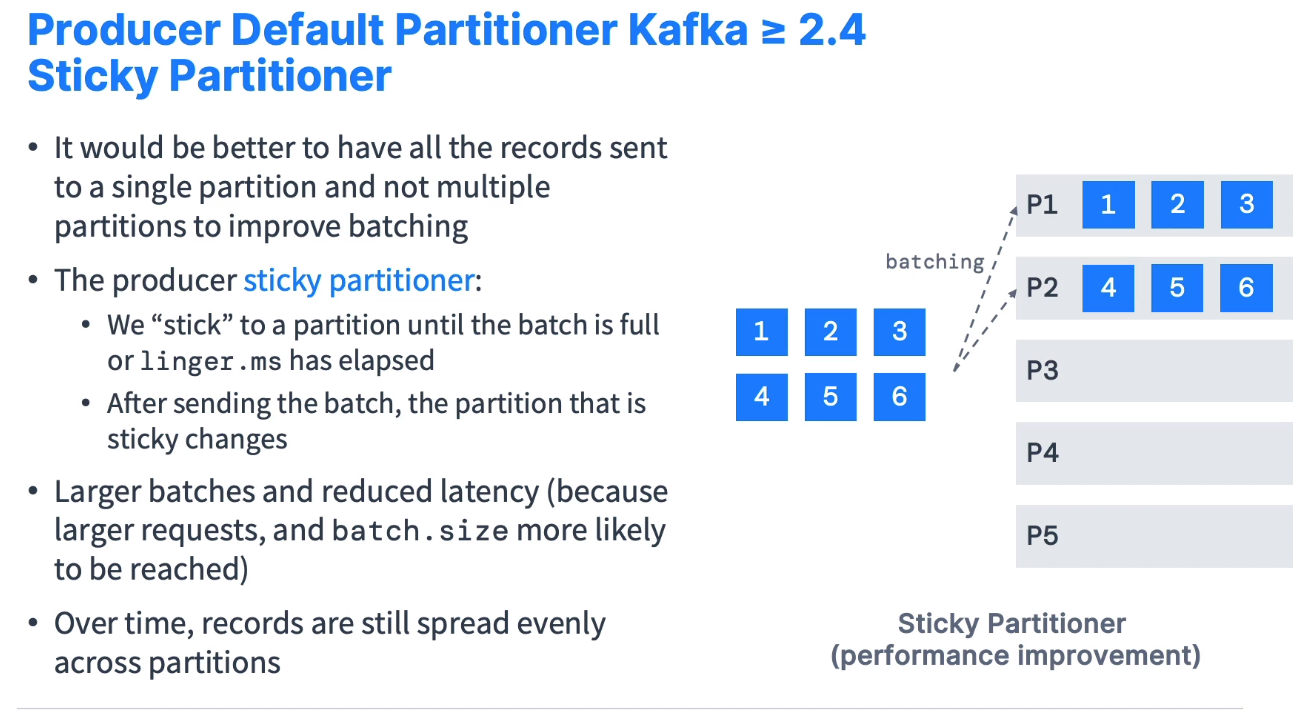

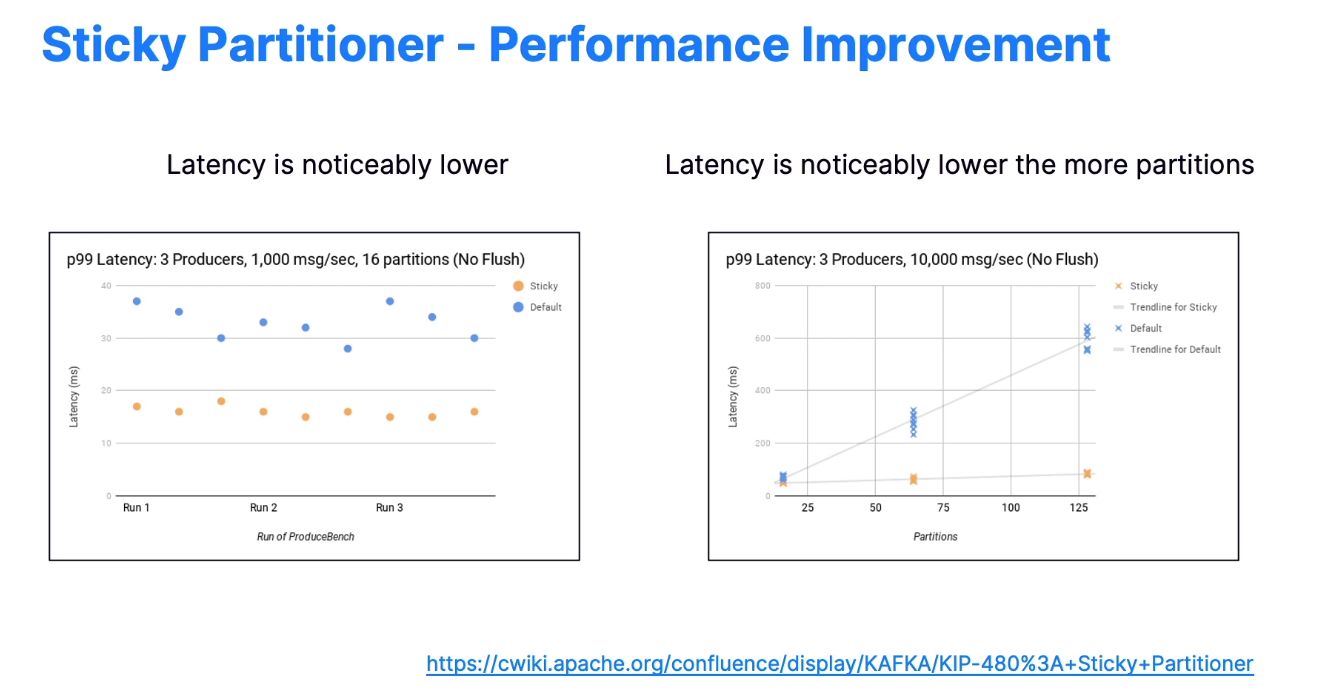

Producer - Sticky Partition

-

Producer - Key

-

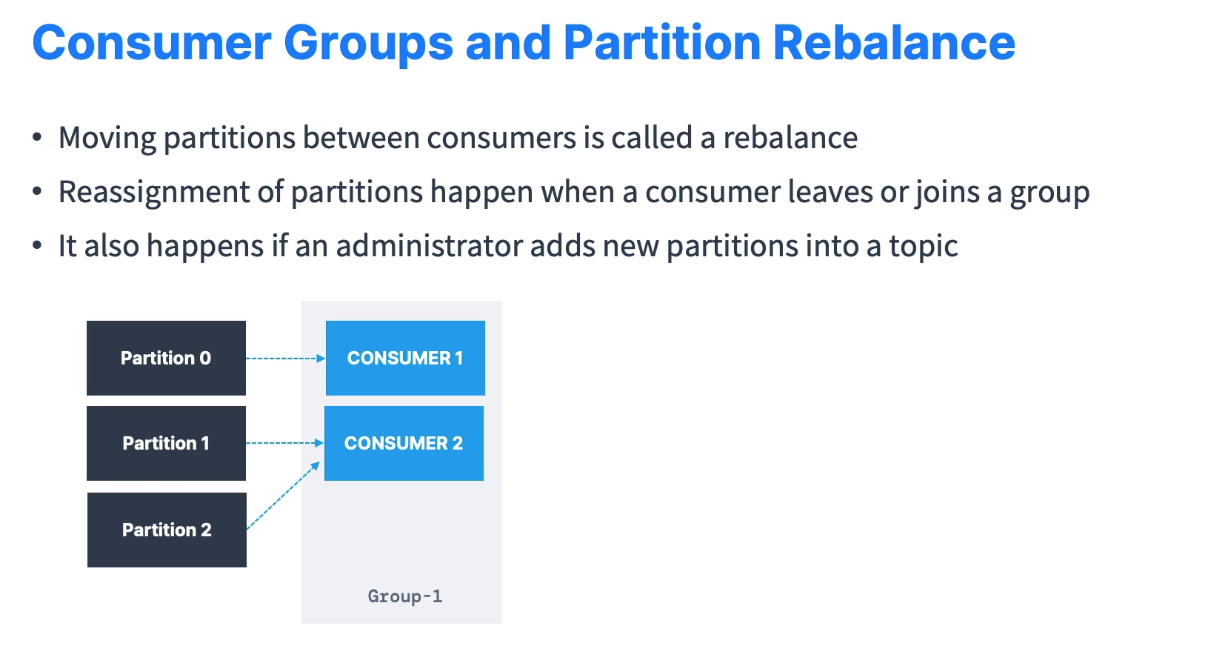

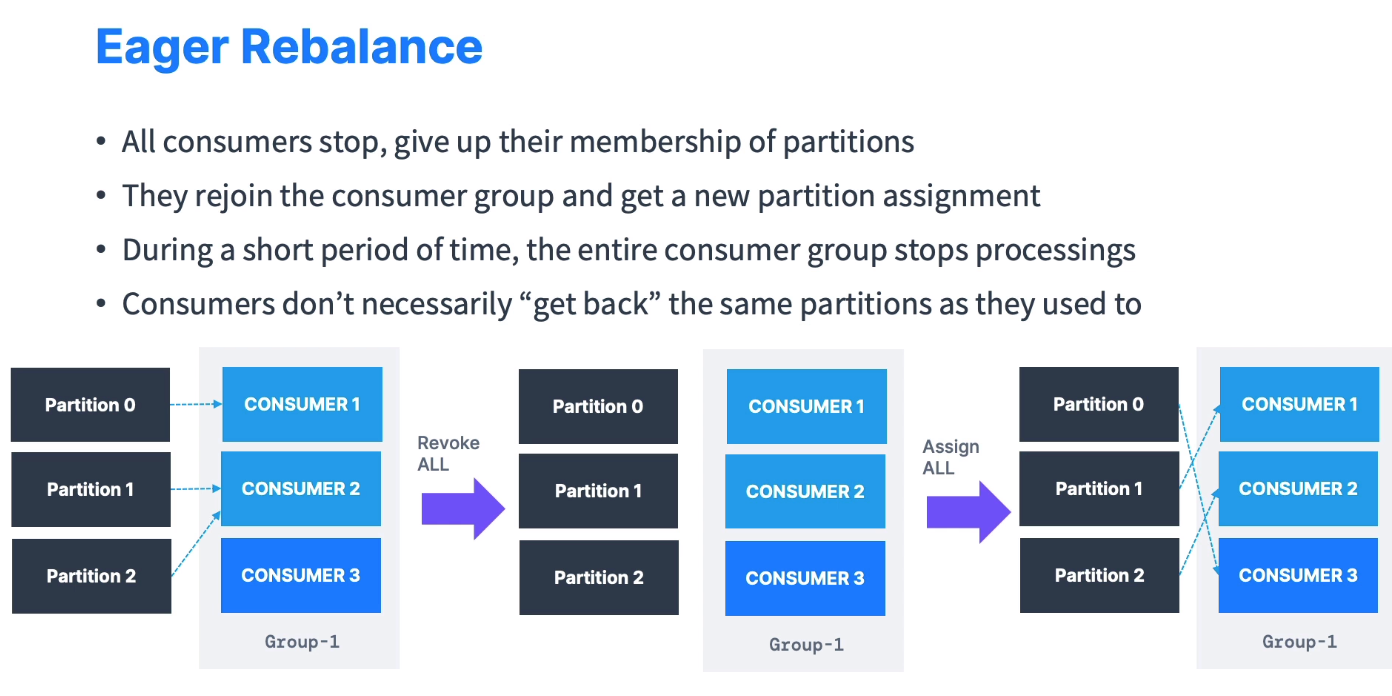

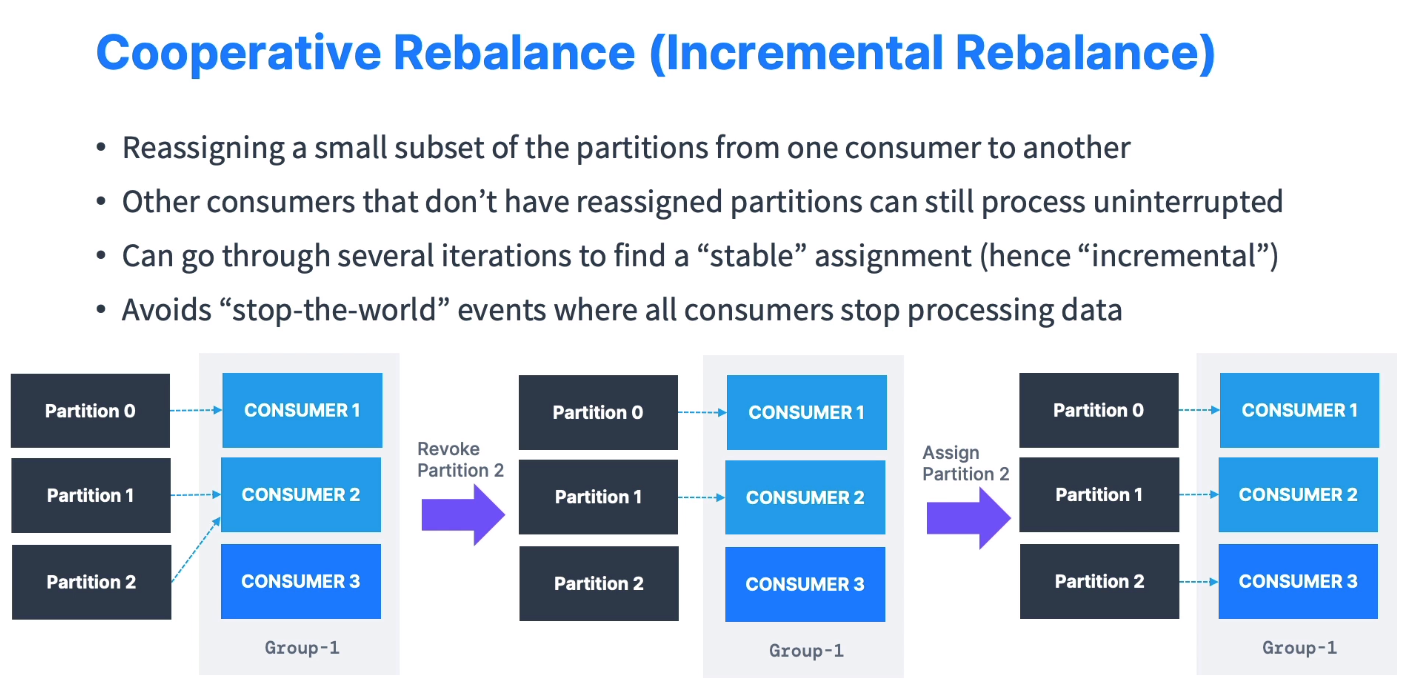

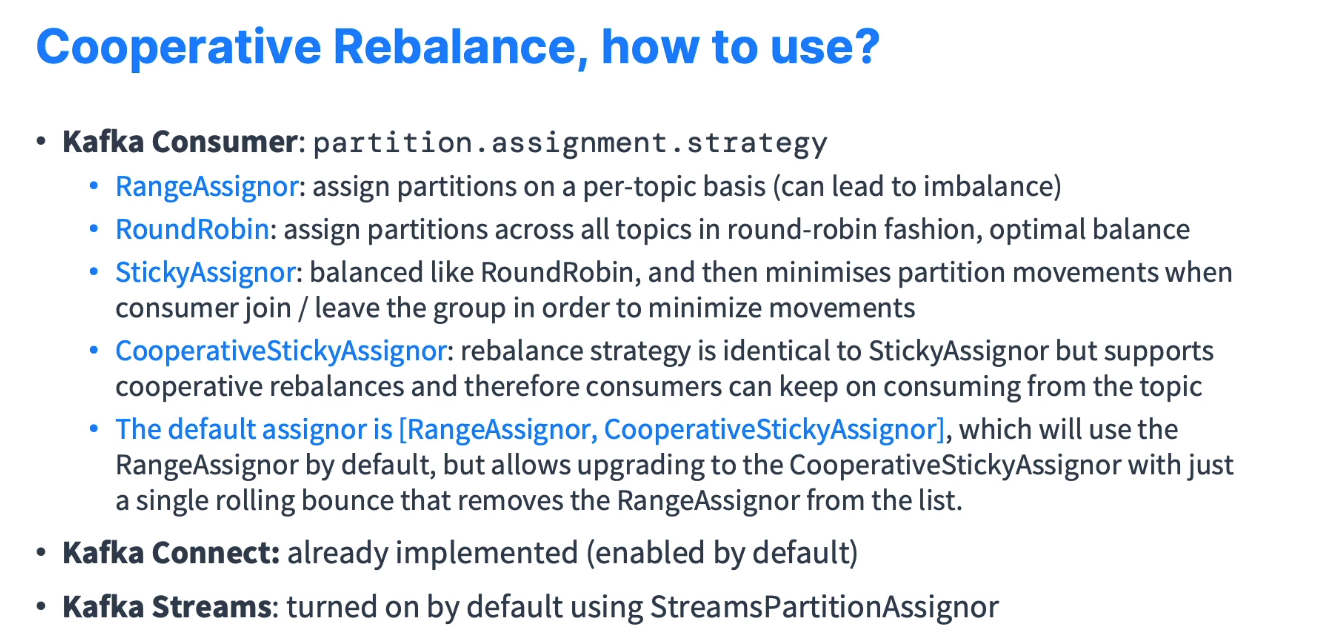

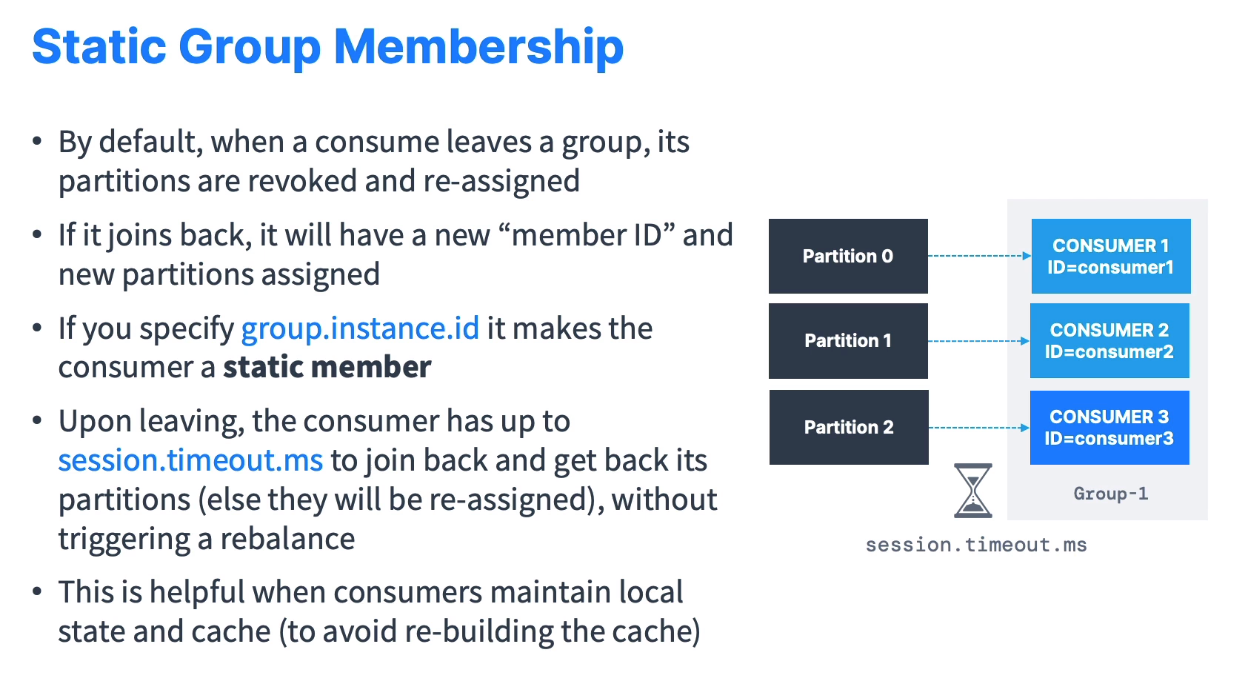

Consumer Group - Rebalancing

-

The previous code examples should be enough for 90% of your use cases.

-

Here, we have included advanced examples for the following use cases:

-

Consumer Rebalance Listener: in case you're doing a manual commit of your offsets to Kafka or externally, this allows you to commit offsets when partitions are revoked from your consumer.

-

Consumer Seek and Assign: if instead of using consumer groups, you want to start at specific offsets and consume only specific partitions, you will learn about the .seek() and .assign() APIs.

-

Consumer in Threads: very advanced code samples, this is for those who want to run their consumer

.poll()loop in a separate thread.

-

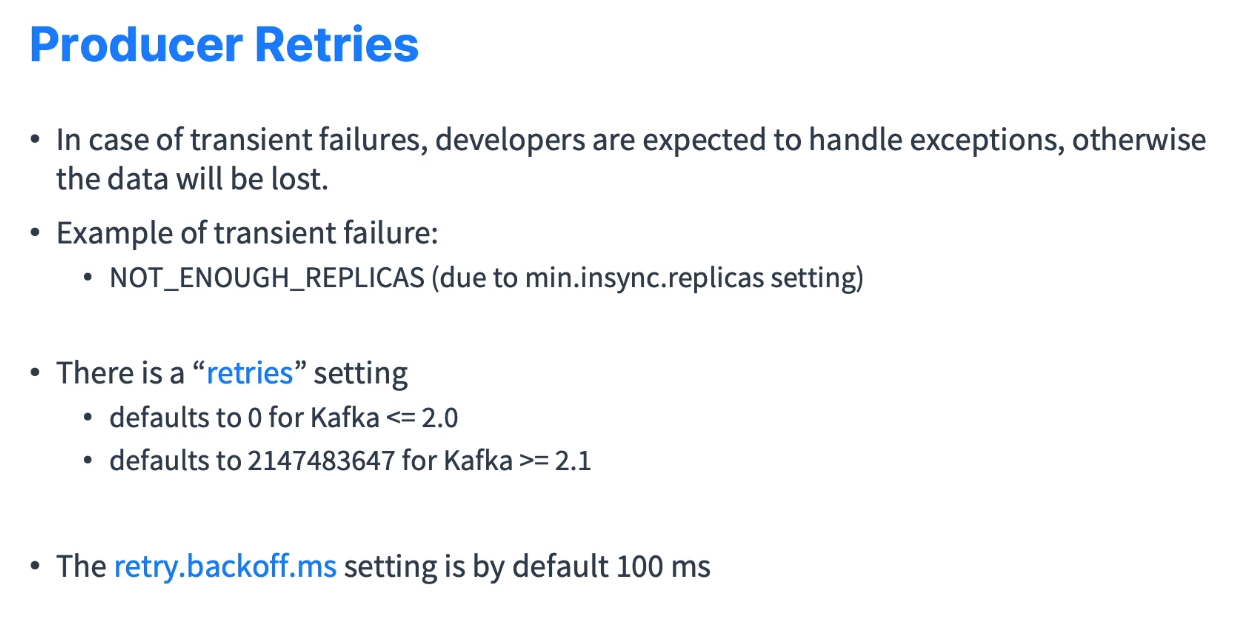

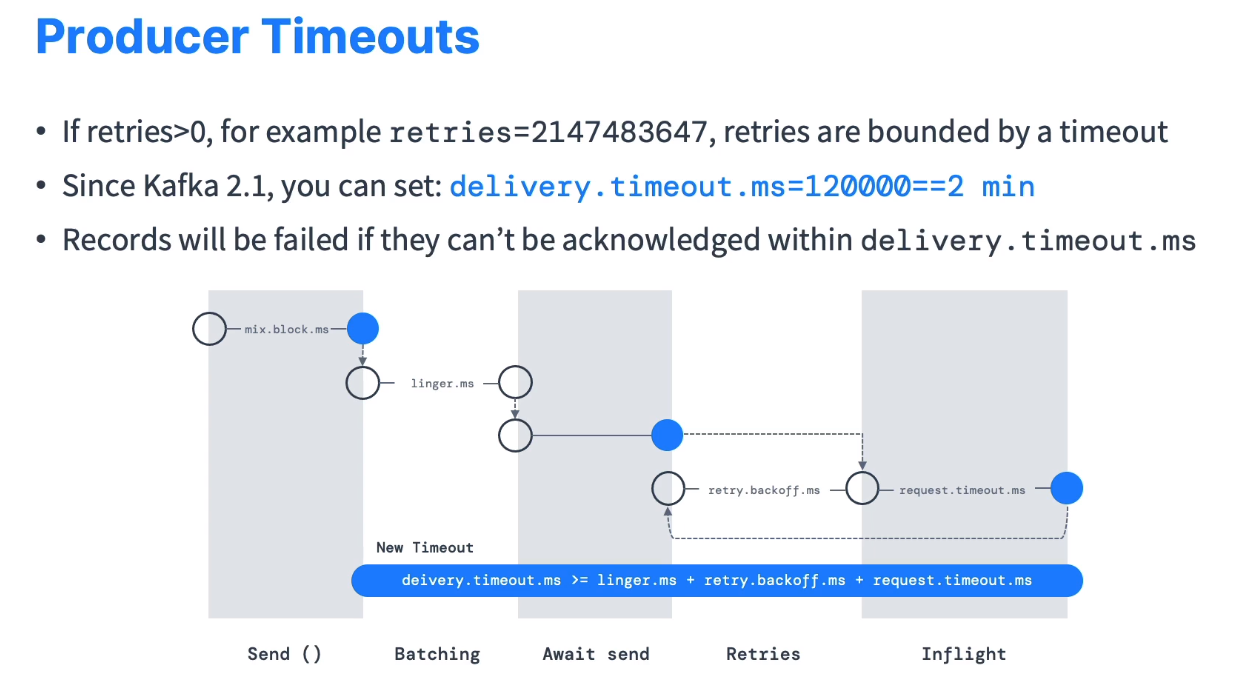

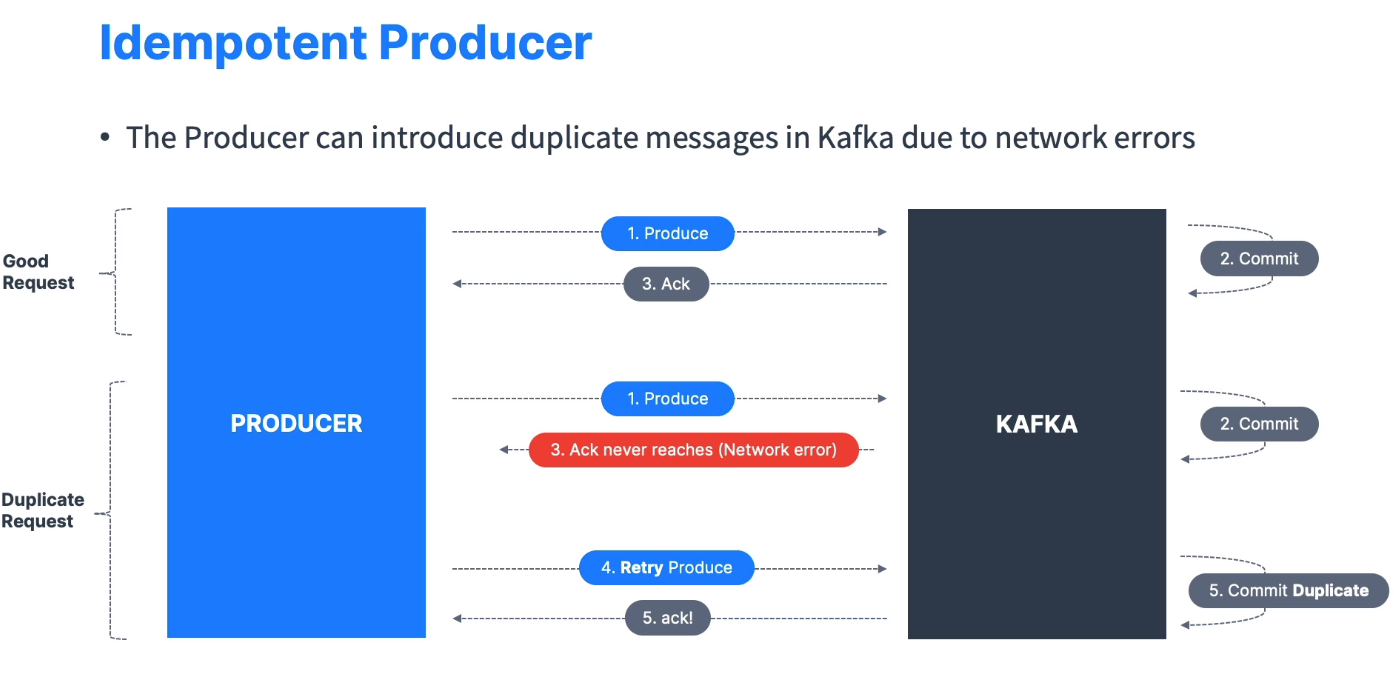

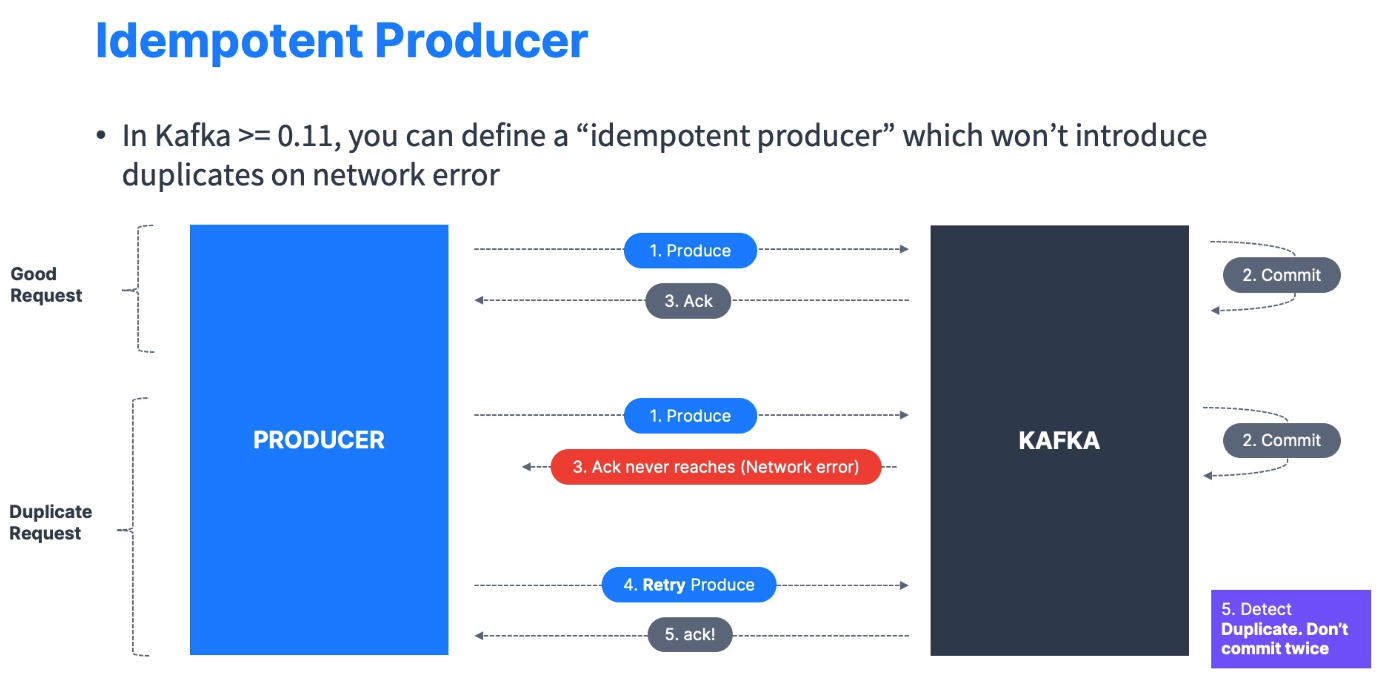

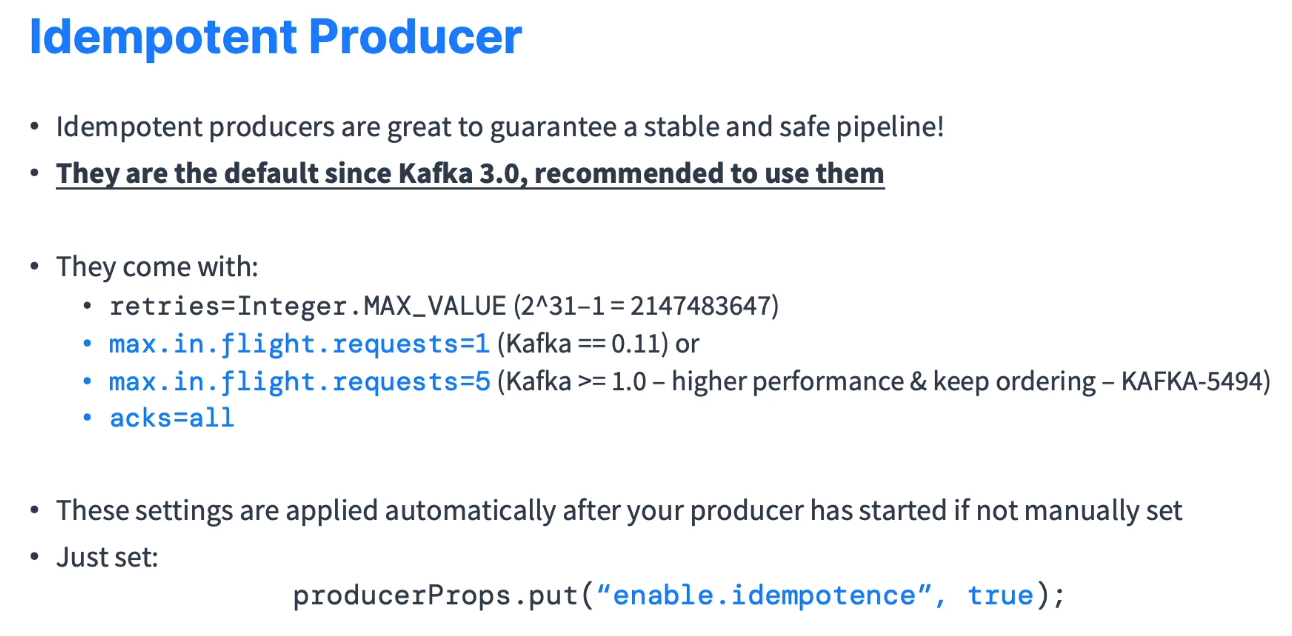

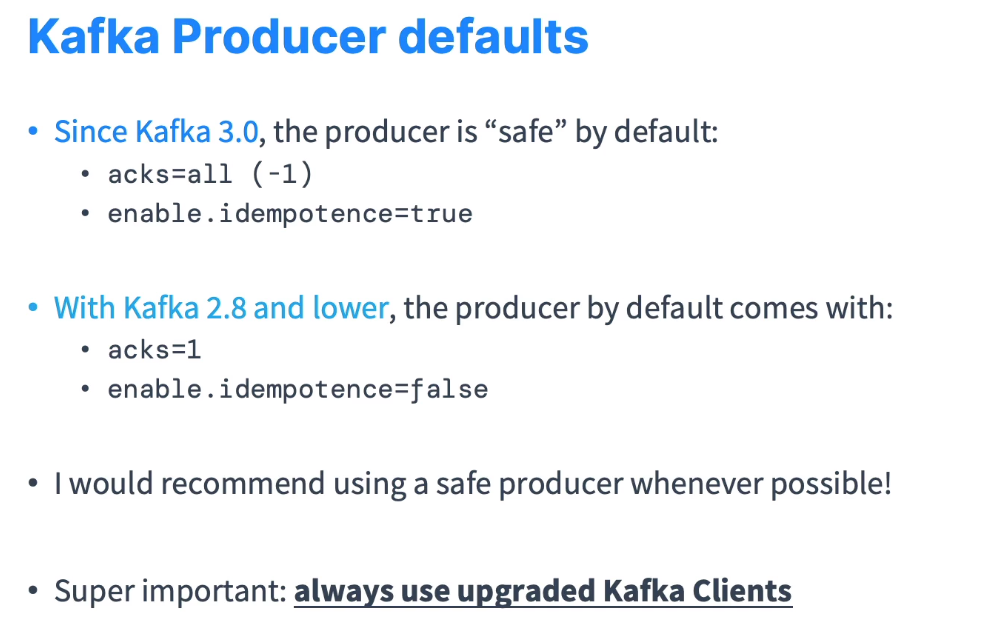

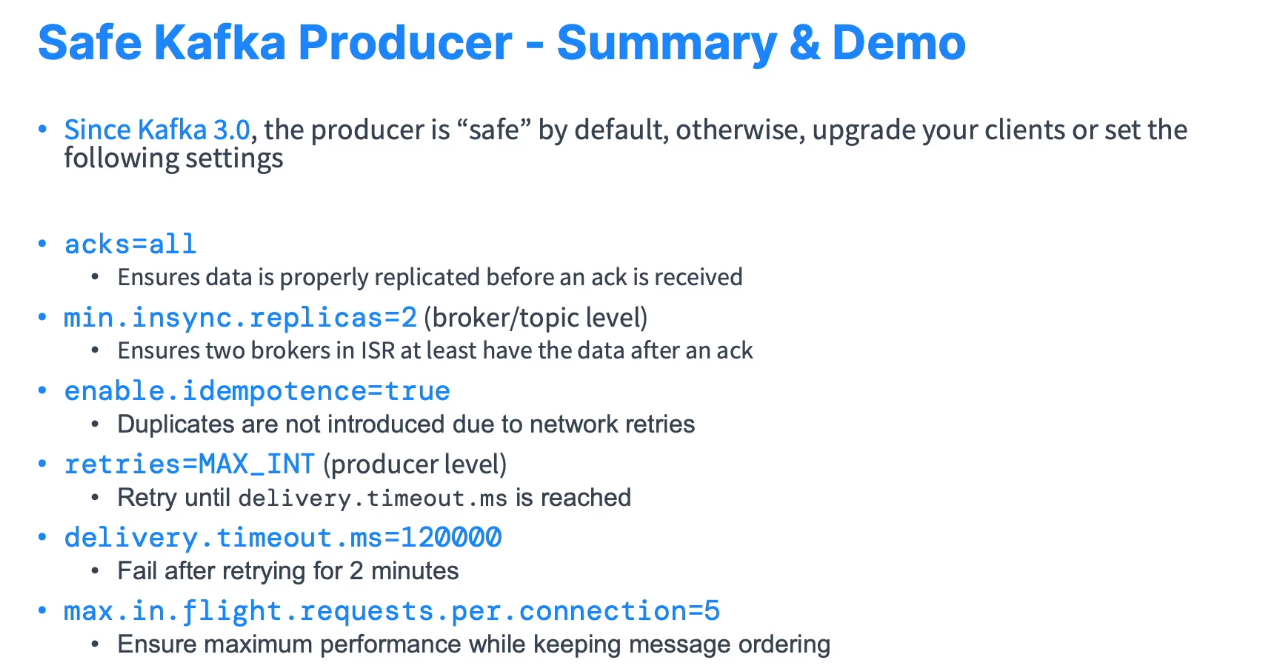

Producer ACKs and idempotency

-

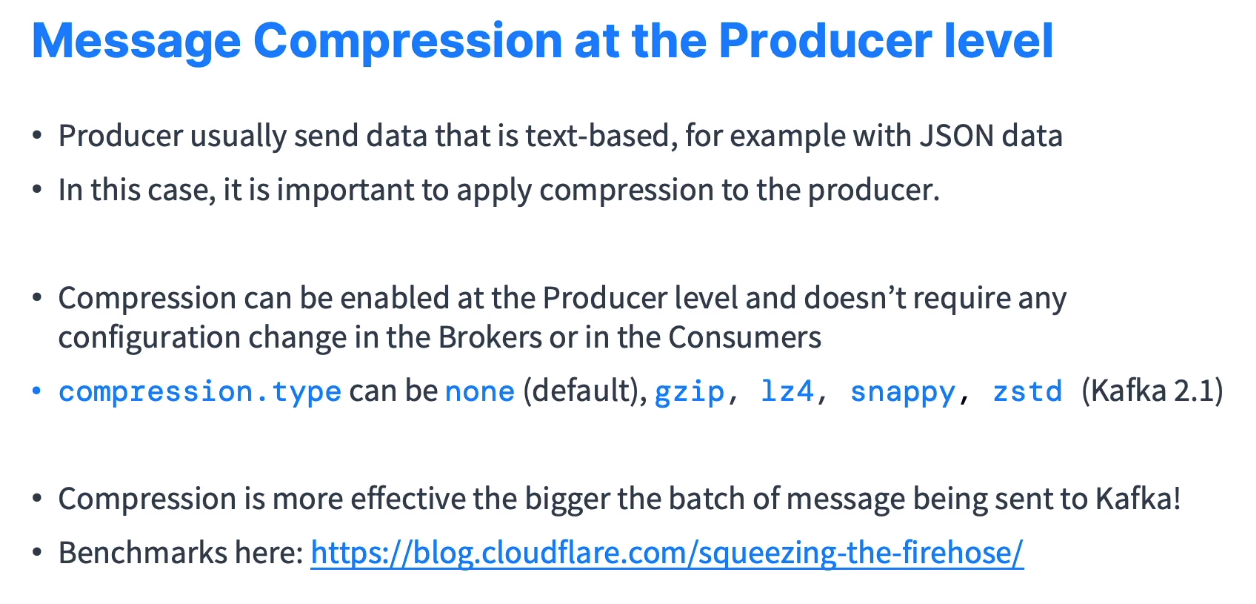

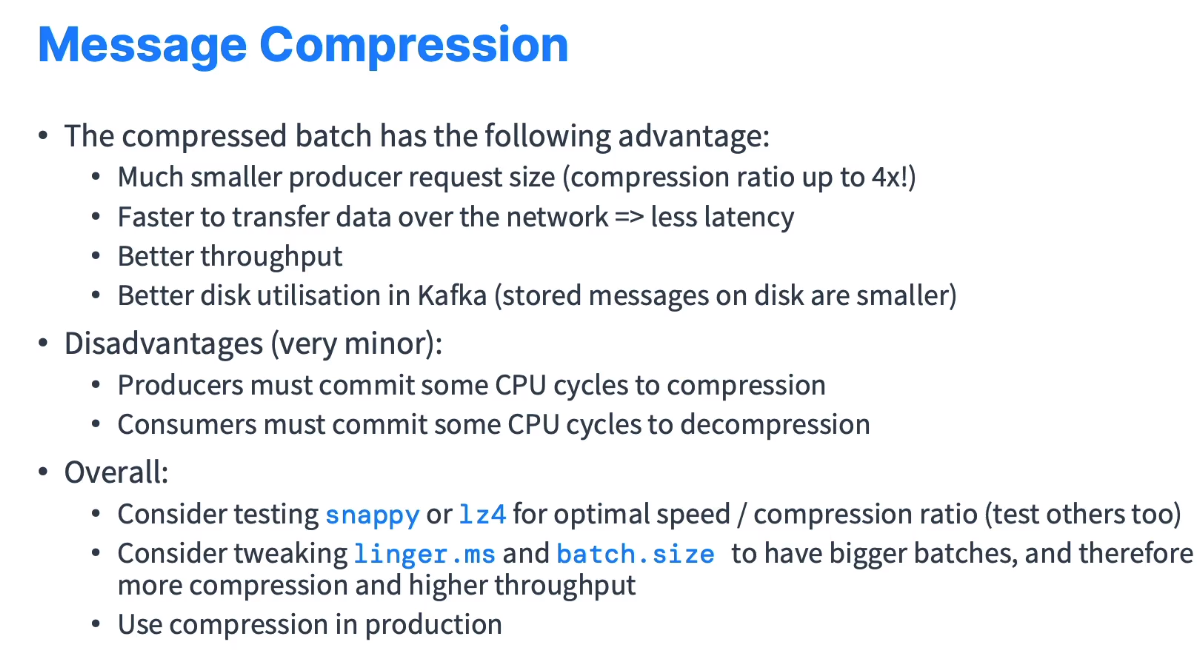

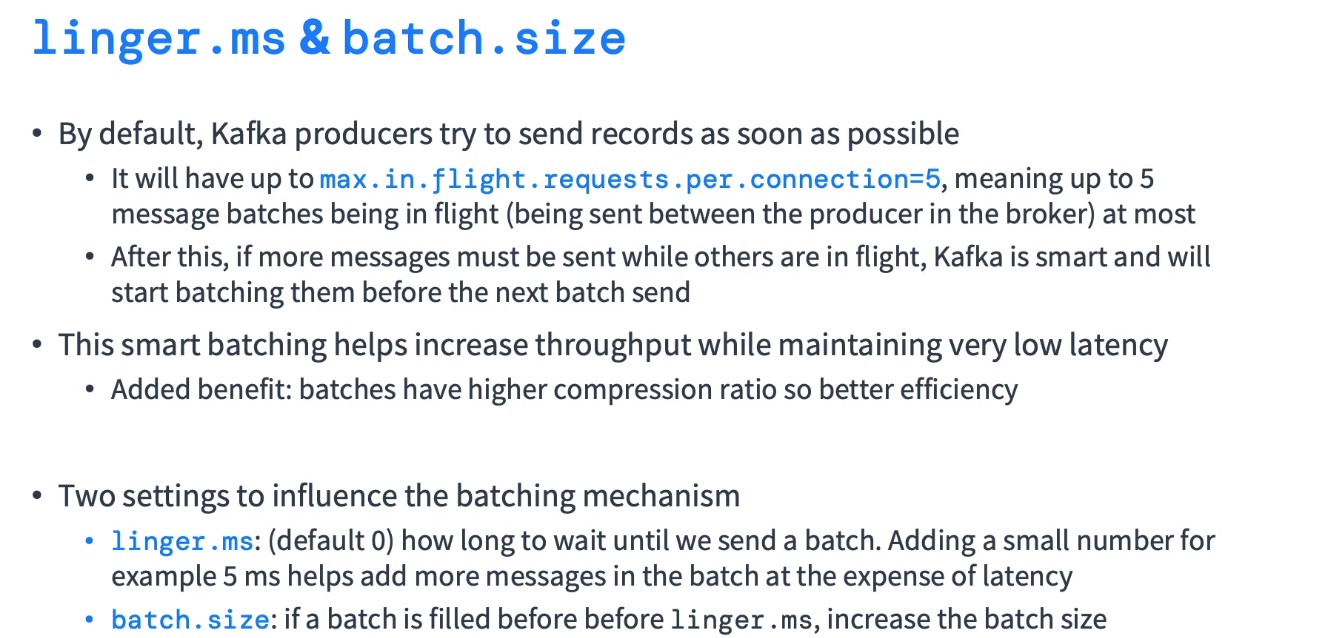

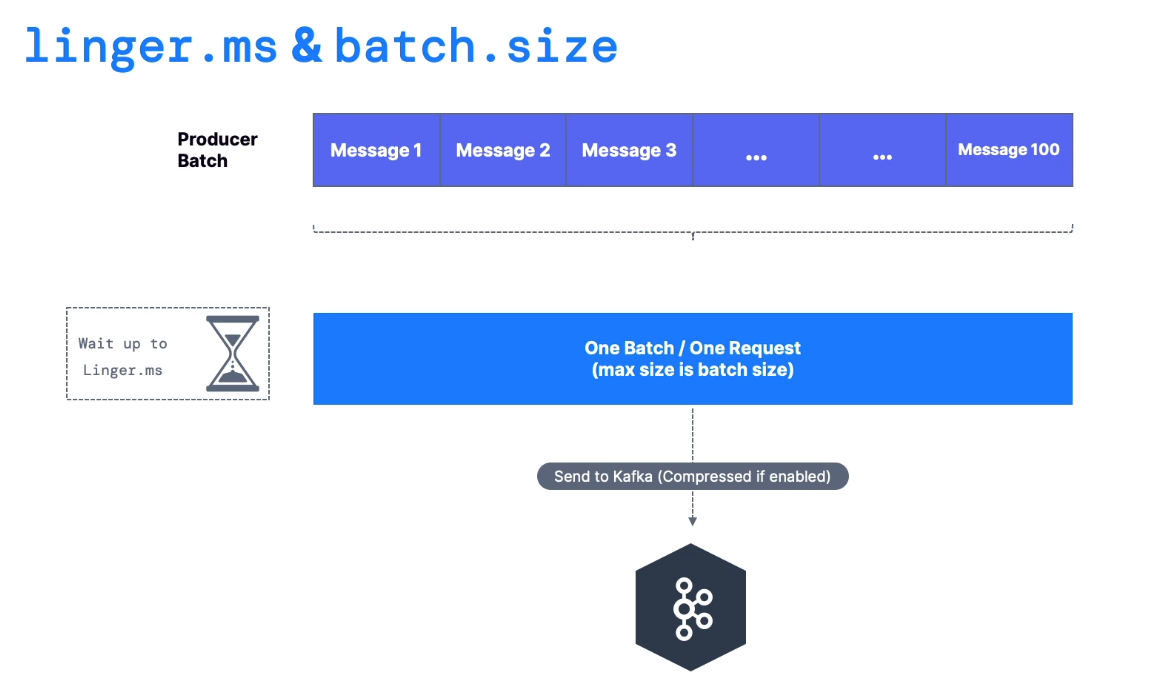

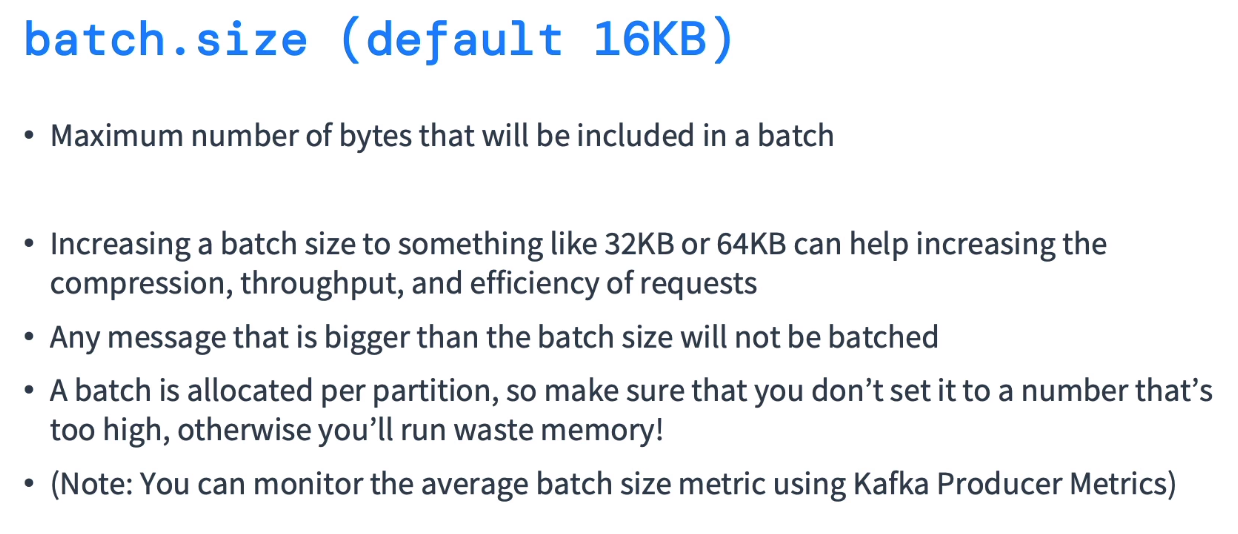

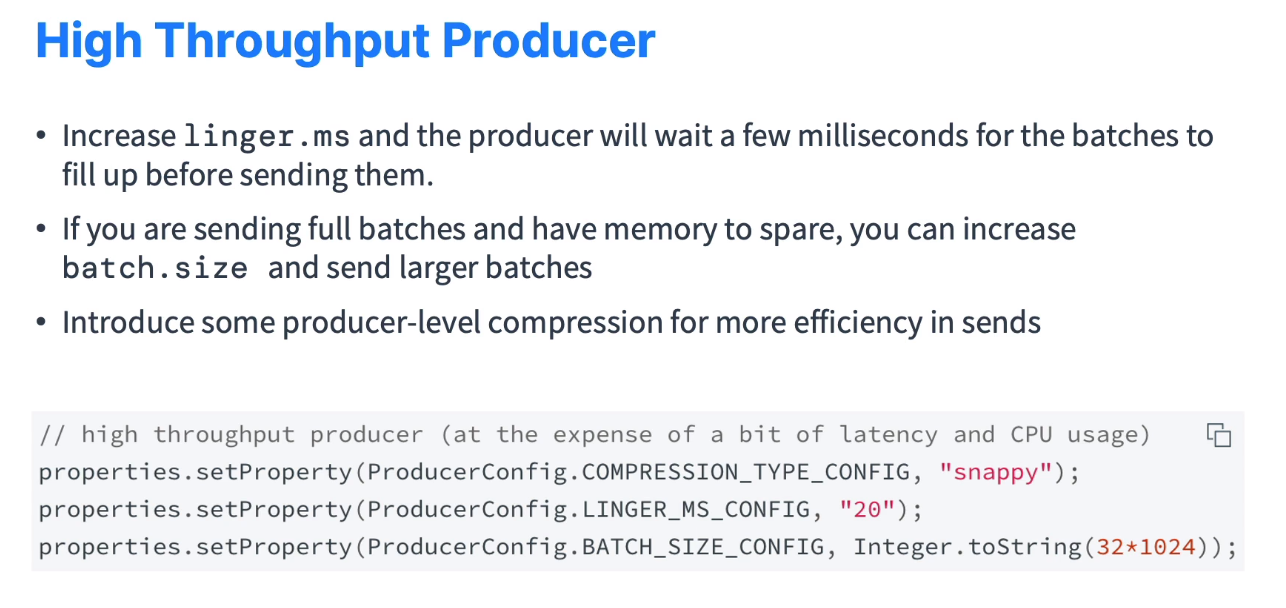

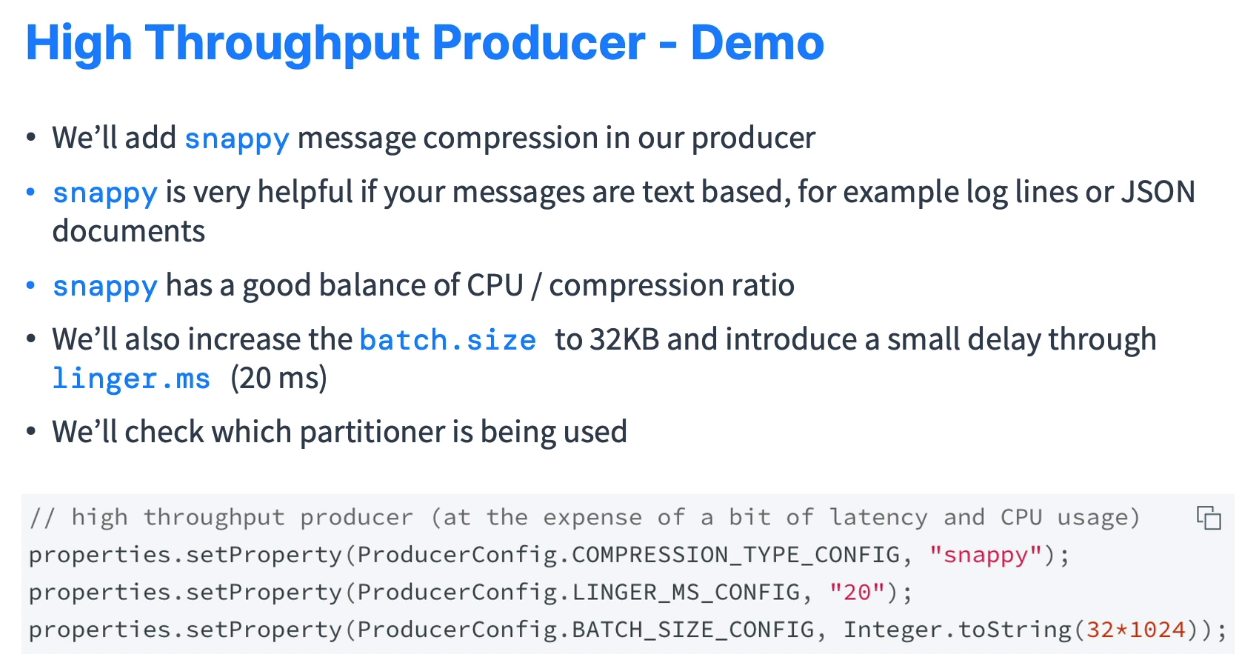

Message Compression

-

Compression (snappy)

-

Partitioner

-

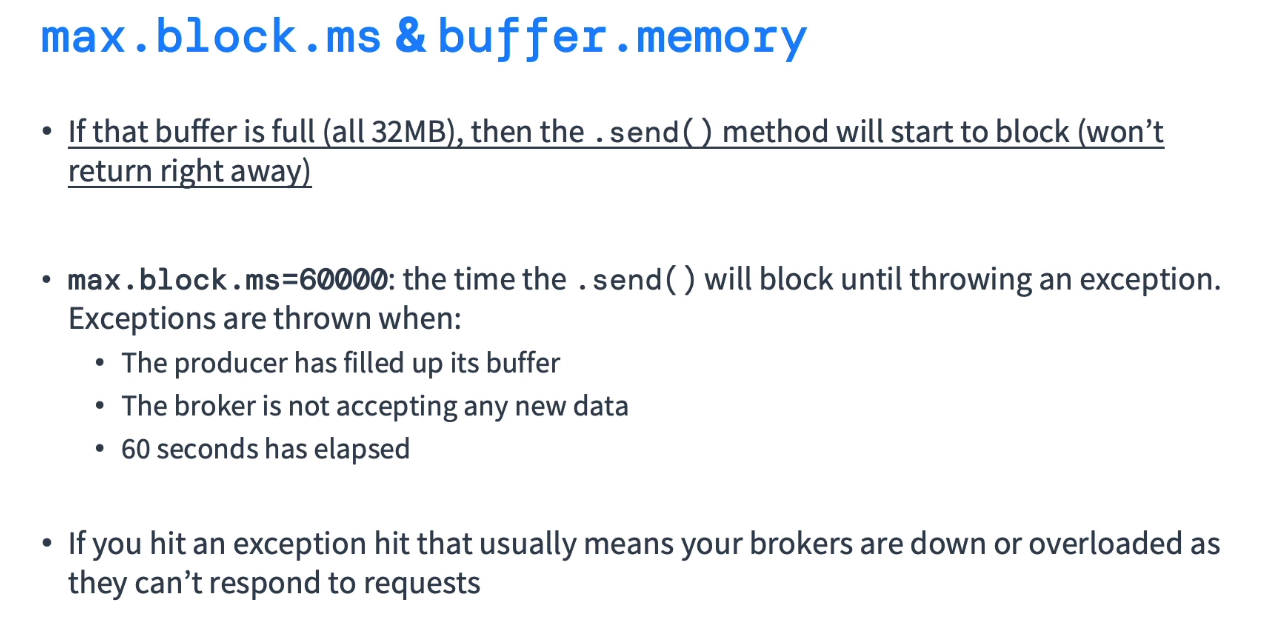

Block buffer

-

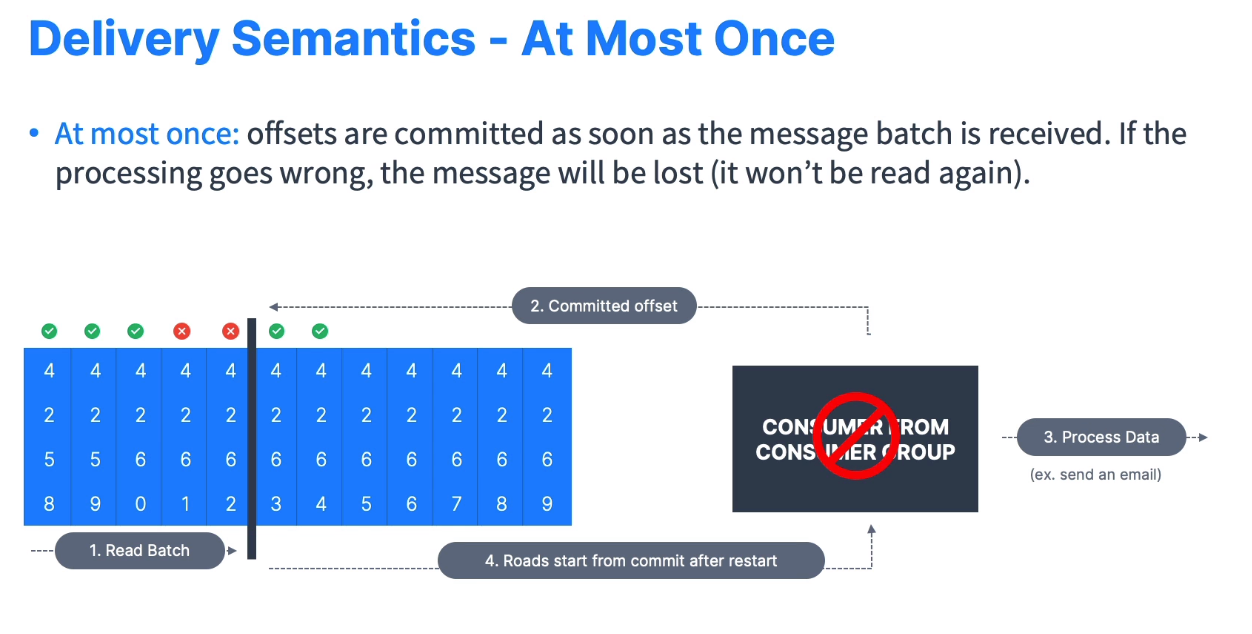

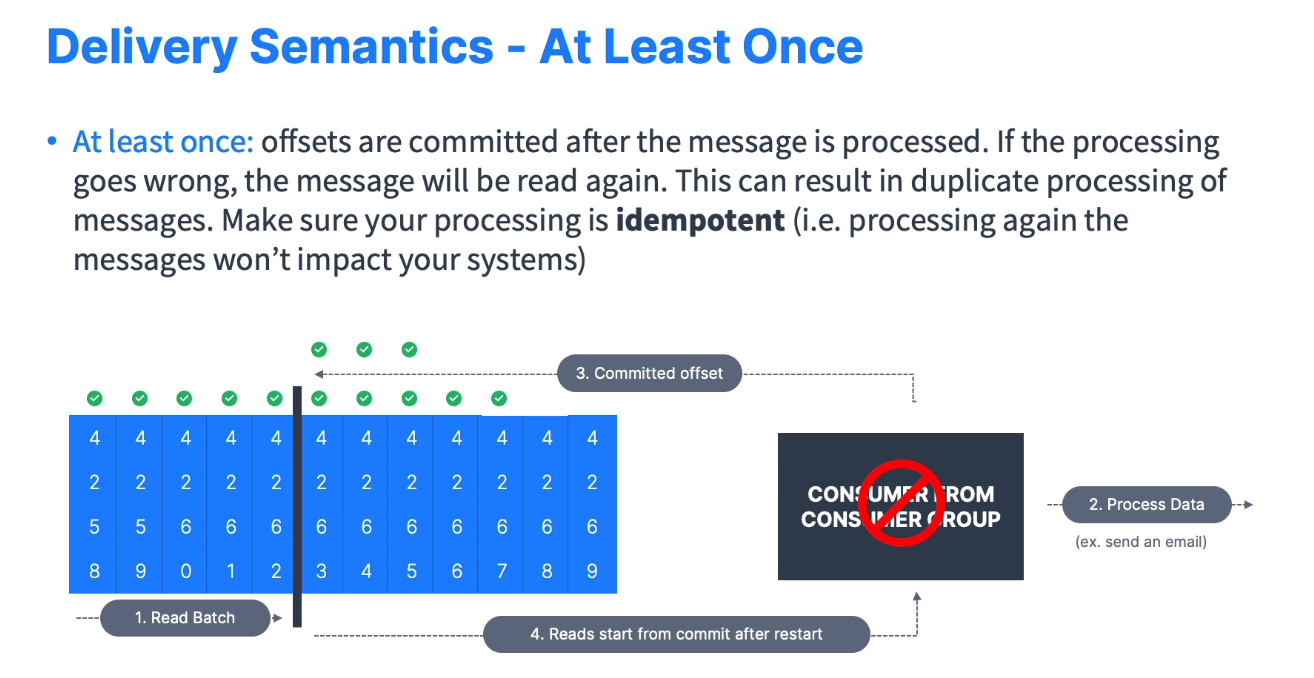

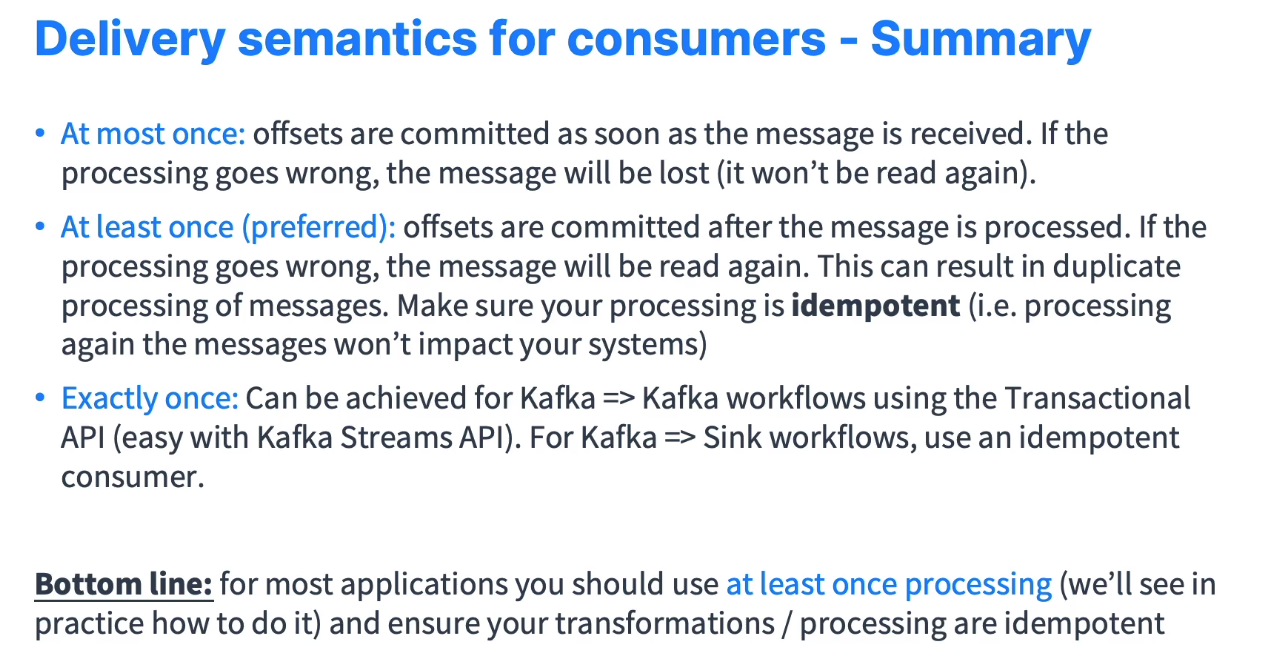

Delivery Semantics

-

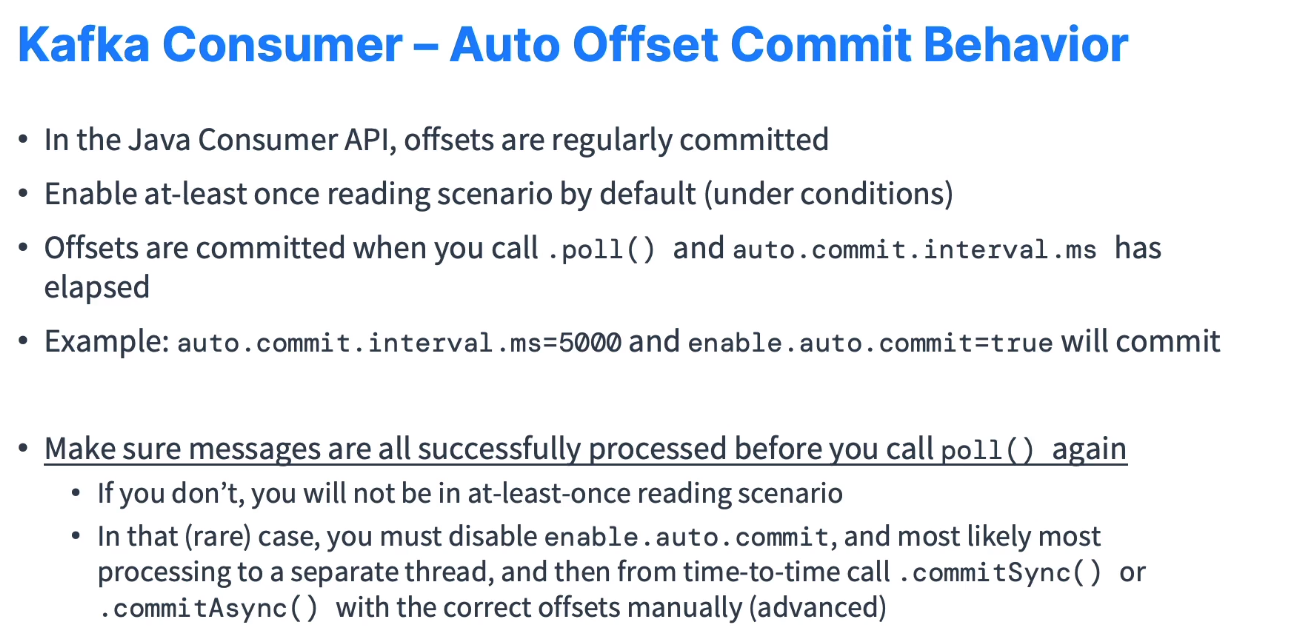

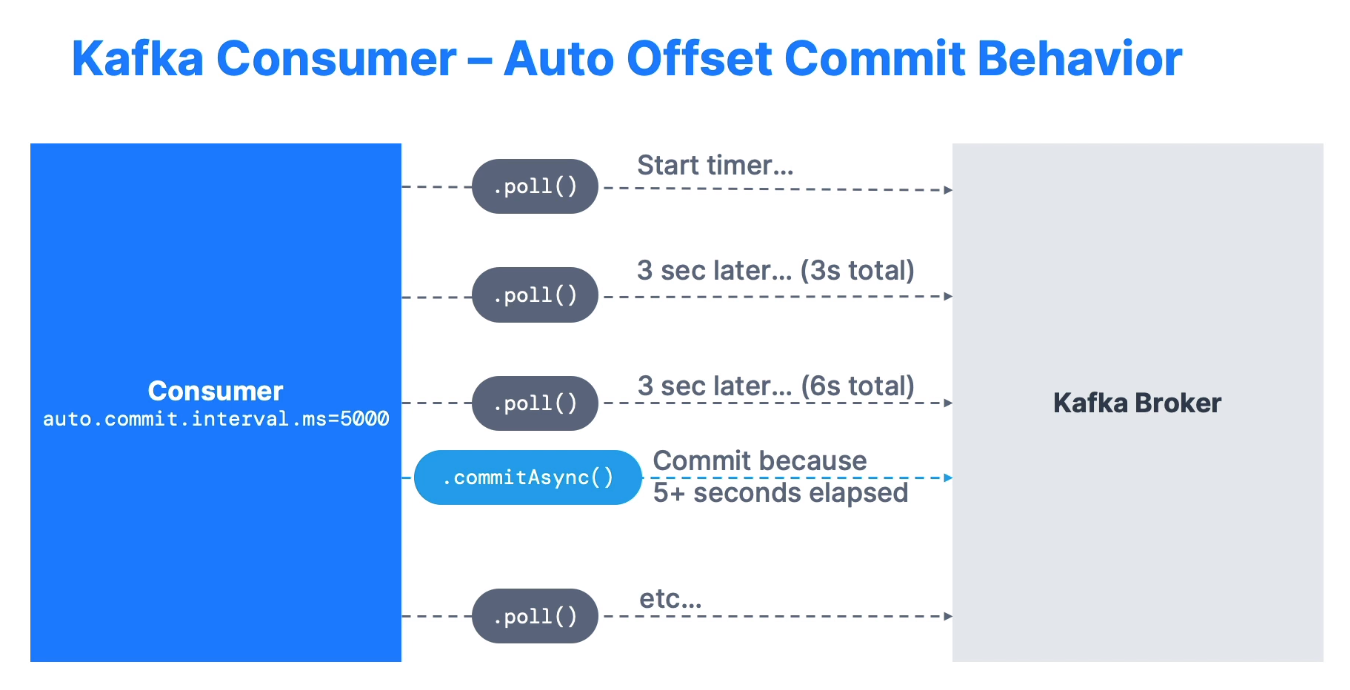

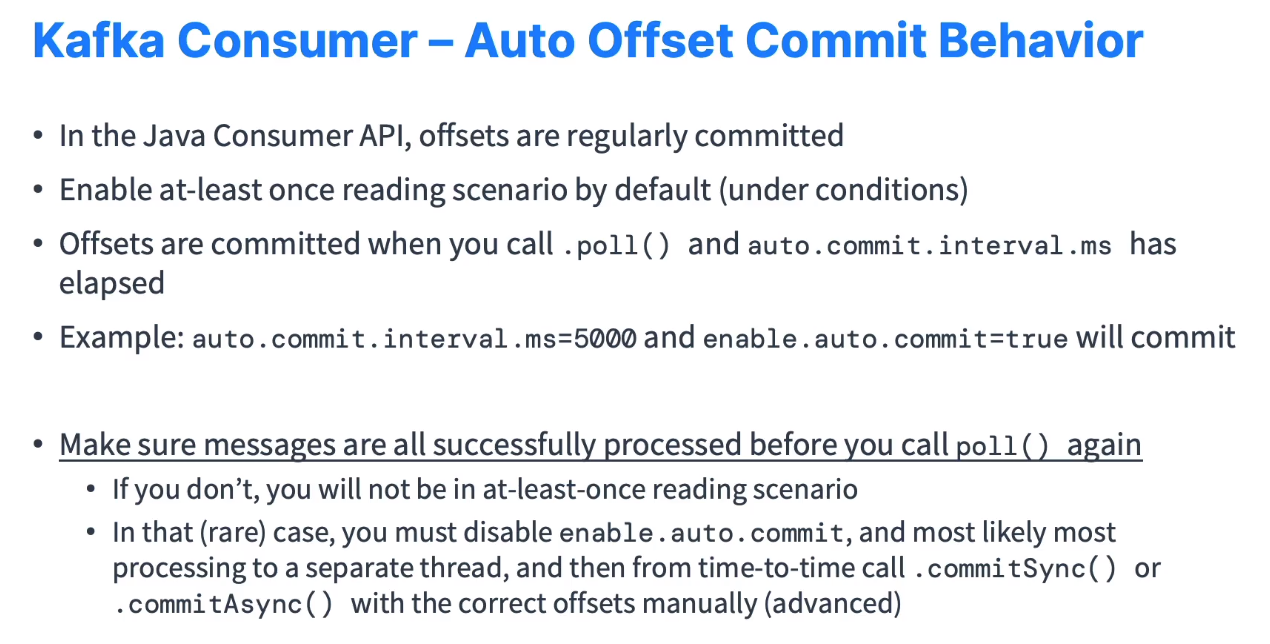

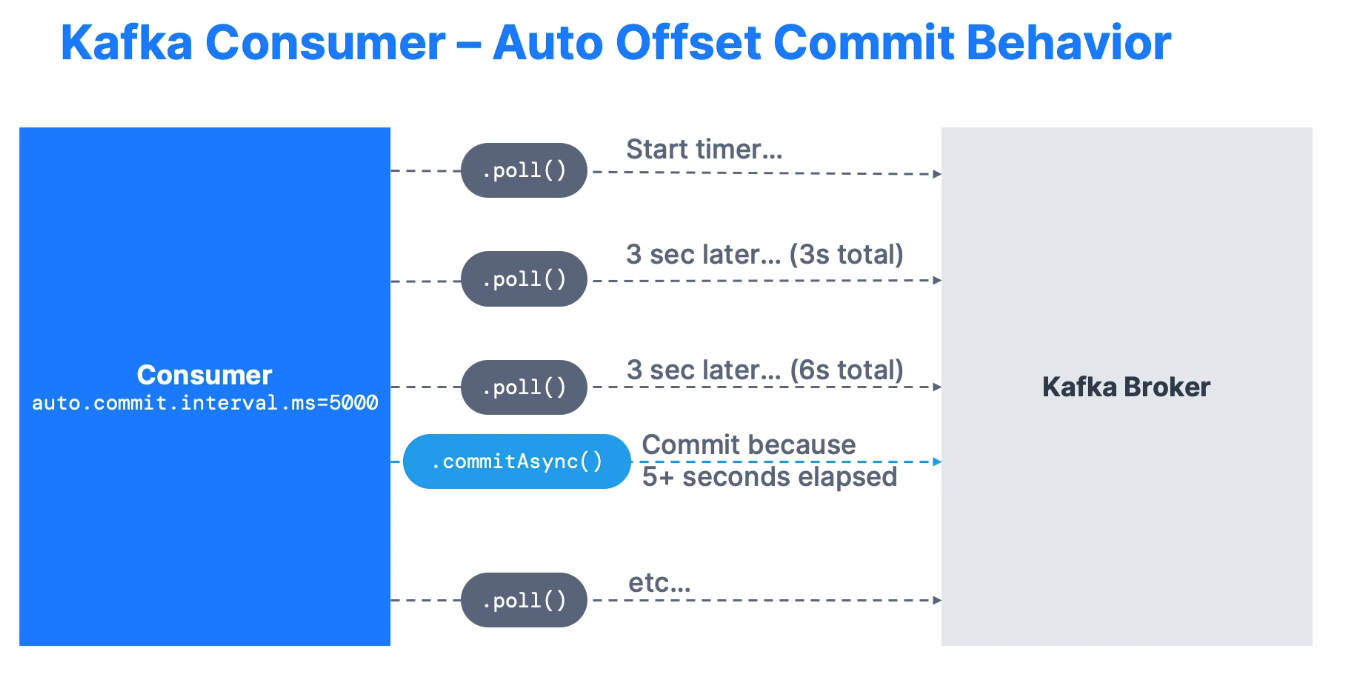

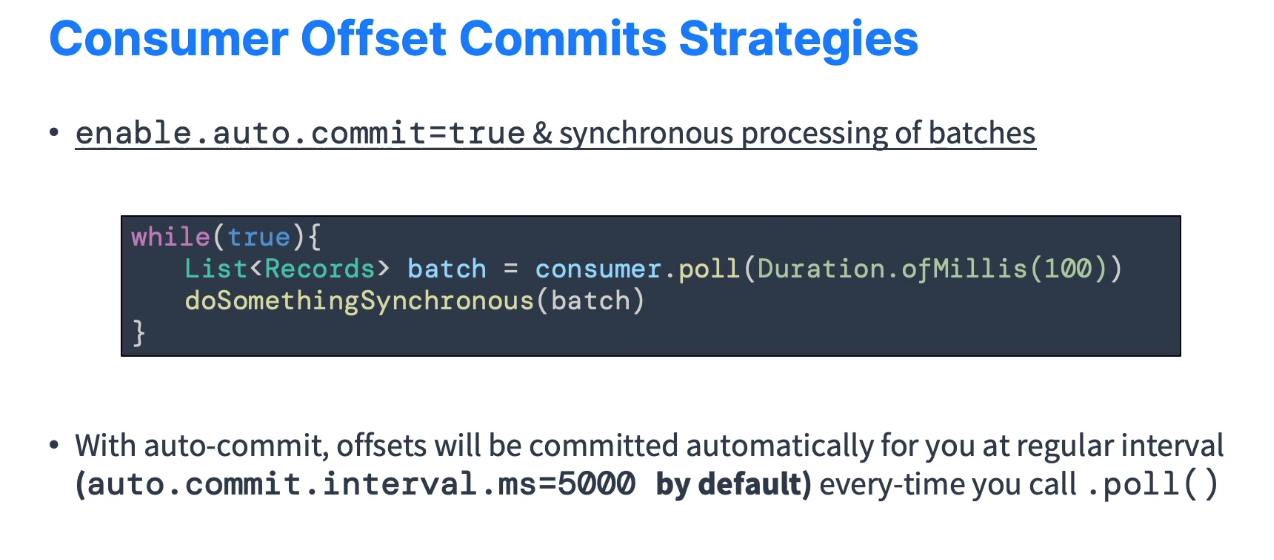

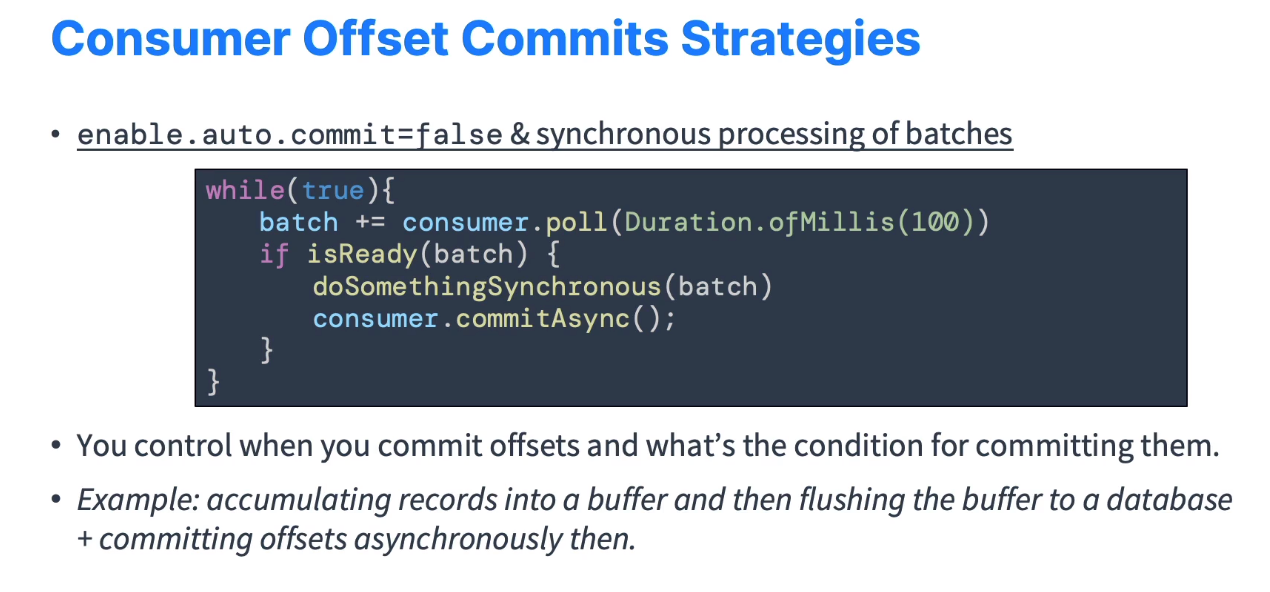

Consumer Offset Commit Strategy

-

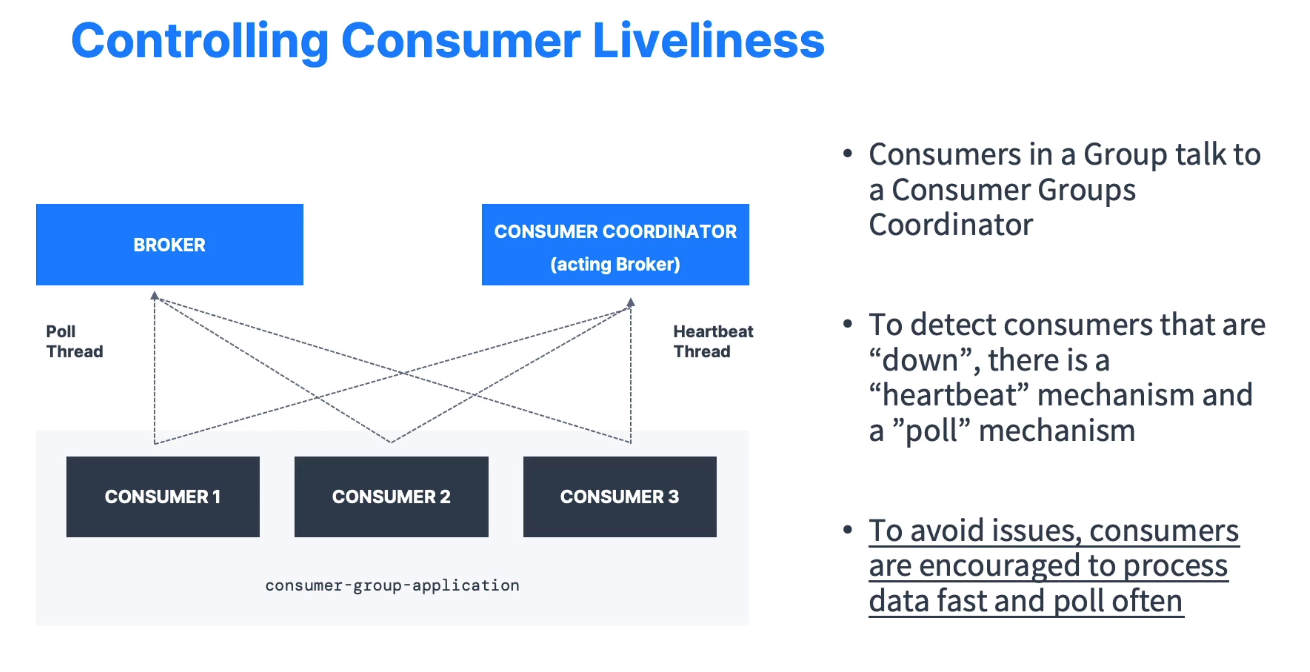

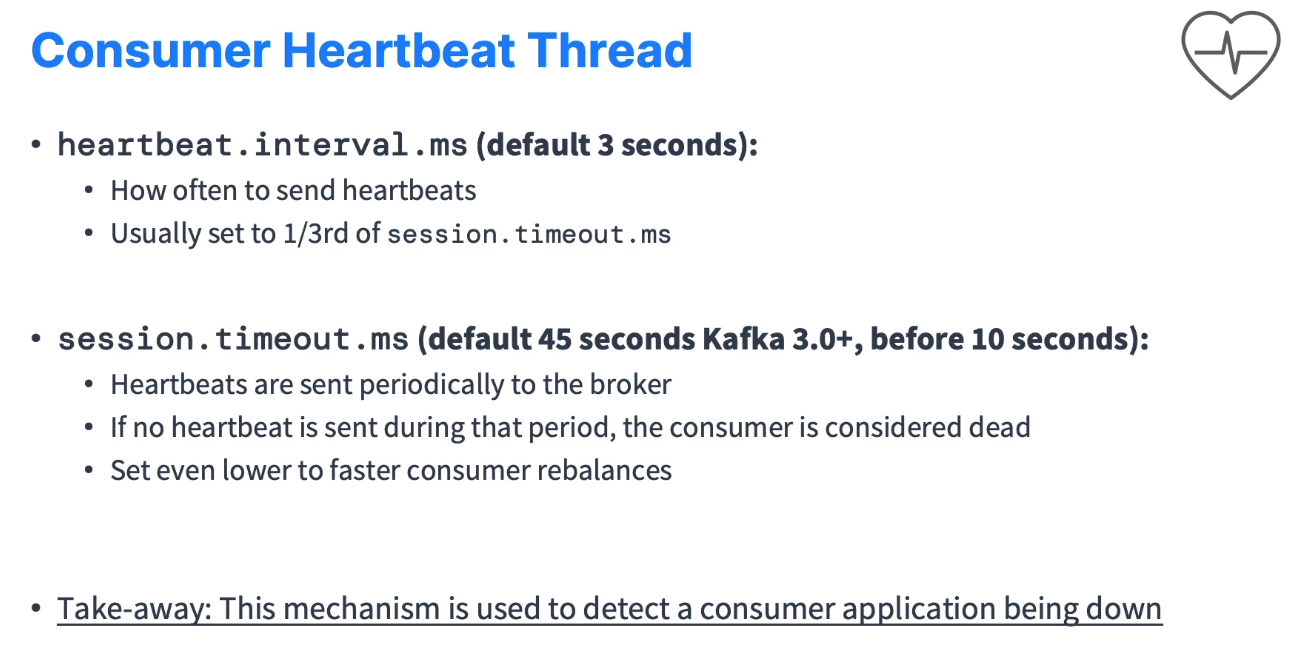

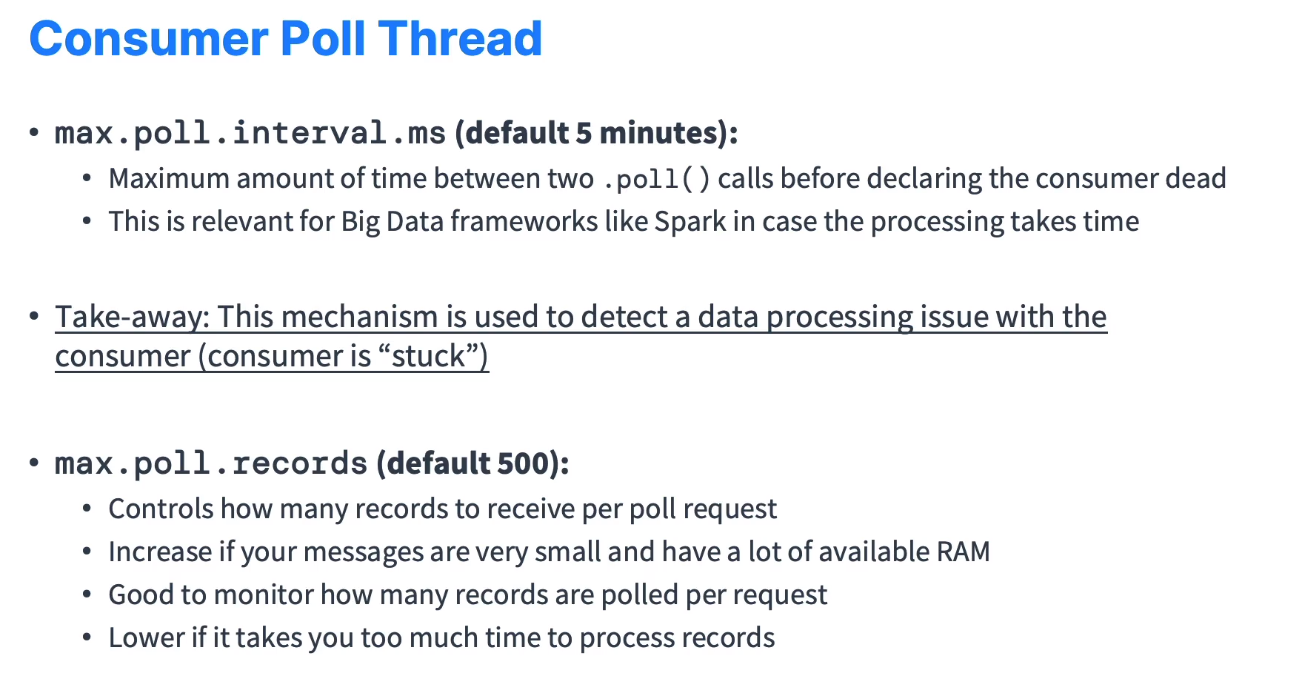

Controlling Consumer Liveliness