This repository contains some of my work on VAEs in PyTorch. At the moment I am running experiments on standard, non-hierarchical VAEs. The ConvVAE architecture is based on this repo, and MLPVAE on this.

- Standard Gaussian based VAE

- Gamma reparameterized rejection sampling by Naesseth et al. This implementation is based on the work of Mr. Altosaar.
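As a rough illustration of the two sampling schemes listed above, here is a minimal standard-library sketch. It is not the code in this repository. The function names (`gaussian_reparam`, `gamma_h`, `sample_gamma`) are hypothetical, the Gamma proposal uses the Marsaglia-Tsang transformation, and the handling of `alpha < 1` is the standard shape-augmentation trick, which may differ from what the actual implementation does.

```python
import math
import random


def gaussian_reparam(mu, log_var, eps):
    """Gaussian reparameterization: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return mu + math.exp(0.5 * log_var) * eps


def gamma_h(eps, alpha):
    """Marsaglia-Tsang transformation of eps ~ N(0, 1) into a Gamma(alpha, 1) proposal.

    Valid for alpha >= 1. The result is differentiable in alpha, which is
    what makes the rejection sampler reparameterizable.
    """
    d = alpha - 1.0 / 3.0
    return d * (1.0 + eps / math.sqrt(9.0 * d)) ** 3


def sample_gamma(alpha, rng):
    """Draw one Gamma(alpha, 1) sample by rejection sampling on eps."""
    if alpha < 1.0:
        # Shape augmentation (assumption): Gamma(alpha) = Gamma(alpha + 1) * U ** (1 / alpha)
        u = 1.0 - rng.random()  # in (0, 1], avoids 0 ** x edge cases
        return sample_gamma(alpha + 1.0, rng) * u ** (1.0 / alpha)
    d = alpha - 1.0 / 3.0
    while True:
        eps = rng.gauss(0.0, 1.0)
        z = gamma_h(eps, alpha)
        if z <= 0.0:
            continue  # proposal outside the support, reject
        u = 1.0 - rng.random()  # in (0, 1], so log(u) is defined
        # Marsaglia-Tsang acceptance test, written in terms of z = d * v
        if math.log(u) < 0.5 * eps * eps + d - z + d * math.log(z / d):
            return z
```

For example, `sample_gamma(3.0, random.Random(0))` draws a single Gamma(3, 1) value. In a VAE, the accepted `eps` would be kept so that gradients can flow through `gamma_h` with respect to `alpha`.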

Example 1:

    $ python3 main.py

Example 2:

    $ python3 main.py --model normal --epochs 5000

Example 3:

    $ python3 main.py --model gamma --b_size 256

Example 4:

    $ python3 main.py --model gamma --dataset mnist --z_dim 5

Usage:

    usage: main.py [-h] [--model M] [--epochs N] [--dataset D]
                   [--b_size B] [--z_dim Z]

    optional arguments:
      -h, --help   show this help message and exit
      --model M    vae model to use: gamma | normal, default is normal
      --epochs N   number of total epochs to run, default is 10000
      --dataset D  dataset to run experiments (cifar-10 or mnist)
      --b_size B   batch size
      --z_dim Z    size of the latent space
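For readers who want to reproduce a similar command-line interface, the options above could be declared with `argparse` roughly as follows. This is a sketch, not the repository's actual `main.py`: the function name `build_parser` is hypothetical, and the defaults for `--dataset`, `--b_size`, and `--z_dim` are assumptions, since the help text only states defaults for `--model` and `--epochs`.

```python
import argparse


def build_parser():
    """Build a parser mirroring the documented flags (defaults partly assumed)."""
    parser = argparse.ArgumentParser(prog="main.py")
    parser.add_argument("--model", metavar="M", default="normal",
                        choices=["gamma", "normal"],
                        help="vae model to use: gamma | normal, default is normal")
    parser.add_argument("--epochs", metavar="N", type=int, default=10000,
                        help="number of total epochs to run, default is 10000")
    # Assumed default: the help text does not say which dataset is the default.
    parser.add_argument("--dataset", metavar="D", default="mnist",
                        help="dataset to run experiments (cifar-10 or mnist)")
    # Assumed defaults for batch size and latent size.
    parser.add_argument("--b_size", metavar="B", type=int, default=128,
                        help="batch size")
    parser.add_argument("--z_dim", metavar="Z", type=int, default=4,
                        help="size of the latent space")
    return parser
```

Calling `build_parser().parse_args(["--model", "gamma", "--b_size", "256"])` corresponds to Example 3 and leaves the remaining options at their defaults.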

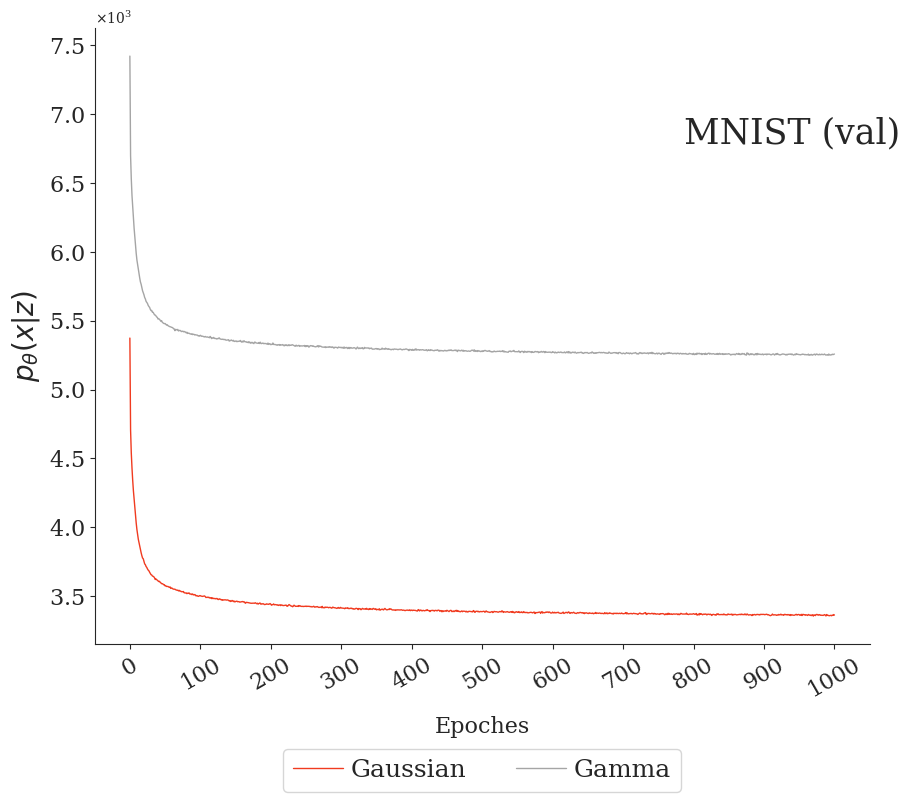

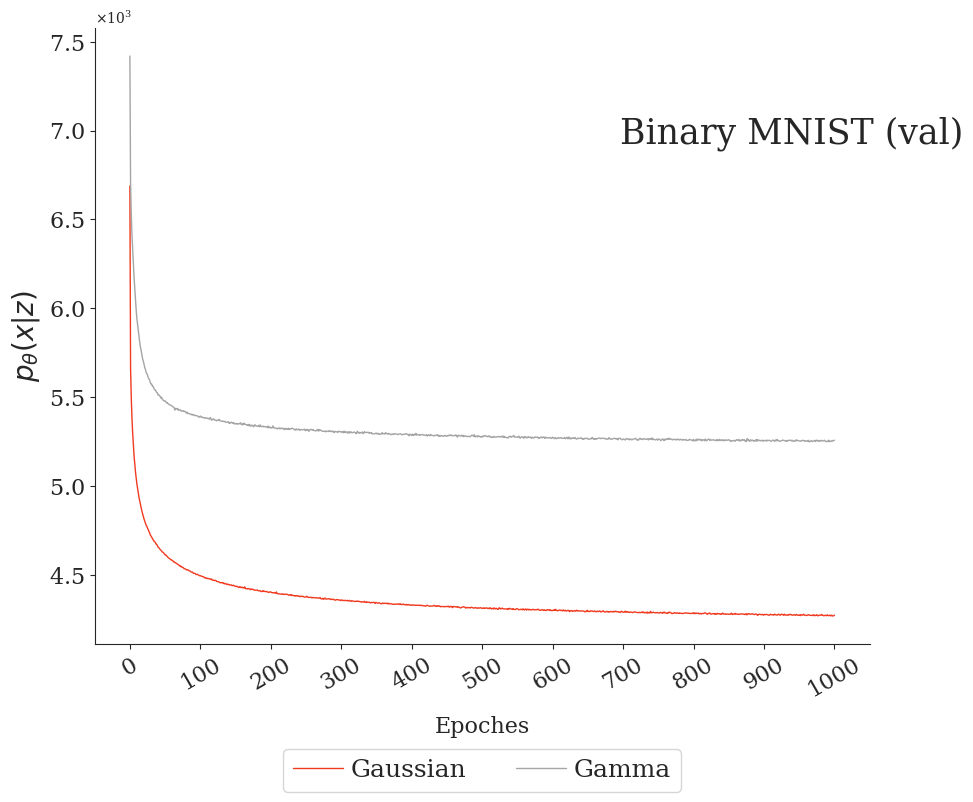

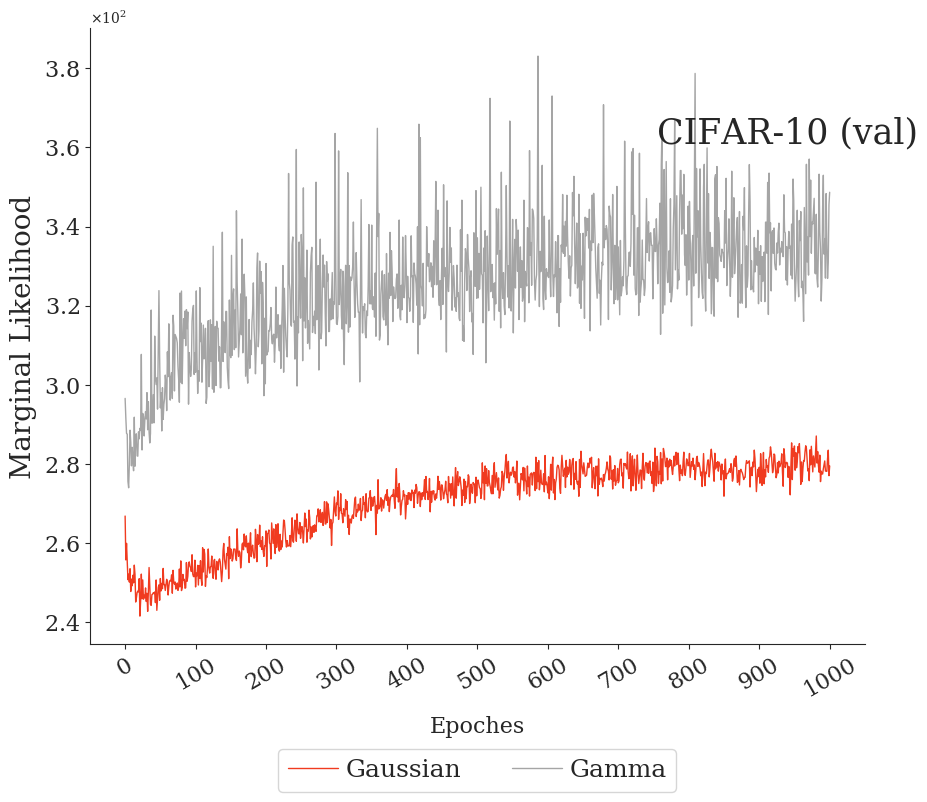

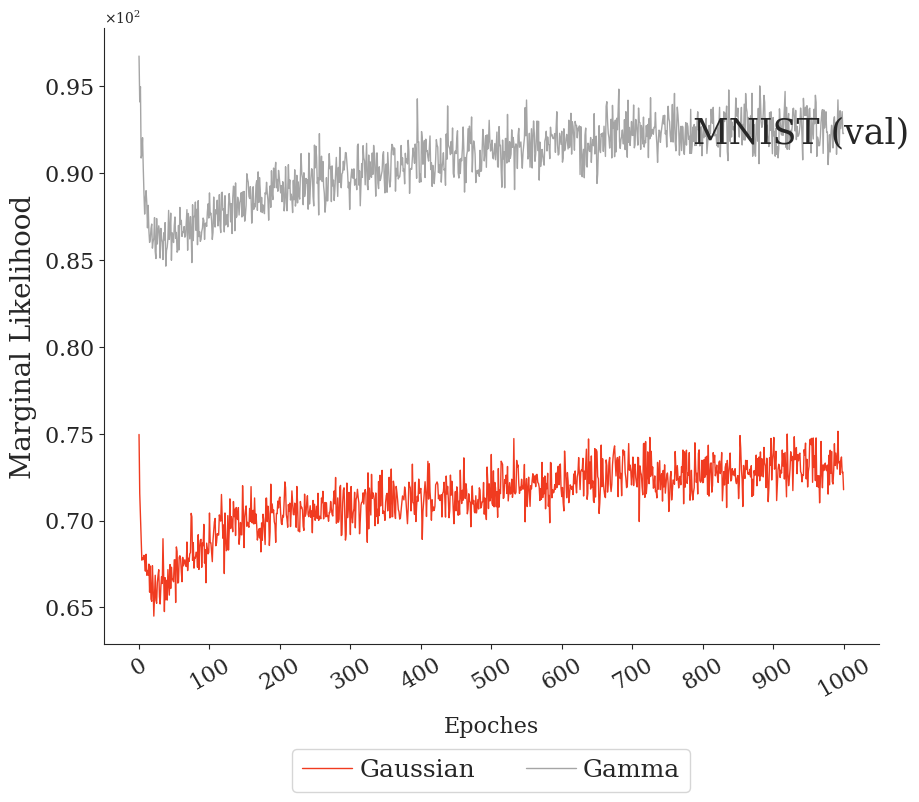

- z_dim = 4

- b_size = 128

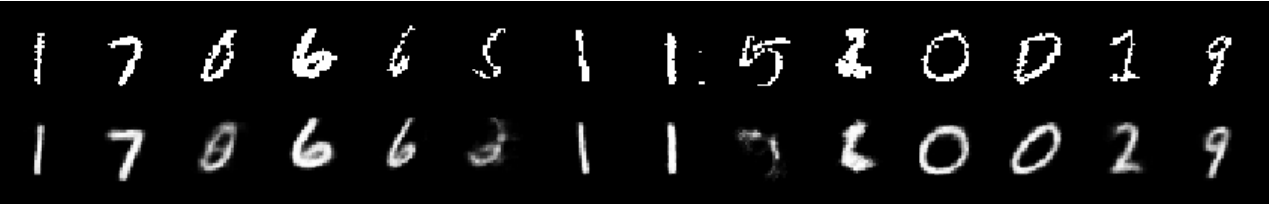

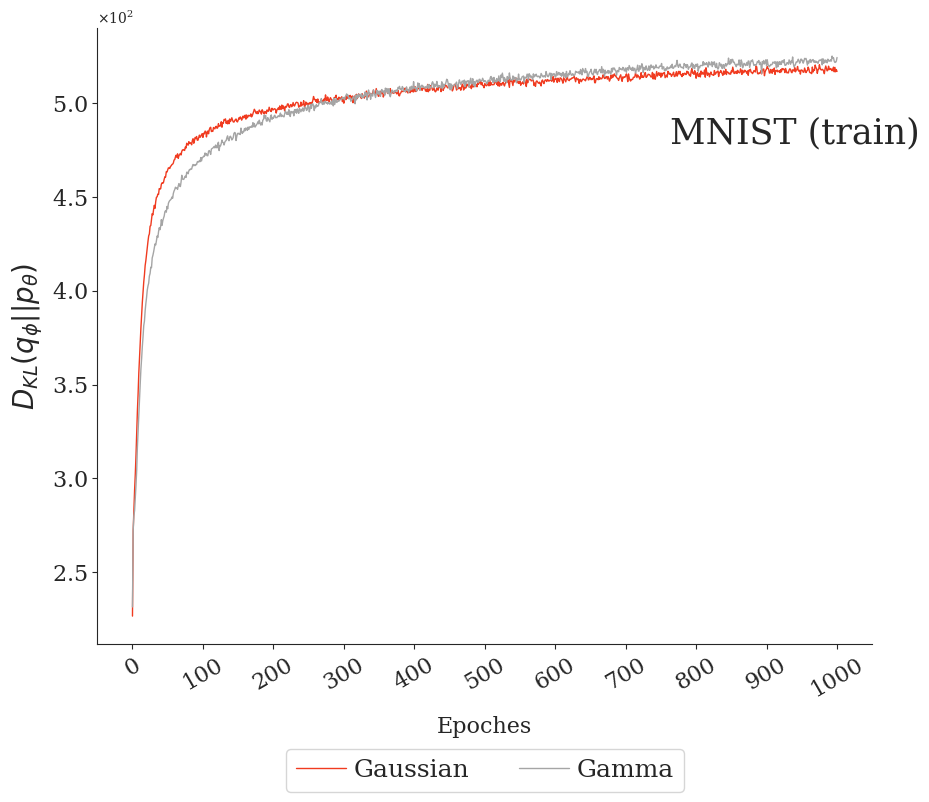

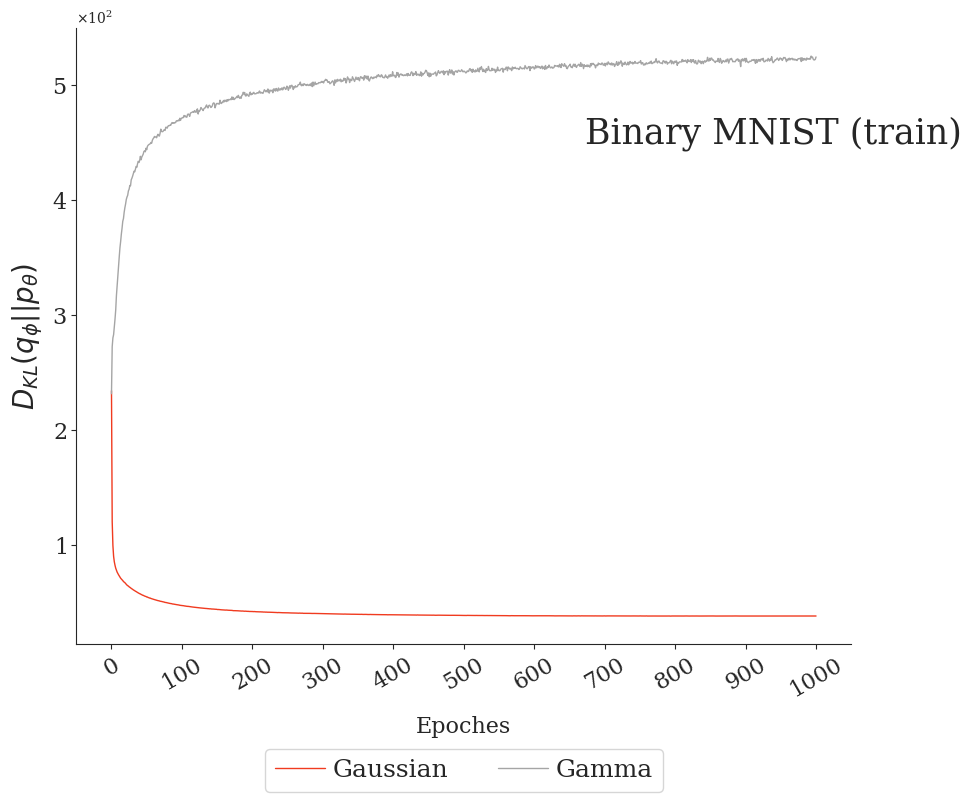

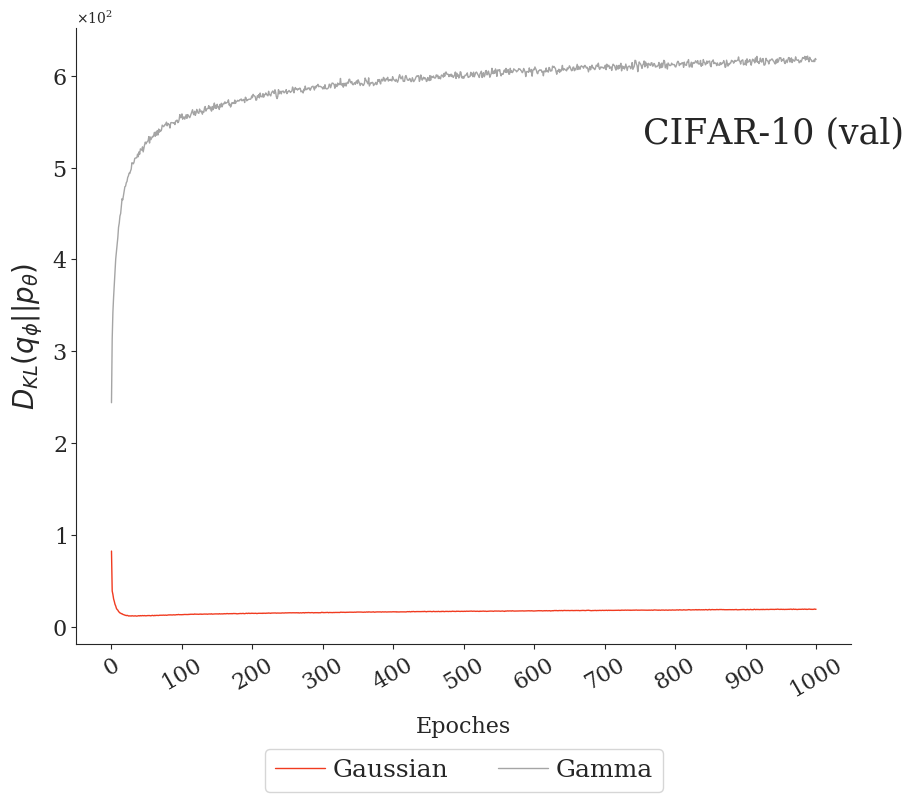

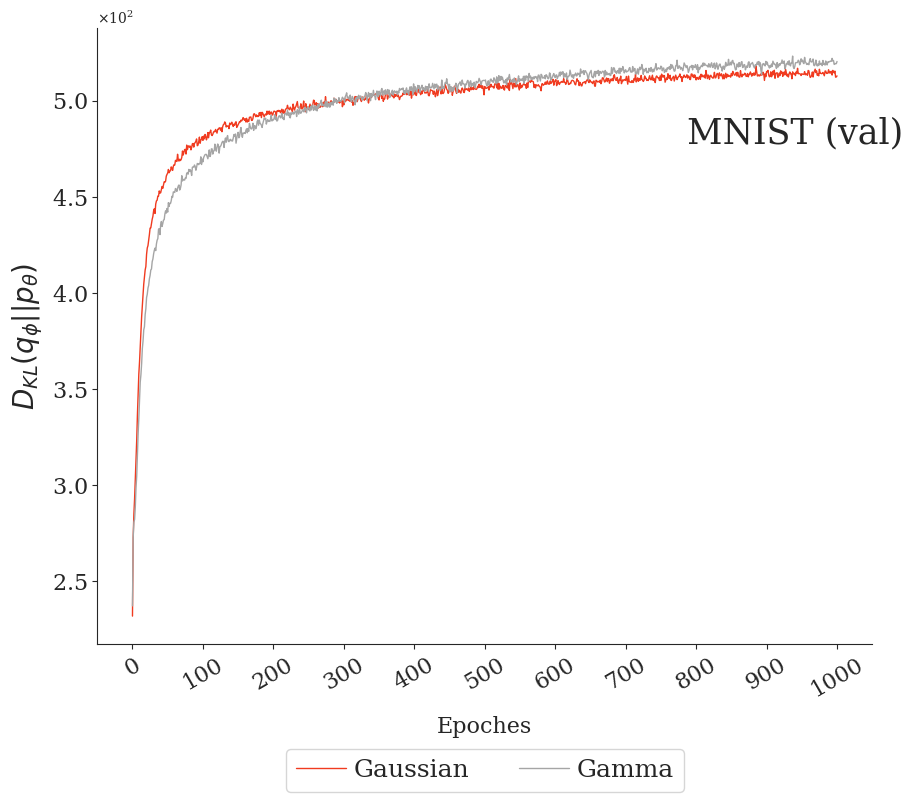

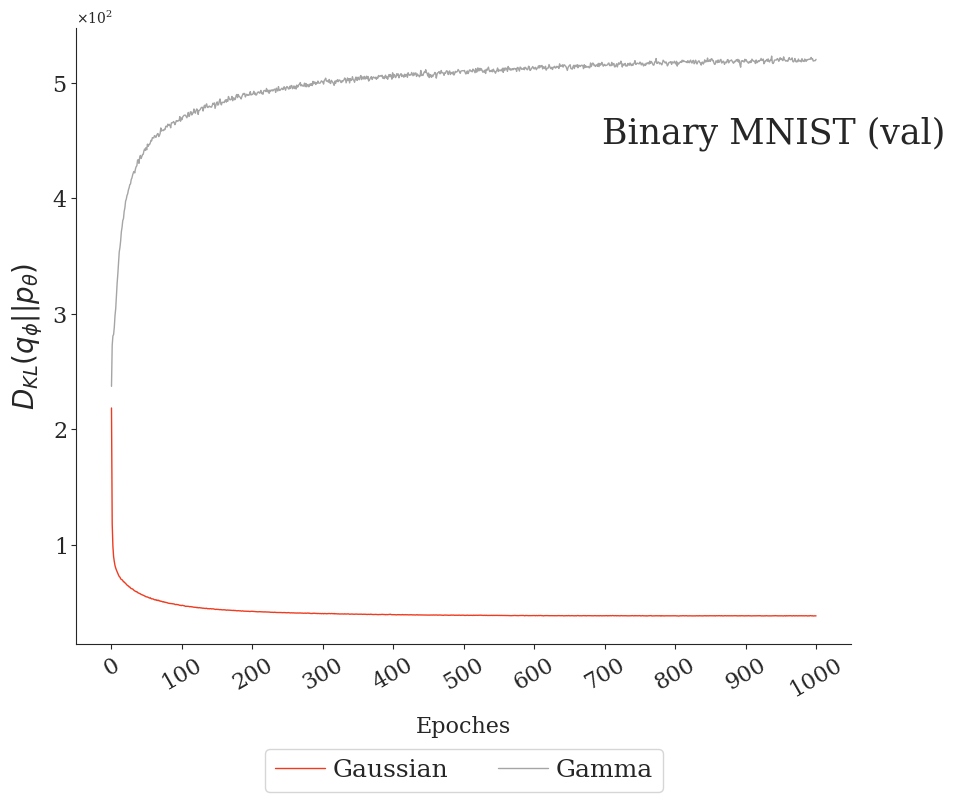

| CIFAR-10 | MNIST | Binary MNIST | |

|---|---|---|---|

| KL |  |

|

|

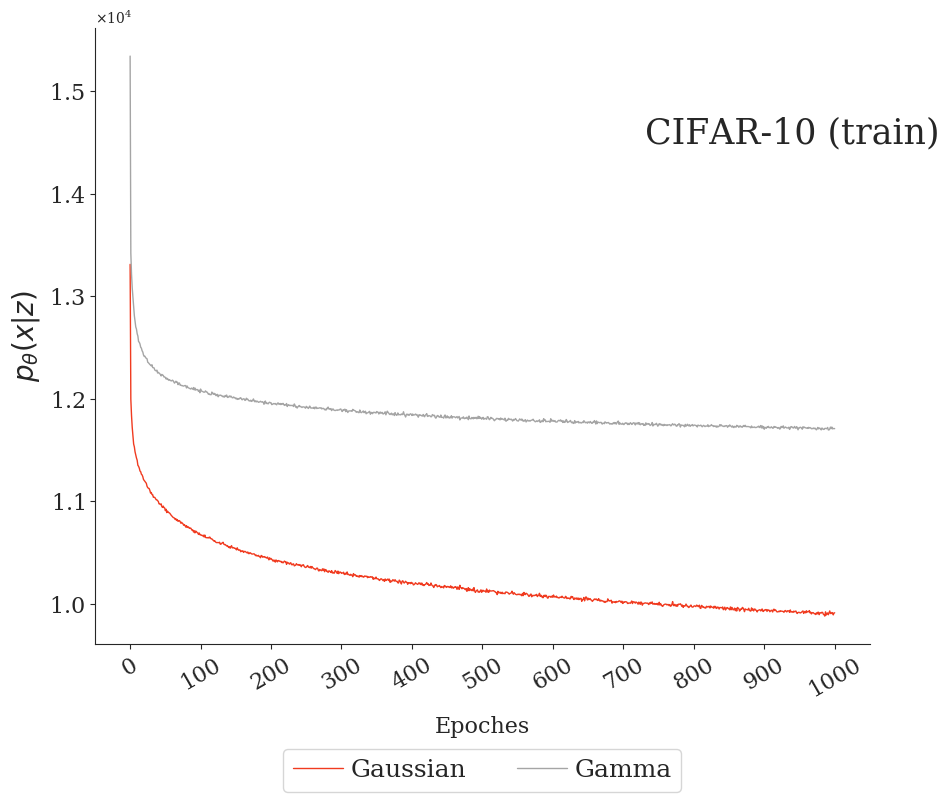

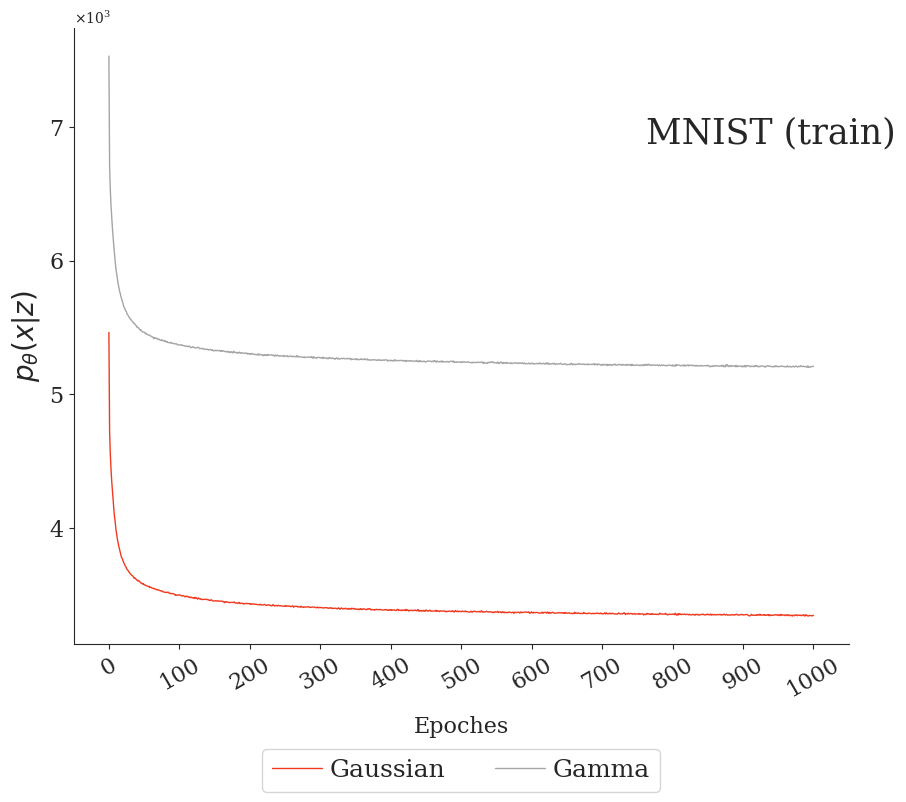

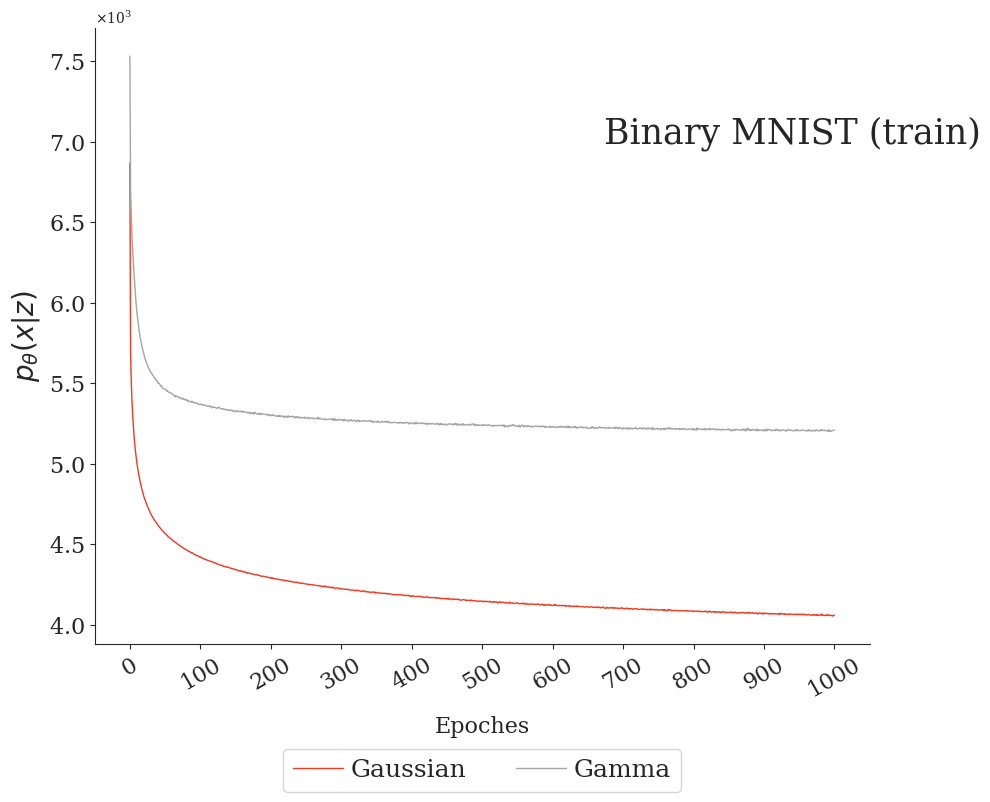

| Recons |  |

|

|

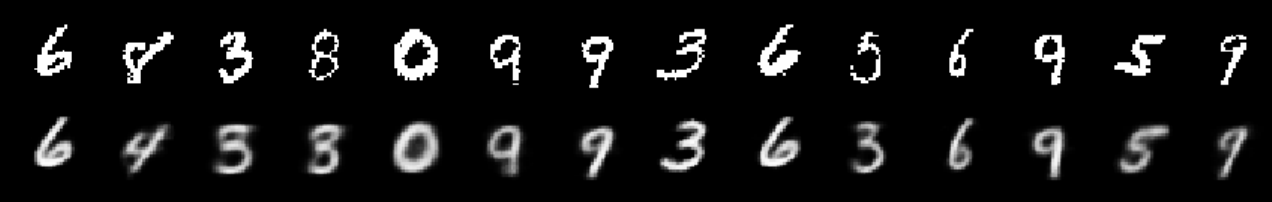

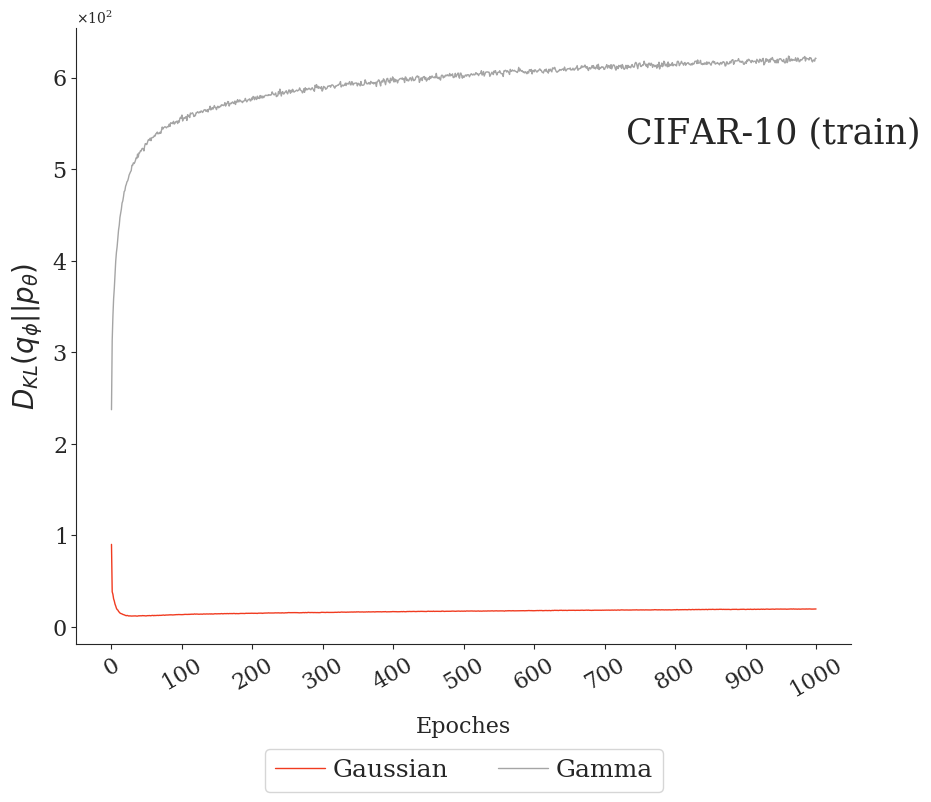

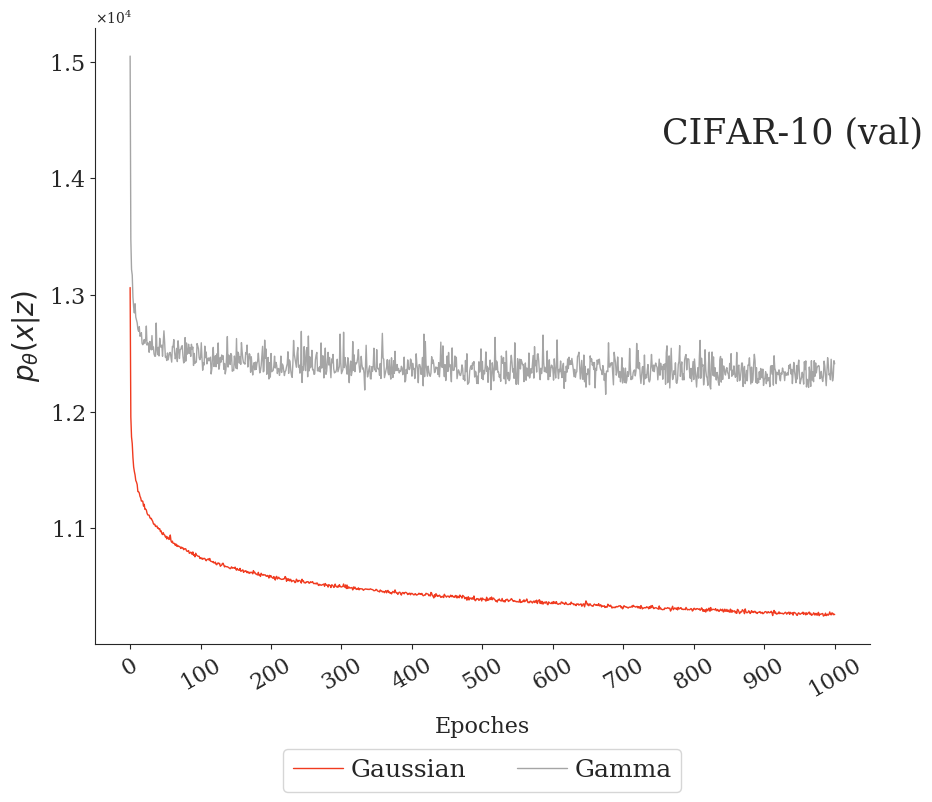

| CIFAR-10 | MNIST | Binary MNIST | |

|---|---|---|---|

| KL |  |

|

|

| Recons |  |

|

|

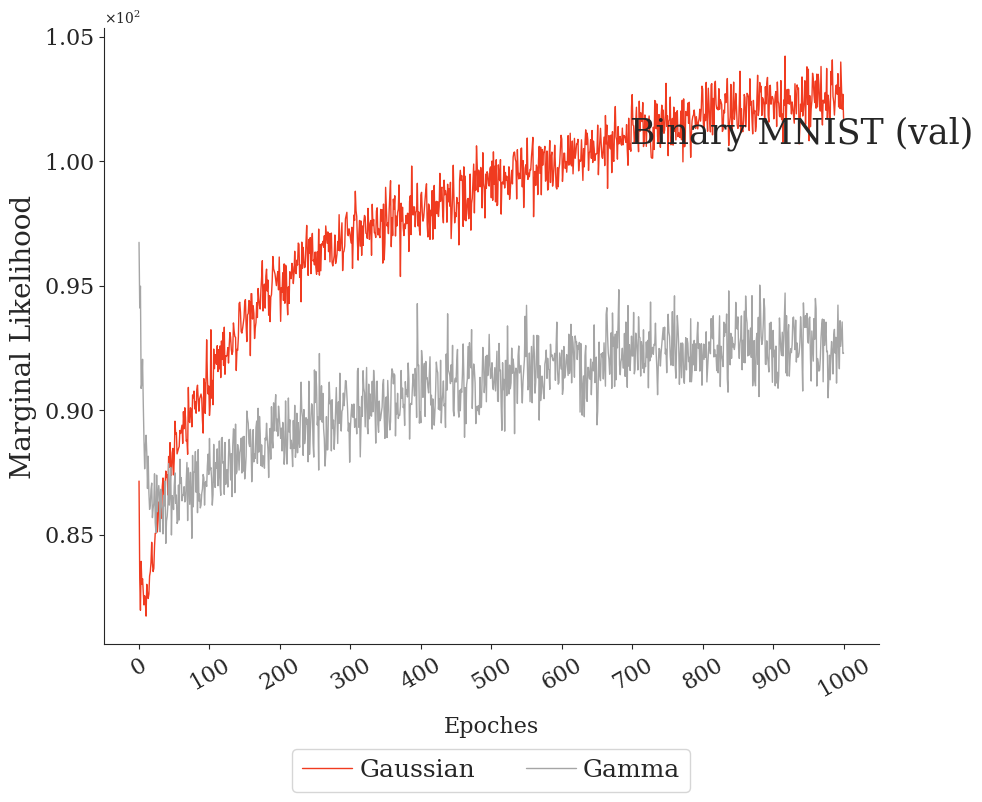

| M Likelihood |  |

|

|
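As a reminder of how the rows in the tables above relate, and assuming "KL" and "Recons" refer to the two terms of the usual evidence lower bound (ELBO) while "M Likelihood" refers to the marginal likelihood $\log p(x)$ that the ELBO bounds, the decomposition is:

$$
\log p(x) \;\ge\; \underbrace{\mathbb{E}_{q(z \mid x)}\big[\log p(x \mid z)\big]}_{\text{reconstruction term}} \;-\; \underbrace{\mathrm{KL}\big(q(z \mid x)\,\|\,p(z)\big)}_{\text{KL term}}
$$

For the standard Gaussian VAE with $q(z \mid x) = \mathcal{N}(\mu, \operatorname{diag}(\sigma^2))$ and $p(z) = \mathcal{N}(0, I)$, the KL term has the closed form:

$$
\mathrm{KL}\big(q(z \mid x)\,\|\,p(z)\big) = \frac{1}{2} \sum_{j=1}^{d} \left( \mu_j^2 + \sigma_j^2 - 1 - \log \sigma_j^2 \right)
$$

Here $d$ is the latent dimension (`z_dim`). How the marginal likelihood was estimated for these experiments is not stated in this document.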

- To Mr. Altosaar (@altosaar) for helping me with questions I had about his implementation and several other questions on the subject of VAEs.