Watch this video made by Omnipotent of RaidForums or follow the tutorial below (Thanks):

https://www.youtube.com/watch?v=3r8SQT7mNHk

Read the #FAQ at the bottom of this page before submitting a issue.

From the project folder open CMD/Terminal and run the command below:

pip install -r requirements.txt

Start:

python start_ofd.py or double click start_ofd.py

Open and edit:

config.json

[auth]

Fill in the following:

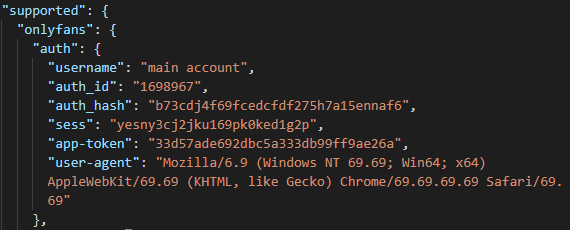

{"auth_id":"your_auth_id"}{"sess":"your_sess_token"}{"user-agent":"your_user-agent"}

If you're using 2FA or have these cookies:

{"auth_hash":"your_auth_hash"}{"auth_uniq_":"your_auth_uniq_"}

Optional change:

{"fp":"your_fp"}{"app-token":"your_token"}

Only enter in cookies that reflect your OnlyFans account otherwise, you'll get auth errors.

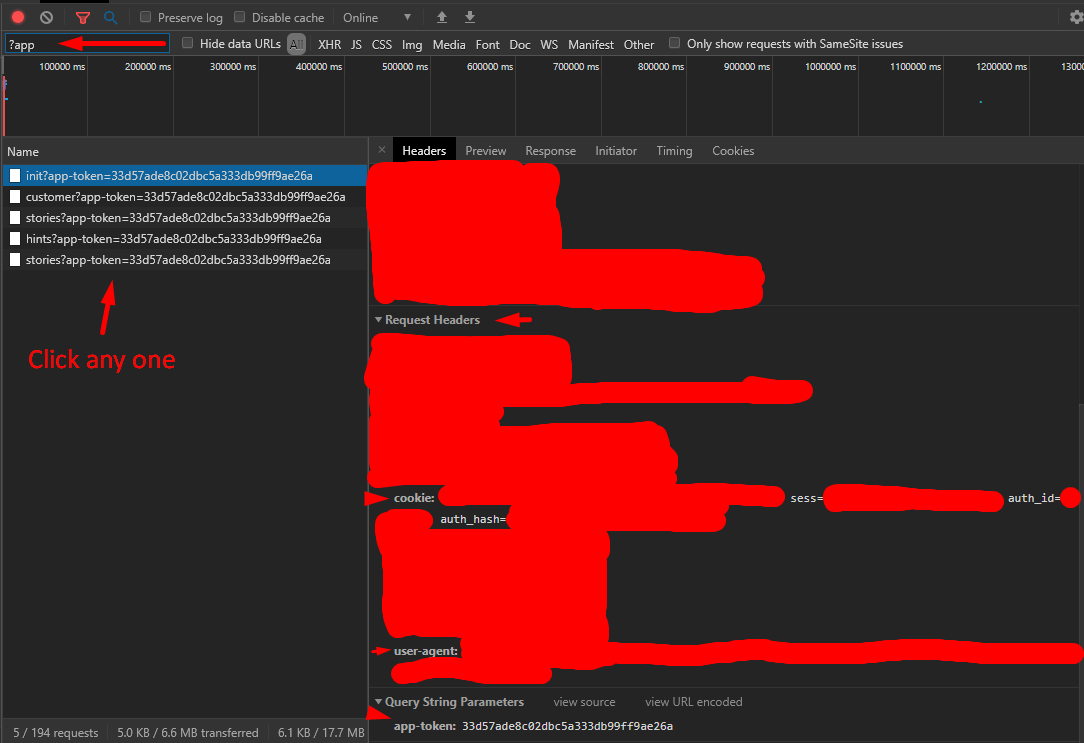

Go to www.onlyfans.com and login, open the network debugger, then check the image below on how to get said above cookies

Your auth config should look similar to this

python start_ofd.py or double click start_ofd.py

Enter in inputs as prompted by console.

Open:

config.json (Open with a texteditor)

[settings]

Default = ["{site_name}"]

{site_name} = The site's name you're scraping

Leave directory empty if you want files to be downloaded in the script folder.

If you're going to fill, please remember to use forward ("/") slashes only.

Default = ""

This puts each media file into a folder.

See file_name_format below for a list of options to use.

You don't need to use a unique identifier for file_directory_format.

Default = "{file_name}.{ext}"

{post_id} = The posts's ID

{media_id} = The media's ID [UNIQUE]

{file_name} = The media's file name [UNIQUE]

{username} = The account's username

{date} = The post's creation date

{text} = The media's text (You will get errors; don't use this)

{ext} = The media's file extension

Example: {date}/{text}-{file_name}.{ext}

Warning: It's important to keep a unique identifier next to .{ext}. By default it's {file_name}.

Default = ""

Ideal = "50"

Max = "255"

When you use {text} in file_name_format, a limit of how many characters can be set by inputting a number.

Default = ""

When you start the script you will be presented with the following scraping choices:

onlyfans = onlyfans

justforfans = justforfans

You can automatically choose what you want to scrape if you add it in the config file.

Default = ""

When you start the script you will be presented with the following scraping choices:

Everything = "a"

Images = "b"

Videos = "c"

Audios = "d"

You can automatically choose what you want to scrape if you add it in the config file.

Default = false

If set to true, the script will scrape all the names.

Default = true

If set to false, you'll be given the option to scrape individual apis.

Default = "json"

a = "json"

b = "csv"

You can export an archive to different formats.

Default = true

If set to false, any file with the same name won't be downloaded.

Default = "%d-%m-%Y"

If you live in the USA and you want to use the incorrect format, use the following:

"%m-%d-%Y"

Default = -1

When number is set below 1, it will use all threads.

Set a number higher than 0 to limit threads.

Default = 0

Type: Float

Space is calculated in GBs.

0.5 is 500mb, 1 is 1gb,etc.

When a drive goes below minimum drive space, it will move onto the next drive or go into an infinite loop until drive is above the minimum space.

Default = []

Supported webhooks:

Discord

Data is sent whenever you've completely downloaded a model.

You can also put in your own custom url and parse the data.

Need another webhook? Open an issue.

Default = false

If set to true the scraper run once and exit upon completion, otherwise the scraper will give the option to run again. This is useful if the scraper is being executed by a cron job or another script.

Default = true

If set to false, the script will run once and ask you to input anything to continue.

Default = 0

When infinite_loop is set to true this will set the time in seconds to pause the loop in between runs.

Default = []

Example = ["s", "gif"]

Input boards names that you want to automatically scrape.

Default = []

Example = ["ignore", "me"]

Any words you input, the script will ignore any content that contains these words.

Default = ""

a = "paid"

b = "free"

This setting will not include any paid or free accounts in your subscription list.

Example: "ignore_type": "paid"

This choice will not include any accounts that you've paid for.

Default = true

Set to false if you don't want to save metadata.

Default = true

Set to false if you want to use the old file structure.

If you do set to false, it'll be incompatable.

Default = ""

This setting will not include any blacklisted usernames when you choose the "scrape all" option.

Go to https://onlyfans.com/my/lists and create a new list; you can name it whatever you want but I called mine "Blacklisted".

Add the list's name to the config.

Example: "blacklist_name": "Blacklisted"

You can create as many lists as you want.

-m

The script will download metadata files only.

Before troubleshooting, make sure you're using Python 3.9.

Error: Access Denied / Auth Loop

Make sure your cookies and user-agent are correct. Use this tool Cookie Helper

AttributeError: type object 'datetime.datetime' has no attribute 'fromisoformat'

Only works with Python 3.7 and above.

I can't see ".settings" folder'

Make sure you can see hidden files

I'm getting authed into the wrong account

Enjoy the free content. | This has been patched.

I'm using Linux OS and something isn't working.

Script was built on Windows 10. If you're using Linux you can still submit an issue and I'll try my best to fix it.

Am I able to bypass paywalls with this script?

Hell yeah! My open source script can bypass paywalls for free. Tutorial

Do OnlyFans or OnlyFans models know I'm using this script?

No, but there is identifiable information in the metadata folder which contains your IP address, so don't share it unless you're using a proxy/vpn or just don't care.

Do you collect session information?

No. The code is on Github which allows you to audit the codebase yourself. You can use wireshark or any other network analysis program to verify the outgoing connections are respective to the modules you chose.

Does this script violate the Onlyfans.com ToS?

No... OnlyFans themselves allow users to download content as stated in their Terms of Service

8.2.2 you may print or download one copy of a reasonable number of pages of the Website for your own personal, non-commercial use and not for further reproduction, publication, or distribution."

Disclaimer:

OnlyFans is a registered trademark of Fenix International Limited.

The contributors of this script isn't in any way affiliated with, sponsored by, or endorsed by Fenix International Limited.

The contributors of this script are not responsible for the end users' actions.