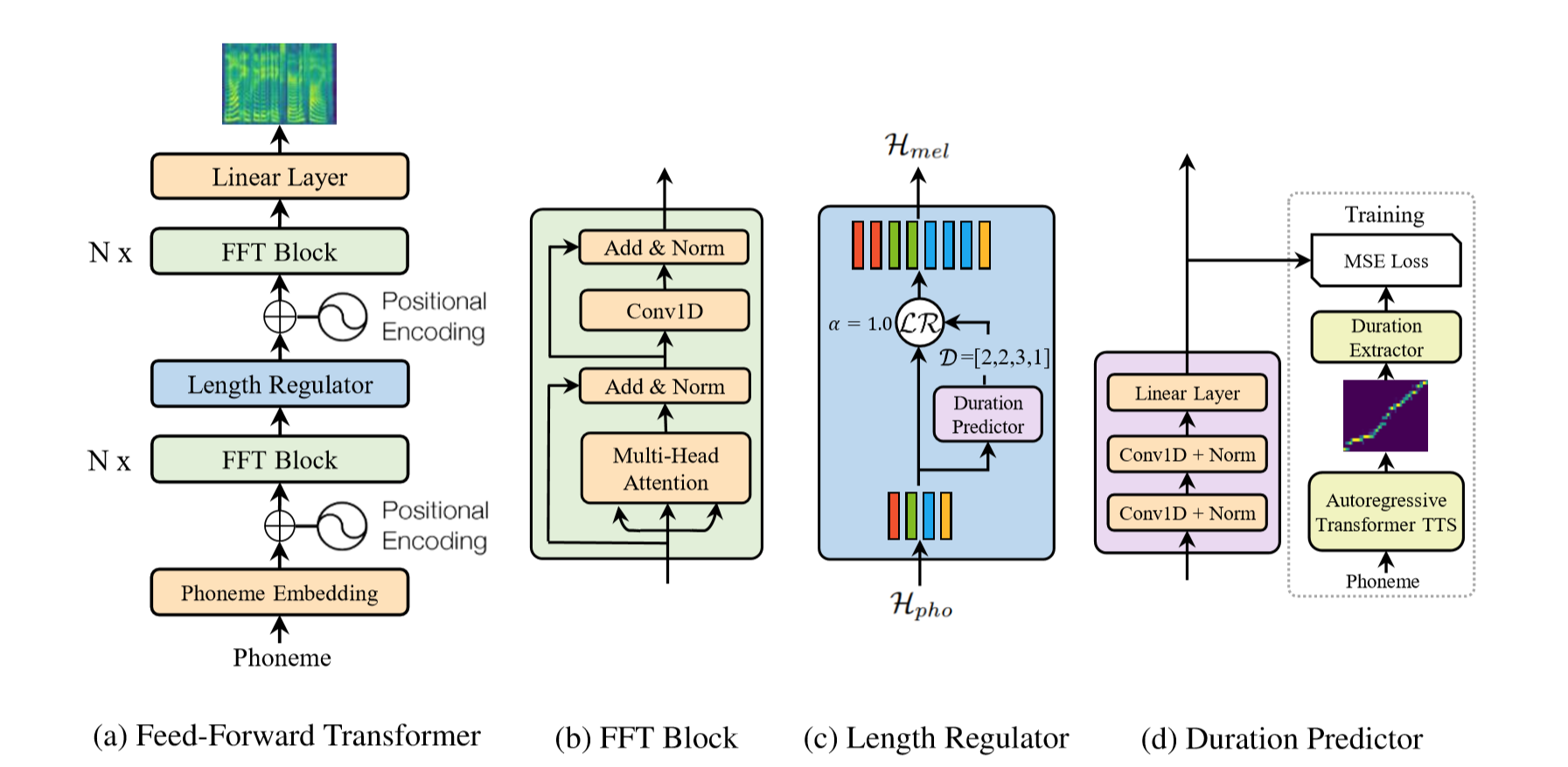

Inspired by Microsoft's FastSpeech, we modified Tacotron (forked from fatchord's WaveRNN) to generate speech in a single forward pass, using a duration predictor to align text and generated mel spectrograms. Hence, we call the model ForwardTacotron.

The model has the following advantages:

- Robustness: No repeats or failed attention modes on challenging sentences.

- Speed: Generating a mel spectrogram takes about 0.04s on a GeForce RTX 2080.

- Controllability: It is possible to control the speed of the generated utterance.

- Efficiency: In contrast to FastSpeech and Tacotron, ForwardTacotron does not use any attention. Hence, the required memory grows linearly with text size, which makes it possible to synthesize long articles in one pass.
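The idea behind the duration predictor and the speed control can be illustrated with a minimal length-regulation sketch. This is not the repository's implementation: `regulate_length` and its `speed` parameter are hypothetical names chosen for illustration, and plain Python lists stand in for tensors. Each per-symbol state is repeated for its predicted number of frames, so the output length is the sum of the durations and no attention matrix is needed.

```python
from typing import List, Sequence


def regulate_length(states: Sequence[Sequence[float]],
                    durations: Sequence[float],
                    speed: float = 1.0) -> List[List[float]]:
    """Repeat each per-symbol state vector for its predicted number of frames.

    `speed` is a hypothetical knob for this sketch: durations are divided by
    it, so values above 1 shorten the output and values below 1 lengthen it.
    """
    if len(states) != len(durations):
        raise ValueError("need exactly one duration per input symbol")
    if speed <= 0:
        raise ValueError("speed must be positive")

    frames: List[List[float]] = []
    for state, duration in zip(states, durations):
        # Round the scaled duration to a whole, non-negative frame count.
        repeats = max(0, int(duration / speed + 0.5))
        frames.extend(list(state) for _ in range(repeats))
    return frames


# Three input symbols with toy 2-dimensional states and predicted durations.
states = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
durations = [2, 1, 3]

normal = regulate_length(states, durations)
faster = regulate_length(states, durations, speed=2.0)
print(len(normal), len(faster))
```

Because the expansion is a single pass over the input symbols, the work and memory in this sketch scale with the number of symbols plus the number of output frames.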

You can use this notebook below to synthesize your own text:

Make sure you have:

- Python >= 3.6

- PyTorch 1 with CUDA
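A quick way to verify these prerequisites is a small check script. The following is a sketch, not part of the repository: `check_environment` is a hypothetical helper name, and it only relies on `sys.version_info`, `torch.__version__` and `torch.cuda.is_available()`.

```python
import sys


def check_environment(min_python=(3, 6)):
    """Report whether the interpreter and PyTorch meet the stated requirements."""
    report = {
        "python_ok": sys.version_info >= min_python,
        "torch_version": None,
        "cuda_available": False,
    }
    try:
        import torch  # imported lazily so the check also runs without PyTorch
    except ImportError:
        return report
    report["torch_version"] = torch.__version__
    report["cuda_available"] = torch.cuda.is_available()
    return report


report = check_environment()
print(report)
```

If `torch_version` is `None` or `cuda_available` is `False`, install a CUDA-enabled PyTorch build before continuing.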

Then install the rest with pip:

pip install -r requirements.txt

(1) Download and preprocess the LJSpeech Dataset.

python preprocess.py --path /path/to/ljspeech

(2) Train Tacotron with:

python train_tacotron.py

(3) Use the trained Tacotron model to create alignment features with:

python train_tacotron.py --force_align

(4) Train ForwardTacotron with:

python train_forward.py

(5) Generate sentences with the Griffin-Lim vocoder:

python gen_forward.py --alpha 1 --input_text "this is whatever you want it to be" griffinlim

As in the original repo, you can also use a trained WaveRNN vocoder:

python gen_forward.py --input_text "this is whatever you want it to be" wavernn
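Steps (1) to (5) can also be chained from a single script. The sketch below is a convenience wrapper under stated assumptions, not something shipped with the repository: `pipeline_commands` and `run_pipeline` are hypothetical helpers, and the commands are copied verbatim from the steps above. By default it only prints the commands, since the training steps are long-running.

```python
import shlex
import subprocess
from typing import List


def pipeline_commands(dataset_path: str, text: str) -> List[List[str]]:
    """Return the commands from steps (1) to (5), in order, as argument lists."""
    return [
        ["python", "preprocess.py", "--path", dataset_path],
        ["python", "train_tacotron.py"],
        ["python", "train_tacotron.py", "--force_align"],
        ["python", "train_forward.py"],
        ["python", "gen_forward.py", "--alpha", "1",
         "--input_text", text, "griffinlim"],
    ]


def run_pipeline(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, execute it in order."""
    for command in commands:
        print(" ".join(shlex.quote(part) for part in command))
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(command, check=True)


commands = pipeline_commands("/path/to/ljspeech",
                             "this is whatever you want it to be")
run_pipeline(commands)  # dry run: only prints the commands
```

Call `run_pipeline(commands, dry_run=False)` from the repository root to actually execute the steps.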

- https://github.com/keithito/tacotron

- https://github.com/fatchord/WaveRNN

- https://github.com/xcmyz/LightSpeech

- Christian Schäfer, github: cschaefer26

See LICENSE for details.