Code for "Adversarial Camouflage: Hiding Physical-World Attacks with Natural Styles" (CVPR2020)

[Paper link](https://arxiv.org/abs/2003.08757)

## Installation

- cuda==9.0.176

- cudnn==7.0.5

We highly recommend using conda.

conda create -n advcam_env python=3.6

source activate advcam_env

After activating the virtual environment:

git clone https://github.com/RjDuan/AdvCam-Hide-Adv-with-Natural-Styles

cd AdvCam-Hide-Adv-with-Natural-Styles

pip install --user --requirement requirements.txt

Download VGG19.npy and place it under the folder /vgg19.

Note: The code uses TensorFlow v1, which was incompatible with Python versions later than 3.6 in our tests.
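The two requirements above (a Python version no later than 3.6, and the VGG19 weights in place) can be checked before launching a run. The following is a minimal sketch, not part of the repository: the function name `check_environment` is hypothetical, and it assumes that `/vgg19` refers to a `vgg19` folder at the repository root.

```python
import os
import sys


def check_environment(repo_root=".", version=None):
    """Return a list of human-readable problems; an empty list means OK."""
    version = tuple(version or sys.version_info[:2])
    problems = []
    # The README reports TensorFlow v1 failing on Python newer than 3.6.
    if version > (3, 6):
        problems.append("Python %d.%d is newer than 3.6" % version)
    # Assumption: the weights live at <repo_root>/vgg19/VGG19.npy.
    weights = os.path.join(repo_root, "vgg19", "VGG19.npy")
    if not os.path.isfile(weights):
        problems.append("missing weights file: " + weights)
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

Running the file from the repository root prints one warning per detected problem and nothing if both checks pass.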

To run the provided example:

sh run.sh

The data directory is organized as follows:

    |--physical-attack-data
    |   |--content
    |   |   |--stop-sign
    |   |--style
    |   |   |--stop-sign
    |   |--content-mask
    |   |--style-mask
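Because each image is matched to its segmentation by file name by default, a missing or misnamed mask is an easy mistake to make. The sketch below lists images that have no same-named mask. The helper `find_unpaired_images` is hypothetical, and the example paths are assumptions about where a given image and its mask are stored; adjust them to your own layout.

```python
import os


def find_unpaired_images(image_dir, mask_dir):
    """Return sorted file names in image_dir with no same-named file in mask_dir."""
    images = {n for n in os.listdir(image_dir)
              if os.path.isfile(os.path.join(image_dir, n))}
    masks = {n for n in os.listdir(mask_dir)
             if os.path.isfile(os.path.join(mask_dir, n))}
    return sorted(images - masks)


if __name__ == "__main__":
    # Hypothetical paths; adjust them to wherever your images and masks live.
    image_dir = os.path.join("physical-attack-data", "content", "stop-sign")
    mask_dir = os.path.join("physical-attack-data", "content-mask")
    if os.path.isdir(image_dir) and os.path.isdir(mask_dir):
        for name in find_unpaired_images(image_dir, mask_dir):
            print("no mask found for", name)
```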

Note: We set the number of iterations to 4000 to ensure a successful adversarial example, but adversarial images with better visual quality can often be found at earlier iterations.

- Path to images

- Put the target image, the style image, and their segmentations in folders. By default, each target/style image and its segmentation share the same file name.

- Modify run.sh and advcam_main.py if you change the path to images.

- We define the segmentation as follows; you can change this in utils.py.

| Segmentation type | RGB value |
|---|---|
| UnAttack | <(128,128,128) |
| Attack | >(128,128,128) |
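As an illustration of the table above, the sketch below turns an RGB mask into a boolean attack region with NumPy. It is not the code in utils.py, which may differ. It assumes that a pixel belongs to the attack region only when all three channels exceed 128, and it treats pixels at exactly 128 (which the table leaves undefined) as UnAttack. The function name `attack_region` is hypothetical.

```python
import numpy as np


def attack_region(mask_rgb, threshold=128):
    """Return a boolean H x W array that is True where a pixel may be attacked."""
    mask_rgb = np.asarray(mask_rgb)
    # Assumption: "Attack" means every channel is strictly above the threshold.
    return np.all(mask_rgb > threshold, axis=-1)


if __name__ == "__main__":
    # 2 x 2 toy mask: black, white, mid-gray, and a mixed-channel pixel.
    mask = np.array([[[0, 0, 0], [255, 255, 255]],
                     [[128, 128, 128], [200, 200, 90]]], dtype=np.uint8)
    # Only the white pixel is marked as attackable under the assumptions above.
    print(attack_region(mask))
```

A mask loaded from disk with any image library can be passed in the same way, as long as it is an H x W x 3 array.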

- Parameters

- Parameters can be specified in either run.sh or advcam_main.py.

- Run the following script

sh run.sh

A few motivating examples of how AdvCam can be used.

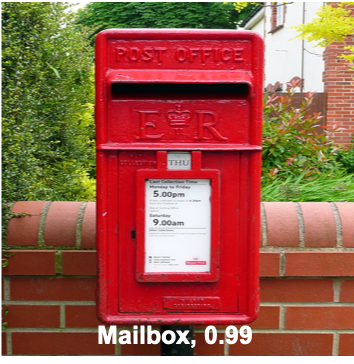

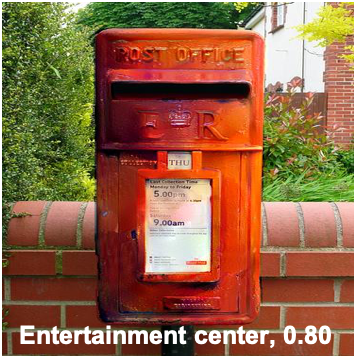

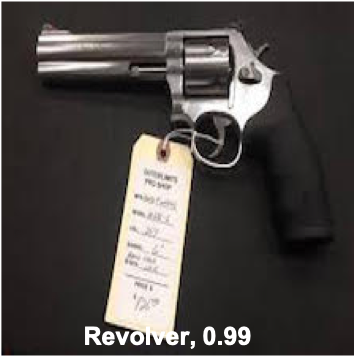

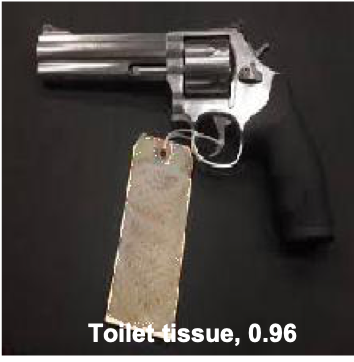

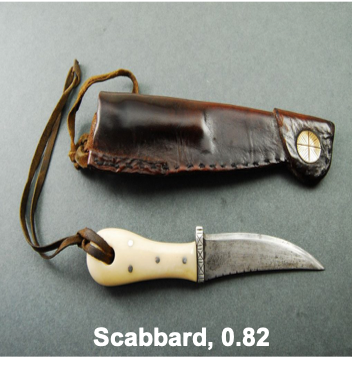

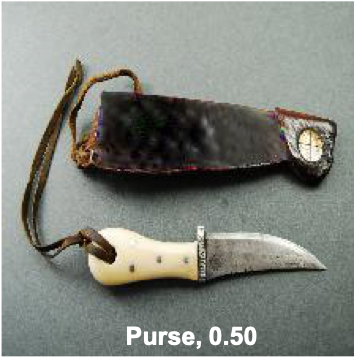

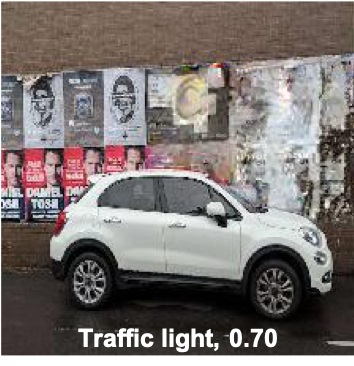

Three adversarial images generated by AdvCam with natural adversarial perturbation.

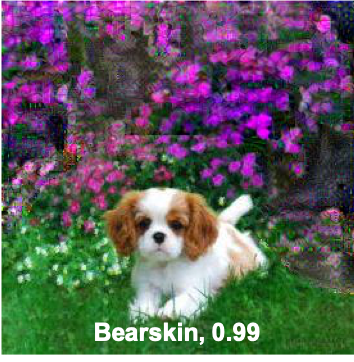

Or use AdvCam to hide your image from Google Image Search

The test image can be downloaded here.


More generation and test details can be found in the AdvCam video.

- About the dataset.

The data was collected from ImageNet and open-source image hubs, and some images were captured by ourselves. The data used in our paper can be downloaded here. We provide both the images and their masks (segmentations).

- We use Yang's code for the style transfer part.

- We use the VGG19 implementation by Chris.

    @inproceedings{duan2020adversarial,
      title={Adversarial Camouflage: Hiding Physical-World Attacks with Natural Styles},
      author={Duan, Ranjie and Ma, Xingjun and Wang, Yisen and Bailey, James and Qin, A Kai and Yang, Yun},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={1000--1008},
      year={2020}
    }