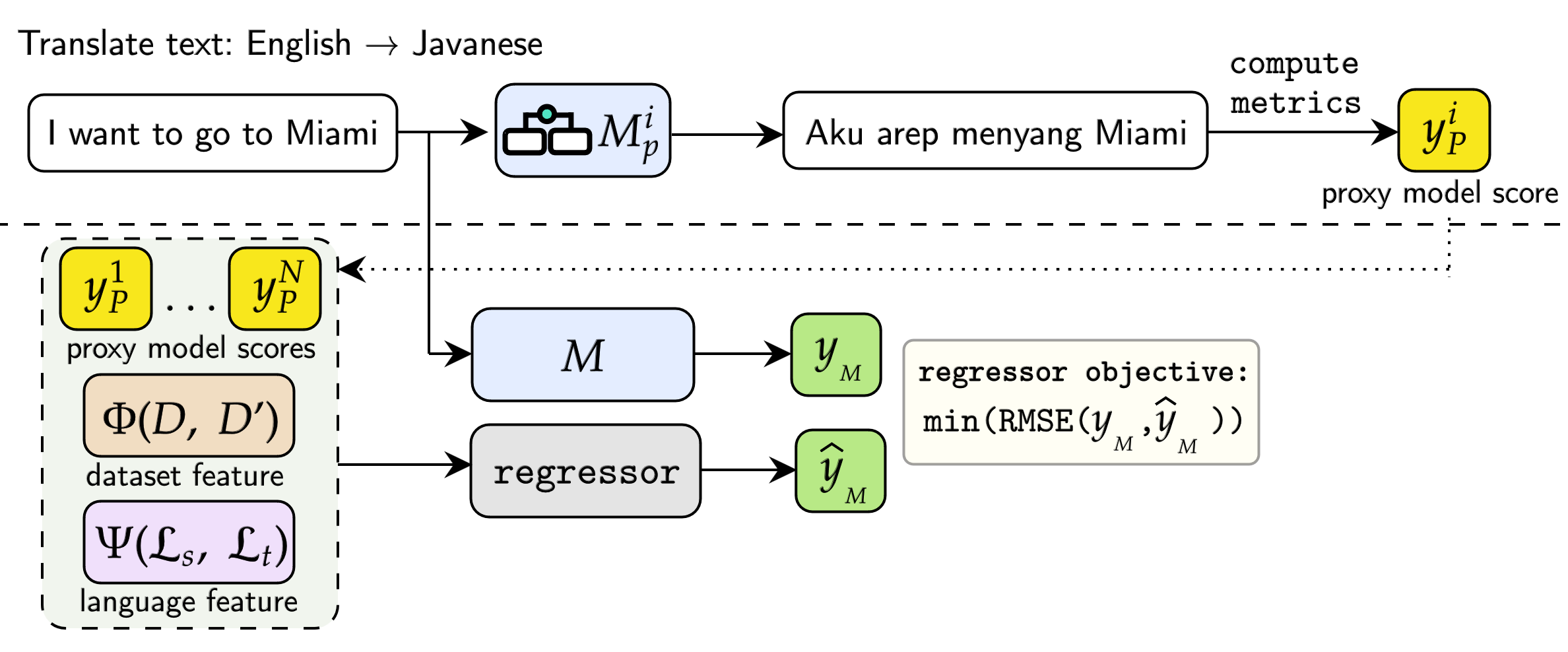

Performance prediction is a method to estimate the performance of Language Models (LMs) on various Natural Language Processing (NLP) tasks, mitigating the computational costs associated with model capacity and the data needed for fine-tuning. Our paper introduces ProxyLM, a scalable framework for predicting LM performance on multilingual tasks using proxy models. These proxy models act as surrogates, approximating the performance of the LM of interest. By leveraging proxy models, ProxyLM significantly reduces the computational overhead of task evaluations, achieving up to a 37.08x speedup over traditional methods, even with our smallest proxy models. Additionally, our methodology adapts to languages that were unseen during the LMs' pre-training, outperforming the state of the art by 1.89x as measured by root-mean-square error (RMSE). This framework streamlines model selection, enabling efficient deployment and iterative LM enhancements without extensive computational resources.
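For reference, RMSE uses its standard definition. For $n$ predicted performance values, $y_i$ is the observed score of the LM of interest and $\hat{y}_i$ is the score the regressor predicts. Lower values mean more accurate predictions:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}
$$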

For more information, check out our full paper.

- Environment

- Setup Instruction

- Dataset Manual Download Links

- LMs Manual Download Links

- Example LM Finetuning Usages

- Example Regressor Usages

- Citation

## Environment

Python 3.8 or higher is required. The dependencies are listed in `requirements.txt`.

## Setup Instruction

- Run `setup.sh`. The script automatically installs the required dependencies and downloads the selected models and our curated dataset.
  - If a model or the dataset cannot be downloaded, please refer to the sections Dataset Manual Download Links and LMs Manual Download Links.

## Dataset Manual Download Links

- Download our curated dataset for LM fine-tuning. You can also obtain the data from the original papers of the MT560 and NusaTranslation datasets, but we have compiled our version so that it runs smoothly within our pipeline.

- Unzip the dataset by running `tar -xzvf dataset.tar.gz dataset` and put the `dataset` folder in the `experiments` folder.
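The unzip-and-move step can also be done in one command by extracting straight into `experiments` with `tar -C`. The sketch below wraps that in a hypothetical `install_dataset` helper, which is not part of the repository. Like the command above, it assumes the archive contains a top-level `dataset` directory.

```bash
#!/usr/bin/env bash
# Sketch: unpack the curated dataset directly into the experiments folder.
# install_dataset is a hypothetical helper; it assumes dataset.tar.gz holds a
# top-level "dataset" directory, as the manual command above implies.
set -euo pipefail

install_dataset() {
  local archive="${1:-dataset.tar.gz}"
  local experiments_dir="${2:-experiments}"
  mkdir -p "$experiments_dir"
  # -C switches into experiments_dir before extracting the "dataset" member.
  # This has the same effect as extracting first and then moving the folder.
  tar -xzvf "$archive" -C "$experiments_dir" dataset
}
```

For example, running `install_dataset dataset.tar.gz experiments` from the repository root should leave the data in `experiments/dataset`.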

## LMs Manual Download Links

If any of the download links have expired or become invalid, please use the following links to download the models manually.

## Example LM Finetuning Usages

- Start training/fine-tuning tasks by running:

  ```bash
  python -m src.lm_finetune.<lm_name>.main --src_lang ${src_lang} --tgt_lang ${tgt_lang} --finetune 1 --dataset ${dataset} --size ${size}
  ```

- Start generation tasks by running:

  ```bash
  python -m src.lm_finetune.<lm_name>.main --src_lang ${src_lang} --tgt_lang ${tgt_lang} --finetune 0
  ```

- Replace `<lm_name>` with the name of the LM, such as `m2m100`, `nllb`, `small100`, or `transformer`.

- All results are saved in the `experiments` folder.
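The training and generation commands can be chained for a single language pair. The wrapper below is a hypothetical sketch and is not part of the repository. Every default value (`ind`, `eng`, `mt560`, `1000`) is a placeholder, and the `DRY_RUN` switch is illustrative. Check the code for the language codes, dataset identifiers, and `--size` values it actually accepts. By default the script only prints the two commands.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the two commands above: fine-tune one LM on one
# language pair, then run generation. All defaults below are placeholders.
set -euo pipefail

lm_name="${LM_NAME:-m2m100}"   # m2m100, nllb, small100, or transformer
src_lang="${SRC_LANG:-ind}"    # hypothetical source language code
tgt_lang="${TGT_LANG:-eng}"    # hypothetical target language code
dataset="${DATASET:-mt560}"    # hypothetical dataset identifier
size="${SIZE:-1000}"           # hypothetical value for --size
dry_run="${DRY_RUN:-1}"        # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$dry_run" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

module="src.lm_finetune.${lm_name}.main"

# Step 1: training/fine-tuning (--finetune 1).
run python -m "$module" --src_lang "$src_lang" --tgt_lang "$tgt_lang" \
  --finetune 1 --dataset "$dataset" --size "$size"

# Step 2: generation (--finetune 0).
run python -m "$module" --src_lang "$src_lang" --tgt_lang "$tgt_lang" --finetune 0
```

If the script were saved under a hypothetical name such as `finetune_pair.sh`, `DRY_RUN=0 LM_NAME=nllb bash finetune_pair.sh` would run both steps for NLLB instead of printing them.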

## Example Regressor Usages

- Running a Random Experiment

  ```bash
  python -m src.proxy_regressor.main -em random -r ${regressor_name} -rj path/to/regressor_config.json -d ${dataset_name} -m ${lm_name}
  ```

- Running a Leave-One-Language-Out (LOLO) Experiment for All Languages

  ```bash
  python -m src.proxy_regressor.main -em lolo -r ${regressor_name} -rj path/to/regressor_config.json -d ${dataset_name} -m ${lm_name} -l all
  ```

- Running a LOLO Experiment for a Specific Language

  ```bash
  python -m src.proxy_regressor.main -em lolo -r ${regressor_name} -rj path/to/regressor_config.json -d ${dataset_name} -m ${lm_name} -l ind
  ```

- Running a Seen-Unseen Experiment

  ```bash
  python -m src.proxy_regressor.main -em seen_unseen -r ${regressor_name} -rj path/to/regressor_config.json -d ${dataset_name} -m ${lm_name}
  ```

- Running a Cross-Dataset Experiment

  ```bash
  python -m src.proxy_regressor.main -em cross_dataset -r ${regressor_name} -rj path/to/regressor_config.json -m ${lm_name}
  ```

- Running an Incremental Experiment

  ```bash
  python -m src.proxy_regressor.main -em incremental -r ${regressor_name} -rj path/to/regressor_config.json -d ${dataset_name} -m ${lm_name}
  ```

- Replace `${regressor_name}` with `xgb`, `lgbm`, `poly`, or `mf`.

- Replace `${lm_name}` with the name of the estimated LM, such as `m2m100` or `nllb`.

- Replace `${dataset_name}` with `mt560` or `nusa`.

- Note that each command runs all feature combinations.
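Each experiment mode accepts four regressors, so a small wrapper can sweep all of them. The sketch below is hypothetical: the variable names, defaults, and `DRY_RUN` switch are not part of the repository. It reuses only the flags shown in the commands above. Following those examples, it omits `-d` for `cross_dataset` and adds `-l all` for `lolo`. By default it prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Hypothetical sweep over the four regressors for one experiment mode.
# Only flags from the commands above are used; names and defaults are illustrative.
set -euo pipefail

mode="${MODE:-random}"                             # random, lolo, seen_unseen, cross_dataset, incremental
config="${CONFIG:-path/to/regressor_config.json}"  # placeholder config path
dataset_name="${DATASET_NAME:-mt560}"              # mt560 or nusa
lm_name="${LM_NAME:-nllb}"                         # estimated LM, e.g. m2m100 or nllb
dry_run="${DRY_RUN:-1}"                            # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$dry_run" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Build and run the command for one regressor, mirroring the examples above:
# cross_dataset takes no -d flag, and lolo gets -l all (every language).
run_regressor() {
  local regressor="$1"
  local -a args=(-em "$mode" -r "$regressor" -rj "$config")
  if [ "$mode" != "cross_dataset" ]; then
    args+=(-d "$dataset_name")
  fi
  args+=(-m "$lm_name")
  if [ "$mode" = "lolo" ]; then
    args+=(-l all)
  fi
  run python -m src.proxy_regressor.main "${args[@]}"
}

for regressor in xgb lgbm poly mf; do
  run_regressor "$regressor"
done
```

For instance, if the script were saved under a hypothetical name such as `sweep.sh`, `MODE=lolo DRY_RUN=0 bash sweep.sh` would run the all-languages LOLO experiment once per regressor.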

## Citation

If you use this code for your research, please cite the following work:

```bibtex
@article{anugraha2024proxylm,
  title={ProxyLM: Predicting Language Model Performance on Multilingual Tasks via Proxy Models},
  author={Anugraha, David and Winata, Genta Indra and Li, Chenyue and Irawan, Patrick Amadeus and Lee, En-Shiun Annie},
  journal={arXiv preprint arXiv:2406.09334},
  year={2024}
}
```

If you have any questions, you can open a GitHub issue or send us an email.