This repository contains a TensorFlow 2.0 implementation of SRNet, a deep learning steganalyzer presented by Mehdi Boroumand et al. SRNet aims to distinguish cover images (without hidden information) from stego images (with hidden information) without relying on any domain knowledge, such as initialising the weights of the first layers with high-pass filters. YeNet, presented by Jian Ye et al., is an example of an architecture that does use this kind of initialisation.
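To illustrate the kind of domain knowledge SRNet avoids, the sketch below applies one fixed high-pass filter to an image. It uses the 5x5 "KV" kernel from the SRM filter family as a single example. Networks such as YeNet initialise their first layer with a bank of SRM-style filters, so treat this as an illustration of the idea, not as YeNet's exact first layer. The names `KV_KERNEL` and `high_pass_residual` are hypothetical and do not come from this repository.

```python
# Illustrative only: the 5x5 "KV" high-pass kernel from the SRM filter family.
# Domain-informed steganalyzers initialise their first layer with filters like
# this one; SRNet instead learns its first-layer filters from scratch.
KV_KERNEL = [
    [-1, 2, -2, 2, -1],
    [2, -6, 8, -6, 2],
    [-2, 8, -12, 8, -2],
    [2, -6, 8, -6, 2],
    [-1, 2, -2, 2, -1],
]
KV_NORMALISER = 12  # the kernel is conventionally scaled by 1/12


def high_pass_residual(image):
    """Valid 2D cross-correlation of a grayscale image (list of pixel rows)
    with the KV kernel, returning the noise residual."""
    k = len(KV_KERNEL)
    height, width = len(image), len(image[0])
    residual = []
    for y in range(height - k + 1):
        row = []
        for x in range(width - k + 1):
            acc = sum(
                KV_KERNEL[i][j] * image[y + i][x + j]
                for i in range(k)
                for j in range(k)
            )
            row.append(acc / KV_NORMALISER)
        residual.append(row)
    return residual
```

Every row and column of the kernel sums to zero, so flat regions and linear gradients produce a zero residual, while a single-pixel +1 change (typical of embedding) still produces a nonzero response. This suppression of image content is exactly the prior that SRNet is designed to learn on its own.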

The SRNet paper reports an accuracy of 89.77%, whereas the code in this repository has only reached about 83%. The gap may be due to randomness in the dataset creation or to differences between the frameworks used to implement the architecture. The Pytorch-implementation-of-SRNet repository contains a PyTorch version of SRNet that, according to its author, reaches an accuracy of 89.43%.

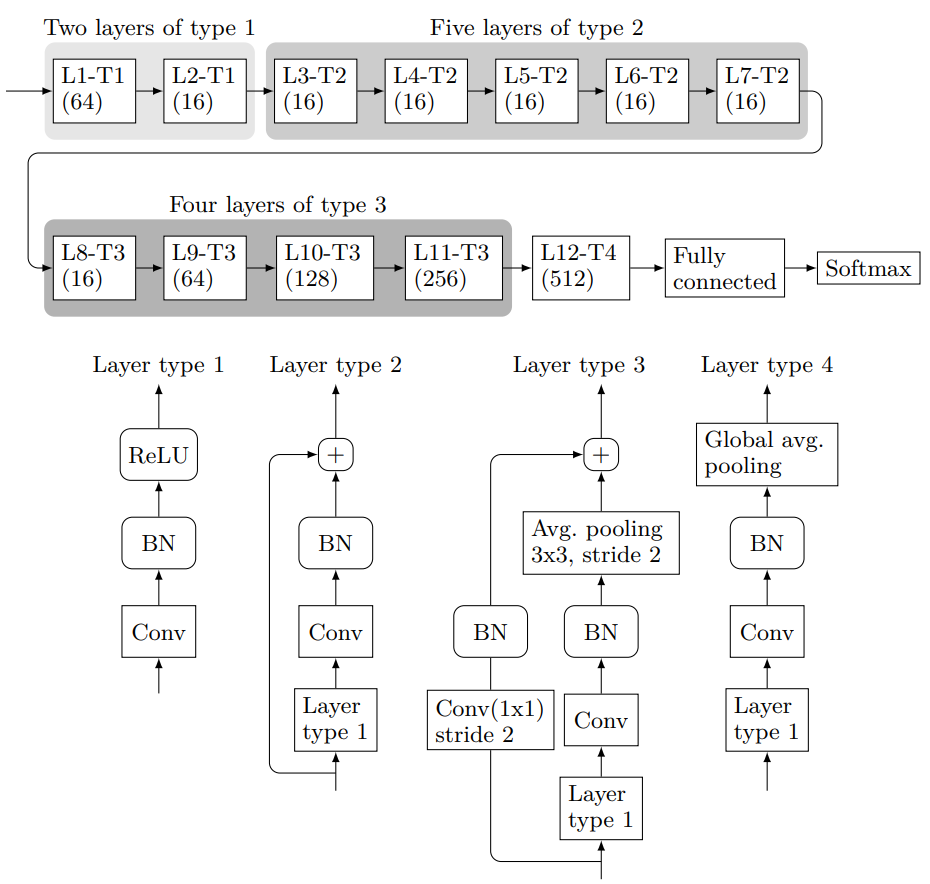

The SRNet architecture can be seen in the following figure.
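As a rough companion to the architecture figure, the sketch below traces how the feature-map shape evolves through SRNet's twelve layers. The layer types (1 to 4) and filter counts reflect our reading of the SRNet paper and are assumptions; check them against `SRNet_model.py` before relying on them. `SRNET_LAYERS` and `trace_shapes` are hypothetical names used only for this illustration.

```python
# Hypothetical shape trace of SRNet. Layer types and widths follow our reading
# of the SRNet paper; verify them against SRNet_model.py.
SRNET_LAYERS = [
    # (layer type, number of filters)
    (1, 64), (1, 16),
    (2, 16), (2, 16), (2, 16), (2, 16), (2, 16),
    (3, 16), (3, 64), (3, 128), (3, 256),
    (4, 512),
]


def trace_shapes(height, width):
    """Return (layer_index, layer_type, output_shape) for each layer.

    Types 1 and 2 keep the spatial size ('same' padding), type 3 halves it
    through stride-2 pooling and a stride-2 shortcut, and type 4 ends with
    global average pooling, leaving a feature vector.
    """
    shapes = []
    h, w = height, width
    for index, (layer_type, filters) in enumerate(SRNET_LAYERS, start=1):
        if layer_type == 3:
            h, w = (h + 1) // 2, (w + 1) // 2  # 'same' padding with stride 2
            shapes.append((index, layer_type, (h, w, filters)))
        elif layer_type == 4:
            shapes.append((index, layer_type, (filters,)))
        else:
            shapes.append((index, layer_type, (h, w, filters)))
    return shapes


for index, layer_type, shape in trace_shapes(256, 256):
    print(f"layer {index:2d} (type {layer_type}): {shape}")
```

For a 256x256 grayscale input, the four type-3 layers reduce the spatial size to 16x16, and the final type-4 layer produces a 512-dimensional vector that a fully connected layer then classifies as cover or stego. Note that no pooling appears before layer 8, which keeps the weak stego signal from being averaged away early.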

The repository contains the following files and folders:

- `dataset/`: This folder is intended to hold the datasets used. It also contains the `SRNet_dataset_creation.ipynb` notebook, which generates a dataset similar to the one described in Mehdi Boroumand et al. once the stego images have been created.
- `trained_models/`: This folder contains the output files created by the `SRNet_training.ipynb` notebook. It also includes the `SRNet-PairedBatches-2` model as an example of these output files.
- `SRNet_model.py`: The SRNet architecture coded in TensorFlow 2.0.
- `SRNet_training.ipynb`: Notebook to train SRNet once the dataset has been created. The files it creates are stored in the `trained_models/` folder.
- `paired_image_generator.py`: A custom image generator that produces paired batches. That is, in a batch of 16 images, 8 are cover images and the remaining 8 are their respective stego versions. This is fundamental for the model to converge correctly.
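One way to build the paired batches described for `paired_image_generator.py` is sketched below. It is a hypothetical, framework-free version that works on file paths only; the actual generator in the repository may load images, shuffle, and label differently. The function name and the label convention (0 = cover, 1 = stego) are assumptions.

```python
import random


def paired_batches(cover_paths, stego_paths, batch_size=16, seed=None):
    """Yield (paths, labels) batches whose first half are cover images and
    whose second half are the matching stego versions, in the same order.

    Hypothetical sketch; labels 0 = cover and 1 = stego are an assumption.
    A final incomplete batch is dropped so every batch stays balanced.
    """
    if batch_size % 2:
        raise ValueError("batch_size must be even")
    if len(cover_paths) != len(stego_paths):
        raise ValueError("every cover image needs exactly one stego version")
    pairs_per_batch = batch_size // 2
    order = list(range(len(cover_paths)))
    random.Random(seed).shuffle(order)  # shuffle whole pairs, never split them
    for start in range(0, len(order) - pairs_per_batch + 1, pairs_per_batch):
        chosen = order[start:start + pairs_per_batch]
        paths = [cover_paths[i] for i in chosen] + [stego_paths[i] for i in chosen]
        labels = [0] * pairs_per_batch + [1] * pairs_per_batch
        yield paths, labels
```

Shuffling indices of pairs rather than individual images guarantees that each stego image always lands in the same batch as its cover, which is the property the repository describes as essential for convergence.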

This section explains how to train a model from scratch using the files in the repository.

- Download the BOSS and BOWS datasets, the two datasets used in Mehdi Boroumand et al.

- Generate the stego version of every image downloaded in the previous step, using the steganographic algorithm implementations available on the Binghamton University website.

- Once the stego versions are generated, you should have four folders: BOSS cover images, BOSS stego images, BOWS cover images and BOWS stego images. Execute the `dataset/SRNet_dataset_creation.ipynb` notebook to generate the dataset that will be used in the training phase.

- Configure the variables of the `SRNet_training.ipynb` notebook and execute it.
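The pairing and splitting involved in the dataset-creation step can be sketched as follows. This is a hypothetical outline, not the logic of `SRNet_dataset_creation.ipynb`: it assumes that a cover and its stego version share the same file name, and, following our reading of Boroumand et al., that BOSS images are split into training, validation and test sets while all BOWS images go to training. Split sizes are left as parameters; take the actual values from the notebook or the paper.

```python
import os
import random


def check_pairs(cover_names, stego_names):
    """Return the sorted file names, raising if any cover lacks a stego twin
    (or vice versa). Assumes cover and stego files share the same name."""
    unpaired = set(cover_names) ^ set(stego_names)
    if unpaired:
        raise ValueError(f"unpaired files, e.g. {sorted(unpaired)[:5]}")
    return sorted(cover_names)


def build_split(boss_dirs, bows_dirs, boss_names, bows_names,
                n_boss_train, n_boss_val, seed=0):
    """Split BOSS pairs into train/val/test and add every BOWS pair to training
    (an assumption about the paper's setup).

    boss_dirs and bows_dirs are (cover_dir, stego_dir) tuples. Split sizes are
    parameters here, not values taken from the repository.
    """
    def to_pairs(names, dirs):
        cover_dir, stego_dir = dirs
        return [(os.path.join(cover_dir, n), os.path.join(stego_dir, n)) for n in names]

    boss = list(boss_names)
    random.Random(seed).shuffle(boss)  # reproducible shuffle of BOSS pairs
    end_val = n_boss_train + n_boss_val
    return {
        "train": to_pairs(boss[:n_boss_train], boss_dirs) + to_pairs(bows_names, bows_dirs),
        "val": to_pairs(boss[n_boss_train:end_val], boss_dirs),
        "test": to_pairs(boss[end_val:], boss_dirs),
    }
```

A typical call would first validate each source, e.g. `check_pairs(os.listdir(boss_cover_dir), os.listdir(boss_stego_dir))`, where the folder variables are hypothetical. Splitting by pair rather than by image keeps a cover and its stego version in the same subset, which avoids leaking test content into training.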

David González González