We use Clojure to start the Kafka clusters. See the clojure folder.

You need to install the Clojure CLI.

make kafka-cluster

This command changes into the clojure directory, launches the Clojure CLI, downloads all the dependencies, and starts a Kafka cluster with the "sf_crime" topic.

Unzip the data with

tar -xjvf police-department-calls-for-service.tar.bz2

conda create -n venv

conda activate venv

conda install -c conda-forge --file requirement.txt

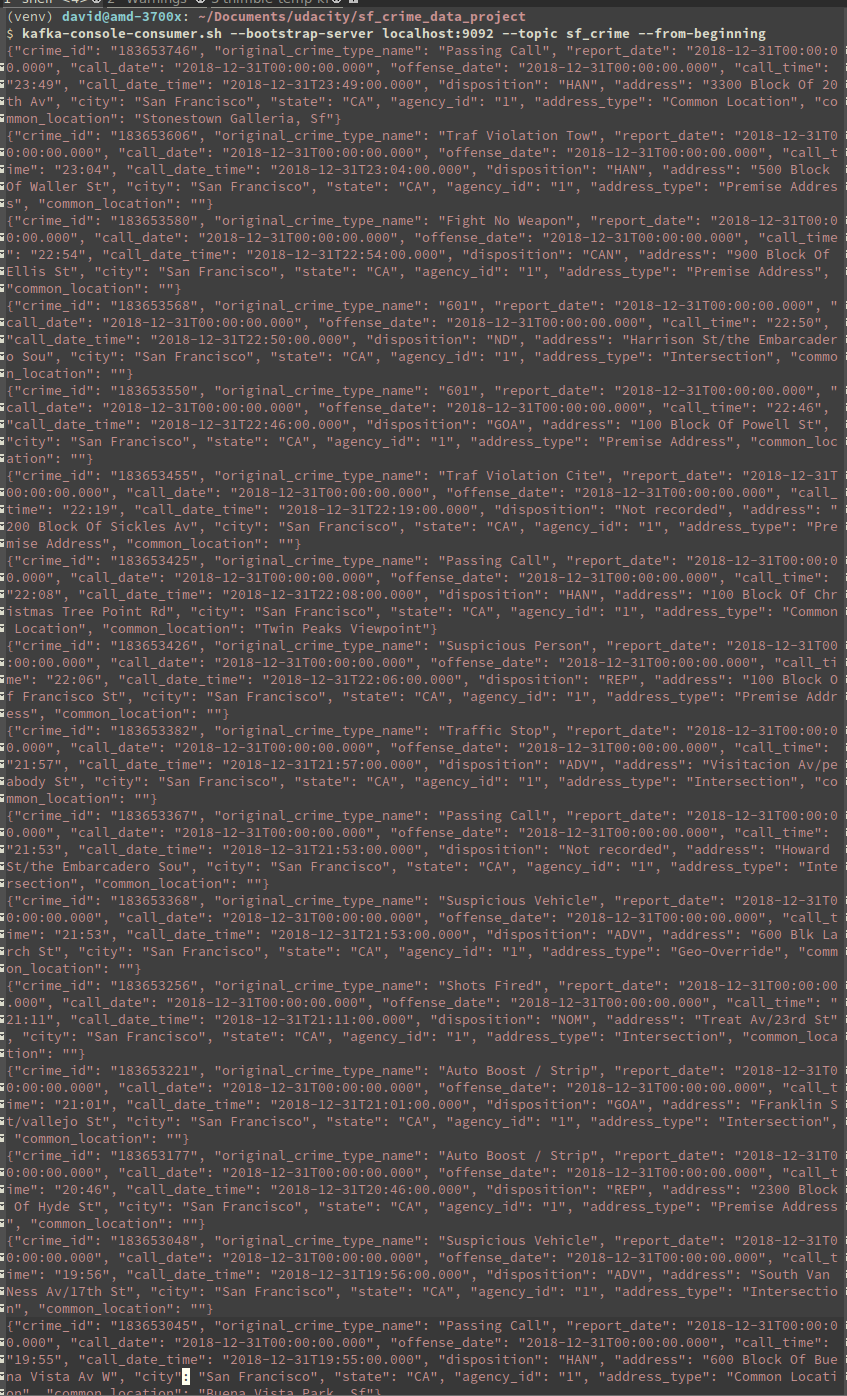

make kafka-server

This is the default Kafka console consumer:

make kafka-consumer # console

make kafka-consumer-py # python

make data-stream

Or run it manually:

spark-submit --verbose --packages org.apache.spark:spark-sql-kafka-0-10_2.11:2.4.3 data_stream.py
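The `--packages` coordinate in the manual `spark-submit` command above encodes both the Scala binary version (2.11) and the Spark version (2.4.3). Below is a minimal sketch, assuming you want to assemble the same command from Python. The helper names `kafka_package` and `spark_submit_args` are hypothetical and not part of this repo.

```python
import shlex


def kafka_package(scala_version="2.11", spark_version="2.4.3"):
    """Maven coordinate of the Spark SQL Kafka connector for the given versions."""
    return f"org.apache.spark:spark-sql-kafka-0-10_{scala_version}:{spark_version}"


def spark_submit_args(script="data_stream.py", **versions):
    """Argument list equivalent to the manual spark-submit command."""
    return ["spark-submit", "--verbose", "--packages", kafka_package(**versions), script]


if __name__ == "__main__":
    # Print the command; pass the list to subprocess.run(...) to actually execute it.
    print(shlex.join(spark_submit_args()))
```

If you run a different Spark build, the connector versions generally need to match it, for example `spark_submit_args(scala_version="2.12", spark_version="3.5.1")`.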

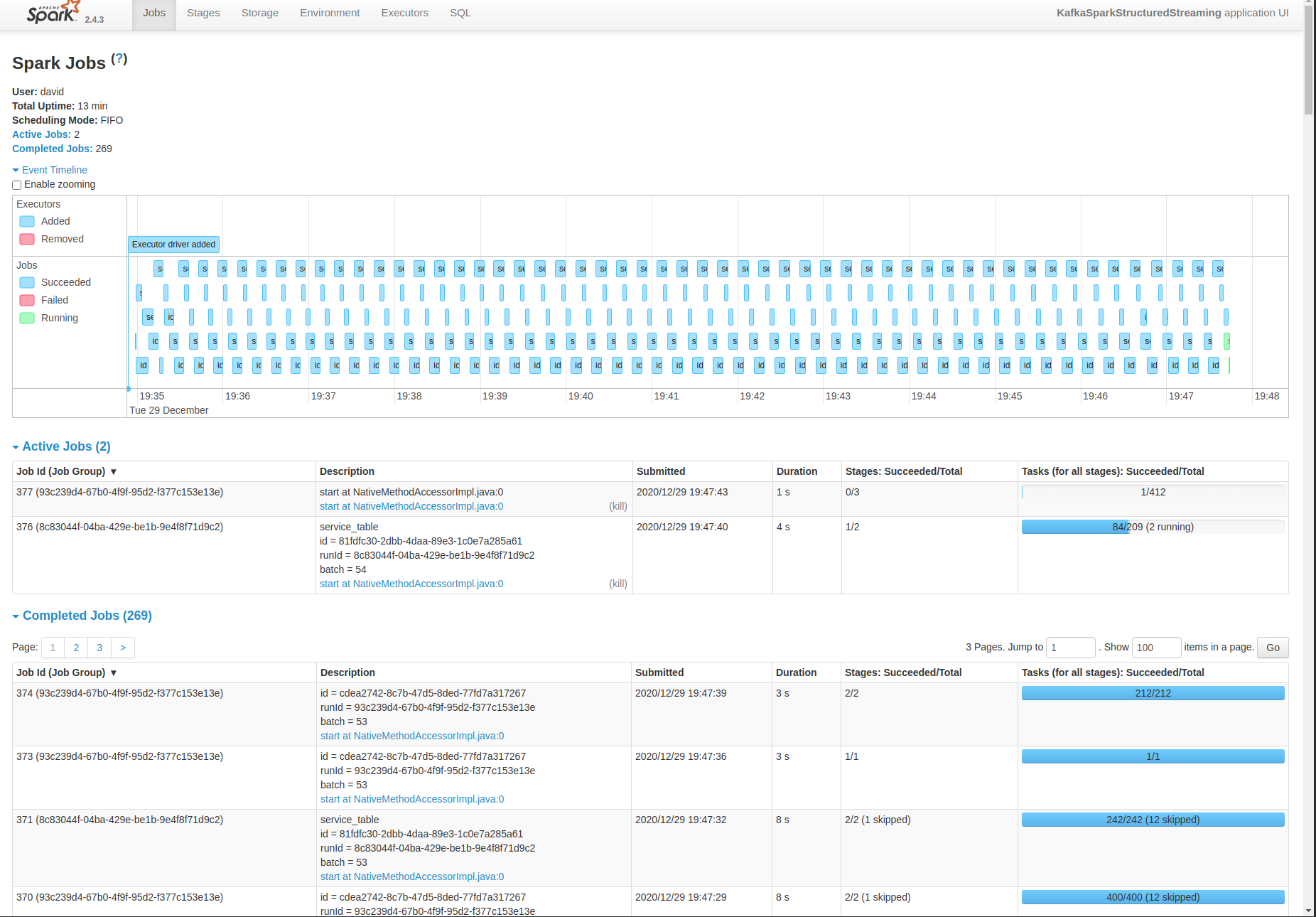

I increased the executor memory and the number of executor cores to increase throughput, at the cost of slightly higher latency. I looked at the rate of data arriving into our Spark streaming application, and I also tried to use `processedRowsPerSecond` (the rate at which Spark is processing data) to control the number of records being processed.
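As a rough illustration of reading these rates, the sketch below pulls them out of a Structured Streaming progress report. It assumes `query` is the `StreamingQuery` returned by `writeStream.start()` in `data_stream.py` (the variable name is an assumption) and that the report is the dict PySpark exposes as `query.lastProgress`. `inputRowsPerSecond` is the progress field for the arrival rate; the helper name `summarize_rates` and the sample numbers are made up for illustration.

```python
def summarize_rates(progress):
    """Return (input_rate, processed_rate, keeping_up) from a progress dict."""
    if not progress:  # lastProgress is None before the first batch completes
        return None
    input_rate = float(progress.get("inputRowsPerSecond", 0.0))
    processed_rate = float(progress.get("processedRowsPerSecond", 0.0))
    # Spark keeps up with the source when it processes rows at least as fast as they arrive.
    return input_rate, processed_rate, processed_rate >= input_rate


# Usage against a running query (hypothetical variable name):
#   print(summarize_rates(query.lastProgress))

# Made-up sample values, only to show the shape of the input.
sample = {"inputRowsPerSecond": 120.0, "processedRowsPerSecond": 240.0}
print(summarize_rates(sample))
```

When the processed rate stays below the input rate over several batches, the application is falling behind the topic.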

I tuned `spark.default.parallelism` and `spark.streaming.kafka.maxRatePerPartition` to increase throughput. There is a trade-off between the number of processes and the communication overhead, though. I set the parallelism to the number of logical cores on my machine and the max rate per partition to 10 to balance the load across the Kafka partitions. This increased my processing rate by a factor of 2.
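The settings described above could be applied roughly as follows. This is a sketch under assumptions, not the code from `data_stream.py`: the helper names and the app name `sf-crime-stream` are hypothetical, and `os.cpu_count()` stands in for "the number of logical cores on my machine".

```python
import os


def tuning_conf(max_rate_per_partition=10):
    """Spark properties matching the choices described in the text."""
    logical_cores = os.cpu_count() or 1  # cpu_count() can return None
    return {
        "spark.default.parallelism": str(logical_cores),
        "spark.streaming.kafka.maxRatePerPartition": str(max_rate_per_partition),
    }


def build_session(app_name="sf-crime-stream"):  # hypothetical app name
    """Create a SparkSession with the tuning properties applied."""
    from pyspark.sql import SparkSession  # imported lazily so tuning_conf() works without pyspark

    builder = SparkSession.builder.appName(app_name)
    for key, value in tuning_conf().items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


if __name__ == "__main__":
    for key, value in tuning_conf().items():
        print(f"{key}={value}")
```

One caveat: Spark documents `spark.streaming.kafka.maxRatePerPartition` for the DStream-based Kafka direct stream API. If `data_stream.py` uses Structured Streaming (an assumption here), the Kafka source option `maxOffsetsPerTrigger` is the documented way to cap how much is read per trigger.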