The official implementation of the ICCV 2023 paper Robust Object Modeling for Visual Tracking

[CVF Open Access] [Poster] [Video]

[Models and Raw Results] (Google Drive) [Models and Raw Results] (Baidu Netdisk: romt)

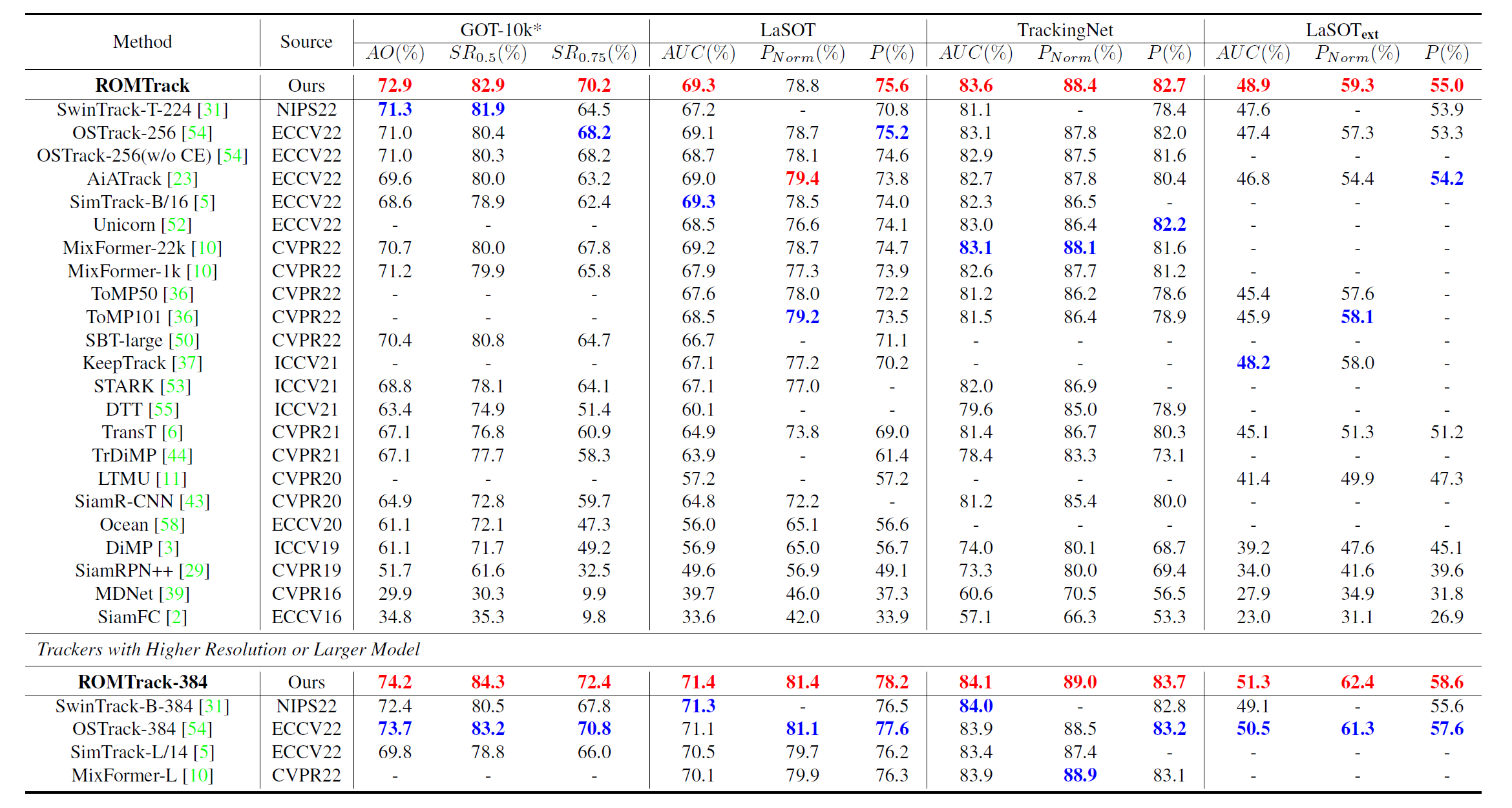

| Variant | ROMTrack | ROMTrack-384 |

|---|---|---|

| Model Setting | ViT-Base | ViT-Base |

| Pretrained Method | MAE | MAE |

| Pretrained Weight | MAE checkpoint | MAE checkpoint |

| Template / Search | 128×128 / 256×256 | 192×192 / 384×384 |

| GOT-10k (AO / SR 0.5 / SR 0.75) |

72.9 / 82.9 / 70.2 | 74.2 / 84.3 / 72.4 |

| LaSOT (AUC / Norm P / P) |

69.3 / 78.8 / 75.6 | 71.4 / 81.4 / 78.2 |

| TrackingNet (AUC / Norm P / P) |

83.6 / 88.4 / 82.7 | 84.1 / 89.0 / 83.7 |

| LaSOT_ext (AUC / Norm P / P) |

48.9 / 59.3 / 55.0 | 51.3 / 62.4 / 58.6 |

| TNL2K (AUC / Norm P / P) |

56.9 / 73.7 / 58.1 | 58.0 / 75.0 / 59.6 |

| NFS / OTB / UAV (AUC) |

68.0 / 71.4 / 69.7 | 68.8 / 70.9 / 70.5 |

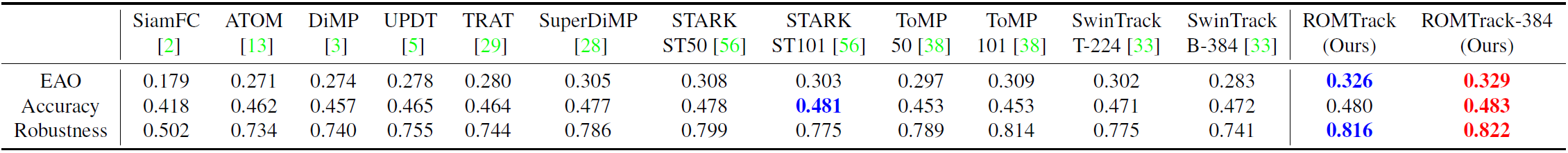

| VOT2020 BBox (EAO / A / R) |

0.326 / 0.480 / 0.816 | 0.329 / 0.483 / 0.822 |

| GPU FPS / MACs(G) / Params(M) | 116 / 34.5 / 92.1 | 67 / 77.7 / 92.1 |

| CPU FPS | 9.9 | 3.0 |
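For readers unfamiliar with the GOT-10k columns, the following is a minimal sketch of how average overlap (AO) and success rate (SR) are commonly defined: AO is the mean IoU between predicted and ground-truth boxes, and SR at a threshold is the fraction of frames whose IoU exceeds it. The function names and the toy boxes are illustrative assumptions, not part of this repository, and the official GOT-10k evaluation server may differ in details such as skipping the first frame.

```python
from typing import Sequence, Tuple

# Boxes are (x, y, w, h): top-left corner plus width and height.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def average_overlap(ious: Sequence[float]) -> float:
    """AO: mean IoU over all evaluated frames."""
    return sum(ious) / len(ious)


def success_rate(ious: Sequence[float], threshold: float) -> float:
    """SR: fraction of frames whose IoU is strictly above the threshold."""
    return sum(1 for v in ious if v > threshold) / len(ious)


# Toy example (hypothetical boxes, not real tracker output).
ground_truth = [(0, 0, 10, 10)] * 4
predictions = [(0, 0, 10, 10), (5, 0, 10, 10), (0, 0, 10, 5), (20, 20, 5, 5)]
frame_ious = [iou(p, g) for p, g in zip(predictions, ground_truth)]

ao = average_overlap(frame_ious)
sr50 = success_rate(frame_ious, 0.5)
sr75 = success_rate(frame_ious, 0.75)
print(f"AO={ao:.3f} SR0.5={sr50:.3f} SR0.75={sr75:.3f}")
```

The table reports these quantities as percentages, so a value of 0.729 in this sketch would correspond to an AO of 72.9.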

| Variant | ROMTrack-Tiny-256 | ROMTrack-Small-256 |
|---|---|---|
| Model Setting | ViT-Tiny | ViT-Small |
| Pretrained Method | Supervised on ImageNet-22k | Supervised on ImageNet-22k |
| Pretrained Weight | Timm checkpoint | Timm checkpoint |
| Template / Search | 128×128 / 256×256 | 128×128 / 256×256 |
| LaSOT (AUC / Norm P / P) | 59.3 / 68.8 / 60.4 | 62.3 / 72.3 / 65.3 |
| TrackingNet (AUC / Norm P / P) | 75.8 / 81.7 / 71.5 | 78.5 / 84.3 / 75.3 |
| LaSOT_ext (AUC / Norm P / P) | 40.4 / 49.7 / 43.1 | 43.2 / 52.9 / 47.1 |
| TNL2K (AUC / Norm P / P) | 48.6 / 64.4 / 45.5 | 52.0 / 68.7 / 50.5 |
| NFS / OTB / UAV (AUC) | 62.5 / 68.5 / 62.9 | 65.3 / 68.9 / 66.4 |
| VOT2020 BBox (EAO / A / R) | 0.265 / 0.459 / 0.704 | 0.297 / 0.477 / 0.764 |
| GPU FPS / MACs(G) / Params(M) | 466 / 2.7 / 8.0 | 236 / 9.3 / 25.4 |
| CPU FPS | 36.6 | 17.2 |

| Variant | ROMTrack-Large-384 |
|---|---|
| Model Setting | ViT-Large |
| Pretrained Method | MAE |
| Pretrained Weight | MAE checkpoint |
| Template / Search | 192×192 / 384×384 |
| LaSOT (AUC / Norm P / P) | 72.0 / 81.7 / 79.1 |
| TrackingNet (AUC / Norm P / P) | 85.2 / 89.8 / 85.4 |
| LaSOT_ext (AUC / Norm P / P) | 52.9 / 64.3 / 60.9 |
| TNL2K (AUC / Norm P / P) | 60.4 / 77.7 / 63.9 |
| NFS / OTB / UAV (AUC) | 69.2 / 71.0 / 71.5 |
| VOT2020 BBox (EAO / A / R) | 0.338 / 0.492 / 0.820 |
| GPU FPS / MACs(G) / Params(M) | 21 / 266.5 / 311.3 |
| CPU FPS | 1.1 |
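To relate the Template / Search rows in the tables above to transformer input sizes, here is a small sketch that converts each image resolution into a patch-token count. It assumes a ViT patch size of 16, which is the usual setting for MAE and Timm ViT checkpoints but is not stated in this README; `PATCH_SIZE`, `num_patches`, and `token_counts` are illustrative names only.

```python
from typing import Dict, Tuple

PATCH_SIZE = 16  # assumption: standard ViT patch size, not confirmed by this README

# (template side, search side) in pixels, copied from the tables above.
VARIANTS: Dict[str, Tuple[int, int]] = {
    "ROMTrack": (128, 256),
    "ROMTrack-384": (192, 384),
    "ROMTrack-Tiny-256": (128, 256),
    "ROMTrack-Small-256": (128, 256),
    "ROMTrack-Large-384": (192, 384),
}


def num_patches(side: int, patch: int = PATCH_SIZE) -> int:
    """Number of non-overlapping patches in a square image of the given side."""
    if side % patch != 0:
        raise ValueError(f"side {side} is not divisible by patch size {patch}")
    return (side // patch) ** 2


def token_counts(name: str) -> Tuple[int, int]:
    """Return (template tokens, search tokens) for a variant name."""
    template, search = VARIANTS[name]
    return num_patches(template), num_patches(search)


for name in VARIANTS:
    t, s = token_counts(name)
    print(f"{name}: {t} template tokens, {s} search tokens")
```

Under this assumption, moving from a 256×256 to a 384×384 search region multiplies the token count by 2.25, which is close to the ratio of the reported MACs for ROMTrack-384 and ROMTrack (77.7 G vs. 34.5 G).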

[May 2, 2024]

- We release the extended model ROMTrack-Large-384 for Performance-Oriented Visual Tracking!

- Models and Raw Results for all versions of ROMTrack are available on Google Drive or Baidu Netdisk.

- Code and script for VOT2020 evaluation are available now.

[April 18, 2024]

- We release the extended models ROMTrack-Tiny-256 and ROMTrack-Small-256 for Efficient Visual Tracking!

- We provide detailed information for all versions of ROMTrack, see Base Models and Extended Models above.

[April 17, 2024]

- The repository upgrade is complete! Training and evaluation with PyTorch 2.2.0 and Python 3.8 are more efficient.

- Training and Evaluation Devices for the upgraded code: RTX A6000, Intel(R) Xeon(R) Silver 4314 CPU @ 2.40GHz, Ubuntu 20.04.1 LTS.

[March 25, 2024]

- We upgrade the implementation to Python 3.8 and PyTorch 2.2.0!

- We update results on TNL2K!

- We update FPS metrics on RTX A6000 GPU for reference.

[March 21, 2024]

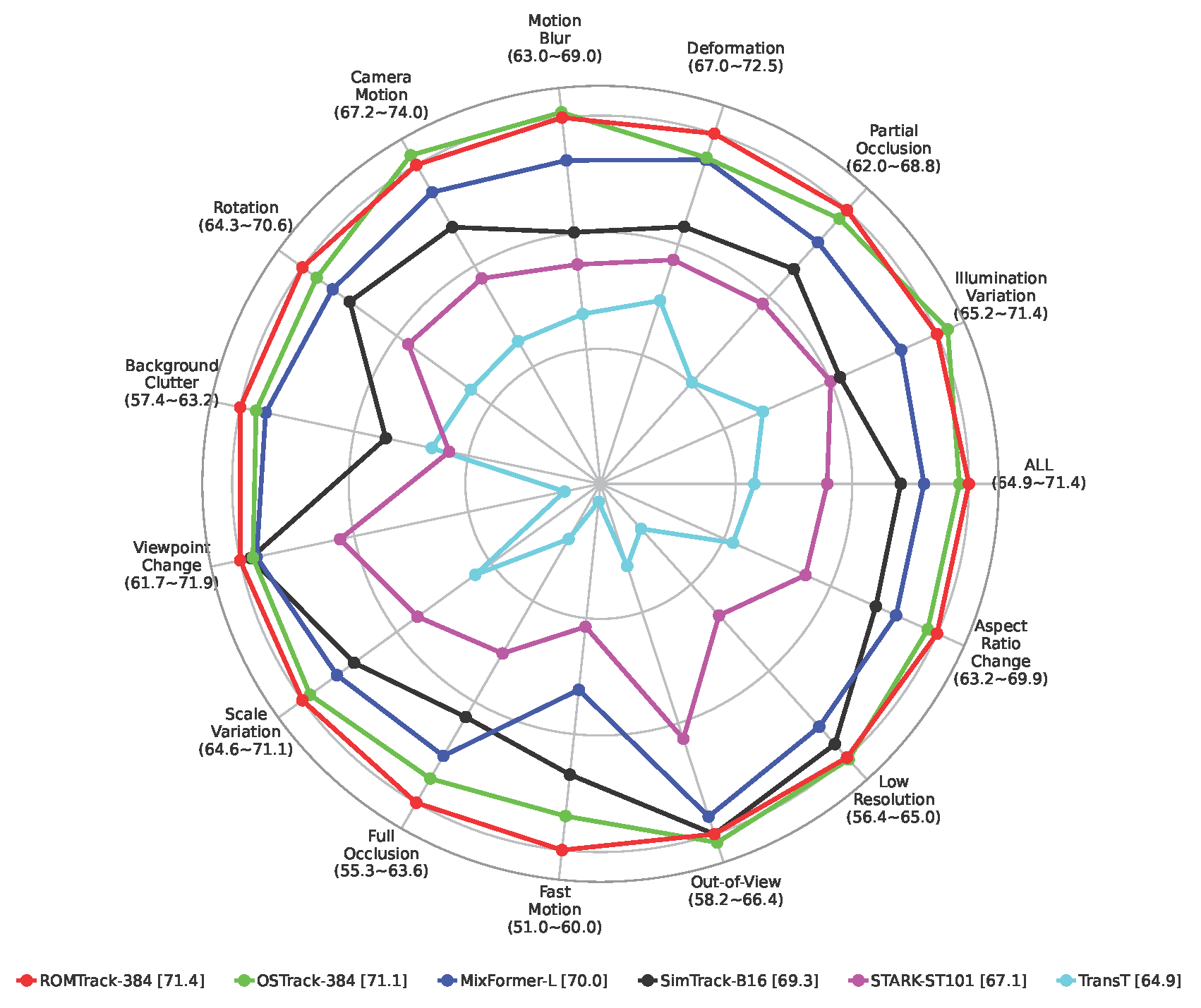

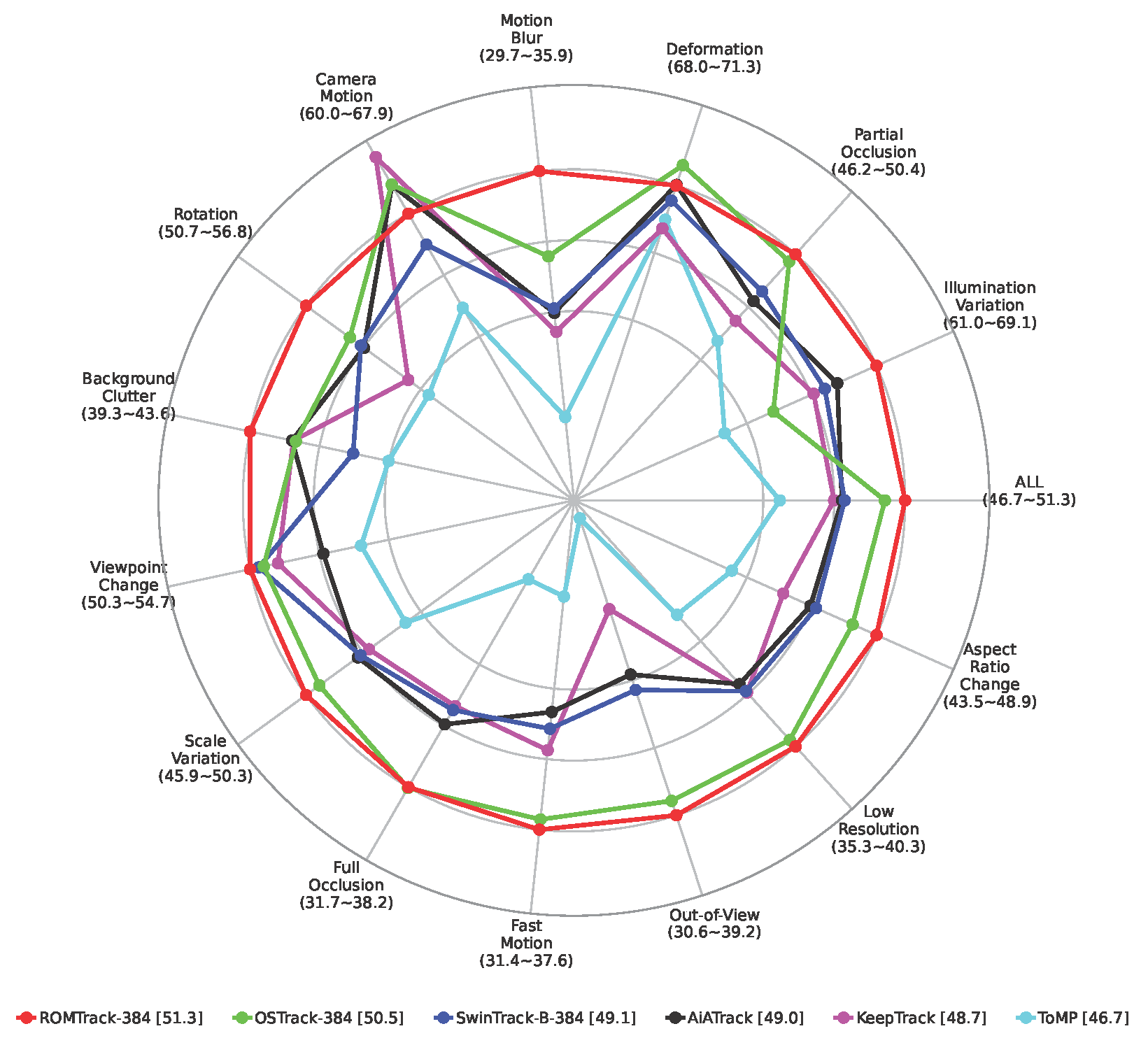

- We update 2 radar plots for visualization on LaSOT and LaSOT_ext.

- We posted a blog on Zhihu; you are welcome to read it.

[October 18, 2023]

- We update the paper to the CVF Open Access version.

- We release poster and video.

[September 21, 2023]

- We release Models and Raw Results of ROMTrack.

- We refine README for more details.

[August 6, 2023]

- We release Code of ROMTrack.

[July 14, 2023]

- ROMTrack is accepted to ICCV 2023!

- Extended Models (Efficiency-Oriented & Performance-Oriented) for ROMTrack

- Repository Upgrade

- More Analysis (Radar Plot) and More Results (TNL2K Dataset)

- Code for ROMTrack

- Model Zoo and Raw Results

- Refine README

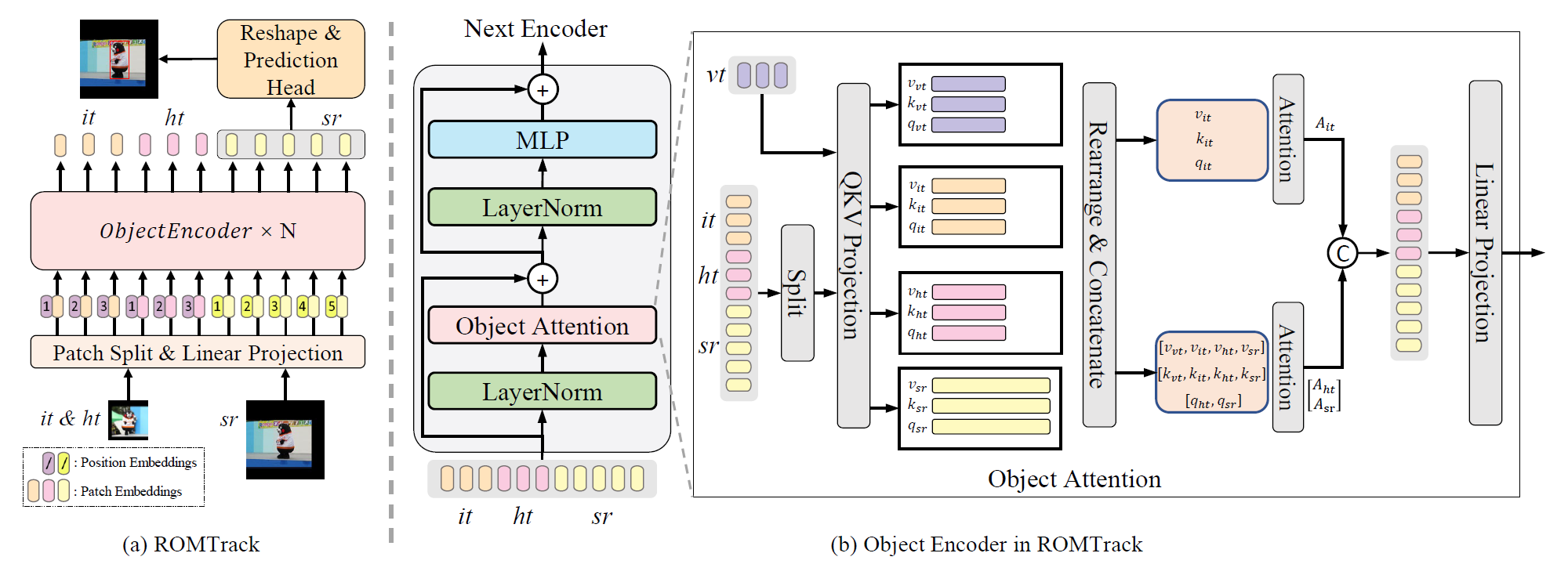

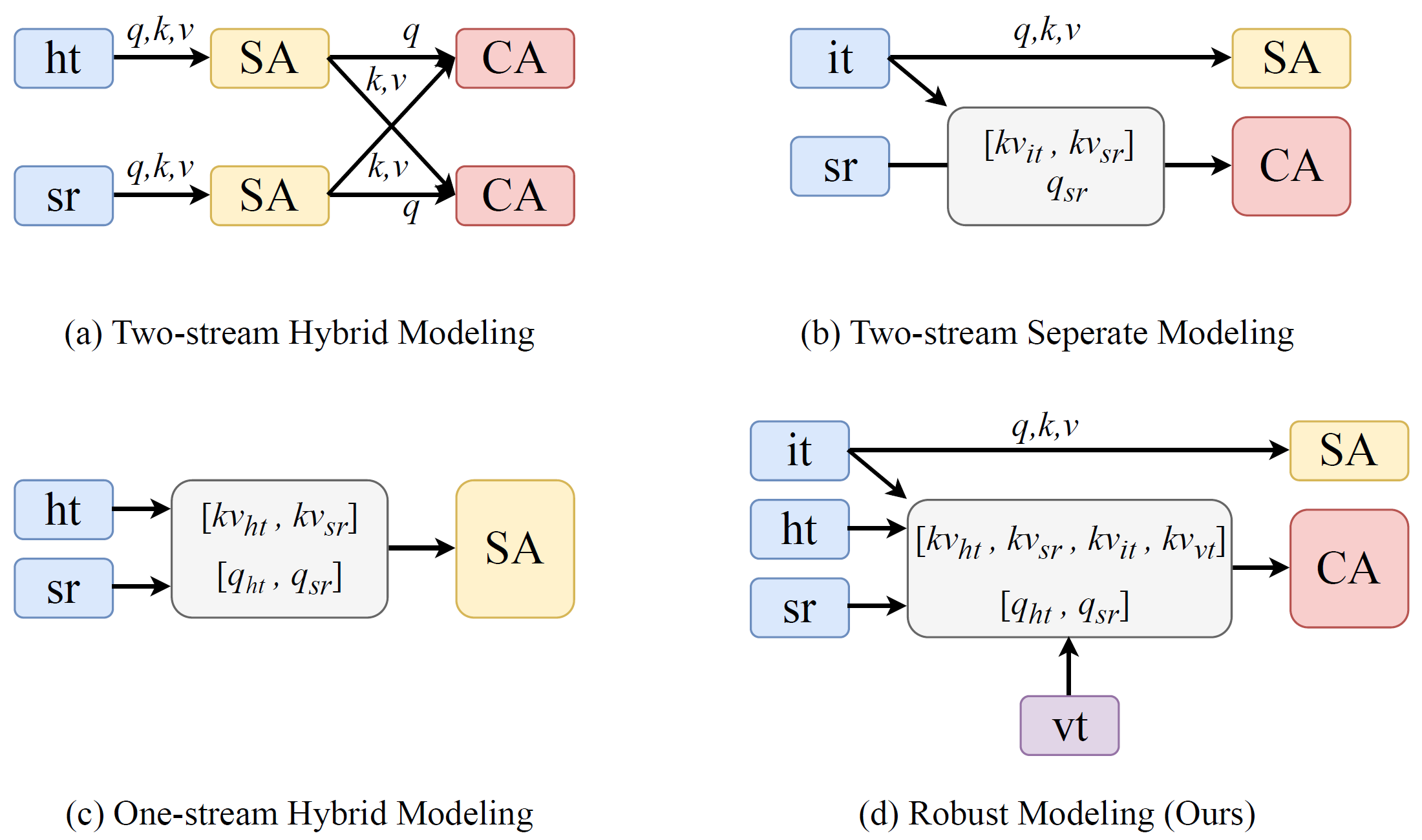

- ROMTrack employs a robust object modeling design that keeps the inherent information of the target template while enabling mutual feature matching between the target and the search region.

- Performance on Benchmarks

- Radar Analysis on LaSOT and LaSOT_ext

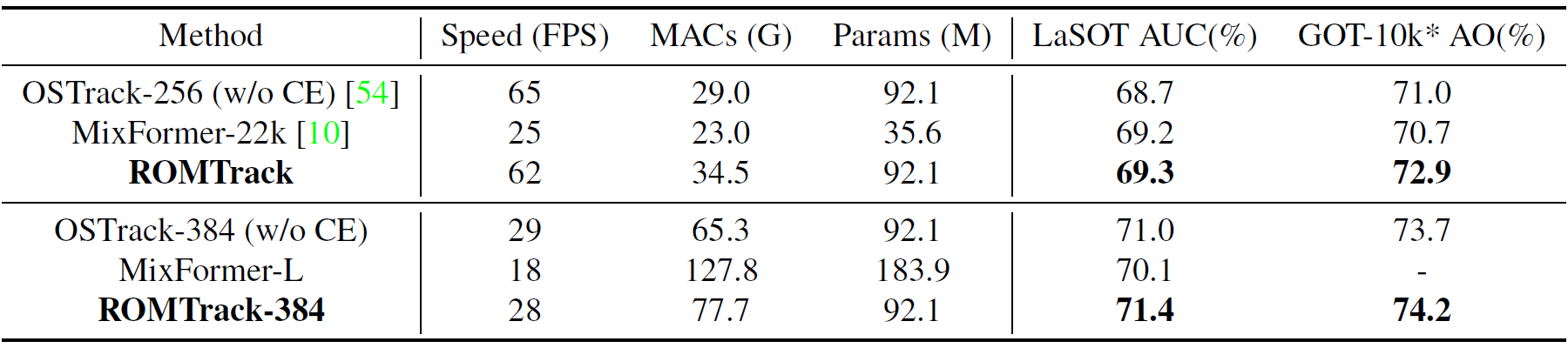

- Speed, MACs, Params (Tested on 1080Ti)

Use Anaconda:

conda create -n romtrack python=3.8

conda activate romtrack

bash install_pytorch.sh

Put the tracking datasets in ./data. It should look like:

    ${ROMTrack_ROOT}
     -- data
         -- lasot
             |-- airplane
             |-- basketball
             |-- bear
             ...
         -- lasot_ext
             |-- atv
             |-- badminton
             |-- cosplay
             ...
         -- got10k
             |-- test
             |-- train
             |-- val
         -- coco
             |-- annotations
             |-- train2017
         -- trackingnet
             |-- TRAIN_0
             |-- TRAIN_1
             ...
             |-- TRAIN_11
             |-- TEST
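Before training, it can help to verify that the layout above is in place. The following is a minimal sketch of such a check; `EXPECTED_LAYOUT` and `missing_dirs` are hypothetical helpers written for this README and are not part of the ROMTrack code base. It assumes the TrackingNet training splits run from `TRAIN_0` to `TRAIN_11`, and it does not inspect the per-category folders of LaSOT or LaSOT_ext.

```python
from pathlib import Path
from typing import Dict, List

# Dataset folder -> sub-folders that should exist (hypothetical helper table).
EXPECTED_LAYOUT: Dict[str, List[str]] = {
    "lasot": [],       # category folders (airplane, basketball, ...) are not checked
    "lasot_ext": [],   # category folders (atv, badminton, ...) are not checked
    "got10k": ["test", "train", "val"],
    "coco": ["annotations", "train2017"],
    "trackingnet": ["TEST"] + [f"TRAIN_{i}" for i in range(12)],
}


def missing_dirs(data_root: str) -> List[str]:
    """Return the expected directories that are absent under data_root."""
    root = Path(data_root)
    missing: List[str] = []
    for dataset, subdirs in EXPECTED_LAYOUT.items():
        base = root / dataset
        if not base.is_dir():
            # The whole dataset folder is absent; skip its sub-folders.
            missing.append(dataset)
            continue
        missing.extend(f"{dataset}/{s}" for s in subdirs if not (base / s).is_dir())
    return missing


if __name__ == "__main__":
    problems = missing_dirs("./data")
    if problems:
        print("Missing directories:")
        for p in problems:
            print("  ", p)
    else:
        print("Dataset layout looks complete.")
```

An empty return value only means the top-level folders exist; it says nothing about whether the datasets themselves were extracted completely.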

Run the following command to set the paths for this project:

python tracking/create_default_local_file.py --workspace_dir . --data_dir ./data --save_dir .

After running this command, you can also modify the paths by editing these two files:

lib/train/admin/local.py # paths about training

lib/test/evaluation/local.py # paths about testing

Train with multiple GPUs using DDP. More details of the other training settings can be found in tracking/train_romtrack.sh.

bash tracking/train_romtrack.sh

- LaSOT/LaSOT_ext/GOT10k-test/TrackingNet/OTB100/UAV123/NFS30. More details of the test settings can be found in tracking/test_romtrack.sh.

bash tracking/test_romtrack.sh

- VOT2020. The current versions are vot-toolkit(==0.5.3) and vot-trax(==3.0.3).

- The commands below use ROMTrack-Large-384 as an example.

### Evaluate ROMTrack-Large-384 with AlphaRefine

vot evaluate --workspace ./external/vot2020/ROMTrack_large_384 ROMTrack_large_384_AR

vot analysis --nocache --workspace ./external/vot2020/ROMTrack_large_384 ROMTrack_large_384_AR

### Evaluate ROMTrack-Large-384 without AlphaRefine

vot evaluate --workspace ./external/vot2020/ROMTrack_large_384 ROMTrack_large_384

vot analysis --nocache --workspace ./external/vot2020/ROMTrack_large_384 ROMTrack_large_384

bash tracking/profile_romtrack.sh

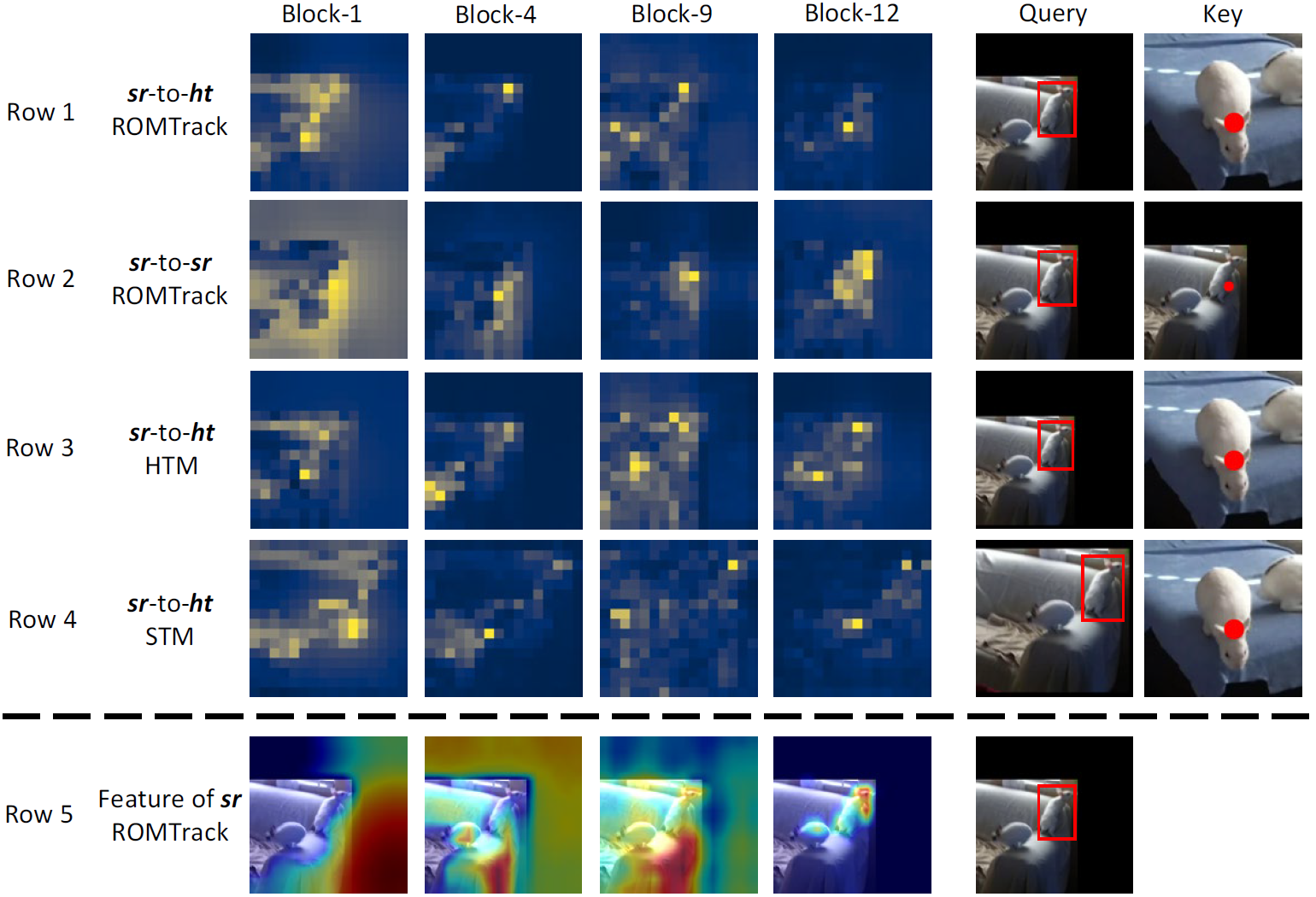

We provide attention maps and feature maps for several sequences on LaSOT. Detailed analysis can be found in our paper.

- Thanks to the STARK, PyTracking, and MixFormer libraries, which helped us quickly implement our ideas and test our performance.

- Our implementation of the ViT is modified from the Timm repo.

If our work is useful for your research, please feel free to star ⭐ and cite our paper:

@InProceedings{Cai_2023_ICCV,

author = {Cai, Yidong and Liu, Jie and Tang, Jie and Wu, Gangshan},

title = {Robust Object Modeling for Visual Tracking},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023},

pages = {9589-9600}

}