This repo presents a new research methodology for automatically detecting students at risk of failing a computer-based examination in computer programming modules (courses). Using historical student data, we build predictive models from students' offline (static) resources, such as student characteristics and demographics, and from online (dynamic) resources, namely programming and behavioural logs. Predictions are generated weekly during the semester and evaluated afterwards.
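As a rough illustration of the weekly prediction idea described above, the sketch below trains one model per week on a past cohort and scores the current cohort once outcomes are known. Everything in it is an assumption made for illustration: the data are synthetic, and the helper names (`simulate_cohort`, `features_up_to`), the logistic regression model and the 12-week semester are not taken from the repo.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(42)
n_weeks = 12  # assumed semester length


def simulate_cohort(n_students):
    """Return synthetic static features, weekly activity counts and at-risk labels."""
    static = rng.normal(size=(n_students, 2))   # stand-in for demographics, prior grades
    engagement = rng.normal(size=n_students)    # hidden trait that drives weekly activity
    rate = np.exp(1.0 + 0.5 * engagement)[:, None]
    weekly = rng.poisson(rate, size=(n_students, n_weeks))
    noise = rng.normal(scale=0.5, size=n_students)
    at_risk = (engagement + 0.5 * static[:, 0] + noise < 0).astype(int)
    return static, weekly, at_risk


def features_up_to(static, weekly, week):
    """Static features plus cumulative activity observed through the given week."""
    return np.column_stack([static, weekly[:, :week].sum(axis=1)])


past_static, past_weekly, past_labels = simulate_cohort(300)   # historical data
cur_static, cur_weekly, cur_labels = simulate_cohort(100)      # cohort being predicted

weekly_accuracy = []
for week in range(1, n_weeks + 1):
    # Train on what was known about past students at this point in the semester.
    model = LogisticRegression(max_iter=1000)
    model.fit(features_up_to(past_static, past_weekly, week), past_labels)
    # Predict for current students using only the data available so far.
    predicted = model.predict(features_up_to(cur_static, cur_weekly, week))
    # Evaluation happens afterwards, once the true outcomes are known.
    weekly_accuracy.append(float((predicted == cur_labels).mean()))
```

Each weekly model only sees activity logged up to that week, which mirrors the constraint a live deployment would face.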

Please consider citing the following if you use any of the work:

    @article{azcona2019detecting,
      title={Detecting students-at-risk in computer programming classes with learning analytics from students’ digital footprints},
      author={Azcona, David and Hsiao, I-Han and Smeaton, Alan F},
      journal={User Modeling and User-Adapted Interaction},
      pages={1--30},
      publisher={Springer}
    }

- Python

- NumPy

- SciPy

- pandas

- Matplotlib

- scikit-learn

- Jupyter

- Data Collection:
  - Programming work
  - Web interactions & events
  - Demographics
  - Academic grades

- Exploratory Data Analysis:

- Handcrafting Features:

- Measure correlations:

- Split Data into Training, Validation and Test:

- Model Selection:

- Predictions in real time!

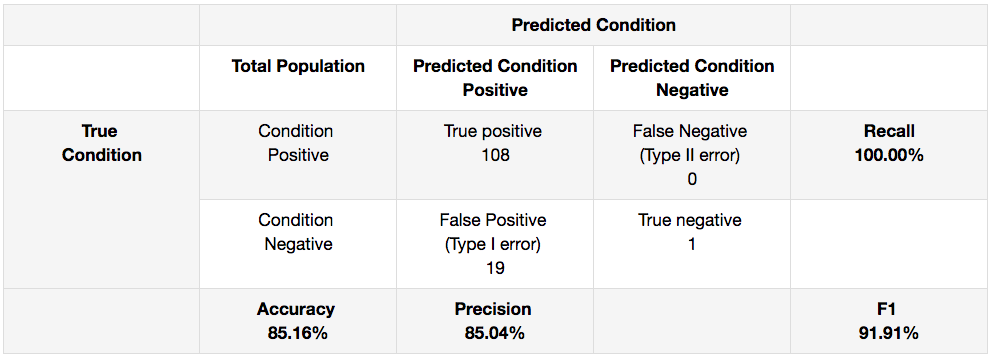

- Evaluation:
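The correlation and data-splitting steps in the list above could be sketched roughly as follows. This is a minimal sketch on synthetic data, not the repo's code: the column names (`programs_submitted`, `web_events`, `prior_grade`, `failed`) and the 60/20/20 stratified split are assumptions, and the actual notebooks may split differently (for example by academic year).

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the real student data (all column names are hypothetical).
rng = np.random.default_rng(0)
n_students = 200
features = pd.DataFrame({
    "programs_submitted": rng.poisson(20, n_students),
    "web_events": rng.poisson(150, n_students),
    "prior_grade": rng.uniform(0, 100, n_students),
})

# Hypothetical outcome: students with a low combined score are labelled as failing.
score = (features["programs_submitted"]
         + 0.2 * features["prior_grade"]
         + rng.normal(0, 5, n_students))
features["failed"] = (score < score.median()).astype(int)

# Measure correlations between each handcrafted feature and the outcome.
correlations = features.corr()["failed"].drop("failed")

# Split into training (60%), validation (20%) and test (20%), stratified on the label.
X = features.drop(columns="failed")
y = features["failed"]
X_train, X_rest, y_train, y_rest = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=0)
X_val, X_test, y_val, y_test = train_test_split(
    X_rest, y_rest, test_size=0.5, stratify=y_rest, random_state=0)
```

Stratifying on the label keeps the pass/fail ratio similar across the three sets, which matters when failing students are a minority.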

You can always view a notebook using https://nbviewer.jupyter.org/

- Create a virtual environment with the `venv` module

- Activate the virtual environment

- Install the dependencies

- List the libraries installed in your environment

- Do your work!

- When you are done, deactivate the virtual environment

$ python3 -m venv env/

$ source env/bin/activate

(env) $ pip install -r requirements.txt

(env) $ pip freeze

(env) $ jupyter notebook

(env) $ ...

(env) $ deactivate

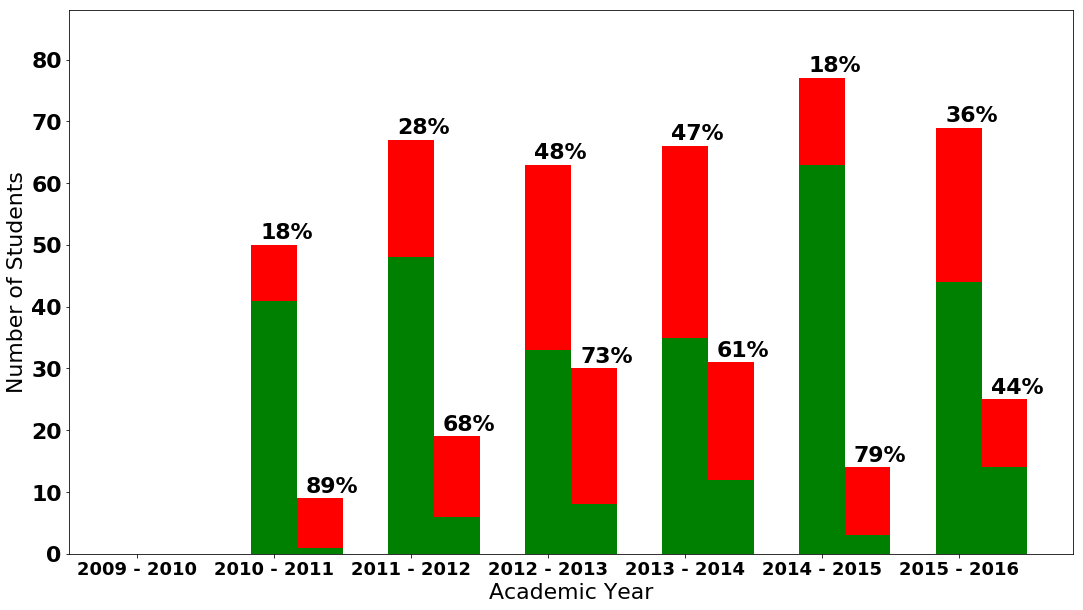

Exploring Passing & Failing Rates for CA114:

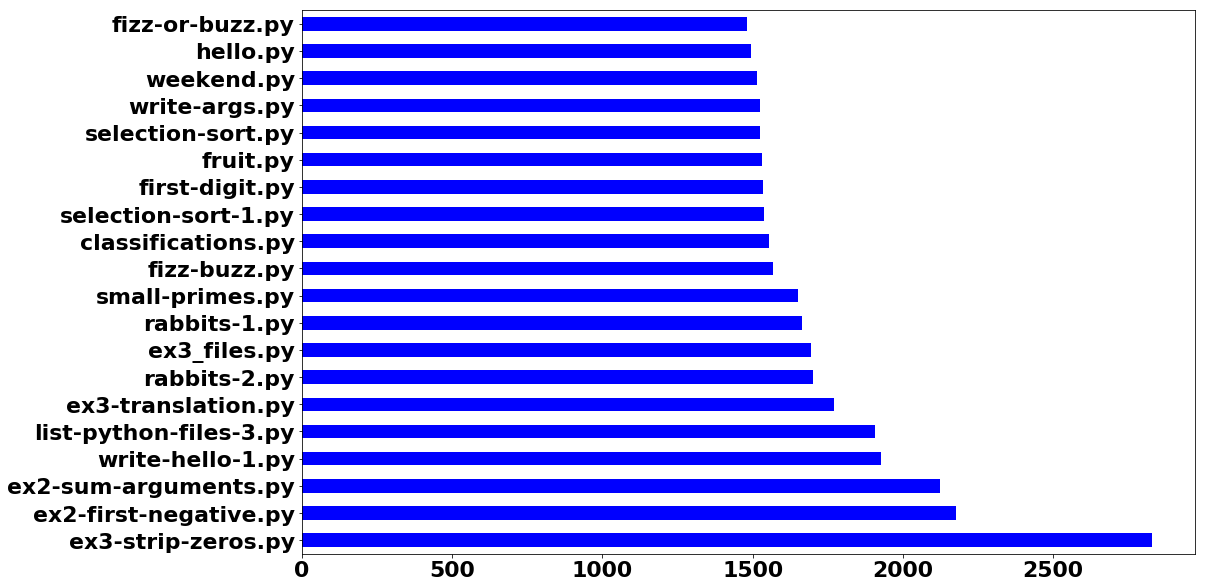

Exploring the top 20 most-submitted tasks:

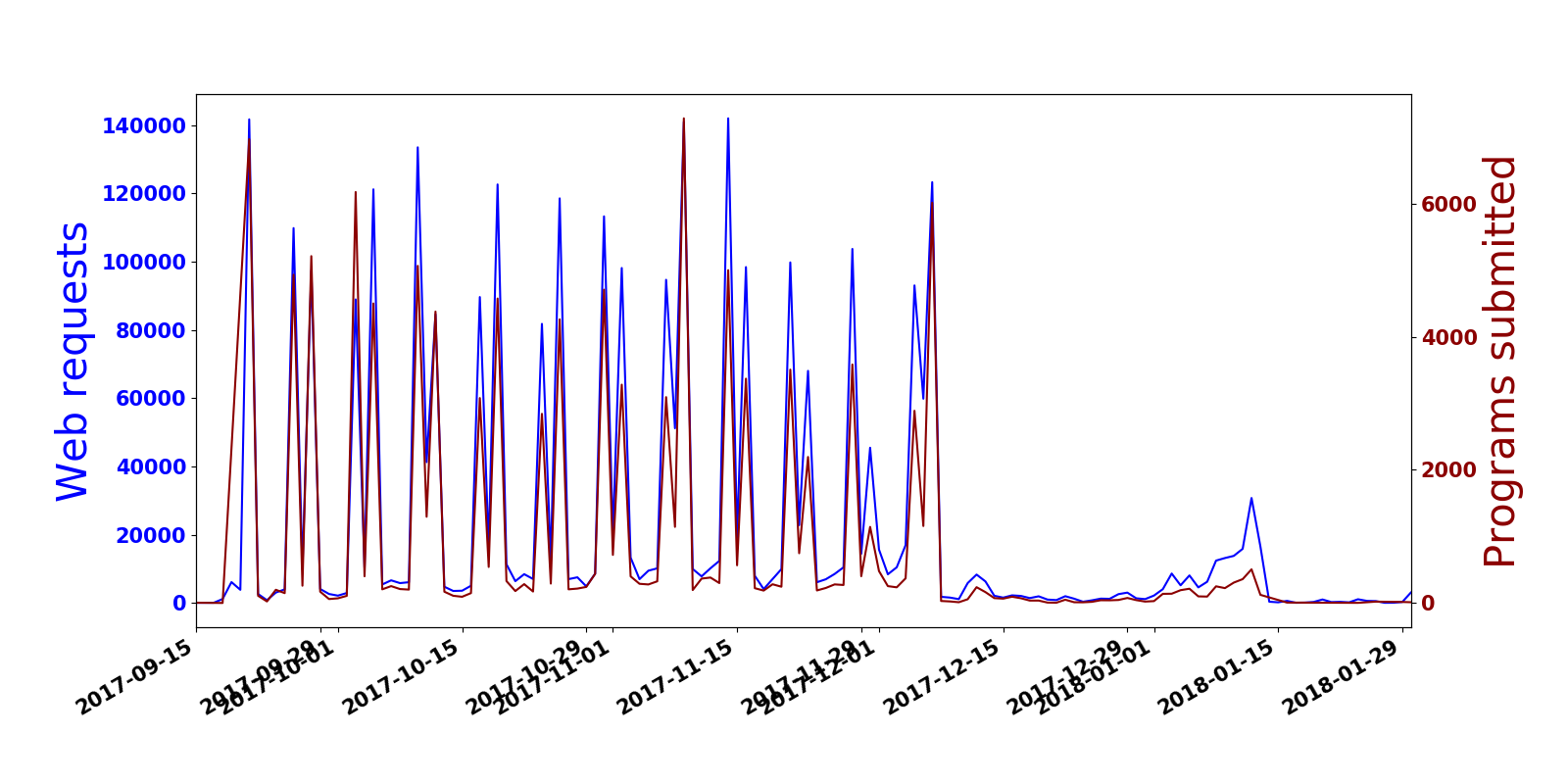

Exploring Programming & Web activity in the 2017/2018 academic year:

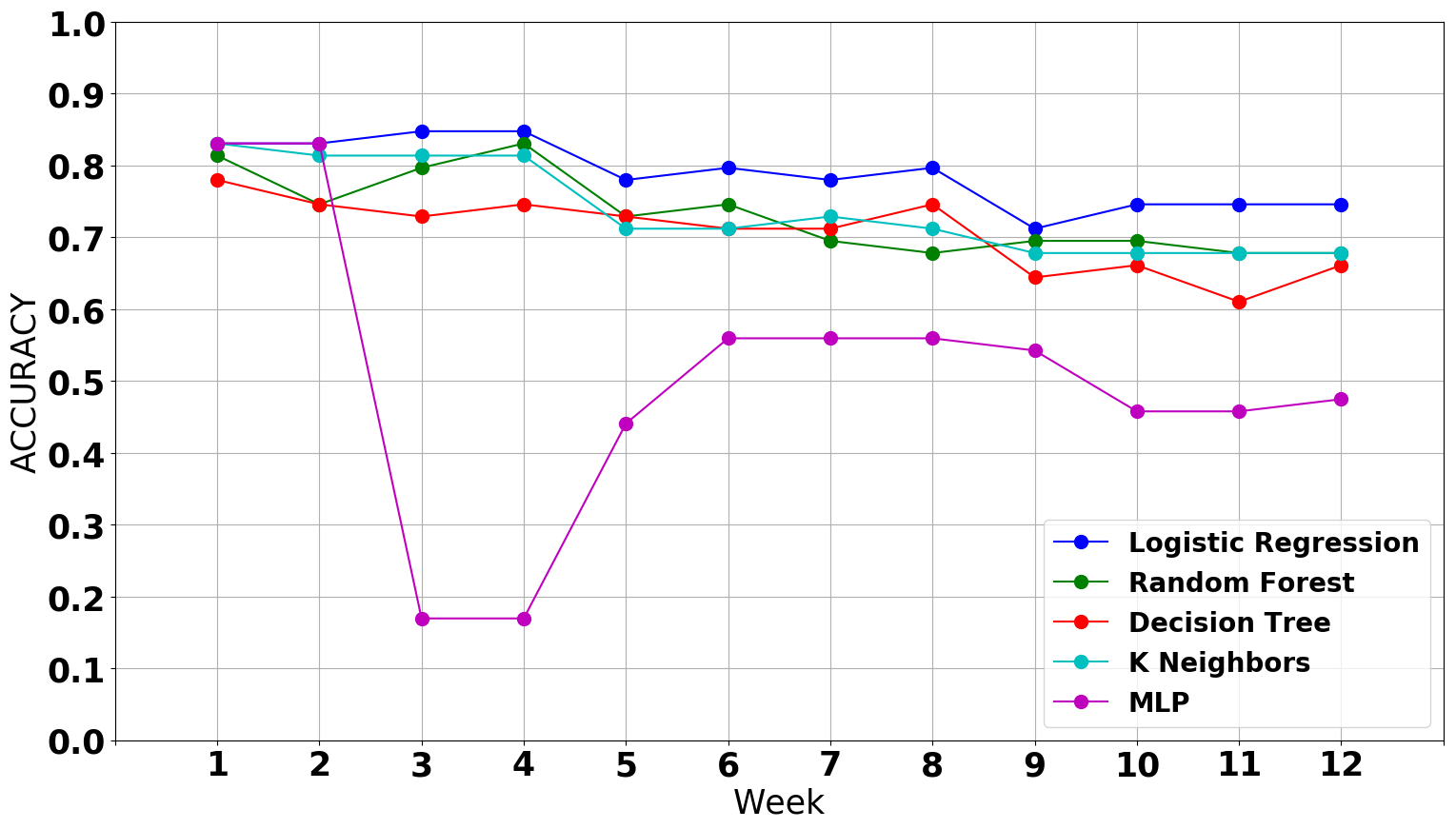

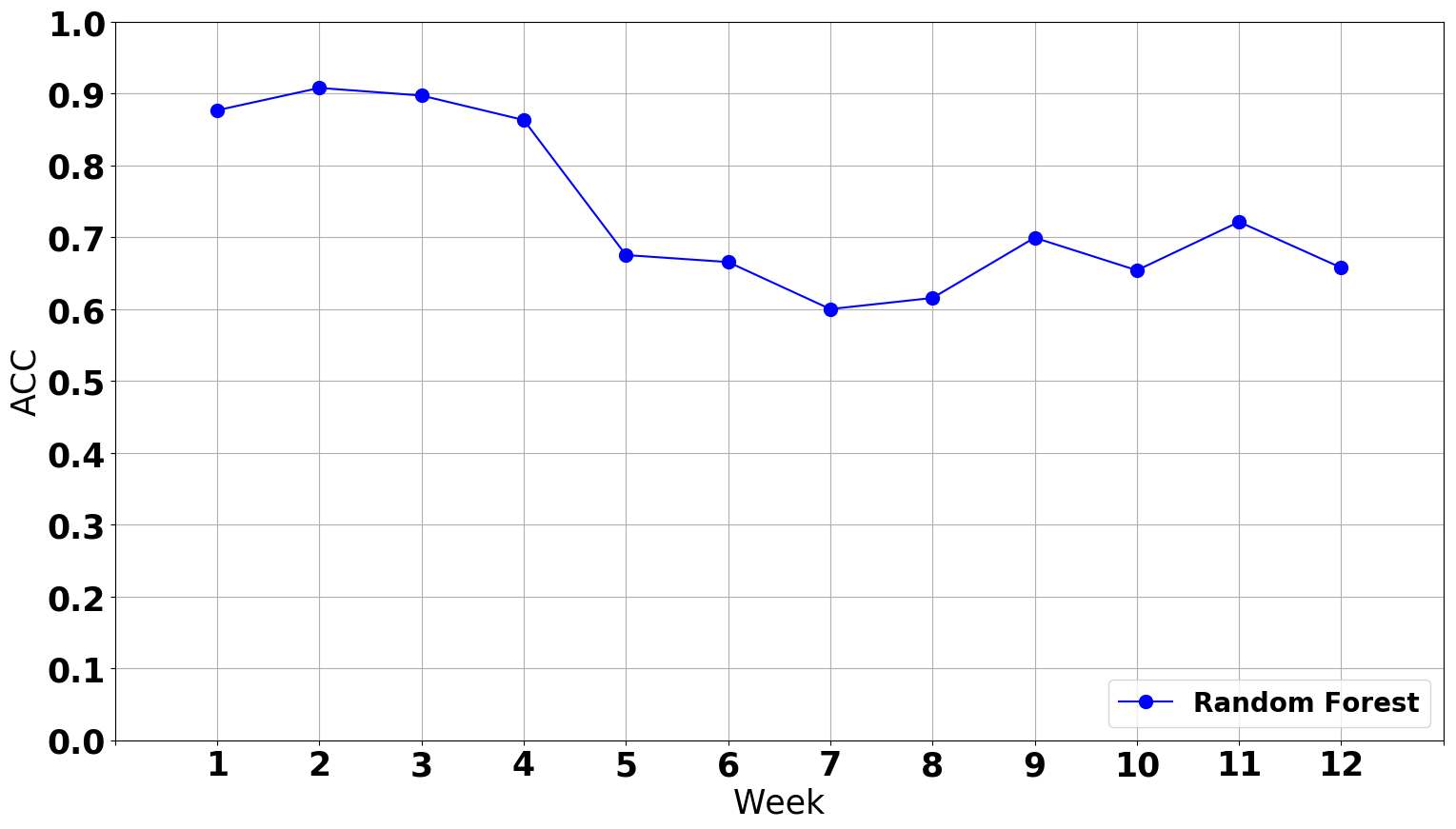

Selecting a model: Empirical Risk Minimization approach
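One way to read the Empirical Risk Minimization approach is: fit each candidate model on the training set, measure its empirical risk (here the average 0-1 loss) on held-out validation data, and keep the candidate with the lowest risk. The sketch below illustrates that idea only. The candidate models, the synthetic dataset and the `empirical_risk` helper are assumptions for illustration and are not taken from the repo's notebooks.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import zero_one_loss
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data standing in for the student features.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# Hypothetical set of candidate models to choose from.
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(max_depth=3, random_state=0),
    "k_nearest_neighbours": KNeighborsClassifier(n_neighbors=5),
}


def empirical_risk(model, X, y):
    """Average 0-1 loss of a fitted model on the given sample."""
    return zero_one_loss(y, model.predict(X))


# Fit every candidate on the training data and record its validation risk.
risks = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)
    risks[name] = empirical_risk(model, X_val, y_val)

# Select the candidate with the lowest empirical risk.
best_name = min(risks, key=risks.get)
best_model = candidates[best_name]
```

The test set is deliberately left out of this loop so that it can give an unbiased estimate of the selected model's performance afterwards.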