This is based on the great work that https://github.com/itwars done with Ansible, all I left to do is to put it all together with terraform and Proxmox!

- The deployment environment must have Ansible 2.4.0+

- Terraform installed

- Proxmox server

for updated documentation check out my medium.

This setup is relaying on cloud-init images.

Using cloud-init image save us a lot of time and it's work great! I use ubuntu focal image, you can use whatever distro you like.

to configure the cloud-init image you will need to connect to a Linux server and run the following:

install image tools on the server (you will need another server, these tools cannot be installed on Proxmox)

apt-get install libguestfs-toolsGet the image that you would like to work with. you can browse to https://cloud-images.ubuntu.com and select any other version that you would like to work with. for Debian, got to https://cloud.debian.org/images/cloud/. it can also work for centos (R.I.P)

wget https://cloud-images.ubuntu.com/focal/current/focal-server-cloudimg-amd64.imgupdate the image and install Proxmox agent - this is a must if we want terraform to work properly. it can take a minute to add the package to the image.

virt-customize focal-server-cloudimg-amd64.img --install qemu-guest-agentnow that we have the image, we need to move it to the Proxmox server.

we can do that by using scp

scp focal-server-cloudimg-amd64.img Proxmox_username@Proxmox_host:/path_on_Proxmox/focal-server-cloudimg-amd64.imgso now we should have the image configured and on our Proxmox server. let's start creating the VM

qm create 9000 --name "ubuntu-focal-cloudinit-template" --memory 2048 --net0 virtio,bridge=vmbr0for ubuntu images, rename the image suffix

mv focal-server-cloudimg-amd64.img focal-server-cloudimg-amd64.qcow2import the disk to the VM

qm importdisk 9000 focal-server-cloudimg-amd64.qcow2 local-lvmconfigure the VM to use the new image

qm set 9000 --scsihw virtio-scsi-pci --scsi0 local-lvm:vm-9000-disk-0add cloud-init image to the VM

qm set 9000 --ide2 local-lvm:cloudinitset the VM to boot from the cloud-init disk:

qm set 9000 --boot c --bootdisk scsi0update the serial on the VM

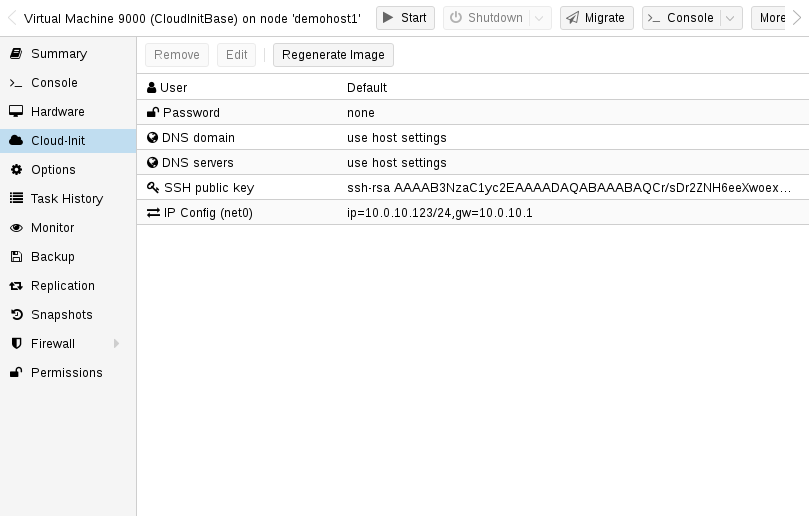

qm set 9000 --serial0 socket --vga serial0Good! so we are almost done with the image. now we can configure our base configuration for the image. you can connect to the Proxmox server and go to your VM and look on the cloud-init tab, here you will find some more parameters that we will need to change.

you will need to change the user name, password, and add the ssh public key so we can connect to the VM later using Ansible and terraform.

update the variables and click on Regenerate Image

Great! so now we can convert the VM to a template and start working with terraform.

qm template 9000our terraform file also creates a dynamic host file for Ansible, so we need to create the files first

cp -R inventory/sample inventory/my-clusterRename the file terraform/variables.tfvars.sample to terraform/variables.tfvars and update all the vars.

there you can select how many nodes would you like to have on your cluster and configure the name of the base image. its also importent to update the ssh key that is going to be used and proxmox host address.

to run the Terrafom, you will need to cd into terraform and run:

cd terraform/

terraform init

terraform plan --var-file=variables.tfvars

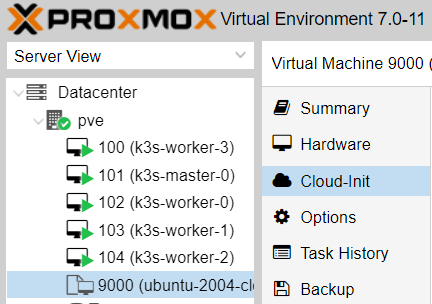

terraform apply --var-file=variables.tfvarsit can take some time to create the servers on Proxmox but you can monitor them over Proxmox. it should look like this now:

First, update the var file in inventory/my-cluster/group_vars/all.yml and update the ansible_user that you're selected in the cloud-init setup. you can also choose if you wold like to install metallb and argocd. if you are installing metallb, you should also specified an ip range for metallb.

if you are running multiple clusters in your kubeconfig file, make sure to disable copy_kubeconfig.

after you run the Terrafom file, your file should look like this:

[master]

192.168.3.200 Ansible_ssh_private_key_file=~/.ssh/proxk3s

[node]

192.168.3.202 Ansible_ssh_private_key_file=~/.ssh/proxk3s

192.168.3.201 Ansible_ssh_private_key_file=~/.ssh/proxk3s

192.168.3.198 Ansible_ssh_private_key_file=~/.ssh/proxk3s

192.168.3.203 Ansible_ssh_private_key_file=~/.ssh/proxk3s

[k3s_cluster:children]

master

nodeStart provisioning of the cluster using the following command:

# cd to the project root folder

cd ..

# run the playbook

ansible-playbook -i inventory/my-cluster/hosts.ini site.ymlIt can a few minutes, but once its done, you should have a k3s cluster up and running.

The ansible should already copy the file to your ~/.kube/config (if you enable the copy_kubeconfig in inventory/my-cluster/group_vars/all.yml), but if you are having issues you can scp and check the status again.

scp debian@master_ip:~/.kube/config ~/.kube/configTo get argocd initial password run the following:

kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d; echo

Kubernets is realy fun to learn and there is so muche things that you can automate.

Have fun :)