Tekton is a Cloud Native solution for building Continuous Integration (CI) pipelines.

Tekton can be combined with ArgoCD as a Continuous Deployment (CD) tool to get a complete CI/CD cycle.

Versions used in this demo:

- OpenShift Pipelines 1.10.3

- OpenShift GitOps 1.8.3

- OpenShift 4.13

Install the OpenShift GitOps operator:

```shell
oc apply -f operator/openshift-gitops.yaml
```

Retrieve the ArgoCD route:

```shell
oc get route -A | grep openshift-gitops-server | awk '{print $3}'
```

Get the ArgoCD admin password:

```shell
oc -n openshift-gitops get secret openshift-gitops-cluster -o json | jq -r '.data["admin.password"]' | base64 -d
```

ArgoCD needs some privileges to create specific resources. To keep this demo simple, we bind a cluster role to ArgoCD instead of setting up fine-grained RBAC.
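The contents of `gitops/cluster-role.yaml` aren't shown here. As an illustration only, a binding of this kind usually grants the built-in `cluster-admin` ClusterRole to the service account of the ArgoCD application controller created by OpenShift GitOps (`openshift-gitops-argocd-application-controller` in the `openshift-gitops` namespace). The binding name `argocd-cluster-admin` is hypothetical; check the repository file before relying on this sketch.

```shell
# Sketch of a ClusterRoleBinding that lets the default ArgoCD instance of
# OpenShift GitOps manage any resource in the cluster.
# The binding name "argocd-cluster-admin" is hypothetical.
cat > argocd-cluster-admin.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: argocd-cluster-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: openshift-gitops-argocd-application-controller
    namespace: openshift-gitops
EOF

# Review the file, then apply it while logged in as a cluster admin:
#   oc apply -f argocd-cluster-admin.yaml
```

Granting `cluster-admin` is convenient for a demo but much broader than ArgoCD needs; a dedicated ClusterRole that lists only the required resources is the safer choice outside a lab.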

```shell
oc apply -f gitops/cluster-role.yaml
```

Now, we apply the bootstrap application:

```shell
oc apply -f gitops/argocd-app-bootstrap.yaml
```

The bootstrap application initializes the cluster with the necessary dependencies and configuration. The repository is organized by function:

- apps: this folder contains the configuration of the applications that are running on the cluster.

- dependencies: dependencies our system needs, which are installed by ArgoCD.

- gitops: all the ArgoCD objects and cluster configuration, such as security role bindings.

- operator: operator definitions.

- pipelines: pipeline definitions, which are also managed by ArgoCD.

- tasks: our own task definitions.

- triggers: triggers that run the pipelines automatically.

- workspaces: PVC creation. You can also use a PVC template instead.
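A Tekton workspace can be backed by an existing PersistentVolumeClaim or by a `volumeClaimTemplate` that creates a fresh PVC for each run. The sketch below shows the first option, assuming a hypothetical claim named `pipeline-workspace` with 1 GiB of `ReadWriteOnce` storage; the name, size and access mode are assumptions, not values taken from the repository.

```shell
# Sketch of a PVC that backs a pipeline workspace.
# Name, size and access mode are hypothetical placeholders.
cat > workspace-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pipeline-workspace
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF

# Apply with: oc apply -f workspace-pvc.yaml
#
# The PVC-template alternative lives inside the PipelineRun instead:
#   workspaces:
#     - name: shared-workspace
#       volumeClaimTemplate:
#         spec:
#           accessModes: ["ReadWriteOnce"]
#           resources:
#             requests:
#               storage: 1Gi
```

A pre-created PVC keeps files between runs, which helps when inspecting results; a template gives every run a clean volume at the cost of provisioning one each time.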

Because we're using ArgoCD, applying the ArgoCD bootstrap application is all we need to install the Tekton operator. We already did this in the previous step.
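The operator manifests in the repository aren't reproduced here. For reference, operators are installed through OLM with a `Subscription`; a sketch for OpenShift Pipelines could look like the following. The channel `pipelines-1.10` is chosen to match the version listed above, and it and the catalog source values are assumptions; confirm them with `oc get packagemanifest openshift-pipelines-operator-rh -n openshift-marketplace`.

```shell
# Sketch of an OLM Subscription for the OpenShift Pipelines (Tekton) operator.
# Channel and catalog source values are assumptions; verify them on your cluster.
cat > pipelines-subscription.yaml <<'EOF'
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: openshift-pipelines-operator-rh
  namespace: openshift-operators
spec:
  channel: pipelines-1.10
  name: openshift-pipelines-operator-rh
  source: redhat-operators
  sourceNamespace: openshift-marketplace
EOF

# When this file sits in a folder synced by the bootstrap application,
# ArgoCD applies it. By hand it would be:
#   oc apply -f pipelines-subscription.yaml
```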

A task is a series of steps that perform one or more actions, such as cloning, building or testing. A task receives input parameters and produces outputs that other tasks can use.

Out of the box, Tekton provides tasks for common operations, but we can also build our own. A task is deployed in OpenShift as a Kubernetes custom resource, so it can be reused.

A task can be run with specific parameters; each such execution is called a TaskRun. In our case, we only run tasks as part of Pipelines.
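To make the Task/TaskRun relationship concrete, here is a minimal sketch that is not one of the repository's tasks: a hypothetical `echo-message` Task with one parameter, one step and one result, plus a TaskRun that executes it once. It uses the `tekton.dev/v1beta1` API, which OpenShift Pipelines 1.10 serves; the UBI image is an arbitrary choice.

```shell
# Hypothetical Task: prints a message and exposes it as a result
# that later tasks in a pipeline could read.
cat > echo-task.yaml <<'EOF'
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: echo-message
spec:
  params:
    - name: message
      type: string
      default: "Hello from Tekton"
  results:
    - name: echoed
      description: The message that was printed
  steps:
    - name: echo
      image: registry.access.redhat.com/ubi9/ubi
      script: |
        #!/usr/bin/env bash
        echo "$(params.message)"
        printf '%s' "$(params.message)" > "$(results.echoed.path)"
EOF

# A TaskRun is one execution of the Task with concrete parameter values.
cat > echo-taskrun.yaml <<'EOF'
apiVersion: tekton.dev/v1beta1
kind: TaskRun
metadata:
  generateName: echo-message-run-
spec:
  taskRef:
    name: echo-message
  params:
    - name: message
      value: "Hello from a TaskRun"
EOF

# The Task can be applied; the TaskRun uses generateName, so it needs "create":
#   oc apply -f echo-task.yaml
#   oc create -f echo-taskrun.yaml
```

The heredoc delimiter is quoted (`'EOF'`), so the shell leaves `$(params.message)` untouched and Tekton substitutes it at run time.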

For this example, we're going to use these tasks:

- git-clone: to clone the repository

- maven: to test and build the artifact

- buildah: to build the image

A pipeline defines a series of tasks that build a specific application or functional unit. A pipeline can be started manually, by creating a PipelineRun, or by an event.

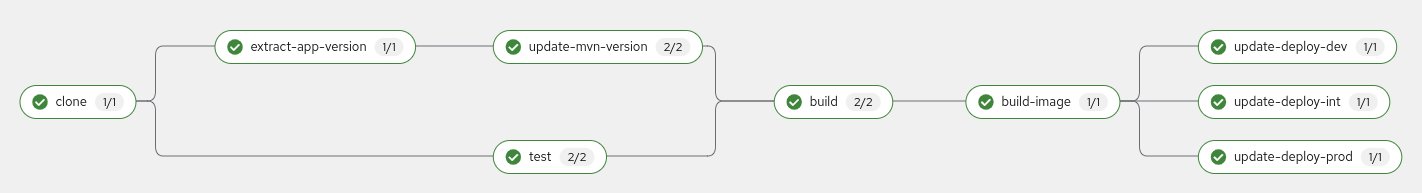
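Starting a pipeline manually amounts to creating a PipelineRun. A sketch, assuming a hypothetical pipeline named `build-app` with a `git-url` parameter and a `shared-workspace` workspace backed by a PVC called `pipeline-workspace`; none of these names come from the repository.

```shell
# Sketch of a manual PipelineRun for a hypothetical "build-app" pipeline.
cat > build-app-run.yaml <<'EOF'
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  generateName: build-app-run-
spec:
  pipelineRef:
    name: build-app
  params:
    - name: git-url
      value: https://github.com/example/app.git   # hypothetical repository
  workspaces:
    - name: shared-workspace
      persistentVolumeClaim:
        claimName: pipeline-workspace
EOF

# generateName requires "create" rather than "apply":
#   oc create -f build-app-run.yaml
# The tkn CLI can do the same without a file:
#   tkn pipeline start build-app -p git-url=https://github.com/example/app.git \
#     -w name=shared-workspace,claimName=pipeline-workspace --showlog
```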

In our case, the pipeline uses the previous task definitions as its steps: clone the repository (`git-clone`), test and build the artifact (`maven`), and build the image (`buildah`).
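A sketch of how such a pipeline chains the three tasks. It assumes the `git-clone`, `maven` and `buildah` ClusterTasks that ship with OpenShift Pipelines 1.10 and their usual parameter and workspace names (`url`/`output`, `GOALS`/`source`/`maven-settings`, `IMAGE`/`DOCKERFILE`/`source`); check them with `tkn clustertask describe <name>`. The pipeline name, parameters, workspaces and the Quarkus Dockerfile path are hypothetical, and the final step that updates the Git repository watched by ArgoCD is left out.

```shell
# Hypothetical "build-app" pipeline: clone -> maven package -> buildah.
cat > build-app-pipeline.yaml <<'EOF'
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: build-app
spec:
  params:
    - name: git-url
      type: string
    - name: image
      type: string
      description: Full image reference to push, including the tag
  workspaces:
    - name: shared-workspace
    - name: maven-settings
  tasks:
    - name: clone
      taskRef:
        name: git-clone
        kind: ClusterTask
      params:
        - name: url
          value: $(params.git-url)
      workspaces:
        - name: output
          workspace: shared-workspace
    - name: build
      runAfter: ["clone"]
      taskRef:
        name: maven
        kind: ClusterTask
      params:
        - name: GOALS
          value: ["package"]   # "package" also runs the test phase
      workspaces:
        - name: source
          workspace: shared-workspace
        - name: maven-settings
          workspace: maven-settings
    - name: build-image
      runAfter: ["build"]
      taskRef:
        name: buildah
        kind: ClusterTask
      params:
        - name: IMAGE
          value: $(params.image)
        - name: DOCKERFILE
          value: ./src/main/docker/Dockerfile.jvm   # assumed Quarkus layout
      workspaces:
        - name: source
          workspace: shared-workspace
EOF

# Apply with: oc apply -f build-app-pipeline.yaml
# A PipelineRun must bind both workspaces; maven-settings can be an
# emptyDir when no custom settings.xml is needed.
```

All three tasks share one workspace, so the sources cloned by the first task and the artifact built by Maven are visible to Buildah; `runAfter` enforces that order.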

Triggers let us run the pipeline automatically. For example, in this workshop we configure a webhook in our Git repository that starts the pipeline. To do that, we use the following objects:

- Route: defines the endpoint that the webhook calls.

- EventListener: defines how to react when a webhook is received. It's essential to fill in its `bindings` and `template` sections.

- TriggerBinding: indicates the values that replace the different pipeline variables; for example, you can use values received in the webhook.

- TriggerTemplate: the definition of the `PipelineRun`, which is the object that represents a pipeline execution.
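A sketch of how these objects fit together, using the `triggers.tekton.dev/v1beta1` API. All names are hypothetical, as is the `build-app` pipeline being started. It assumes the Gogs push payload exposes `repository.clone_url` (Gogs payloads follow the GitHub format) and that the default `pipeline` service account of OpenShift Pipelines may create PipelineRuns.

```shell
cat > build-app-triggers.yaml <<'EOF'
# TriggerBinding: pulls values out of the webhook body.
apiVersion: triggers.tekton.dev/v1beta1
kind: TriggerBinding
metadata:
  name: push-binding
spec:
  params:
    - name: git-url
      value: $(body.repository.clone_url)
---
# TriggerTemplate: the PipelineRun created for every accepted event.
apiVersion: triggers.tekton.dev/v1beta1
kind: TriggerTemplate
metadata:
  name: build-app-template
spec:
  params:
    - name: git-url
  resourcetemplates:
    - apiVersion: tekton.dev/v1beta1
      kind: PipelineRun
      metadata:
        generateName: build-app-run-
      spec:
        pipelineRef:
          name: build-app
        params:
          - name: git-url
            value: $(tt.params.git-url)
        workspaces:
          - name: shared-workspace
            persistentVolumeClaim:
              claimName: pipeline-workspace
---
# EventListener: receives the webhook and wires the binding to the template.
apiVersion: triggers.tekton.dev/v1beta1
kind: EventListener
metadata:
  name: build-app-listener
spec:
  serviceAccountName: pipeline
  triggers:
    - name: on-push
      bindings:
        - ref: push-binding
      template:
        ref: build-app-template
EOF

# Apply, then expose the Service the EventListener creates (prefixed "el-")
# so the webhook has a Route to call:
#   oc apply -f build-app-triggers.yaml
#   oc expose service el-build-app-listener
```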

Once our application image is in the container registry, we can deploy it.

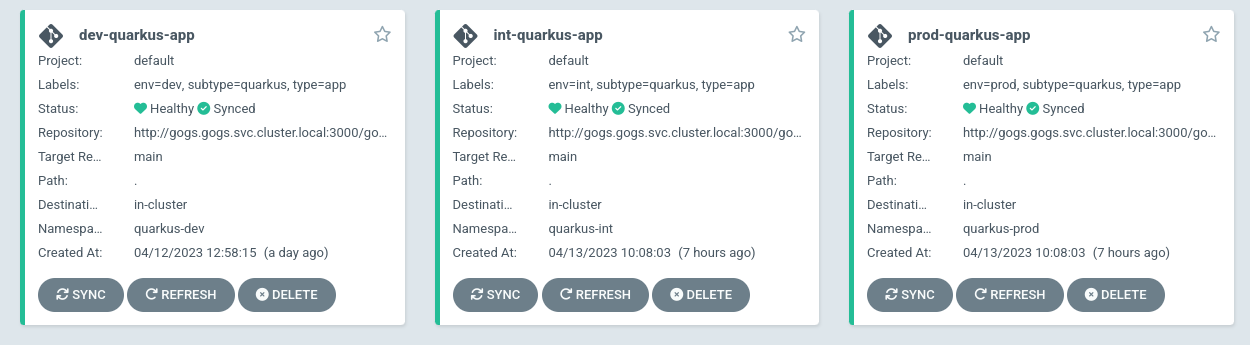

ArgoCD ensures that the definition of our deployment is exactly what we have in a Git repository.

In this example, our pipeline finishes by changing the image version in a Git repository that ArgoCD watches. When ArgoCD detects the change, it updates the OpenShift deployment.
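The repository's actual update step isn't shown here. One way to implement it is to rewrite the image tag in the Helm chart's values file and commit the result. The sketch below assumes a hypothetical `values.yaml` layout with the version under `image.tag`; the helper name `update_image_tag` and the example registry path are also hypothetical.

```shell
#!/usr/bin/env bash
# update_image_tag FILE TAG
# Rewrites every "tag:" line in a Helm values file so it points at TAG.
# Assumes the chart keeps the image version under an "image.tag" key
# (hypothetical layout; adapt the pattern to the real chart).
update_image_tag() {
  local file="$1" tag="$2"
  # -i.bak keeps the in-place edit portable between GNU and BSD sed.
  sed -i.bak "s|^\([[:space:]]*tag:[[:space:]]*\).*|\1\"${tag}\"|" "$file"
  rm -f "${file}.bak"
}

# Example on a throwaway values file.
cat > values-example.yaml <<'EOF'
image:
  repository: image-registry.openshift-image-registry.svc:5000/demo/app
  tag: "1.0.0"
EOF

update_image_tag values-example.yaml "1.0.1"
cat values-example.yaml

# In the pipeline, this would be followed by committing and pushing the
# change to the repository ArgoCD watches, for example:
#   git commit -am "Update image to 1.0.1" && git push
```

Keeping the version in Git, rather than patching the Deployment directly, is what lets ArgoCD remain the single source of truth for what runs in each environment.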

If you've completed the installation section, you can start this step.

At this point, we've deployed all our dependencies, security configuration and ArgoCD applications, but we still need our code. I've prepared two repositories: one with a Quarkus application and another with a Helm chart that contains the deployment definition.

Now it's time to play with them.

Although our repositories are on GitHub, we fork them into an internal Git server to avoid lots of unnecessary commits.

This internal server is Gogs. You can open ArgoCD and check the state of the Gogs application; if it is correctly synchronized, you can log in to Gogs with user `gogs` and password `gogs`.

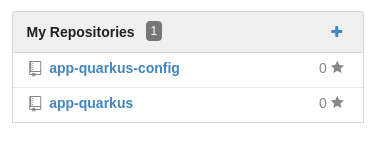

To get the Gogs URL:

```shell
oc get route -n gogs gogs -o template --template='{{"http://"}}{{.spec.host}}'
```

Once logged in, we can see that there are no repositories yet, so we'll initialize them with a dedicated pipeline. To run it:

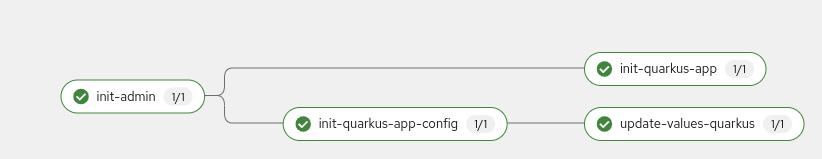

```shell
oc apply -f gitops/init-pipeline-run.yaml
```

You can follow the pipeline execution in the OpenShift console, in the `Pipelines` section.

After a few seconds, the projects appear in Gogs.

At this point, we can clone the application repository and modify some code. When we push the changes to the repository, the application pipeline starts. You can follow it in the same place as the Gogs initialization pipeline.

In ArgoCD we have three applications, one per environment (dev, pre and prod), so we can inspect each one and test the application directly.