Deep-learning-based low-light image enhancer specialized in restoring dark images from events (concerts, parties, clubs, ...).

- Project structure

- General usage of the project

- Usage of the web-application and REST API based on the model

The deliverables for Assignment 1 - Initiate are located in milestones/initiate.md. For Assignment 2 - Hacking, information that complements the general usage described in this README is in milestones/hacking.md.

The following figure describes how the repository is structured and which additional non-versioned folders need to be created to run the project (more details on how to use the released weights and datasets are given in General usage of the project).

The model consists of multiple successive residual recurrent groups, preceded and followed by a single convolutional layer, with a residual connection between the input and the output. The layers enclosed by this residual connection therefore predict the values that are added to the original image to brighten it. Each residual recurrent group follows a similar pattern: a convolutional layer, multiple multi-scale residual blocks, another convolutional layer, and a residual connection back to the input of the group. The multi-scale residual blocks have the following structure:

In a Python 3.10 environment, install the requirements using pip install -r requirements.txt.

In case of version conflicts, the exact versions of the packages used during development are specified in requirements_with_versions.txt, which you can use in place of requirements.txt in the previous command.

Model training is generally performed with the training/train.py script. Before running it, the training run must be configured in training/config.yaml (or any other yaml file in that directory). The configuration file contains the following parameters:

```yaml
# *** REQUIRED SETTINGS ***
epochs: int # the number of epochs to train for.
batch_size: int
learning_rate: float
early_stopping: bool # whether to enable early stopping.
train_dataset_path: string # path to the training dataset - should contain the 'imgs' and 'targets' folders with images.
val_dataset_path: string
test_dataset_path: string
image_size: 128 # At training, random crops of (image_size//2, image_size//2) are used. At validation/testing, the smallest dimension of the image is resized to image_size while preserving the aspect ratio.

# *** OPTIONAL SETTINGS ***
from_pretrained: string # Used for finetuning: path to the pretrained model weights (.pth file).
resume_epoch: int # Used to resume training from a given checkpoint. If specified and a file in the format model/weights/Mirnet_enhance{resume_epoch}.pth exists (automatic checkpoints are named this way), the optimizer, scheduler and model states will be restored and training will resume from there up to the desired number of epochs.
num_features: int # Number of channels to be used by the MIRNet, defaults to 64 if not specified.
num_msrb: int # Number of MSRB blocks in the MIRNet recurrent residual groups, defaults to 2.
workers: int # Number of workers to use for data loading, defaults to 8.
disable_mixup: bool # Whether to disable mixup augmentation. By default, mixup is enabled.
```

Then, run the training script while pointing it to your configuration file:

$ python training/train.py --config training/config.yaml
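The way the optional settings fall back to their documented defaults can be summarised in a short sketch. It assumes the YAML file has already been parsed into a dictionary (for example with PyYAML's yaml.safe_load); resolve_config, REQUIRED_KEYS and OPTIONAL_DEFAULTS are hypothetical names and not part of training/train.py.

```python
from typing import Any

# Settings listed under "REQUIRED SETTINGS" above
REQUIRED_KEYS = (
    "epochs",
    "batch_size",
    "learning_rate",
    "early_stopping",
    "train_dataset_path",
    "val_dataset_path",
    "test_dataset_path",
    "image_size",
)

# Defaults documented for the optional settings
OPTIONAL_DEFAULTS = {
    "num_features": 64,
    "num_msrb": 2,
    "workers": 8,
    "disable_mixup": False,
}


def resolve_config(raw: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical helper: reject missing required keys, fill optional defaults."""
    missing = [key for key in REQUIRED_KEYS if key not in raw]
    if missing:
        raise KeyError(f"missing required settings: {missing}")
    # from_pretrained and resume_epoch have no default: None means "not used"
    resolved: dict[str, Any] = {"from_pretrained": None, "resume_epoch": None}
    resolved.update(OPTIONAL_DEFAULTS)
    # Values from the file override the defaults
    resolved.update(raw)
    return resolved
```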

Training can be interrupted at any time and resumed later by setting the resume_epoch parameter in the configuration file. Since the model is automatically checkpointed at every epoch, the script restores the optimizer, scheduler and model states from the checkpoint of that epoch and resumes training from there. To save disk space, if the validation PSNR does not improve for 5 consecutive epochs, the checkpoints of those 5 epochs are deleted.
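The checkpoint clean-up rule can be sketched as a small bookkeeping class. This is an illustration under assumptions, not the project's code: CheckpointPruner is a hypothetical name, and whether the counter restarts after a deletion is not documented, so the sketch simply restarts it. The file name pattern is the one given for resume_epoch.

```python
from pathlib import Path

# Naming scheme of the automatic checkpoints (from the resume_epoch description)
CHECKPOINT_PATTERN = "model/weights/Mirnet_enhance{epoch}.pth"


class CheckpointPruner:
    """Hypothetical tracker deciding which checkpoints to delete."""

    def __init__(self, patience: int = 5) -> None:
        self.patience = patience
        self.best_psnr = float("-inf")
        self.stale_epochs: list[int] = []

    def update(self, epoch: int, val_psnr: float) -> list[Path]:
        """Record one epoch and return the checkpoint paths to delete."""
        if val_psnr > self.best_psnr:
            # Improvement: keep this checkpoint and reset the stale window
            self.best_psnr = val_psnr
            self.stale_epochs.clear()
            return []
        self.stale_epochs.append(epoch)
        if len(self.stale_epochs) < self.patience:
            return []
        # `patience` epochs in a row without improvement: drop their checkpoints
        to_delete = [Path(CHECKPOINT_PATTERN.format(epoch=e)) for e in self.stale_epochs]
        self.stale_epochs.clear()
        return to_delete
```

A training loop would then remove the returned files, e.g. with path.unlink(missing_ok=True).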

Warning

As Weights & Biases (WANDB) is used for experiment tracking (logging configurations, training runs, metrics, ...), your API key will be requested the first time you run the script. You can find your API key in your WANDB account.

The hyperparameters of the training procedure can be optimized using the training/hyperparameter_optimization.py script. The procedure relies on WANDB Sweeps to test and compare different combinations of hyperparameters. The tuning can be run with python training/hyperparameter_optimization.py. The value grid, as well as the exploration method (random, Bayes, grid search...) can be further adapted at the bottom of the script.
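For reference, a WANDB sweep is described by a dictionary with a search method, a metric to optimize and a set of parameters. The sketch below shows that format; the metric name val_psnr, the project name and the parameter ranges are assumptions and not the values used in training/hyperparameter_optimization.py.

```python
# Hypothetical sweep definition in the format accepted by wandb.sweep
SWEEP_CONFIG = {
    "method": "bayes",  # W&B also accepts "grid" and "random"
    "metric": {"name": "val_psnr", "goal": "maximize"},  # assumed metric name
    "parameters": {
        "learning_rate": {"distribution": "log_uniform_values", "min": 1e-5, "max": 1e-3},
        "batch_size": {"values": [8, 16, 32]},
        "num_msrb": {"values": [1, 2, 3]},
    },
}


def launch_sweep(train_fn, project: str = "low-light-enhancer", count: int = 20) -> None:
    """Register the sweep and run `count` trials of train_fn (hypothetical helper)."""
    import wandb  # imported lazily so the config can be inspected without wandb

    sweep_id = wandb.sweep(SWEEP_CONFIG, project=project)
    wandb.agent(sweep_id, function=train_fn, count=count)
```

Switching "method" to "grid" requires every parameter to be given as a discrete "values" list, so the log-uniform learning rate would need to be replaced by explicit values in that case.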

The MIRNets released in this project have first been pre-trained on the LoL dataset for 100 epochs, on 64x64 crops from images where the smallest dimension was resized to 128, but you can use the following instructions with any other dataset to pre-train on different data.
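The crop geometry described above (smallest side resized to image_size = 128, then random crops of image_size//2 = 64) can be illustrated with two small helpers. They are hypothetical and only compute sizes and boxes; the box uses the (left, top, right, bottom) convention of PIL's Image.crop.

```python
import random


def resize_shorter_side(width, height, image_size=128):
    """Scale (width, height) so the smaller side equals image_size, keeping the aspect ratio."""
    scale = image_size / min(width, height)
    return round(width * scale), round(height * scale)


def random_crop_box(width, height, crop_size, rng):
    """Pick a random crop_size x crop_size window as (left, top, right, bottom)."""
    left = rng.randint(0, width - crop_size)
    top = rng.randint(0, height - crop_size)
    return left, top, left + crop_size, top + crop_size


rng = random.Random(0)
w, h = resize_shorter_side(600, 400)        # (192, 128)
box = random_crop_box(w, h, 128 // 2, rng)   # a 64x64 window inside the 192x128 image
```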

The weights resulting from the LoL pre-training can be obtained from this release, and the corresponding training configuration file is available here. To obtain the pre-training dataset, follow the steps in the Generating the datasets section.

To reproduce the pre-training from scratch, you can run the following command after setting up a pre-training dataset:

$ python training/train.py --config training/pretraining_config.yaml

The fine-tuned model is based on the pre-trained weights described in the previous section. It was further trained on the fine-tuning dataset of event photographs for up to 100 epochs, with early stopping (which kicked in at 35 epochs), on 64x64 crops from images whose smallest dimension was resized to 128. Freezing most of the earlier layers of the pre-trained model during fine-tuning led to the best results.

The weights resulting from the fine-tuning can be obtained from this release, and the corresponding configuration file is available here. To obtain the fine-tuning dataset or set up your own, follow the steps in the Generating the datasets section.

To reproduce the fine-tuning from scratch, you can run the following command after setting up a fine-tuning dataset:

$ python training/train.py --config training/finetuning_config.yaml

Note

For fine-tuning, the from_pretrained parameter in the configuration file must be set to the path of the pre-trained weights, as visible in the fine-tuning configuration file and explained in Training the model.

To run model inference, put the model weights in the model/weights folder. Weights of the pre-trained and fine-tuned MIRNet models are available in the releases.

To enhance a low-light image with a model, run the inference script as follows:

$ python inference/enhance_image.py -i <path_to_input_image> [-o <path_to_output_folder> -m <path_to_model>]

# or

$ python inference/enhance_image.py --input_image_path <path_to_input_image> [--output_folder_path <path_to_output_folder> --model_path <path_to_model>]

- If the output folder is not specified, the enhanced image is written to the directory the script is run from.

- If the model path is not specified, the default model defined in the MODEL_PATH constant of the script (which can be updated as needed) is used.
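The options and fallbacks above map naturally onto an argparse parser. The sketch below only reproduces the documented flags and defaults; the MODEL_PATH value is a placeholder, since the real default lives in inference/enhance_image.py.

```python
import argparse
import os

# Placeholder: the actual default is the MODEL_PATH constant of enhance_image.py
MODEL_PATH = "model/weights/<default_model>.pth"


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the documented command-line options."""
    parser = argparse.ArgumentParser(description="Enhance a low-light image.")
    parser.add_argument("-i", "--input_image_path", required=True)
    # Without -o, output goes to the directory the script is run from
    parser.add_argument("-o", "--output_folder_path", default=os.getcwd())
    # Without -m, the MODEL_PATH constant is used
    parser.add_argument("-m", "--model_path", default=MODEL_PATH)
    return parser
```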

A typical use-case looks like this:

$ python inference/enhance_image.py -i data/test/low/0001.png -o inference/results -m model/weights/pretrained_mirnet.pt

To gauge how a model performs on a directory of images (typically, the test or validation subset of a dataset), the inference/visualize_model_predictions.py script can be used. It generates a grid of the original images (dark and light) and their enhanced versions produced by the model to visually evaluate performance, and computes the PSNR and Charbonnier loss on those pictures as well.
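Both metrics follow standard definitions: PSNR = 10 · log10(MAX² / MSE), and the Charbonnier loss is the mean of sqrt((prediction - target)² + ε²), a smooth variant of the L1 loss. The sketch below computes them on flat lists of pixel values, assuming values scaled to [0, 1] (MAX = 1) and ε = 1e-3; the script itself works on tensors and may use a different ε or averaging scheme.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_val ** 2 / mse)


def charbonnier(pred: Sequence[float], target: Sequence[float], eps: float = 1e-3) -> float:
    """Mean Charbonnier penalty sqrt(diff^2 + eps^2); eps is an assumed value."""
    return sum(math.sqrt((p - t) ** 2 + eps ** 2) for p, t in zip(pred, target)) / len(pred)
```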

To use the script, change the values of the three constants defined at the top of the file if needed (IMG_SIZE, NUM_FEATURES, MODEL_PATH - the default values should be appropriate for most cases), and run the script as:

$ python inference/visualize_model_predictions.py <path_to_image_folder>

The image folder should contain two subfolders, imgs and targets, like any split of the datasets. The script generates a grid of the original images and their enhanced versions, saves it as a png file in inference/results, and outputs the PSNR and Charbonnier loss on the dataset.
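A quick way to check that a folder has the expected layout before running the script is to pair the files of imgs and targets. The sketch assumes that a dark image and its ground truth share the same file name, which is an assumption rather than documented behaviour; paired_images is a hypothetical helper.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def paired_images(split_dir) -> list[tuple[Path, Path]]:
    """Return (dark image, ground truth) pairs matched by identical file names."""
    split_dir = Path(split_dir)
    dark = {p.name: p for p in (split_dir / "imgs").iterdir() if p.suffix.lower() in IMAGE_SUFFIXES}
    truth = {p.name: p for p in (split_dir / "targets").iterdir() if p.suffix.lower() in IMAGE_SUFFIXES}
    # Names present in only one of the two folders cannot be paired
    unpaired = sorted(dark.keys() ^ truth.keys())
    if unpaired:
        raise FileNotFoundError(f"unpaired files: {unpaired}")
    return [(dark[name], truth[name]) for name in sorted(dark)]
```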

For example, to visualize model performance on the test subset of the LoL dataset, proceed as follows:

$ python inference/visualize_model_predictions.py data/pretraining/test

-> mps device detected.

100%|████████████████████████████████████████████████████████████████| 1/1 [00:05<00:00, 5.31s/it]

***Performance on the dataset:***

Test loss: 0.10067714005708694 - Test PSNR: 19.464811325073242

Image produced by the script:

To run model training or inference on the datasets used for the experiments in this project, the datasets in the right format have to be generated, or prepared datasets need to be downloaded and placed in the data folder.

The pre-training dataset is the LoL dataset. To download and format it in the expected format, run the dedicated script with python dataset_generation/pretraining_generation.py. The script downloads the dataset from HuggingFace Datasets, and generates the pre-training dataset in the data/pretraining folder (created if it does not exist) with the dark images in the imgs subfolder, and the ground-truth pictures in targets.

To use a different dataset for pre-training, simply change the HuggingFace dataset reference in the following line of dataset_generation/pretraining_generation.py:

dataset = load_dataset("geekyrakshit/LoL-Dataset") # can be changed to e.g. "huggan/night2day"

If you would like to directly use the processed fine-tuning dataset, you can download it from the corresponding release, extract the archive and place the three train, val, and test folders it contains in the data/finetuning folder (to be created if it does not exist).

Otherwise, to generate a fine-tuning dataset in the correct format, the dataset_generation/finetuning_generation.py script can be used either to re-generate the fine-tuning dataset from the original images, or to create a fine-tuning dataset from your own photographs of any size.

To do so:

- Place your well-lit original images in a data/finetuning/original_images folder. Note that the images do not need to be of the same size or orientation; the generation script takes care of unifying them.

- Then, run the generation script with python dataset_generation/finetuning_generation.py, which will create the data/finetuning/[train&val&test] folders with the ground-truth images in a targets subfolder and the corresponding low-light images in an inputs subfolder. The images are split into the train, validation, and test sets with an 85/10/5 ratio.
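An 85/10/5 split can be made reproducible by shuffling with a fixed seed. The sketch below is one way to do it, not necessarily what finetuning_generation.py does: split_filenames and the seed are hypothetical, and integer arithmetic is used so that rounding leftovers go to the test set.

```python
import random


def split_filenames(names, seed=42, percentages=(85, 10, 5)):
    """Deterministically split file names into train/val/test lists."""
    shuffled = sorted(names)  # sort first so the result does not depend on input order
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = n * percentages[0] // 100
    n_val = n * percentages[1] // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder, about 5%
    return train, val, test
```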

The low-light images are generated by randomly reducing the brightness of the original images by 80 to 90%, then adding random Gaussian noise and color-shift noise to better emulate how a photographer's camera would capture the picture in low-light conditions. For both the ground-truth and darkened images, the smallest dimension is then resized to 400 pixels while preserving the aspect ratio, and a center crop of 704x400 or 400x704 (depending on the image orientation) is applied to unify the aspect ratios. This yields an aspect ratio of roughly 16:9 (704/400 = 1.76), a standard format offered by most cameras.
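The degradation and cropping steps can be sketched in plain Python. This is an approximation of the described procedure, not the script's implementation: "darkening by 80 to 90%" is read as keeping 10 to 20% of each pixel value, and noise_std and max_color_shift are hypothetical values. The crop helper returns negative offsets when the resized image is narrower than the crop (e.g. a 3:2 photo becomes 600x400); torchvision's CenterCrop zero-pads in that case, but how the project's script handles it is not documented here.

```python
import random


def darken_pixels(pixels, rng, noise_std=5.0, max_color_shift=8.0):
    """Simulate a low-light capture of a list of 8-bit (R, G, B) pixels."""
    # Reduce brightness by 80-90%, i.e. keep 10-20% of it
    keep = 1.0 - rng.uniform(0.80, 0.90)
    # One random offset per channel, shared by the whole image (color shift)
    shift = [rng.uniform(-max_color_shift, max_color_shift) for _ in range(3)]
    darkened = []
    for pixel in pixels:
        channels = []
        for channel, value in enumerate(pixel):
            # Scale, shift, add per-pixel Gaussian noise, then clip to [0, 255]
            noisy = value * keep + shift[channel] + rng.gauss(0.0, noise_std)
            channels.append(min(255, max(0, round(noisy))))
        darkened.append(tuple(channels))
    return darkened


def resize_and_center_crop(width, height, short_side=400, long_side=704):
    """Return the resized size and the (left, top, right, bottom) center-crop box."""
    scale = short_side / min(width, height)
    new_w, new_h = round(width * scale), round(height * scale)
    # Landscape images get a 704x400 crop, portrait images a 400x704 crop
    crop_w, crop_h = (long_side, short_side) if new_w >= new_h else (short_side, long_side)
    left = (new_w - crop_w) // 2
    top = (new_h - crop_h) // 2
    return (new_w, new_h), (left, top, left + crop_w, top + crop_h)
```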

The unit and integration tests are located in the tests folder, and they can be run with the pytest command from the root directory of the project. Currently, they test the different components of the model and the full model itself, the loss function and optimizer, the data pipeline (dataset, data loader, etc.), as well as the training and testing/validation procedures.

Note

All tests are run through GitHub Actions on every commit to the main branch, alongside linting, and their status can be observed here.

To add further tests, create a new file named test_*.py in the tests folder, where * describes what you want to test. Then, add your tests as test_* methods of a class named Test*.
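A minimal test file following these conventions might look like the sketch below. The file name and the psnr_from_mse stand-in are hypothetical; a real test would import the project code instead of defining it inline. By default, pytest collects Test* classes that have no __init__ method, and the test_* methods inside them.

```python
# tests/test_psnr_example.py (hypothetical file name)
import math


def psnr_from_mse(mse: float, max_val: float = 1.0) -> float:
    """Stand-in for project code that would normally be imported."""
    return math.inf if mse == 0 else 10 * math.log10(max_val ** 2 / mse)


class TestPsnrFromMse:
    def test_perfect_reconstruction_is_infinite(self):
        assert psnr_from_mse(0.0) == math.inf

    def test_known_value(self):
        # MSE of 0.01 on [0, 1] images corresponds to 20 dB
        assert math.isclose(psnr_from_mse(0.01), 20.0)
```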

To start the inference endpoint (an API implemented with Flask), run the following command from the root directory of the project:

$ python app/api.py

The inference endpoint should then be accessible at localhost:5000 and accept POST requests with images to enhance.

Two routes are available:

/enhance: takes a single image as input and returns the enhanced version as a png file.

Example usage with curl:

```bash
curl -X POST -F "image=@./img_102.png" http://localhost:5000/enhance --output ./enhanced_image.png
```

Example usage with Python requests:

```python
import requests

with open("image.jpg", "rb") as image_file:
    response = requests.post("http://localhost:5000/enhance", files={"image": image_file})

if response.status_code == 200:
    # The route returns the enhanced image as a png file
    with open("enhanced_image.png", "wb") as f:
        f.write(response.content)
```

/enhance_batch: takes multiple images as input and returns a zip file containing the enhanced versions of the images as png files.

Example usage with curl:

```bash
curl -X POST -F "images=@./image1.jpg" -F "images=@./image2.jpg" http://localhost:5000/batch_enhance -o batch_enhanced.zip
```

Example usage with Python requests:

```python
import requests

# Each file is sent as a separate "images" field of the multipart form
paths = ["image1.jpg", "image2.jpg"]
files = [("images", (path, open(path, "rb"), "image/jpeg")) for path in paths]
try:
    response = requests.post("http://localhost:5000/batch_enhance", files=files)
finally:
    for _, (_, handle, _) in files:
        handle.close()

if response.status_code == 200:
    with open("enhanced_images.zip", "wb") as f:
        f.write(response.content)
```
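Instead of saving the archive and unzipping it by hand, the response body can be unpacked directly with the standard zipfile module. The sketch assumes the archive contains the enhanced images as .png entries (as stated for this route); extract_enhanced_zip is a hypothetical helper, and the names of the entries inside the archive are not documented here.

```python
import io
import zipfile
from pathlib import Path


def extract_enhanced_zip(zip_bytes: bytes, output_dir) -> list[Path]:
    """Write the .png entries of a zip archive (given as bytes) into output_dir."""
    output_dir = Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    written = []
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            if not name.lower().endswith(".png"):
                continue  # skip directories and unexpected entries
            # Keep only the base name so nothing is written outside output_dir
            target = output_dir / Path(name).name
            target.write_bytes(archive.read(name))
            written.append(target)
    return written
```

Usage with the request above: extract_enhanced_zip(response.content, "enhanced_images").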

The same inference endpoint can be run without setting up the environment, using the Docker image defined by the Dockerfile in the root directory of the project. To do so, run the following commands from the root directory of the project:

docker build -t low-light-enhancer .

docker run -p 5000:5000 low-light-enhancer

The image automatically downloads and sets up the model weights from the GitHub release. If you would like to use the weights of a particular release, you can specify the weight URL with the --build-arg MODEL_URL=... argument when building the image.

The inference endpoint should then be accessible at <address of the machine hosting the container, localhost if it is the local machine>:5000 and allow you to send POST requests with images to enhance as described in the previous section.

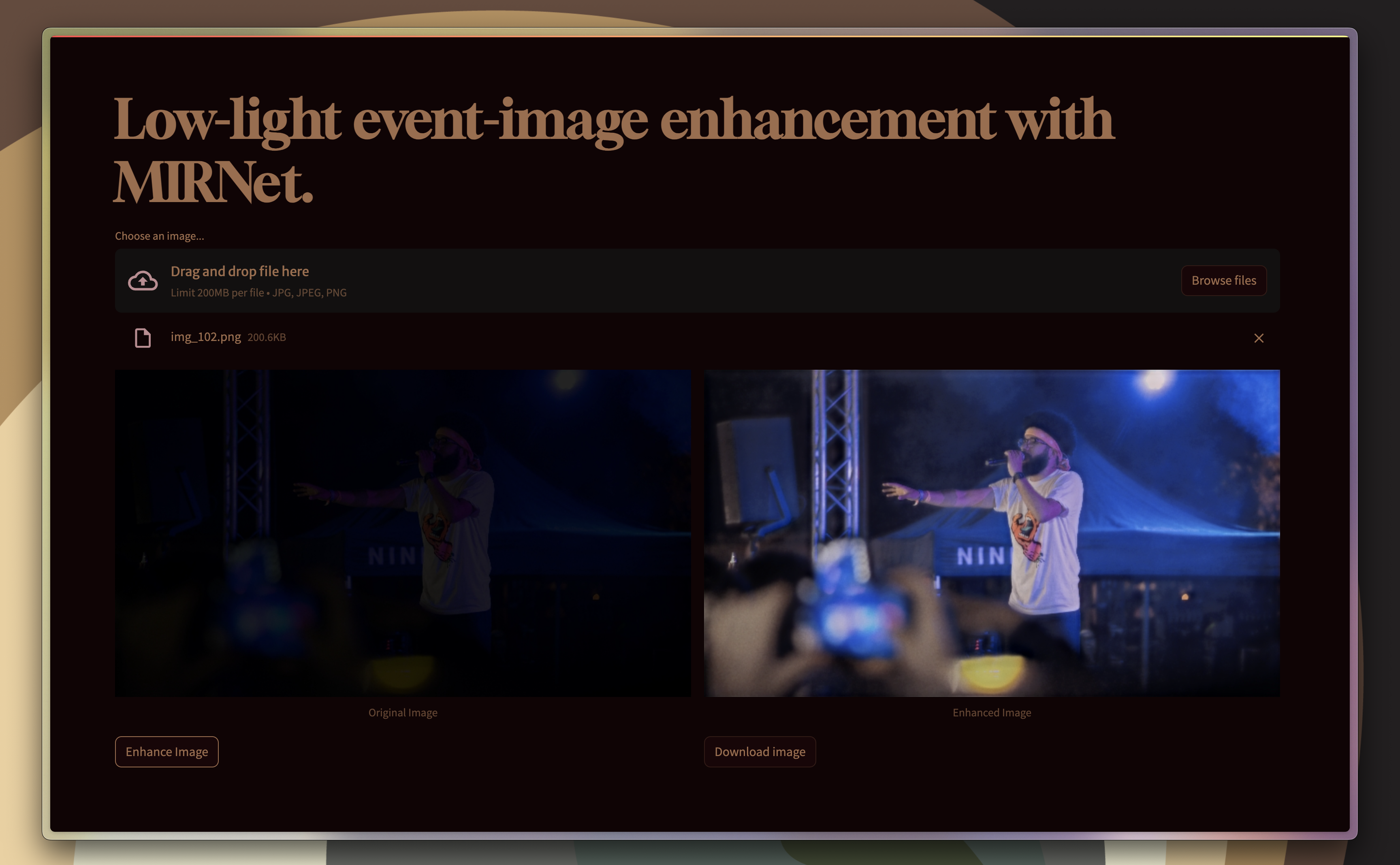

The application has been made accessible online as a HuggingFace Space.

Alternatively, to start the inference web application locally, run the following command from the root directory of the project:

$ streamlit run app/app.py

The web application should then be accessible at localhost:8501 in your browser and allow you to upload images of any size and format, enhance them and download the enhanced version: