Official Repository for Google Summer of Code 2022.

The main purpose of this project is to explore the use of Vision Transformers in the domain of particle physics.

- Installation

- Abstract

- Introduction

- Data Pre-processing

- Training

- Results

- Notebooks and Docs

- References

- Acknowledgement

- Contributing and Reporting

!git clone https://github.com/dc250601/GSOC.git

%mv GSOC Transformers

%cd Transformers

If the reader knows what they are dealing with, I would advise proceeding further.

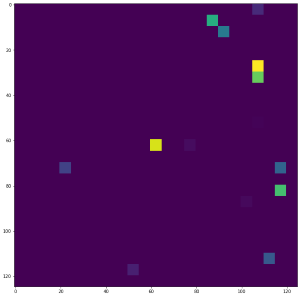

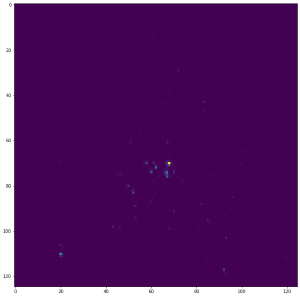

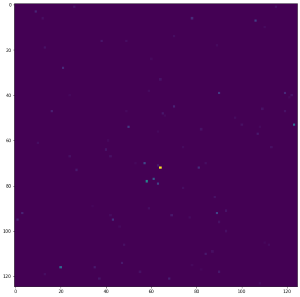

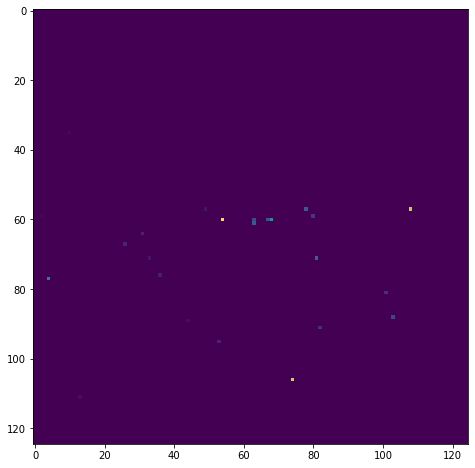

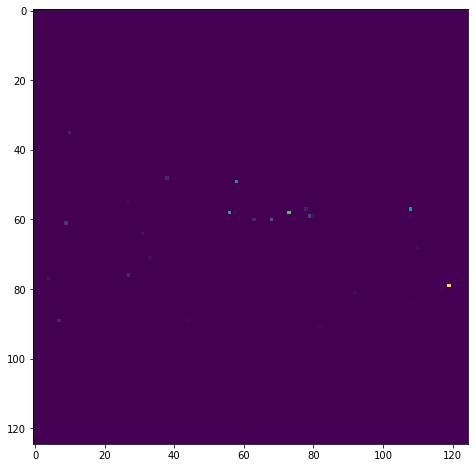

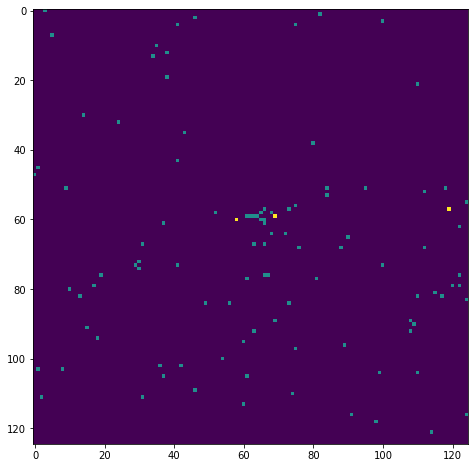

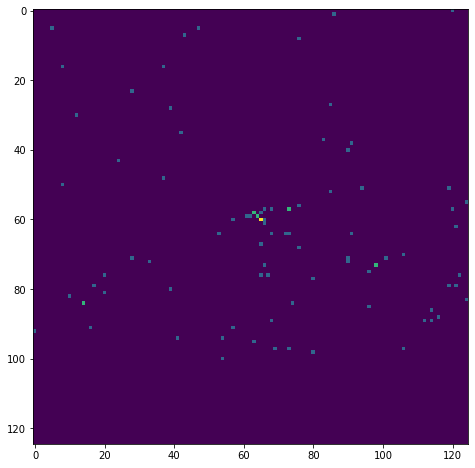

In this project, we work with multi-detector images corresponding to actual maps of low-level energy deposits in the detector for various types of particle collision events in CMS (CERN). In layman's terms, we use the data generated by the detectors in the CMS experiment to learn more about the particles and analyse them. The data used in our case are Monte Carlo simulated. We construct images (jet images) from this data and use Machine Learning to analyse (in our case, classify) them. Different types of detectors or sensors produce different kinds of data or, in our case, jet images.

This is a sample datapoint in the Quark-Gluon dataset

| HCAL | ECAL | TRACKS |

|---|---|---|

|

|

|

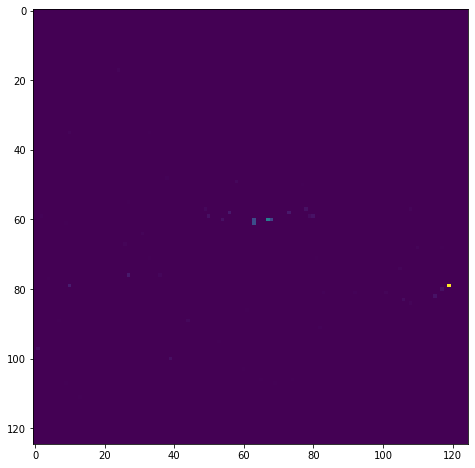

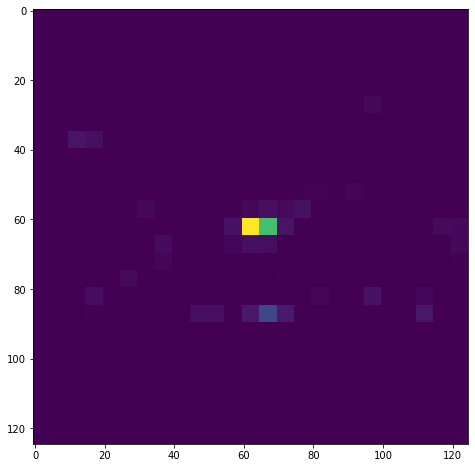

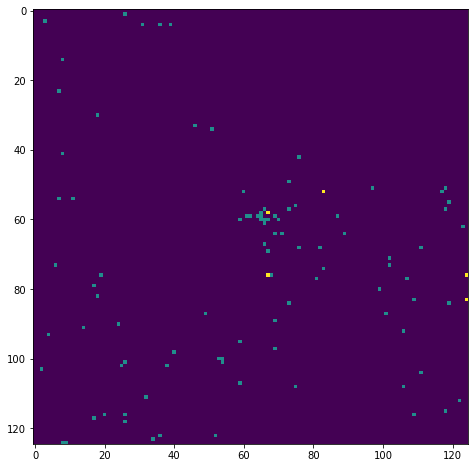

This is a sample datapoint in the Top-Quark dataset

|  |  |  |  |
|---|---|---|---|
|  |  |  |  |

Previous attempts to classify these images used ResNet-based architectures. Here we use Transformers, namely Vision Transformers (ViTs) and several state-of-the-art Transformer-based architectures, with the aim of achieving a higher score than those earlier attempts. To test whether ViTs do perform better, we evaluated the models on two different datasets: the Quark-Gluon dataset and the Boosted Top-Quark dataset. Because the two datasets differ considerably, different approaches were used to train and deploy the models for each. The exact process is described below.

ViTs are very sensitive to data pre-processing. Even details such as the image interpolation technique, if handled poorly, can adversely affect the performance of Transformer-based models. In our case, the data is sourced directly from CMS Open Data, and the pixel values from a single detector (calorimeter) can be arbitrarily large, so proper normalisation is needed. We apply the following steps to ensure that the samples are properly normalised and free from outliers:

- Zero suppression for any value under 10^-3.

- Channel-wise Z-score normalisation across batches of size 4096.

- Channel-wise max value clipping, with the clip value set to 500 times the standard deviation of the pixel values.

- Sample-wise min-max scaling.

Although the pre-processing is the same for both datasets, the input pipelines are vastly different because of the training environments and computational challenges.
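The four pre-processing steps listed above can be sketched in NumPy as follows. This is a minimal illustration, not the code used in the repository: the function name, the `(N, C, H, W)` array layout, the order in which the steps are chained, and the choice to compute the clipping threshold from the already normalised values are all assumptions made for the example.

```python
import numpy as np


def preprocess_batch(batch, zero_threshold=1e-3, clip_sigma=500.0, eps=1e-8):
    """Apply the four steps to one batch of shape (N, C, H, W).

    Hypothetical helper: names, defaults and step order are assumptions.
    """
    x = np.asarray(batch, dtype=np.float64).copy()

    # 1. Zero suppression: values below the threshold are set to zero.
    x[x < zero_threshold] = 0.0

    # 2. Channel-wise z-score, with statistics taken over the whole batch.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    std = x.std(axis=(0, 2, 3), keepdims=True)
    x = (x - mean) / (std + eps)

    # 3. Channel-wise max clipping at clip_sigma standard deviations
    #    (assumption: the deviation is that of the normalised values).
    clip_value = clip_sigma * x.std(axis=(0, 2, 3), keepdims=True)
    x = np.minimum(x, clip_value)

    # 4. Sample-wise min-max scaling into [0, 1].
    lo = x.min(axis=(1, 2, 3), keepdims=True)
    hi = x.max(axis=(1, 2, 3), keepdims=True)
    return (x - lo) / (hi - lo + eps)
```

In the pipeline described here, such a function would be called once per batch of 4096 images; the small `eps` only guards against division by zero for empty channels or constant samples.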

The following tutorials will help the reader to generate the processed data for training.

The Quark-Gluon models are trained on a 2080Ti using PyTorch, and the input data is in the form of .png files. For the Top-Quark dataset we used TPU v3s for training; those models are written in TensorFlow and the input data is in the form of TFRecords. The following diagrams describe the input pipelines for both datasets.

flowchart TB;

subgraph Quark-Gluon

direction TB

A0[Read Raw Parquet Files using PyArrow] -->B0[Pre-process the images];

B0 -->C0[Save the images in .png form];

C0 -->D0[Read them using native Pytorch Dataloaders]

D0 -->E0[Feed the data to a single GPU]

end

subgraph Top-Quark

direction TB

A1[Read Raw Parquet Files using PyArrow] -->B1[Pre-process the images];

B1 -->C1[Combine the Images into chunks of data];

C1 -->D1[Save them into TFRecord shards]

D1 -->E1[Read using the tf.data pipeline]

E1 -->F1[Feed the data to multiple TPU cores]

end
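The "combine the images into chunks" and "save them into TFRecord shards" steps of the Top-Quark pipeline can be pictured with a small framework-free sketch. It only shows how samples might be grouped and paired with shard file names; the helper names, the shard size and the file-name pattern are hypothetical, and the actual TFRecord serialization is left out.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Tuple, TypeVar

T = TypeVar("T")


def chunked(samples: Iterable[T], shard_size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most shard_size samples."""
    if shard_size <= 0:
        raise ValueError("shard_size must be positive")
    iterator = iter(samples)
    while True:
        shard = list(islice(iterator, shard_size))
        if not shard:
            return
        yield shard


def plan_shards(
    samples: Iterable[T], shard_size: int, prefix: str = "top_quark"
) -> Iterator[Tuple[str, List[T]]]:
    """Pair each chunk with a (hypothetical) shard file name."""
    for index, shard in enumerate(chunked(samples, shard_size)):
        yield f"{prefix}-{index:05d}.tfrecord", shard
```

Because `chunked` consumes its input lazily, the pre-processed images never have to be held in memory all at once; each yielded chunk would then be written to its own shard.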

We then trained a number of different models to get a basic understanding of the problem, including:

- Vision Transformers

- Swin Transformers

- CoAtNets

- MaxViT

- DaViTs

- EfficientNets

- ResNets (ResNet-15)

- Hybrid EffSwin (custom architecture)

Of the above models, only a few stood out:

- Swin Transformers

- CoAtNets

- Hybrid EffSwin

These three models performed the best. Although Swin Transformer performed worse than CoAtNet and the hybrid architecture, it is kept under consideration because Hybrid EffSwin is built from it.

The Hybrid EffSwin model is built by combining Swin Transformers with EfficientNet-B3. The following diagram illustrates the process.

flowchart TB

subgraph Stage1

direction TB

A[Initialize a Swin Tiny Architecture]--Train for 50 epochs--> B[Trained Swin Transformer];

end

subgraph Stage2

direction TB

C[Initialise an EfficientNet-B3]--> D[Take the first two stages of the model]

B---> E[Take the last two stages of the trained Swin Transformer]

D--> F[Concatenate]

E--> F[Concatenate]

F--Train for 30-50 epochs--> G[Trained Hybrid EffSwin]

end
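The idea in the diagram, early convolutional stages feeding later attention stages, can be sketched in PyTorch with toy stand-in modules. This is not the repository's Hybrid EffSwin: the small convolution stack stands in for the first two EfficientNet-B3 stages, a plain `nn.TransformerEncoder` stands in for the last two Swin stages, and the 1x1 adapter convolution, class name and layer sizes are assumptions made so that the example is self-contained.

```python
import torch
from torch import nn


class HybridSketch(nn.Module):
    """Toy hybrid: convolutional stages followed by attention stages."""

    def __init__(self, conv_stages, attention_stages, conv_channels,
                 embed_dim, num_classes=2):
        super().__init__()
        self.conv_stages = conv_stages
        # Assumption: a 1x1 convolution matches the channel count of the
        # convolutional features to the attention embedding size.
        self.adapter = nn.Conv2d(conv_channels, embed_dim, kernel_size=1)
        self.attention_stages = attention_stages
        self.head = nn.Linear(embed_dim, num_classes)

    def forward(self, images):
        features = self.adapter(self.conv_stages(images))  # (N, D, H, W)
        tokens = features.flatten(2).transpose(1, 2)        # (N, H*W, D)
        tokens = self.attention_stages(tokens)
        return self.head(tokens.mean(dim=1))                # (N, classes)


def build_toy_hybrid(in_channels=3, embed_dim=64):
    # Stand-in for the first two stages of a convolutional backbone.
    conv_stages = nn.Sequential(
        nn.Conv2d(in_channels, 16, kernel_size=3, stride=2, padding=1),
        nn.SiLU(),
        nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1),
        nn.SiLU(),
    )
    # Stand-in for the last stages of a previously trained transformer.
    layer = nn.TransformerEncoderLayer(
        d_model=embed_dim, nhead=4, dim_feedforward=128, batch_first=True
    )
    attention_stages = nn.TransformerEncoder(layer, num_layers=2)
    return HybridSketch(conv_stages, attention_stages,
                        conv_channels=32, embed_dim=embed_dim)
```

In the two-stage recipe above, the attention stages would be taken from the Swin model trained in Stage 1 rather than freshly initialised, and the combined network would then be trained for the further 30-50 epochs.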

The following tutorials will help readers who want to train the models themselves:

- Tutorial to train CoAt-Net(Torch-GPU)

- Tutorial to train CoAt-Net(Tensorflow-TPU)

- Tutorial to train Hybrid EffSwin(Torch-GPU)

- Tutorial to train Hybrid EffSwin(Tensorflow-TPU)

We get the following results. All scores are ROC-AUC scores, expressed as percentages.

| Model Name | Quark Gluon | Top Quark |
|---|---|---|
| Swin-Tiny | 80.83 | 98.58 |
| CoAt-Net-0 | 81.46 | 98.72 |
| Hybrid-EffSwin | 81.44 | 98.77 |
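For readers unfamiliar with the metric, ROC-AUC is the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below computes it from ranks in plain Python; it is an illustration of the metric, not the evaluation code used to produce the table, and the function name is hypothetical.

```python
def roc_auc(labels, scores):
    """ROC-AUC for binary labels (0 or 1) via the rank-sum formulation."""
    pairs = sorted(zip(scores, labels))
    n_pos = sum(1 for _, label in pairs if label == 1)
    n_neg = len(pairs) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")

    rank_sum_pos = 0.0
    i = 0
    while i < len(pairs):
        # Find the group of tied scores starting at position i.
        j = i
        while j < len(pairs) and pairs[j][0] == pairs[i][0]:
            j += 1
        # Ranks i+1 .. j share their average rank.
        average_rank = (i + 1 + j) / 2.0
        positives_in_group = sum(1 for k in range(i, j) if pairs[k][1] == 1)
        rank_sum_pos += average_rank * positives_in_group
        i = j

    return (rank_sum_pos - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)


# Reported as a percentage, as in the table above.
print(round(100 * roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]), 2))  # 75.0
```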

All the model weights are saved and stored in the following Google Drive

All the relevant notebooks are listed below

- Tutorial for Dataset creation Quark-Gluon(.png type dataset)

- Tutorial for Dataset creation Boosted Top-Quark(TFRecord dataset)

- Tutorial to train CoAt-Net(Torch-GPU)

- Tutorial to train CoAt-Net(Tensorflow-TPU)

- Tutorial to train Hybrid EffSwin(Torch-GPU)

- Tutorial to train Hybrid EffSwin(Tensorflow-TPU)

- End-to-End Jet Classification of Quarks and Gluons with the CMS Open Data

- End-to-End Jet Classification of Boosted Top Quarks with the CMS Open Data

- An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

- Swin Transformer: Hierarchical Vision Transformer using Shifted Windows

- CoAtNet: Marrying Convolution and Attention for All Data Sizes

- MaxViT: Multi-Axis Vision Transformer

- DaViT: Dual Attention Vision Transformers

- EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks

- A big thanks to Dr Sergei Gleyzer, for his guidance and support during the entire project period.

- Special thanks to the entire ML4Sci community for their combined support and the meaningful discussions.

- A big thanks to the TRC program. Research was supported with Cloud TPUs from Google's TPU Research Cloud (TRC).

This package was written with a research-oriented mindset. Further interest in this field is much appreciated. To contribute to this repository, feel free to open a pull request. If you find any bugs, please raise an issue.